AWS News Blog

Celebrate 15 Years of Amazon S3 with ‘Pi Week’ Livestream Events

I wrote the blog post that announced Amazon Simple Storage Service (Amazon S3) fifteen years ago today. In that post, I made it clear that the service was accessed via APIs and that it was targeted at developers, outlined a few key features, and shared pricing information. Developers found that post, started to write code to store and retrieve objects using the S3 API, and the rest is history!

Today, I am happy to announce that S3 now stores over 100 trillion (10^14, or 100,000,000,000,000) objects, and regularly peaks at tens of millions of requests per second. That’s almost 13,000 objects for each person in the world, or 50 objects for every one of the roughly two trillion galaxies (according to this 2021 estimate) in the Universe.
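If you would like to check that arithmetic yourself, here is a quick sketch. The world population figure of about 7.8 billion is my assumption for early 2021; the object and galaxy counts come from the paragraph above.

```python
# Figures from the paragraph above.
OBJECTS = 10**14            # 100 trillion objects
GALAXIES = 2 * 10**12       # roughly two trillion galaxies

# Assumption: world population of about 7.8 billion (early 2021).
WORLD_POPULATION = 7_800_000_000

objects_per_person = OBJECTS / WORLD_POPULATION
objects_per_galaxy = OBJECTS // GALAXIES

print(f"{objects_per_person:,.0f} objects per person")
print(f"{objects_per_galaxy} objects per galaxy")
```

With that population assumption the per-person figure lands a little under 13,000, which matches "almost 13,000."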

A Simple Start

Looking back on that launch, we made a lot of decisions that have proven to be correct, and that made it easy for developers to understand and get started with S3 in minutes:

We started with a simple conceptual model: Uniquely named buckets that could hold any number of objects, each identified by a string key. The initial API was equally simple: create a bucket, list all buckets, put an object, get an object, and put an access control list. This simplicity helped us to avoid any one-way doors and left a lot of room for us to evolve S3 in response to customer feedback. All of those decisions remain valid and code written on launch day will still work just fine today.
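To make that conceptual model concrete, here is a minimal in-memory sketch of the five launch operations. It is a toy for illustration only, not the S3 API or an AWS SDK: the class name `ToyObjectStore`, the method names, and the way ACLs are stored are all hypothetical.

```python
class ToyObjectStore:
    """Toy in-memory model of buckets and keyed objects (not the S3 API)."""

    def __init__(self):
        self._buckets = {}  # bucket name -> {object key: bytes}
        self._acls = {}     # (bucket name, object key or None) -> ACL value

    def create_bucket(self, name):
        # Bucket names are unique across the whole store.
        if name in self._buckets:
            raise ValueError(f"bucket name already taken: {name}")
        self._buckets[name] = {}

    def list_buckets(self):
        return sorted(self._buckets)

    def put_object(self, bucket, key, data):
        # Each object is identified by a string key within its bucket.
        self._buckets[bucket][key] = bytes(data)

    def get_object(self, bucket, key):
        return self._buckets[bucket][key]

    def put_acl(self, bucket, acl, key=None):
        # Attach an ACL to a bucket (key=None) or to one object in it.
        if bucket not in self._buckets:
            raise KeyError(bucket)
        if key is not None and key not in self._buckets[bucket]:
            raise KeyError(key)
        self._acls[(bucket, key)] = acl


store = ToyObjectStore()
store.create_bucket("example-bucket")
store.put_object("example-bucket", "notes/launch.txt", b"hello, objects")
store.put_acl("example-bucket", "private", key="notes/launch.txt")

print(store.list_buckets())
print(store.get_object("example-bucket", "notes/launch.txt"))
```

Notice how little surface area there is: two dictionaries cover the whole model, which is a big part of why it was so easy to get started.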

After considering many different pricing models (covered at length in Working Backwards), we chose a cost-following model. As Colin and Bill said:

With cost following, whatever the developer did with S3, they would use it in a way that would meet their requirements, and they would strive to minimize their cost and, therefore, our cost too. There would be no gaming of the system, and we wouldn’t have to estimate how the mythical average customer would use S3 to set our prices.

In accord with the cost-following model, we have reduced the price per GB-month of S3 many times, and have also introduced storage classes that allow you to pay even less to store data that you reference only infrequently (S3 Glacier and S3 Glacier Deep Archive), or for data that you can re-create if necessary (S3 One Zone-Infrequent Access). We also added S3 Intelligent-Tiering to monitor access patterns and to move objects to an appropriate storage tier automatically.

Giving developers the ability to easily store as much data as they wanted to, with a simple pricing model, was a really big deal. As New York Times writer John Markoff said in Software Out There shortly after S3 was launched:

Amazon recently introduced an online storage service called S3, which offers data storage for a monthly fee of 15 cents a gigabyte. That frees a programmer building a new application or service on the Internet from having to create a potentially costly data storage system.

Durability was designed in from the beginning. Back in 2010 I explained what we mean when we say that S3 is designed to provide “11 9’s” (99.999999999%) of durability. We knew from the start that hardware, software, and network failures happen all of the time, and built S3 to handle them transparently and gracefully. For example, we automatically make use of multiple storage arrays, racks, cells, and Availability Zones (77 and counting).
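To get a feel for what eleven 9’s means in practice, here is a small worked calculation. It assumes the figure is read as the probability that a single object survives a given year, and the count of 10,000 stored objects is an illustrative number, not a measurement.

```python
from fractions import Fraction

# 99.999999999% durability, kept as an exact fraction to avoid float rounding.
DURABILITY = Fraction(99_999_999_999, 100_000_000_000)

# Assumption: an illustrative collection of 10,000 stored objects.
STORED_OBJECTS = 10_000

annual_loss_probability = 1 - DURABILITY  # per object, per year
expected_losses_per_year = STORED_OBJECTS * annual_loss_probability
years_per_expected_loss = 1 / expected_losses_per_year

print(f"Expected objects lost per year: {float(expected_losses_per_year):.0e}")
print(f"Average years per single lost object: {int(years_per_expected_loss):,}")
```

Under those assumptions, you would expect to lose a single object about once every 10 million years on average.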

We had an equally strong commitment to security, and have always considered it to be “job zero.” As you can see from the list below, we continue to refine and improve the array of security options and features that you can use to protect the data that you store in S3.

Days after we launched S3, tools, applications, and sites began to pop up. As we had hoped and expected, many of these were wholly unanticipated and it was (and still is) always a delight to see what developers come up with.

Today, 15 years later, S3 continues to empower and inspire developers, and lets them focus on innovation!

Through the Years

I’m often asked to choose a favorite service, launch, or feature. Because I like to be inclusive rather than exclusive, this is always difficult for me! With that said, here are some of what I believe are the most significant additions to S3:

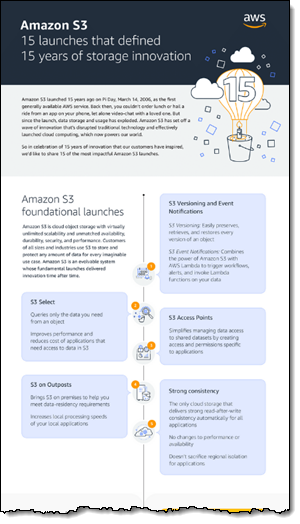

Fundamentals – Versioning, Event Notifications, Select, Access Points, S3 on Outposts, and Strong Consistency.

Storage Classes – S3 Glacier, S3 Standard-Infrequent Access, S3 Intelligent-Tiering, and S3 Glacier Deep Archive.

Storage Management – Cross-Region Replication, Same-Region Replication, Replication Time Control, Replication to Multiple Destinations, Lifecycle Policies, Object Tagging, Storage Class Analysis, Inventory, CloudWatch Metrics, and Batch Operations.

Security – Block Public Access, Access Analyzer, Macie, GuardDuty, Object Ownership, and PrivateLink.

Data Movement – Import/Export, Snowmobile, Snowball, and Snowcone.

Be sure to check out the S3 15 Launches Infographic for a more visually appealing, chronological take on the last 15 years.

S3 Today

With more than 100 trillion objects in S3 and an almost unimaginably broad set of use cases, we continue to get requests for more features. As always, we listen with care and do our best to meet the needs of our customers.

We also continue to profile, review, and improve every part of the S3 implementation with an eye toward improving performance, scale, and reliability. This means that S3 (and every AWS service, for that matter) gets better “beneath your feet” over time, with no API changes and no downtime for upgrades. As a simple example, we recently established a way to dramatically reduce latency for (believe it or not) 0.01% of the PUT requests made to S3. While this might seem like a tiny win, it was actually a much bigger one. First, it avoided a situation where customer requests would time out and retry. Second, it provided our developers with some insights that they may be able to use to further reduce latency in this and possibly other situations.

Tune in to ‘Pi Week’ Virtual Events and Learn All About S3

My colleagues have been cooking up a lot of interesting content to help you learn even more about S3 during Pi Week. This is a free, live, virtual 4-day event that gives you the opportunity to hear AWS leaders and experts talk about the history of AWS and the key decisions that were made as we built and evolved S3. Other sessions will show you how to use S3 to control costs and continuously optimize your spend while you build modern, scalable applications.

Werner Vogels has even interviewed some of the senior architects and leaders of S3 including Mai-Lan Tomsen Bukovec, VP, Block and Object Storage; Bill Vass, VP, Storage, Automation, and Management; and Eric Brandwine, VP, Security.

Livestreams will take place each day from 9 a.m. to 1 p.m. PDT (12 p.m. to 4 p.m. EDT). I’ll add the links daily as the videos are published. Here are each day’s themes:

- Monday, March 15: Amazon S3 origins.

- Tuesday, March 16: Building data lakes and enabling data movement.

- Wednesday, March 17: Security is job zero – Amazon S3 security framework and best practices.

- Thursday, March 18: Amazon S3 and the foundations of a serverless infrastructure.

Get the full Pi Week agenda and tune in here!

— Jeff;