Category: Amazon RDS

AWS Price Reduction #42 – EC2, S3, RDS, ElastiCache, and Elastic MapReduce

It is always fun to write about price reductions. I enjoy knowing that our customers will find AWS to be an even better value as we work on their behalf to make it more and more cost-effective over time. If you’ve been reading this blog for a while, you know that we reduce prices on our services from time to time, and today’s announcement marks the 42nd price reduction since 2008.

We’re more than happy to continue this tradition with our latest price reduction.

Effective April 1, 2014, we are reducing prices for Amazon EC2, Amazon S3, the Amazon Relational Database Service, Amazon ElastiCache, and Elastic MapReduce.

Amazon EC2 Price Reductions

We are reducing prices for On-Demand instances as shown below. Note that these changes will automatically be applied to your AWS bill with no additional action required on your part.

| Instance Type | Linux / Unix Price Reduction | Microsoft Windows Price Reduction |
| --- | --- | --- |
| M1, M2, C1 | 10-40% | 7-35% |
| C3 | 30% | 19% |
| M3 | 38% | 24-27% |

We are also reducing the prices of Reserved Instances for all new purchases. With today’s announcement, you can save up to 45% on a 1 year RI and 60% on a 3 year RI relative to the On-Demand price. Here are the details:

| Instance Type | Linux / Unix Price Reduction (1 Year) | Linux / Unix Price Reduction (3 Year) | Microsoft Windows Price Reduction (1 Year) | Microsoft Windows Price Reduction (3 Year) |
| --- | --- | --- | --- | --- |
| M1, M2, C1 | 10%-40% | 10%-40% | Up to 23% | Up to 20% |
| C3 | 30% | 30% | Up to 16% | Up to 13% |
| M3 | 30% | 30% | Up to 18% | Up to 15% |

Also keep in mind that as you scale your footprint of EC2 Reserved Instances, you will benefit from the Reserved Instance volume discount tiers, increasing your overall discount over On-Demand by up to 68%.

Consult the EC2 Price Reduction page for more information.

Amazon S3 Price Reductions

We are reducing prices for Standard and Reduced Redundancy Storage by an average of 51%. The price reductions in the individual S3 pricing tiers range from 36% to 65%, as follows:

| Tier | New S3 Price / GB / Month | Price Reduction |
| --- | --- | --- |
| 0-1 TB | $0.0300 | 65% |
| 1-50 TB | $0.0295 | 61% |
| 50-500 TB | $0.0290 | 52% |
| 500-1000 TB | $0.0285 | 48% |
| 1000-5000 TB | $0.0280 | 45% |
| 5000 TB or More | $0.0275 | 36% |

These prices are for the US Standard Region; consult the S3 Price Reduction page for more information on pricing in the other AWS Regions.
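If you would like to estimate a monthly storage bill from the tier table above, here is a minimal Python sketch. It assumes that the tiers are graduated (each slice of storage is billed at its own tier’s rate) and that 1 TB equals 1024 GB; both are assumptions on my part rather than statements from the pricing page, and the function name `s3_monthly_cost` is purely illustrative.

```python
# Per-GB monthly prices from the S3 tier table above (US Standard Region).
# Assumption: 1 TB = 1024 GB; check the pricing page for the exact convention.
GB_PER_TB = 1024

# (upper bound of the tier in TB, price per GB per month); None means no upper bound.
S3_TIERS = [
    (1, 0.0300),
    (50, 0.0295),
    (500, 0.0290),
    (1000, 0.0285),
    (5000, 0.0280),
    (None, 0.0275),
]


def s3_monthly_cost(stored_gb: float) -> float:
    """Return the monthly storage cost in USD, billing each tier's slice at its own rate."""
    cost = 0.0
    lower_gb = 0.0
    for upper_tb, price_per_gb in S3_TIERS:
        if stored_gb <= lower_gb:
            break  # nothing is stored in this tier or any higher one
        upper_gb = float("inf") if upper_tb is None else upper_tb * GB_PER_TB
        slice_gb = min(stored_gb, upper_gb) - lower_gb
        cost += slice_gb * price_per_gb
        lower_gb = upper_gb
    return cost


if __name__ == "__main__":
    for tb in (1, 2, 100):
        print(f"{tb} TB -> ${s3_monthly_cost(tb * GB_PER_TB):,.2f} per month")
```

Request and data transfer charges are not included; the sketch covers storage only.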

Amazon RDS Price Reductions

We are reducing prices for Amazon RDS DB Instances by an average of 28%. There’s more information on the RDS Price Reduction page, including pricing for Reserved Instances and Multi-AZ deployments of Amazon RDS.

Amazon ElastiCache Price Reductions

We are reducing prices for Amazon ElastiCache cache nodes by an average of 34%. Check out the ElastiCache Price Reduction page for more information.

Amazon Elastic MapReduce Price Reductions

We are reducing prices for Elastic MapReduce by 27% to 61%. Note that this is in addition to the EC2 price reductions described above. Here are the details:

| Instance Type | EMR Price Before Change | New EMR Price | Reduction |
| --- | --- | --- | --- |
| m1.small | $0.015 | $0.011 | 27% |
| m1.medium | $0.03 | $0.022 | 27% |
| m1.large | $0.06 | $0.044 | 27% |
| m1.xlarge | $0.12 | $0.088 | 27% |
| cc2.8xlarge | $0.50 | $0.270 | 46% |
| cg1.4xlarge | $0.42 | $0.270 | 36% |
| m2.xlarge | $0.09 | $0.062 | 32% |
| m2.2xlarge | $0.21 | $0.123 | 41% |
| m2.4xlarge | $0.42 | $0.246 | 41% |
| hs1.8xlarge | $0.69 | $0.270 | 61% |
| hi1.4xlarge | $0.47 | $0.270 | 43% |

With this price reduction, you can now run a large Hadoop cluster using the hs1.8xlarge instance for less than $1000 per Terabyte per year (this includes both the EC2 and the Elastic MapReduce costs).

Consult the Elastic MapReduce Price Reduction page for more information.
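The Reduction column in the EMR table is the usual percentage change between the old and new price. Here is a small Python sketch of that arithmetic using three rows copied from the table; the helper name `reduction_percent` is illustrative only. Because the published prices are rounded, a recomputed percentage can differ from the listed one by a point for some rows.

```python
# (price before change, new price) in USD, copied from the EMR table above.
EMR_PRICES = {
    "m1.small": (0.015, 0.011),
    "cc2.8xlarge": (0.50, 0.270),
    "hs1.8xlarge": (0.69, 0.270),
}


def reduction_percent(old_price: float, new_price: float) -> float:
    """Return the percentage by which new_price is lower than old_price."""
    return (old_price - new_price) / old_price * 100


if __name__ == "__main__":
    for instance_type, (old, new) in EMR_PRICES.items():
        print(f"{instance_type}: {reduction_percent(old, new):.0f}%")
```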

We’ve often talked about the benefits that AWS’s scale and focus create for our customers. Our ability to lower prices again now is an example of this principle at work.

It might be useful for you to remember that an added advantage of using AWS services such as Amazon S3 and Amazon EC2 over using your own on-premises solution is that with AWS, the price reductions that we regularly roll out apply not only to any new storage that you might add but also to the existing data that you have already stored in AWS. With no action on your part, your cost to store existing data goes down over time.

Once again, all of these price reductions go into effect on April 1, 2014 and will be applied automatically.

— Jeff;

Amazon RDS – Support for Second Generation Standard Instances

Amazon RDS makes it easy for you to set up, scale, and run a relational database. Today we are making RDS even better with the introduction of the second generation (M3) of the Standard DB instance family. This new generation supports the db.m3.medium, db.m3.large, db.m3.xlarge, and db.m3.2xlarge instance types. These instances have a similar CPU to memory ratio as the first generation Standard (M1) instances, but offer 50% more compute capability per core.

Better Price/Performance

Prices for the M3 instances are about 6% lower than those for the similarly named M1 instances. As a result, you get significantly higher and more consistent compute power at a lower price when you use these instances.

The db.m3.xlarge and the db.m3.2xlarge instances offer dedicated capacity and are optimized for the use of Provisioned IOPS storage. It is possible to realize up to 12,500 IOPS for MySQL and 25,000 IOPS for Oracle and PostgreSQL on a workload consisting of 50% reads and 50% writes, when running on a db.m3.2xlarge instance. For more information, refer to the Working With Provisioned IOPS section of the RDS User Guide.

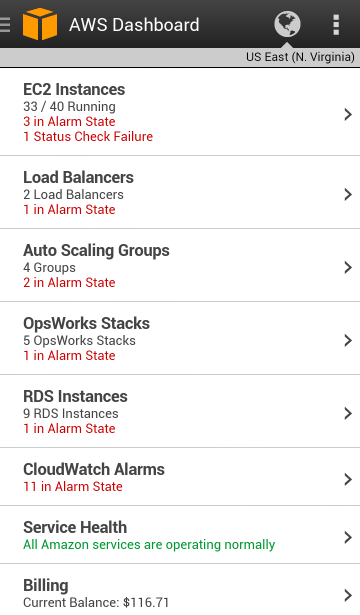

Easy Upgrade

You can upgrade your existing RDS DB instances by simply modifying the instance type:

You can select Apply Immediately for nearly instant gratification, or you can leave it unchecked to wait for the next maintenance window. This operation will briefly impact availability. For a Multi-AZ instance, this impact will typically last less than two minutes. This is the amount of time that it takes for a failover from primary to secondary to complete.
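If you prefer to script the change rather than use the console, the same modification maps onto the RDS ModifyDBInstance API. The Python sketch below only builds the request parameters (`DBInstanceIdentifier`, `DBInstanceClass`, and `ApplyImmediately` are the API’s parameter names); the instance name `mydb` and the helper `build_modify_request` are hypothetical, and actually sending the request requires an AWS SDK and credentials, which are not shown in runnable form here.

```python
def build_modify_request(instance_id: str, new_class: str,
                         apply_immediately: bool = False) -> dict:
    """Build keyword arguments for an RDS ModifyDBInstance call.

    apply_immediately=False defers the change to the next maintenance window.
    """
    if not new_class.startswith("db."):
        raise ValueError(
            f"expected a DB instance class such as 'db.m3.large', got {new_class!r}"
        )
    return {
        "DBInstanceIdentifier": instance_id,
        "DBInstanceClass": new_class,
        "ApplyImmediately": apply_immediately,
    }


if __name__ == "__main__":
    request = build_modify_request("mydb", "db.m3.large", apply_immediately=True)
    print(request)
    # With the boto3 SDK installed and credentials configured (an assumption,
    # not part of this sketch), the request could be sent as:
    #   import boto3
    #   boto3.client("rds").modify_db_instance(**request)
```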

The new instances are available for all database engines in all AWS regions, with AWS GovCloud (US) support coming in the future.

— Jeff;

Amazon RDS for Oracle Database – New Database Version & Time Zone Option

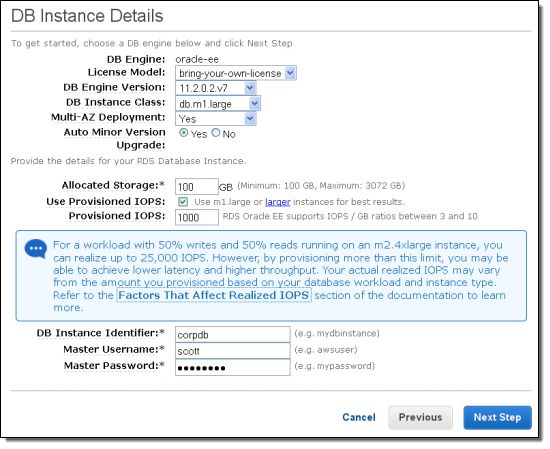

Amazon RDS for Oracle Database makes it easy for you to launch, scale, and manage an Oracle database in the cloud. You can use the AWS Management Console to create a DB instance with a couple of clicks:

We launched support for version 11.2.0.3 of Oracle Database in late December of 2013. Things were a bit hectic at that time and I didn’t have any time to write a blog post.

In addition to the 11.2.0.3 launch, starting today we are giving you the ability to change the system time zone used by your DB instances that are running Oracle Database.

Support for Oracle 11.2.0.3

You can now launch new DB instances running version 11.2.0.3 of Oracle Database.

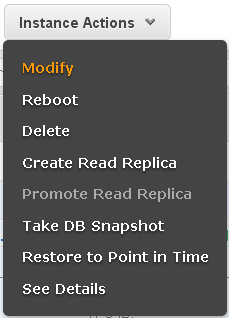

You can also upgrade an existing DB instance to this new version. Of course, you’ll want to create a test DB instance from a recent snapshot, upgrade it, and then run your acceptance tests before doing this to a production database. The upgrade process is very straightforward. You simply select the DB instance in the console and choose the Modify option from the Instance Actions menu:

Choose the new engine version (11.2.0.3v1) and click the Continue button to initiate the upgrade process:

You can check Apply Immediately for a quick update, or leave it unchecked in order to defer the upgrade to the next maintenance window for the DB instance.

Time Zone Option

You now have the ability to use the Time Zone option to change the system time zone for your DB instances that are running Oracle Database. This option changes the time zone at the host level, so you’ll want to do some testing to make sure that you understand the effect it will have on your application. You can use this option to maintain compatibility with a legacy application or an existing on-premises environment.

The Time Zone option must be specified in an option group. Once added, it is persistent and cannot be removed from the group. Further, the group cannot be disassociated from a running DB instance.

To learn more about working with time zones, read the new Oracle Time Zone section of the Amazon RDS User Guide.

These new features are available now and you can start using them today!

— Jeff;

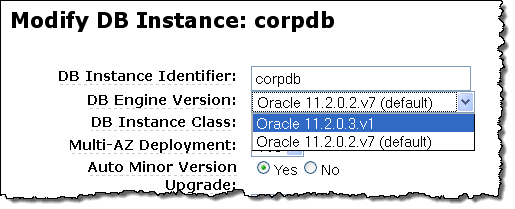

AWS Console for iOS and Android Now Supports AWS OpsWorks

The AWS Console for iOS and Android now includes support for AWS OpsWorks.

You can see your OpsWorks resources (stacks, layers, instances, apps, and deployments) with the newest version of the app. It also supports EC2, Elastic Load Balancing, the Relational Database Service, Auto Scaling, CloudWatch, and the Service Health Dashboard.

The Android version of the console app also gets a new native interface.

OpsWorks Support

With this new release, iOS and Android users have access to a wide variety of OpsWorks resources. Here’s what you can do:

- View and navigate your OpsWorks stacks, layers, instances, apps, and deployments.

- View the configuration details for each of these resources.

- View your CloudWatch metrics and alarms.

- View deployment details such as command, status, creation time, completion time, duration, and affected instances.

- Manage the OpsWorks instance lifecycle (e.g. reboot, stop, start), view logs, and create snapshots of attached Volumes.

Take a Look

Here are some screen shots of the Android console app in action. The dashboard displays resource counts and overall status:

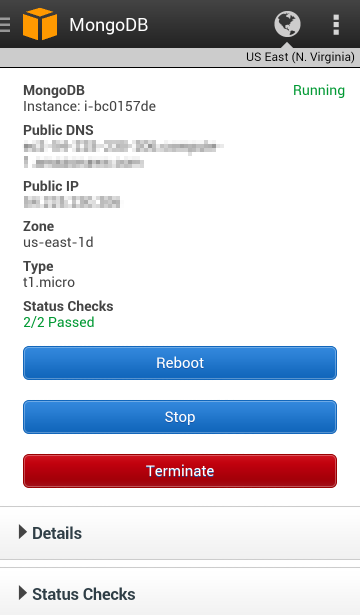

The status of each EC2 instance is visible. Instances can be rebooted, stopped, or terminated:

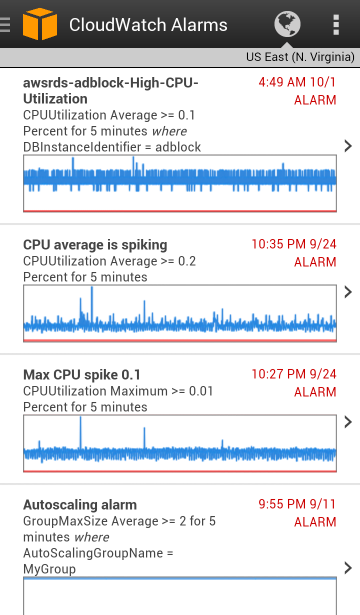

CloudWatch alarms and the associated metrics are visible:

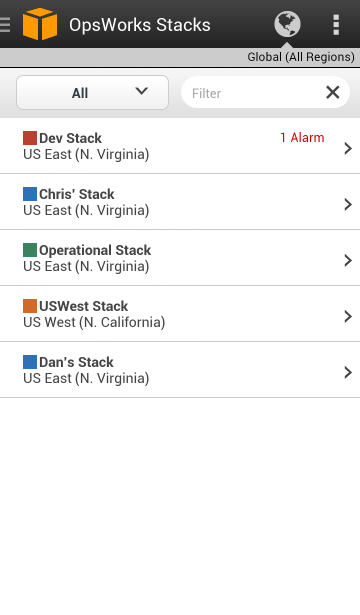

Each OpsWorks stack is shown, along with any alarms:

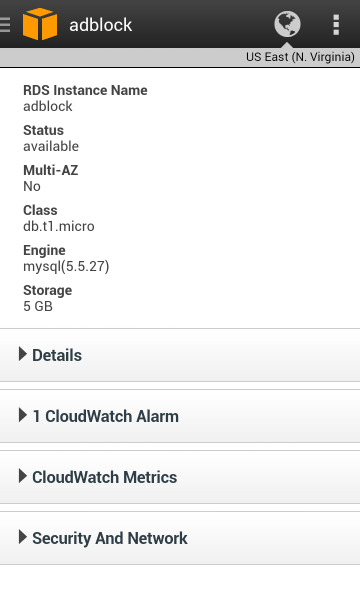

Full information is displayed for each database instance:

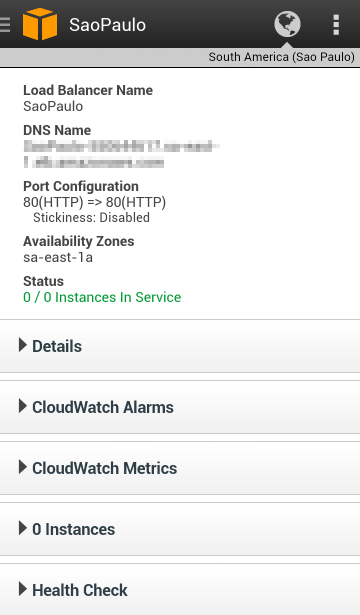

And for each Elastic Load Balancer:

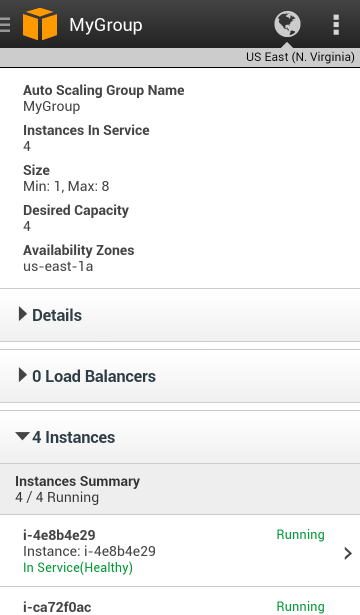

There’s also access to Auto Scaling resources:

Download Today

You can download the new version of the console app from Amazon AppStore, Google Play, or iTunes.

— Jeff;

Cross-Region Read Replicas for Amazon RDS for MySQL

You can now create cross-region read replicas for Amazon RDS database instances!

This feature builds upon our existing support for read replicas that reside within the same region as the source database instance. You can now create up to five in-region and cross-region replicas per source with a single API call or a couple of clicks in the AWS Management Console. We are launching with support for version 5.6 of MySQL.

Use Cases

You can use this feature to implement a cross-region disaster recovery model, scale out globally, or migrate an existing database to a new region:

Improve Disaster Recovery – You can operate a read replica in a region different from your master database region. In case of a regional disruption, you can promote the replica to be the new master and keep your business in operation.

Scale Out Globally – If your application has a user base that is spread out all over the planet, you can use Cross Region Read Replicas to serve read queries from an AWS region that is close to the user.

Migration Between Regions – Cross Region Read Replicas make it easy for you to migrate your application from one AWS region to another. Simply create the replica, ensure that it is current, promote it to be a master database instance, and point your application at it.

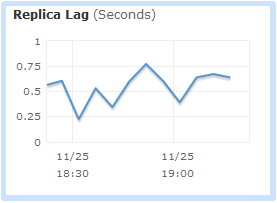

You will want to pay attention to replication lag when you implement any of these use cases. You can use Amazon CloudWatch to monitor this important metric, and to raise an alert if it reaches a level that is unacceptably high for your application:

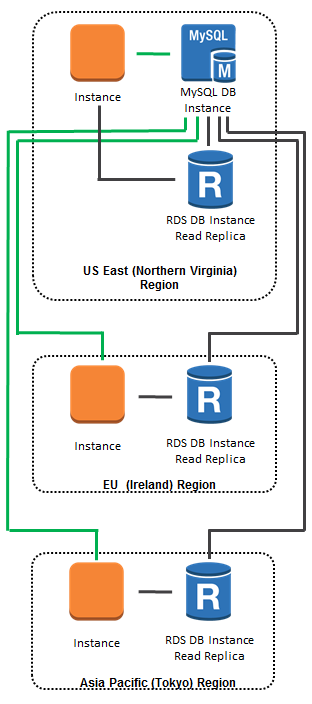
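To make the replication lag alert concrete, here is a hedged Python sketch that builds the parameters for a CloudWatch PutMetricAlarm call on the `ReplicaLag` metric in the `AWS/RDS` namespace. The replica name, the SNS topic ARN, the alarm naming scheme, and the five-minute evaluation window are all assumptions chosen for illustration; pick values that suit your own application.

```python
def replica_lag_alarm(replica_id: str, max_lag_seconds: int,
                      sns_topic_arn: str) -> dict:
    """Build keyword arguments for a CloudWatch PutMetricAlarm call on ReplicaLag."""
    if max_lag_seconds <= 0:
        raise ValueError("max_lag_seconds must be positive")
    return {
        "AlarmName": f"{replica_id}-replica-lag",  # hypothetical naming scheme
        "Namespace": "AWS/RDS",
        "MetricName": "ReplicaLag",                # reported in seconds
        "Dimensions": [{"Name": "DBInstanceIdentifier", "Value": replica_id}],
        "Statistic": "Average",
        "Period": 60,                              # one-minute averages
        "EvaluationPeriods": 5,                    # five consecutive breaches
        "Threshold": float(max_lag_seconds),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


if __name__ == "__main__":
    alarm = replica_lag_alarm(
        "mydb-replica-eu",                                 # hypothetical replica
        300,
        "arn:aws:sns:eu-west-1:123456789012:dba-alerts",   # hypothetical topic
    )
    print(alarm)
    # With boto3 installed and credentials configured (an assumption):
    #   import boto3
    #   boto3.client("cloudwatch", region_name="eu-west-1").put_metric_alarm(**alarm)
```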

As an example of what you can do with Cross Region Replicas, here’s a global scale-out model. All database updates (green lines) are directed to the database instance in the US East (Northern Virginia) region. All database queries (black lines) are directed to in-region or cross-region read replicas, as appropriate:

Creating Cross-Region Read Replicas

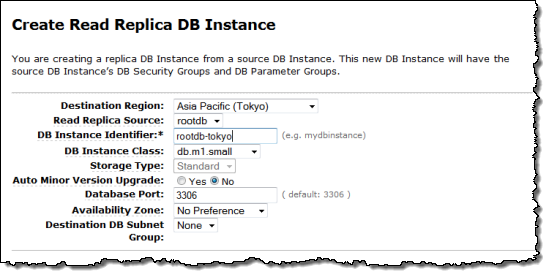

The cross-region replicas are very easy to create. You simply select the desired region (and optional availability zone) in the AWS Management Console:

You can also track the status of each of your read replicas using RDS Database Events.
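For the single API call mentioned earlier, the relevant action is CreateDBInstanceReadReplica, issued against the destination region with the source instance identified by its ARN. The Python sketch below builds those parameters only; the account ID, region names, and instance names are placeholders, and the helper names are mine rather than part of any SDK.

```python
from typing import Optional


def rds_instance_arn(region: str, account_id: str, instance_id: str) -> str:
    """Return the ARN of an RDS DB instance."""
    return f"arn:aws:rds:{region}:{account_id}:db:{instance_id}"


def cross_region_replica_request(source_region: str, account_id: str,
                                 source_id: str, replica_id: str,
                                 availability_zone: Optional[str] = None) -> dict:
    """Build keyword arguments for a CreateDBInstanceReadReplica call.

    The call itself must be sent to the destination region, not the source region.
    """
    request = {
        "DBInstanceIdentifier": replica_id,
        "SourceDBInstanceIdentifier": rds_instance_arn(
            source_region, account_id, source_id
        ),
    }
    if availability_zone is not None:
        request["AvailabilityZone"] = availability_zone  # optional, as in the console
    return request


if __name__ == "__main__":
    request = cross_region_replica_request(
        "us-east-1", "123456789012", "mydb", "mydb-replica-eu"  # placeholder values
    )
    print(request)
    # With boto3 installed and credentials configured (an assumption):
    #   import boto3
    #   boto3.client("rds", region_name="eu-west-1").create_db_instance_read_replica(**request)
```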

All data transfers between regions are encrypted using public key encryption. You pay the usual AWS charges for the database instance, the associated storage, and the data transfer between the regions.

Reports from the Field

I know that our users have been looking forward to this feature. Here’s some of the feedback that they have already sent our way:

Isaac Wong is VP of Platform Architecture at Medidata. He told us:

Medidata provides a cloud platform for life science companies to design and run clinical trials faster, cheaper, safer, and smarter. We use Amazon RDS to store mission critical clinical development data and tested many data migration scenarios between Asia and USA with the cross region snapshot feature and found it very simple and cost effective to use and an important step in our business continuity efforts. Our clinical platform is global in scope. The ability provided by the new Cross Region Read Replica feature to move data closer to the doctors and nurses participating in a trial anywhere in the world to shorten read latencies is awesome. It allows health professionals to focus on patients and not technology. Most importantly, using these cross region replication features, for life critical services in our platform we can insure that we are not affected by regional failure. Using AWS’s simple API’s we can very easily bake configuration and management into our deployment and monitoring systems at Medidata.

Joel Callaway is IT Operations Manager at Zoopla Property Group Ltd. This is what he had to say:

Amazon RDS cross region functionality gives us the ability to copy our data between regions and keep it up to date for disaster recovery purposes with a few automated steps on the AWS Management Console. Our property and housing prices website attracts over 20 million visitors per month and we use Amazon RDS to store business critical data of these visitors. Using the cross region snapshot feature, we already transfer hundreds of GB of data from our primary US-East region to the EU-West every week. Before this feature, it used to take us several days and manual steps to do this on our own. We now look forward to the Cross Region Read Replica feature, which would make it even easier to replicate our data along with our application stack across multiple regions in AWS.

Time to Replicate

This feature is available now and you can start using it today!

You may also want to investigate some of our other cross-region features including EC2 AMI Copy, RDS Snapshot Copy, DynamoDB Data Copy, and Redshift Snapshot Copy.

— Jeff;

Amazon RDS for PostgreSQL – Now Available

The Amazon Relational Database Service (RDS among friends) launched in 2009 with support for MySQL. We added Oracle Database in 2011 and Microsoft SQL Server in 2012.

Today we are adding support for PostgreSQL. After personally fielding hundreds of requests for this database engine over the last couple of years, I am more than happy to be making this announcement! Some of the people that I have talked to want to move their existing applications over to RDS. Others want to build new applications that take advantage of the data compression, ACID compliance, spatial data (via PostGIS), or full-text indexing that PostgreSQL has to offer.

Over the past few years, PostgreSQL has become the preferred open source relational database for many enterprise developers and start-ups, powering leading geospatial and mobile applications.

All of the Goodies

This is a full-featured release, with support for Multi-AZ deployments, Provisioned IOPS, the Virtual Private Cloud (VPC), automated backups, and point-in-time recovery. If you are new to AWS and/or RDS, here’s what that means:

Multi-AZ Deployments provide enhanced durability and availability by creating primary and secondary database instances in distinct AWS Availability Zones, with synchronous data replication from primary to secondary and automatic failover.

Provisioned IOPS allow you to specify the desired performance of the database, with a maximum value of 30,000 IOPS (Input/Output Operations Per Second).

Virtual Private Cloud support gives you the power to launch RDS database instances in an isolated section of the AWS cloud using a virtual network that you define.

Automated Backups are scheduled to run on a daily basis at a time you designate, with automatic expiration of old backups in 1 to 35 days (your choice). Backups are durably stored in Amazon S3.

Point-in-time Recovery starts with an existing database instance and creates a new instance, representing the original instance as it existed at a specified time.

Cross-Region Snapshot Copy lets you copy your database snapshots across AWS Regions with a few clicks in the AWS Management Console. This allows you to take advantage of multiple AWS Regions for geographical expansion, data center migration, and disaster recovery.

We are launching with support for version 9.3.1 of PostgreSQL, with plans to support new versions as they become available. We also support the PostGIS spatial database extender, the PL/Perl, PL/Tcl, and PL/pgSQL query languages, full text search dictionaries, and advanced data types such as Hstore and JSON at launch time. You can learn more about the full set of extensions by checking the rds.extensions parameter or the Amazon RDS User Guide.

When using Amazon RDS for PostgreSQL, you can take advantage of the products and services of a variety of AWS partners. These include geospatial applications from ESRI and CartoDB that leverage PostGIS. You can also use Business Intelligence products and services from Tableau and Jaspersoft to visualize and interact with your PostgreSQL data and leverage the power of the cloud while taking advantage of the benefits of Amazon RDS. Check out our testimonials page to learn more.

Getting Started

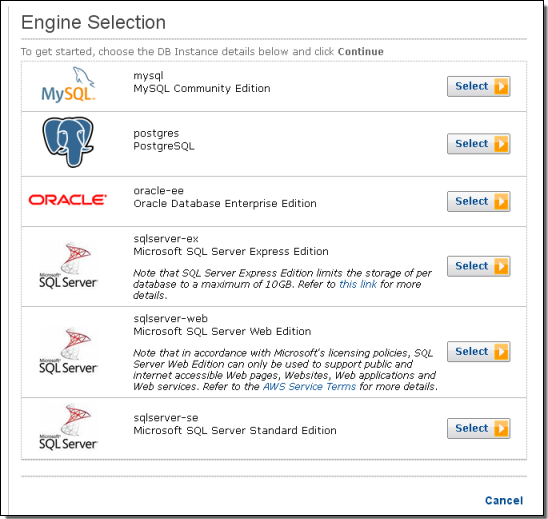

You can launch a PostgreSQL instance from the AWS Management Console. Click the Launch DB Instance button and select the PostgreSQL engine:

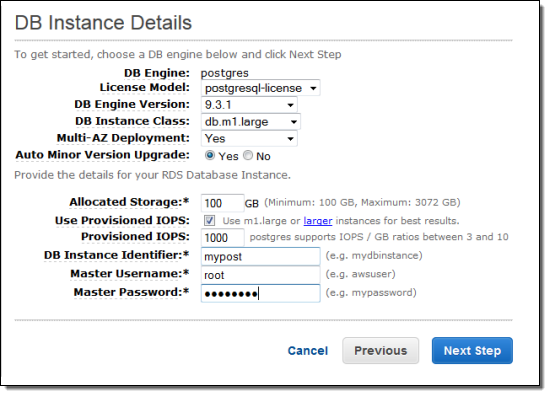

Select the instance size and fill in the other details. Note that you can create DB instances with up to 3 TB of storage and 30,000 Provisioned IOPS:

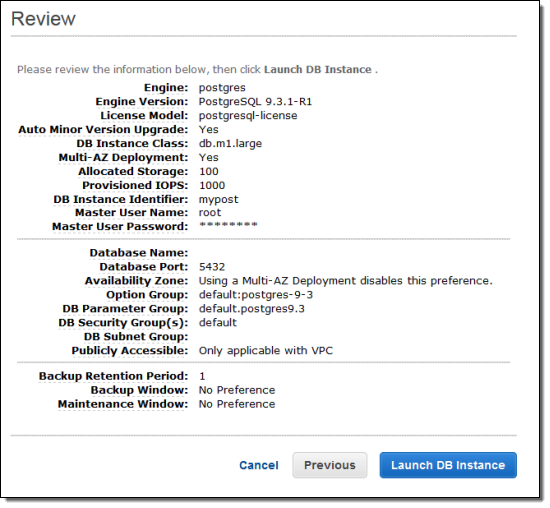

Confirm your intent to create a database instance and click the Launch DB Instance button:

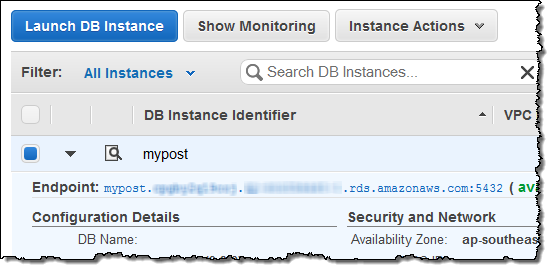

After a few minutes the database instance will be up and running. You can then copy the Endpoint string from the console, plug it into your code, and start running queries:
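As a rough illustration of plugging the endpoint into code, here is a Python sketch that turns an Endpoint string into a standard `postgresql://` connection URI that PostgreSQL client libraries generally accept. The endpoint, database name, and credentials are made up, the helper name `postgres_uri` is mine, and I am assuming the Endpoint may or may not carry a `:port` suffix, so the sketch handles both.

```python
from urllib.parse import quote


def postgres_uri(endpoint: str, database: str, user: str, password: str,
                 default_port: int = 5432) -> str:
    """Build a postgresql:// connection URI from an RDS Endpoint string."""
    host, _, port_text = endpoint.partition(":")
    port = int(port_text) if port_text else default_port
    # Percent-encode credentials and database name so special characters survive.
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}"
    )


if __name__ == "__main__":
    # Hypothetical endpoint and credentials, for illustration only.
    print(postgres_uri(
        "mydb.example.us-east-1.rds.amazonaws.com:5432",
        "appdb", "admin", "p@ss/word",
    ))
```

You would then hand the resulting URI to whichever PostgreSQL driver your application already uses.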

Amazon RDS for PostgreSQL is available now in all AWS Regions and you can start using it today!

— Jeff;

Amazon RDS for Microsoft SQL Server – Transparent Data Encryption (TDE)

Amazon RDS for Microsoft SQL Server now supports the use of Transparent Data Encryption (TDE). Once enabled, the database instance encrypts data before it is stored in the database and decrypts it after it is retrieved.

You can use this feature in conjunction with our previously announced support for SSL connections to SQL Server to protect data at rest and in transit. You can also create and access your database instances inside of a Virtual Private Cloud in order to have complete control over your networking configuration.

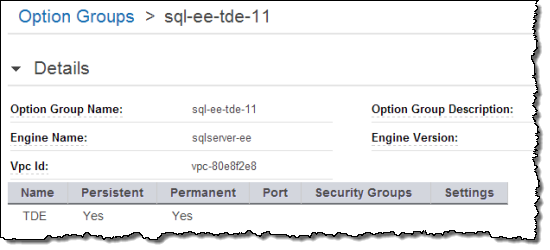

To enable TDE for an Amazon RDS for SQL Server instance, simply specify the TDE option in a Database Option Group that is associated with the instance:

Amazon RDS will generate a certificate that will be used in the encryption process. If running instances are making use of the option group, the certificate will be deployed to the instances.

Then, locate the certificate and encrypt the desired databases using the ALTER DATABASE command.

Here’s how you go about setting things up. First, you locate the certificate using a pattern match:

    -- Find a RDSTDECertificate to use
    USE [master]
    GO
    SELECT name FROM sys.certificates WHERE name LIKE 'RDSTDECertificate%'
    GO

Then you switch to your database and create the encryption key using the certificate name from the previous step:

    USE [customerDatabase]
    GO
    -- Create DEK using one of the certificates from the previous step
    CREATE DATABASE ENCRYPTION KEY
    WITH ALGORITHM = AES_128
    ENCRYPTION BY SERVER CERTIFICATE [RDSTDECertificateName]
    GO

And then you encrypt the database:

    -- Enable encryption on the database
    ALTER DATABASE [customerDatabase]
    SET ENCRYPTION ON
    GO

You can verify that the database is encrypted like this:

    -- Verify that the database is encrypted
    USE [master]
    GO
    SELECT name FROM sys.databases WHERE is_encrypted = 1
    GO
    SELECT db_name(database_id) AS DatabaseName, * FROM sys.dm_database_encryption_keys
    GO

To learn more about using TDE with Amazon RDS for SQL Server, please visit the Amazon RDS for SQL Server detail page and our documentation.

— Jeff;

PS – If you are running Amazon RDS for Oracle Database, you’ll be happy to know that it also supports Transparent Data Encryption.

Amazon RDS Partners & New RDS Partner Page

As you may know, Amazon RDS makes it easy for our customers to set up, scale, and operate a relational database in the cloud. Amazon RDS supports MySQL, Oracle (Standard Edition One, Standard Edition, and Enterprise Edition) and Microsoft SQL Server (Web, Express, Standard, and Enterprise editions). RDS handles the time-consuming database administration tasks so that you can focus on your applications and build your business. With Multi-AZ deployments, RDS provides a Service Level Agreement (SLA) of 99.95% uptime per instance per month, making it a great fit for production applications.

Thousands of customers have brought their workloads to RDS over the past four years. Over that time, we have quietly formed an ecosystem of partners. The creative solutions built by these partners add value to RDS and make it an even better fit for specific industries, applications, and use cases. We have created a new Amazon RDS Partner page to showcase our partners and their unique services.

Here are some examples of what the RDS partners are doing:

Alfresco, a leading open document management and collaboration application, runs on Amazon RDS. Using RDS, Alfresco deploys a scalable, fault-tolerant database to provide their customers with a business-critical document management system.

SugarCRM, the world’s leading open source customer relationship management application, runs on Amazon RDS for MySQL. If you are coming to re:Invent 2013, you can learn how Zac Sprackett, VP of Operations, is achieving cloud efficiencies by attending his session, DAT-201 Understanding Database Options.

DLZP focuses on implementing, migrating, and managing Oracle applications in the AWS Cloud. DLZP helps customers to deploy PeopleSoft Financial and Supply Chain Management and Human Capital Management using Amazon RDS for Oracle.

Attunity and Dbvisit enable customers to move their data from on-premise databases as well as multiple heterogeneous sources to Amazon RDS. Equally, they can move the data from Amazon RDS to external targets.

Webyog provides the MONyog data management tool. This “MySQL DBA in a box” tool enables developers to manage and tune MySQL databases running on Amazon RDS easily.

Leading BI tool vendors such as Jaspersoft, Logi Analytics, MicroStrategy, SiSense, and Tableau (to name a few) make it easy for their customers to connect and analyze data residing in Amazon RDS. Customers can set up a MySQL, Oracle, or SQL Server database and connect to their preferred BI tool within minutes.

8KMiles helps companies adopt Amazon Web Services. 8KMiles recommends Amazon RDS to their customers because of the ease of deployment, backups with point-in-time recovery, Multi-AZ, and low management overhead.

CSS Corp is a global technology solutions provider to enterprises, consumers, and technology companies. CSS uses Amazon RDS for everything from quick, small-scale relational database requirements to long-running, large-scale ones.

To read more about the entire Amazon RDS partner ecosystem, please visit our partner page. This is just the beginning. We expect to add many more partners, so stay tuned!

— Jeff;

Cross-Region Snapshot Copy for Amazon RDS

I know that many AWS customers are interested in building applications that run in more than one of the eight public AWS regions. As a result, we have been working to add features to AWS to simplify and streamline the data manipulation operations associated with building and running global applications. In the recent past we have given you the ability to copy EC2 AMIs, EBS Snapshots, and DynamoDB tables between Regions.

RDS Snapshot Copy

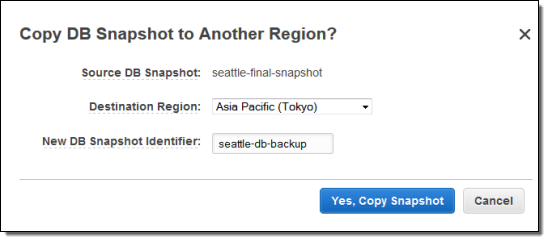

Today we are taking the next logical step, giving you the ability to copy Amazon RDS (Relational Database Service) snapshots between AWS regions. You can initiate the copy from the AWS Management Console, the AWS Command Line Interface (CLI), or through the Amazon RDS APIs. Here’s what you will see in the Console:

You can copy snapshots of any size, from any of the database engines (MySQL, Oracle, or SQL Server) that are supported by RDS. Copies can be moved between any of the public AWS regions, and you can copy the same snapshot to multiple Regions simultaneously by initiating more than one transfer.

As is the case with the other copy operations, the copy is done on an incremental basis, and only the data that has changed since the last snapshot of a given Database Instance will be copied. When you delete a snapshot, deletion is limited to the data that will not affect other snapshots.

There is no charge for the copy operation itself; you pay only for the data transfer out of the source region and for the data storage in the destination region. You are not charged if the copy fails, but you are charged if you cancel a snapshot copy that is underway.
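Copying one snapshot to several Regions at once amounts to issuing one CopyDBSnapshot request per destination. Here is a minimal Python sketch that prepares those requests; `SourceDBSnapshotIdentifier` and `TargetDBSnapshotIdentifier` are the API’s parameter names, while the account ID, the snapshot name, the `-copy` suffix, and the helper name are assumptions for illustration.

```python
from typing import Dict, Iterable


def snapshot_copy_requests(source_region: str, account_id: str, snapshot_id: str,
                           destination_regions: Iterable[str]) -> Dict[str, dict]:
    """Map each destination region to keyword arguments for a CopyDBSnapshot call.

    Each request must be sent to its own destination region.
    """
    source_arn = f"arn:aws:rds:{source_region}:{account_id}:snapshot:{snapshot_id}"
    requests = {}
    for region in destination_regions:
        if region == source_region:
            raise ValueError("destination region must differ from the source region")
        requests[region] = {
            "SourceDBSnapshotIdentifier": source_arn,
            "TargetDBSnapshotIdentifier": f"{snapshot_id}-copy",  # hypothetical suffix
        }
    return requests


if __name__ == "__main__":
    plans = snapshot_copy_requests(
        "us-east-1", "123456789012", "mydb-2013-10-31",  # placeholder values
        ["eu-west-1", "ap-southeast-1"],
    )
    for region, request in plans.items():
        print(region, request)
        # With boto3 installed and credentials configured (an assumption):
        #   boto3.client("rds", region_name=region).copy_db_snapshot(**request)
```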

Democratizing Disaster Recovery

One of my colleagues described this feature as “democratizing data recovery.” Imagine all of the headaches (and expense) that you would incur while setting up the network, storage, processing, and security infrastructure that would be needed to do this on your own, without the benefit of the cloud. You would have to acquire co-lo space, add racks, set up network links and encryption, create the backups, and arrange to copy them from location to location. You would invest thousands of dollars in infrastructure, and the same (if not more) in DBA and system administrator time.

All of these pain points (and the associated costs) go away when you copy backups from Region to Region using RDS.

— Jeff;

Migrate On-Premises MySQL Data to Amazon RDS (and back)

I love to demo Amazon RDS. It is really cool to be able to launch a relational database instance in minutes, and to show my audiences how it manages scaling, backups, restores, patches, and availability so that they can focus on their application.

After my demo, I invariably get questions about data migration. The audiences see the power and value of RDS and are interested in moving their existing data (and applications) to the cloud. However, because most databases are processing changes all the time, this is a non-trivial exercise.

Today we are introducing a pair of new features to simplify the process of migrating data to Amazon RDS running MySQL, and back out again. Both of these features rely on MySQL’s replication capabilities.

As you may know, replication works by copying the data modification operations (INSERT, DELETE, and so forth) performed on the master to the slave, and then running them on the slave. As long as the master and the slave start with identical copies of the replicated database tables, the two will stay in sync.

Migrating Data From an External MySQL Instance to RDS

In order to migrate data from an existing MySQL instance running on-premises or on an EC2 instance, you’ll need to configure it as a replication source. You’ll need to poke a hole in your corporate firewall if your database is on-premises, or add an entry to your EC2 instance’s security group if your database is already hosted on AWS.

Next, you will need to launch and prepare an Amazon RDS DB instance running MySQL. Then you will use mysqldump to dump the databases or database tables that you want to migrate, and take note of the current log file name. Next, you’ll use the database dump to initialize the RDS database instance.

Once the RDS instance is initialized, you will need to run the replication stored procedures that we supply as part of this release to configure the RDS instance as a read replica of the instance running on premises or on EC2, and start the replication process. Once your RDS instance catches up with any changes that have taken place on the master, you can instruct your application to use it in preference to the existing version.
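To give a feel for the stored procedure step, here is a hedged Python sketch that generates the two SQL statements you would run on the RDS instance: a call to `mysql.rds_set_external_master` followed by `mysql.rds_start_replication`. The host name, replication user, password, and binary log coordinates are placeholders (use the values you noted when taking the dump), and the quoting helper is a simplification for illustration rather than a substitute for your driver’s parameter handling.

```python
from typing import List


def sql_quote(value: str) -> str:
    """Quote a string literal for MySQL (escapes backslashes and single quotes)."""
    return "'" + value.replace("\\", "\\\\").replace("'", "''") + "'"


def replication_setup_sql(host: str, port: int, user: str, password: str,
                          log_file: str, log_position: int,
                          use_ssl: bool = False) -> List[str]:
    """Return the statements that point an RDS MySQL instance at an external master."""
    set_master = (
        "CALL mysql.rds_set_external_master("
        f"{sql_quote(host)}, {int(port)}, {sql_quote(user)}, {sql_quote(password)}, "
        f"{sql_quote(log_file)}, {int(log_position)}, {1 if use_ssl else 0});"
    )
    return [set_master, "CALL mysql.rds_start_replication;"]


if __name__ == "__main__":
    # Placeholder connection details and binary log coordinates.
    for statement in replication_setup_sql(
        "onprem.example.com", 3306, "repl_user", "secret", "mysql-bin.000031", 107
    ):
        print(statement)
```

Run the generated statements while connected to the RDS instance as its master user; the documentation covers the remaining steps.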

You also have the option to use RDS Provisioned IOPS, and you can also set up RDS in Multi-AZ mode if you’d like. However, we recommend that you do not configure Multi-AZ until after the import has completed.

The full process is a bit more involved and we have plenty of documentation to help you along the way. This feature was designed to be used in conjunction with MySQL 5.5 (version 5.5.33 or later) and 5.6 (version 5.6.13 or later).

Migrating Data to an External MySQL Instance from RDS

You can also invert the above process in order to move data from an RDS database instance to a MySQL server that is running on-premises or on EC2. This process uses an RDS Read Replica to convince the master to hold on to the log files for longer than usual.

Again, we have plenty of documentation to help you navigate the process. This feature was designed to be used in conjunction with MySQL 5.6 (version 5.6.13 or later).

The Road to Replication

If you have read this far and you have some experience with MySQL replication, you may be thinking “Cool — I can set up replication from my existing database to the cloud, creating a hot spare for easy failover.”

It is best to think of replication as a component of a fail-to-cloud model, rather than as a complete solution in and of itself. Because there’s a network connection in between the master and the slave, you would need to monitor and maintain the connection, track replication delays, and so forth in order to create a robust solution.

Migrate That Data!

You can start migrating your data today. Here are the documents and stored procedures you’ll need to have in order to get started:

- Importing data from an external MySQL database instance to an RDS MySQL database instance.

- Exporting data from an RDS MySQL database instance to an external MySQL instance.

— Jeff;