AWS News Blog

Now Available – AWS CodePipeline

We announced AWS CodePipeline at AWS re:Invent last fall (see my post, New AWS Tools for Code Management and Deployment for the details). As I said at that time, this tool will help you to model and automate your software release process. The automation provided by CodePipeline is designed to make your release process more reliable and more efficient.

Available Now

I am thrilled to be able to announce that CodePipeline is now available and you can start using it today.

Before I dive into the details, I’d like to share a bit of the backstory with you.

Inside Amazon: Pipelines

Just as AWS CodeDeploy was inspired by an internal Amazon tool known as Apollo (read The Story of Apollo – Amazon’s Deployment Engine to learn more), CodePipeline has an internal Amazon predecessor.

We built our own continuous delivery service after we measured the time that it took code to be deployed to production after it was checked in. The time was far too long and was a clear measure of the challenge that our development teams felt as they worked to get features into the hands of customers as quickly as possible.

Upon further investigation it turned out that most of the time wasn’t being spent on actually performing builds, running tests, or doing deployments. Instead, manual, ticket-driven hand-offs between teams turned out to be a significant source of friction. Tickets sat in queues waiting for someone to notice them, build and test results sat around until they could be reviewed, and person-to-person notifications had to be sent in order to move things along.

Given Amazon’s focus on automation and the use of robotics to speed the flow of tangible goods through our fulfillment centers, it was somewhat ironic that we relied on manual, human-driven processes to move intangible bits through our software delivery process.

In order to speed things up, we built an internal system known as Pipelines. This system allowed our teams to connect up all of the parts of their release process. This included source code control, builds, deployment & testing in a preproduction environment, and deployment to production. Needless to say, this tool dramatically reduced the time that it took for a given code change to make it to production.

Although faster delivery was the primary driver for this work, it turned out that we saw some other benefits as well. By ensuring that every code change went through the same quality gates, our teams caught problems earlier and produced releases that were of higher quality, resulting in a reduction in the number of rollbacks due to bad code that was deployed to production.

Now, Pipelines is used pervasively across Amazon. Teams use it as their primary dashboard and use it to monitor and control their software releases.

With today’s launch of CodePipeline, you now have access to the same capabilities our own developers do!

All About CodePipeline

CodePipeline is a continuous delivery service for software! It allows you to model, visualize, and automate the steps that are required to release your software. You can define and then fully customize all of the steps that your code takes as it travels from check-in, to build, on to testing, and then to deployment.

Your organization, like most others, probably uses a variety of tools (open source and otherwise) as part of your build process. The built-in integrations, along with those that are available from our partners, will allow you to use your existing tools in this new and highly automated workflow-driven world. You can also connect your own source control, build, test, and deployment tools to CodePipeline using the new custom action API.
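To give a feel for how the custom action API is used, here is a minimal sketch of one polling pass of a job worker for your own tool. It uses the Boto3 CodePipeline client methods `poll_for_jobs`, `acknowledge_job`, `put_job_success_result`, and `put_job_failure_result`. The action type (provider `MyTestTool` in the `Test` category) and the `handler` callback are hypothetical stand-ins for your tool, and the sketch assumes that action type has already been registered (for example with `create_custom_action_type`). The client is passed in so the logic can be exercised without AWS credentials.

```python
from typing import Any, Callable, Dict

# Hypothetical custom action type; owner "Custom" marks an action you run yourself.
ACTION_TYPE = {"category": "Test", "owner": "Custom", "provider": "MyTestTool", "version": "1"}


def process_jobs_once(client: Any, handler: Callable[[Dict[str, Any]], None]) -> int:
    """Poll once, run each job through handler, and report the outcome.

    client is a Boto3 CodePipeline client (or any object with the same methods).
    Returns the number of jobs processed in this pass.
    """
    jobs = client.poll_for_jobs(actionTypeId=ACTION_TYPE, maxBatchSize=1)["jobs"]
    for job in jobs:
        # Tell CodePipeline that this worker has received the job.
        client.acknowledge_job(jobId=job["id"], nonce=job["nonce"])
        try:
            # The job data carries the action configuration and input artifacts.
            handler(job["data"])
        except Exception as exc:
            # Any handler error is reported back so the pipeline marks the action failed.
            client.put_job_failure_result(
                jobId=job["id"],
                failureDetails={"type": "JobFailed", "message": str(exc)},
            )
        else:
            client.put_job_success_result(jobId=job["id"])
    return len(jobs)


# Usage (requires AWS credentials and a registered custom action type):
# import boto3
# process_jobs_once(boto3.client("codepipeline"), run_my_tests)  # run_my_tests is yours
```

A real worker would call this in a loop with a pause between polls; keeping a single pass in its own function makes it easy to test.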

Automating your release process will make it faster and more consistent. As a result, you will be able to push small changes into production far more frequently. You’ll be able to use the CodePipeline dashboard to view and control changes as they flow through your release pipeline.

A Quick Tour

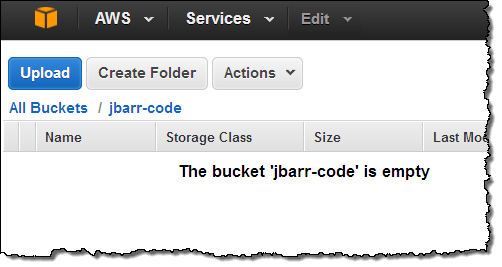

Let’s take a quick CodePipeline tour. I will create a simple two-stage pipeline that uses a versioned S3 bucket and AWS CodeDeploy to release a sample application.

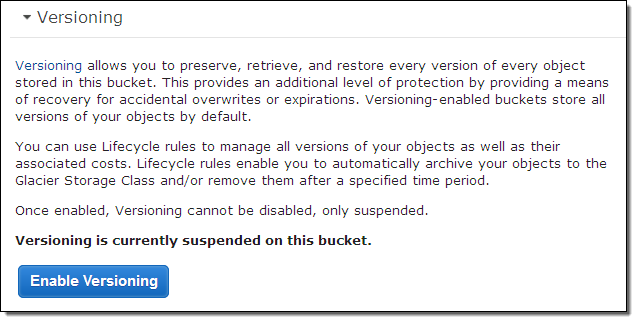

I start by creating a new bucket (jbarr-code) and enabling versioning:

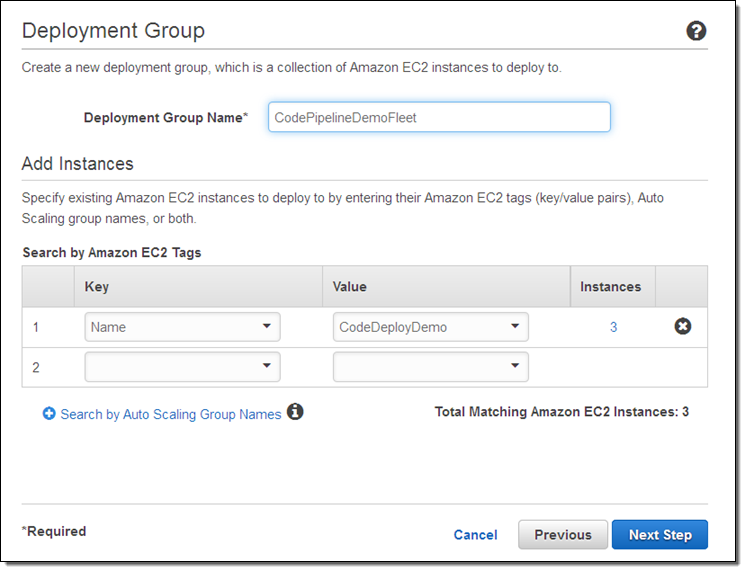
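If you would rather script this step than use the S3 console, the sketch below builds the keyword arguments for the Boto3 S3 calls `create_bucket` and `put_bucket_versioning`. It assumes you are working in US East (N. Virginia), where `create_bucket` needs no location constraint; the helper name `bucket_requests` is hypothetical.

```python
from typing import Any, Dict, Tuple


def bucket_requests(bucket: str, region: str = "us-east-1") -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return keyword arguments for s3.create_bucket and s3.put_bucket_versioning."""
    create: Dict[str, Any] = {"Bucket": bucket}
    if region != "us-east-1":
        # Outside us-east-1, CreateBucket needs an explicit location constraint.
        create["CreateBucketConfiguration"] = {"LocationConstraint": region}
    versioning = {
        "Bucket": bucket,
        # CodePipeline's S3 source action requires a versioned bucket.
        "VersioningConfiguration": {"Status": "Enabled"},
    }
    return create, versioning


# Usage with Boto3 (requires AWS credentials):
# import boto3
# s3 = boto3.client("s3", region_name="us-east-1")
# create, versioning = bucket_requests("jbarr-code")
# s3.create_bucket(**create)
# s3.put_bucket_versioning(**versioning)
```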

I need a place to deploy my code, so I open up the CodeDeploy Console and use the sample deployment. It launches three EC2 instances into a Deployment Group:

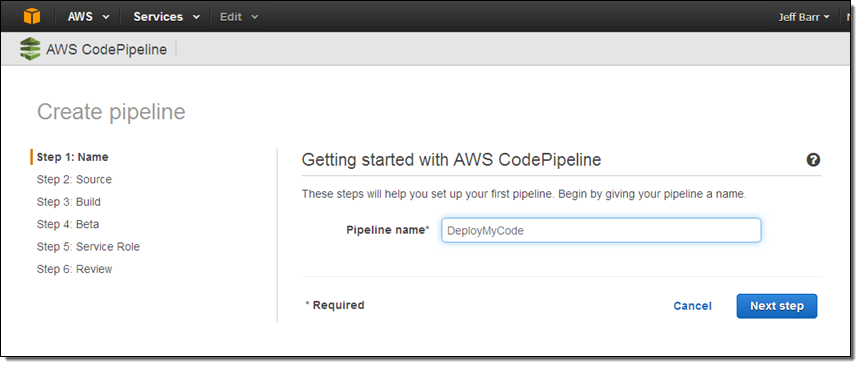

Then I open up the CodePipeline Console and use the Deployment Walkthrough Wizard to create my deployment resources. I’ll call my pipeline DeployMyCode:

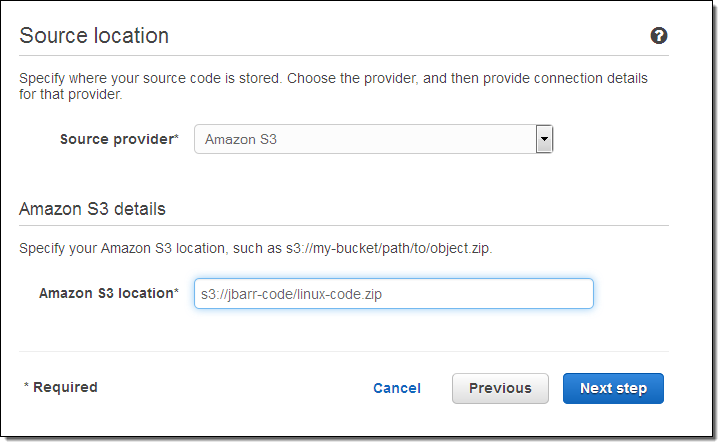

Now I tell CodePipeline where to find the code. I reference the S3 bucket and the object name (this object contains the code that I want to deploy) as the Source Provider:

Alternatively, I could have used a GitHub repo as my Source Provider.

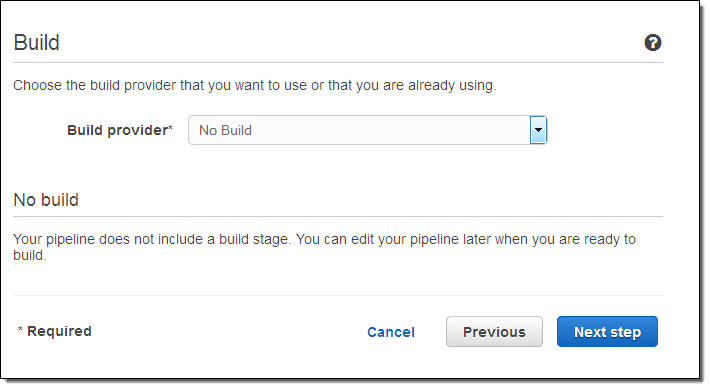
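Behind the wizard, the choice of source provider becomes a Source stage in the pipeline's JSON structure. Here is a minimal sketch of that stage for an S3 source, using the action type `AWS`/`Source`/`S3` and the configuration keys `S3Bucket` and `S3ObjectKey`. The object key `MyApp.zip` and the artifact name `MyApp` are hypothetical examples.

```python
from typing import Any, Dict


def s3_source_stage(bucket: str, object_key: str, artifact: str = "MyApp") -> Dict[str, Any]:
    """Build a Source stage that picks up new versions of one object in a versioned bucket."""
    return {
        "name": "Source",
        "actions": [
            {
                "name": "Source",
                "actionTypeId": {
                    "category": "Source",
                    "owner": "AWS",
                    "provider": "S3",
                    "version": "1",
                },
                "configuration": {"S3Bucket": bucket, "S3ObjectKey": object_key},
                # Later stages consume the fetched revision under this artifact name.
                "outputArtifacts": [{"name": artifact}],
                "runOrder": 1,
            }
        ],
    }


stage = s3_source_stage("jbarr-code", "MyApp.zip")  # hypothetical object key
```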

The next step is to tell CodePipeline how to build my code by specifying a Build Provider. In this case the code is a script and can be run as-is, so I don’t need to do a build.

I can also use Jenkins as a Build Provider.

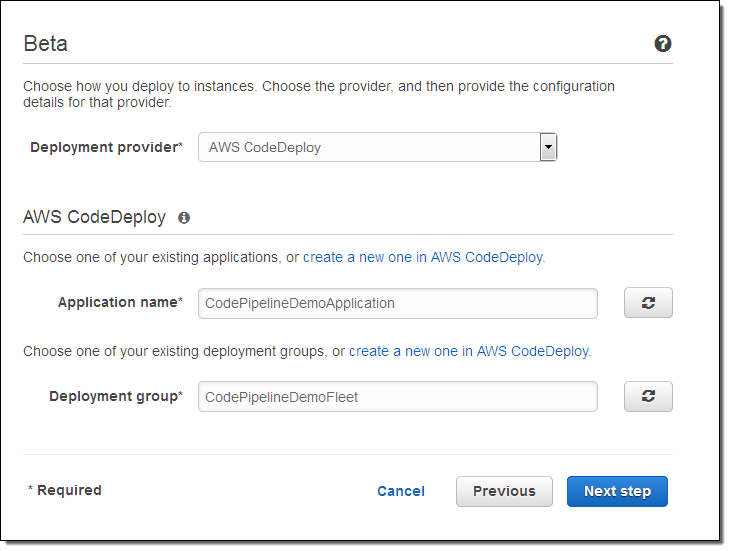

Since my pipeline doesn’t include a build step, the next step is to deploy the code for pre-production testing. I arrange to use the CodeDeploy configuration (including the target EC2 instances) that I set up a few minutes ago:

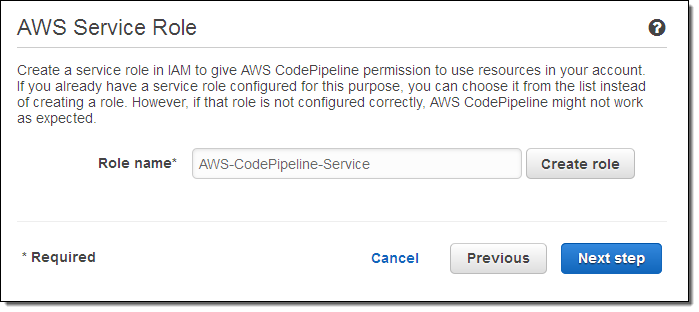
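In the pipeline structure, this becomes a stage with a CodeDeploy action that names an existing application and deployment group. The sketch below assumes a stage name of `Beta` and uses hypothetical application and deployment group names; substitute the ones the CodeDeploy sample created in your account.

```python
from typing import Any, Dict


def codedeploy_stage(application: str, deployment_group: str,
                     artifact: str = "MyApp", stage_name: str = "Beta") -> Dict[str, Any]:
    """Build a stage that hands an input artifact to an existing CodeDeploy deployment group."""
    return {
        "name": stage_name,
        "actions": [
            {
                "name": "Deploy",
                "actionTypeId": {
                    "category": "Deploy",
                    "owner": "AWS",
                    "provider": "CodeDeploy",
                    "version": "1",
                },
                # Must match the application and deployment group defined in CodeDeploy.
                "configuration": {
                    "ApplicationName": application,
                    "DeploymentGroupName": deployment_group,
                },
                # The artifact produced by an earlier (Source) stage.
                "inputArtifacts": [{"name": artifact}],
                "runOrder": 1,
            }
        ],
    }


beta = codedeploy_stage("SampleApp", "SampleGroup")  # hypothetical names
```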

I need to give CodePipeline permission to use AWS resources in my account by creating an IAM role:

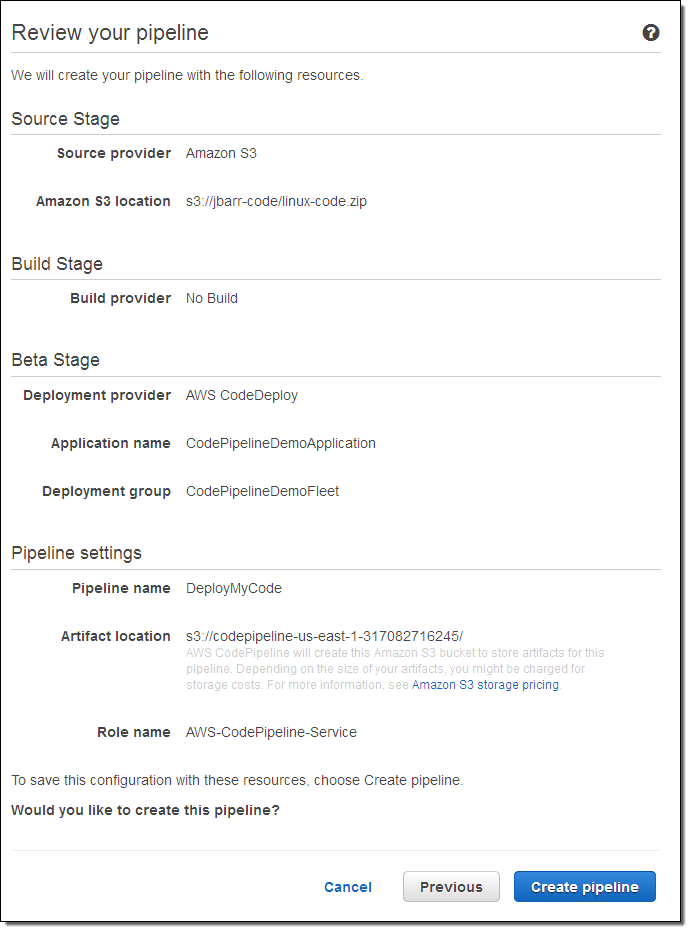
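The key part of that role is its trust policy, which lets the CodePipeline service assume it. Here is a minimal sketch of the keyword arguments for the Boto3 IAM call `create_role`; the role name `MyCodePipelineRole` is hypothetical, and the role still needs a separate permissions policy (for S3 and CodeDeploy access) that this sketch does not cover.

```python
import json
from typing import Any, Dict


def codepipeline_trust_policy() -> str:
    """Trust policy allowing the CodePipeline service to assume the role."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "codepipeline.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return json.dumps(policy)


def create_role_request(role_name: str) -> Dict[str, Any]:
    """Keyword arguments for iam.create_role."""
    return {"RoleName": role_name, "AssumeRolePolicyDocument": codepipeline_trust_policy()}


# Usage with Boto3 (requires AWS credentials):
# import boto3
# role_arn = boto3.client("iam").create_role(**create_role_request("MyCodePipelineRole"))["Role"]["Arn"]
```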

With that step done, I can confirm my selections and create my pipeline:

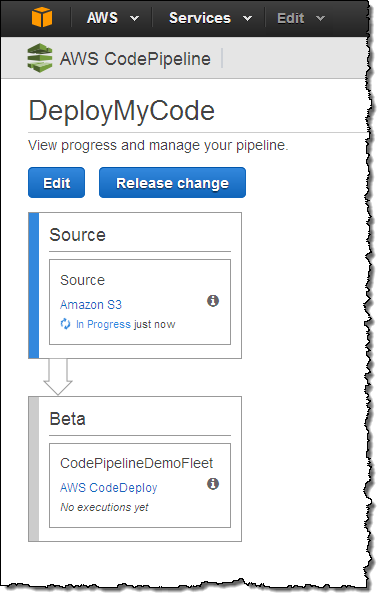
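Creating the pipeline from code means passing the whole structure to the Boto3 `create_pipeline` call: a name, the service role ARN, an S3 artifact store, and the list of stages. The sketch below wraps that in a request builder plus a simplified local check that every input artifact is produced by an earlier stage. The check is my own sanity test, not part of the API, and it is stricter than CodePipeline (which also lets later actions in the same stage consume earlier outputs). The artifact bucket name and account ID are hypothetical.

```python
from typing import Any, Dict, List


def check_artifact_flow(stages: List[Dict[str, Any]]) -> None:
    """Raise ValueError if an action consumes an artifact no earlier stage produced."""
    produced = set()
    for stage in stages:
        for action in stage["actions"]:
            for artifact in action.get("inputArtifacts", []):
                if artifact["name"] not in produced:
                    raise ValueError(
                        f"{stage['name']}/{action['name']} needs unknown artifact {artifact['name']!r}"
                    )
        # Outputs only become available to the stages that follow.
        for action in stage["actions"]:
            for artifact in action.get("outputArtifacts", []):
                produced.add(artifact["name"])


def create_pipeline_request(name: str, role_arn: str, artifact_bucket: str,
                            stages: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Keyword arguments for codepipeline.create_pipeline."""
    check_artifact_flow(stages)
    return {
        "pipeline": {
            "name": name,
            "roleArn": role_arn,
            # Artifacts passed between stages are stored in this bucket.
            "artifactStore": {"type": "S3", "location": artifact_bucket},
            "stages": stages,
        }
    }


# Usage with Boto3 (requires AWS credentials):
# import boto3
# boto3.client("codepipeline").create_pipeline(**create_pipeline_request(
#     "DeployMyCode", "arn:aws:iam::123456789012:role/MyCodePipelineRole",
#     "my-artifact-bucket", stages))
```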

The pipeline starts running immediately:

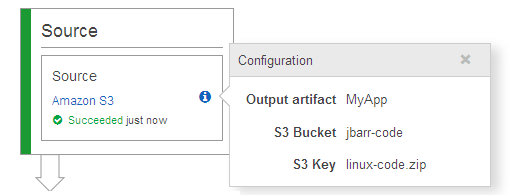
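The status shown in the console is also available from the Boto3 `get_pipeline_state` call, whose response lists `stageStates`, each with a `stageName` and (once the stage has run) a `latestExecution` with a `status`. Here is a minimal sketch that condenses such a response into one line per stage; the sample response in the test is made up for illustration.

```python
from typing import Any, Dict, List


def summarize_state(state: Dict[str, Any]) -> List[str]:
    """Turn a get_pipeline_state response into 'Stage: status' lines."""
    lines = []
    for stage in state.get("stageStates", []):
        # latestExecution may be missing for a stage that has not run yet.
        status = stage.get("latestExecution", {}).get("status", "(not run yet)")
        lines.append(f"{stage['stageName']}: {status}")
    return lines


# Usage with Boto3 (requires AWS credentials):
# import boto3
# for line in summarize_state(boto3.client("codepipeline").get_pipeline_state(name="DeployMyCode")):
#     print(line)
```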

I can hover my cursor over the “i” to learn more about the configuration of a particular stage:

When my code changes and I upload a new version to S3 (in this case), CodePipeline will detect the change and run the pipeline automatically. I can also click on the Release change button to do the same thing.

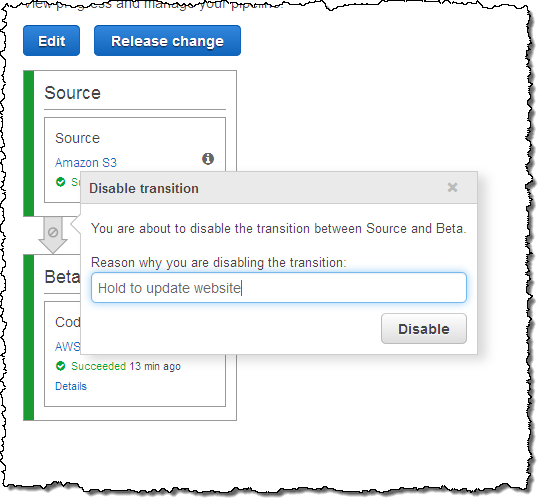
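Both ways of kicking off a run map to simple API calls: uploading a new object version with the Boto3 S3 `put_object` call, or starting a run directly with `start_pipeline_execution`, the programmatic counterpart of the Release change button. The sketch below builds the keyword arguments for each; the object key `MyApp.zip` is a hypothetical example.

```python
from typing import Any, Dict


def upload_revision_request(bucket: str, key: str, body: bytes) -> Dict[str, Any]:
    """Keyword arguments for s3.put_object.

    Writing to the same key in a versioned bucket creates a new object version,
    which is the change the S3 source action detects.
    """
    return {"Bucket": bucket, "Key": key, "Body": body}


def release_change_request(pipeline_name: str) -> Dict[str, str]:
    """Keyword arguments for codepipeline.start_pipeline_execution."""
    return {"name": pipeline_name}


# Usage with Boto3 (requires AWS credentials):
# import boto3
# with open("MyApp.zip", "rb") as f:
#     boto3.client("s3").put_object(**upload_revision_request("jbarr-code", "MyApp.zip", f.read()))
# run = boto3.client("codepipeline").start_pipeline_execution(**release_change_request("DeployMyCode"))
# print(run["pipelineExecutionId"])
```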

If it ever becomes necessary to pause a pipeline between stages, I can do so with a click (and an explanation):

I can enable it again later with a click!

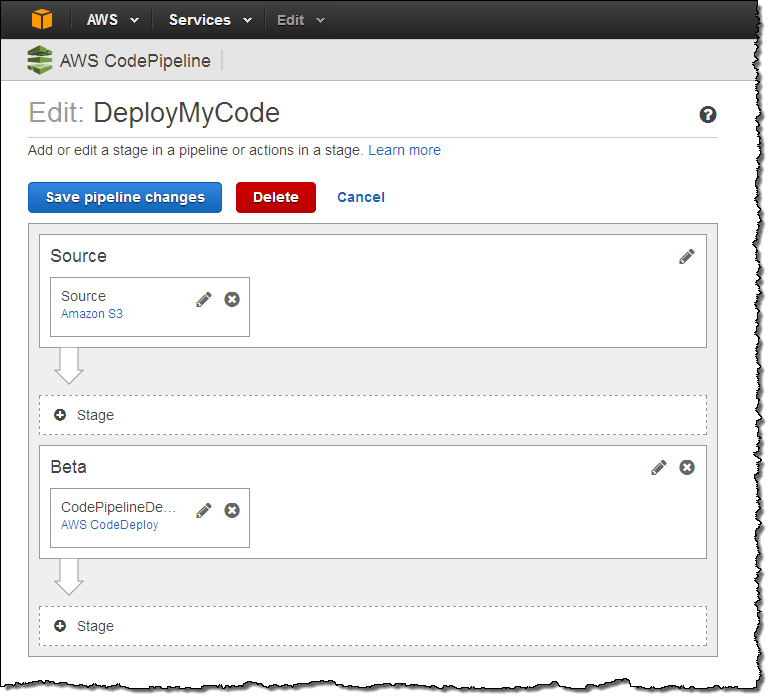
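Pausing and resuming correspond to the Boto3 calls `disable_stage_transition` and `enable_stage_transition`. Disabling the inbound transition of a stage keeps new changes from entering it, and the API asks for a reason, just like the console. In this sketch the stage name `Beta` and the reason text are hypothetical examples.

```python
from typing import Any, Dict


def disable_transition_request(pipeline: str, stage: str, reason: str) -> Dict[str, Any]:
    """Keyword arguments for codepipeline.disable_stage_transition."""
    if not reason.strip():
        # An explanation is required when pausing a transition.
        raise ValueError("a reason is required when disabling a transition")
    return {
        "pipelineName": pipeline,
        "stageName": stage,
        # Inbound: stop changes from entering this stage.
        "transitionType": "Inbound",
        "reason": reason,
    }


def enable_transition_request(pipeline: str, stage: str) -> Dict[str, str]:
    """Keyword arguments for codepipeline.enable_stage_transition."""
    return {"pipelineName": pipeline, "stageName": stage, "transitionType": "Inbound"}


# Usage with Boto3 (requires AWS credentials):
# import boto3
# cp = boto3.client("codepipeline")
# cp.disable_stage_transition(**disable_transition_request("DeployMyCode", "Beta", "Investigating a failed test"))
# cp.enable_stage_transition(**enable_transition_request("DeployMyCode", "Beta"))
```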

I can also edit my pipeline. I can alter an existing stage, append new stages at the end, or insert them at other points in the pipeline:

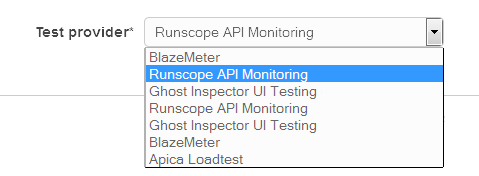

For example, I could add a testing step and then (if the test succeeds) a deployment to production. If I add a test step I also need to select and then connect to a test provider:

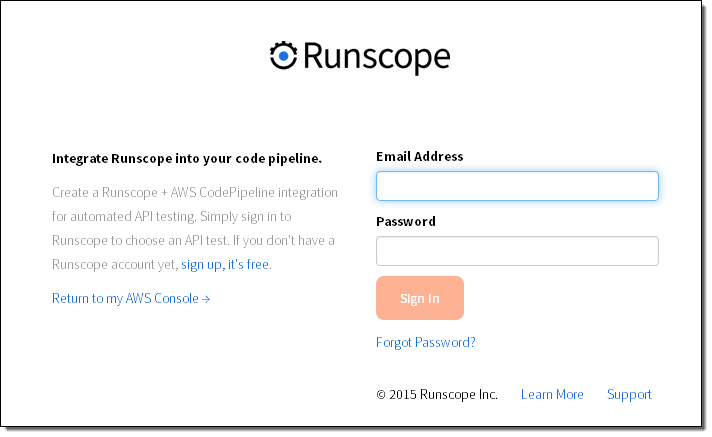

The connection step takes place on the test provider’s web site:

All of the actions described above (and many more) can also be performed via the AWS Command Line Interface (AWS CLI).
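Editing a pipeline outside the console follows a read-modify-write pattern: fetch the structure (`aws codepipeline get-pipeline`, or `get_pipeline` in Boto3), change the list of stages, and send it back (`update-pipeline` / `update_pipeline`). Here is a minimal sketch of the "modify" step that appends a stage or inserts it after a named stage. The helper name and the `Test` stage in the test are hypothetical, and the uniqueness check reflects my understanding that stage names must be unique within a pipeline.

```python
import copy
from typing import Any, Dict, Optional


def insert_stage(pipeline: Dict[str, Any], new_stage: Dict[str, Any],
                 after: Optional[str] = None) -> Dict[str, Any]:
    """Return a copy of the pipeline with new_stage inserted after the named stage.

    With after=None the new stage is appended at the end.
    """
    updated = copy.deepcopy(pipeline)  # leave the fetched structure untouched
    stages = updated["stages"]
    names = [s["name"] for s in stages]
    if new_stage["name"] in names:
        raise ValueError(f"stage {new_stage['name']!r} already exists")
    if after is None:
        stages.append(new_stage)
    else:
        # list.index raises ValueError if the anchor stage does not exist.
        stages.insert(names.index(after) + 1, new_stage)
    return updated


# Usage with Boto3 (requires AWS credentials):
# import boto3
# cp = boto3.client("codepipeline")
# current = cp.get_pipeline(name="DeployMyCode")["pipeline"]
# cp.update_pipeline(pipeline=insert_stage(current, test_stage, after="Beta"))
```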

CodePipeline Integration

As I mentioned earlier, CodePipeline can make use of existing source control, build, test, and deployment tools. Here’s what I know about so far (leave me a comment if I missed any and I’ll update this post):

Source Control

- Amazon Simple Storage Service (Amazon S3) – Connect a pipeline to release source changes from an S3 bucket.

- GitHub – Connect a pipeline to release source changes from a public or private repo.

Build & Continuous Integration

- Jenkins – Run builds on a Jenkins server hosted in the cloud or on-premises.

- CloudBees – Run continuous integration builds and tests on the CloudBees Jenkins Platform.

Testing

- Apica – Run high-volume load tests with the Apica LoadTest service.

- BlazeMeter – Run high-volume load tests with the BlazeMeter service.

- Ghost Inspector – Run UI automation tests with the Ghost Inspector service.

- Runscope – Run API tests with the Runscope service.

Deployment

- AWS CodeDeploy – Deploy to Amazon Elastic Compute Cloud (Amazon EC2) or any server using CodeDeploy.

- AWS Elastic Beanstalk – Deploy to Elastic Beanstalk application containers.

- XebiaLabs – Deploy using declarative automation and pre-built plans with XL Deploy.

Available Now

CodePipeline is available now and you can start using it today in the US East (N. Virginia) region, with plans (as usual) to expand to other regions over time.

You’ll pay $1 per active pipeline per month (the first one is available to you at no charge as part of the AWS Free Tier). An active pipeline has at least one code change move through it during the course of a month.
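To make the pricing arithmetic concrete, here is a small sketch that estimates one month's bill at the launch price described above: $1 per active pipeline, with one active pipeline covered by the Free Tier. It assumes the Free Tier applies to your account and that prices stay as announced; the input is simply the number of code changes that moved through each pipeline that month.

```python
from typing import Iterable

PRICE_PER_ACTIVE_PIPELINE = 1.00  # USD per month, launch price from this post
FREE_TIER_PIPELINES = 1           # assumes the account qualifies for the Free Tier


def monthly_cost(changes_per_pipeline: Iterable[int]) -> float:
    """Estimate one month's charge from how many changes went through each pipeline."""
    # A pipeline is active if at least one code change moved through it this month.
    active = sum(1 for n in changes_per_pipeline if n >= 1)
    billable = max(0, active - FREE_TIER_PIPELINES)
    return billable * PRICE_PER_ACTIVE_PIPELINE
```

For example, three pipelines that saw 0, 3, and 1 changes give two active pipelines, one of which is free, for an estimated $1.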

— Jeff;