Module 1: Understanding Amazon ECS, ECR, & AWS Fargate

LEARNING MODULE

Overview

This module introduces Amazon ECS, Amazon ECS on AWS Fargate, and Amazon ECR. You will learn about clusters, containers and images, tasks and task definitions, services, and launch types, for containers running on Linux and Windows. Finally, you will bring all these elements together to examine scenarios and the paths you can take to arrive at your best container solution using Amazon ECS or Amazon ECS on AWS Fargate for your .NET applications.

Time to Complete

30 minutes

Introduction to Amazon ECS

Amazon ECS is a service used to run container-based applications in the cloud. It provides for highly scalable and fast container management, and integrates with other AWS services to provide security, container orchestration, continuous integration and deployment, service discovery, and monitoring and observability.

You launch containers using container images, similar to how you launch virtual machines from virtual machine images. Amazon ECS deploys and runs containers from container images deployed to Amazon Elastic Container Registry (Amazon ECR), or Docker Hub.

Amazon ECS uses task definitions to define the containers that make up your application. A task definition specifies how your application's containers are run. You can define and use an individual task, which runs and then stops on completion, or you can specify that your task should run within a service. Services continuously run and maintain a specified number of tasks simultaneously, which makes them suitable for longer-running applications such as web applications.

If required, you can choose to configure and manage the infrastructure hosting your containers or use Amazon ECS on AWS Fargate to take advantage of a serverless approach where AWS manages the container infrastructure and you focus on your application. Amazon ECS provides two models for running containers, referred to as launch types.

EC2 launch type

The EC2 launch type is used to run containers on one or more Amazon Elastic Compute Cloud (EC2) instances configured in clusters. When you use the EC2 launch type, you have full control over the configuration and management of the infrastructure that hosts your containers.

Choose the EC2 launch type for your containers when you must manage your infrastructure, or your applications require consistently high CPU core and memory usage, need to be price-optimized, or need persistent storage.

Fargate launch type

The Fargate launch type is a serverless pay-as-you-go option for running your containers. Serverless means you do not have to configure infrastructure to host your container instances, unlike the EC2 launch type, which requires you to understand how to configure and manage clusters of instances to host your running containers.

Amazon ECS Resources

Besides using launch types to control how you want to manage your container infrastructure, you will encounter and use several types of resources when working with Amazon ECS.

Cluster

A cluster is a logical group of compute resources, in a specific Region. Clusters hold the running container instances hosting your applications and application components, helping isolate them so they don't use the same underlying infrastructure. This improves availability, should a particular item of infrastructure hosting your application fail. Only the affected cluster will need to be restarted.

Regardless of whether you use Amazon ECS, or Amazon ECS on AWS Fargate, you will work with clusters. What differs is the level of management expected from you. If you specify the EC2 launch type when creating clusters, you take on the responsibility to configure and manage those clusters. When you use the Fargate launch type, however, it is Fargate's responsibility to manage them.

Container

A container holds all the code, runtime, tools, and system libraries required by the application or application component in the container to run. When you start container instances to host your applications, they run on the compute infrastructure associated with a cluster.

Read-only templates known as container images are used to launch containers. Before you can use an image to run your containers, you need to deploy the container image to a registry, for example, Amazon Elastic Container Registry (Amazon ECR) or Docker Hub.

You define container images using a resource called a Dockerfile. A Dockerfile is a text file that details all the components and resources you want included in the image. Amazon ECS uses the same Dockerfile used when defining container images for .NET applications elsewhere, with no changes. Using the docker build command, you turn the commands and settings defined in the Dockerfile into a container image you can push to a registry, or run locally in Docker. Container tools available from AWS, detailed in module 2, will often handle the build and push of the image for you.

Task Definition

A task definition is a JSON-format text file used to describe the containers that make up your application. A single task definition can describe up to 10 containers.

You can think of a task definition as an application environment blueprint, specifying application and operating system parameters. Examples are which network ports should be open, and any data volumes that need to be attached, amongst other resources.

Amazon ECS does not restrict your application to a single task definition. In fact, it's recommended you combine related containers for a component making up a part of your application into a single task definition, and use multiple task definitions to describe the entire application. This allows the different logical components that make up your application to scale independently.

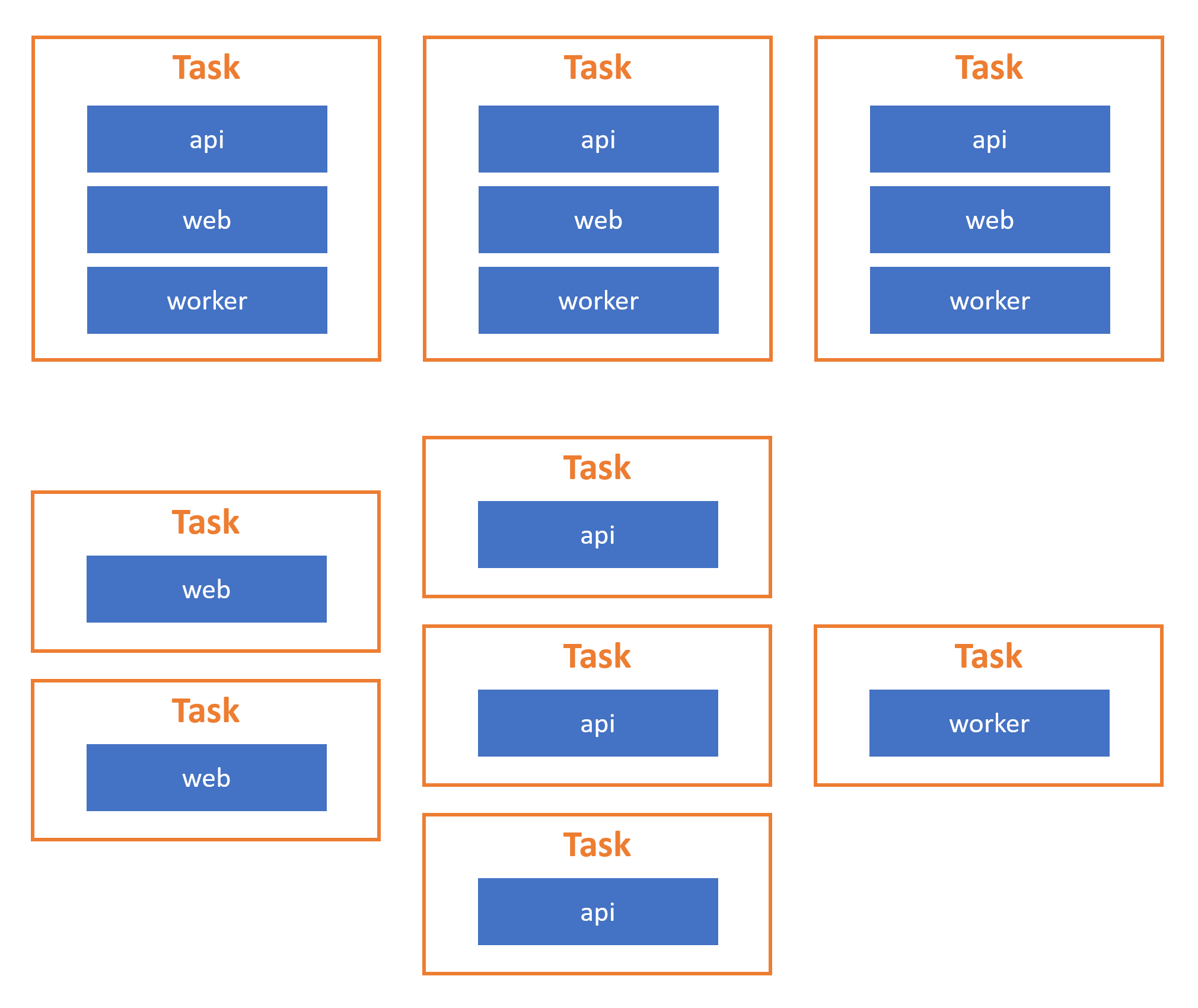

Consider a typical n-tier web application, comprising a web UI frontend tier, API tier, middle tier, and database component tiers. The image below shows how you can group these component tiers in different task definitions. This allows, for example, the web UI tier to scale horizontally, independently of the other components and vice versa, if it experienced a usage surge. If you defined all the tiers in a single task definition, the whole application would scale under load, including tiers that were not experiencing increased usage, increasing the time to scale out (if your application is large) and potentially increasing your financial costs.
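The grouping described above can be sketched in a few lines of Python. This is a minimal illustration only: the tier names, image names, and the `build_task_definition` helper are hypothetical, and the code just builds JSON documents and enforces the 10-container limit mentioned earlier. It does not call any AWS API.

```python
import json

MAX_CONTAINERS_PER_TASK_DEFINITION = 10  # limit stated in this module


def build_task_definition(family, images):
    """Return a minimal task definition dict for one application tier.

    `family` and `images` are illustrative inputs, not values from a real application.
    """
    if not images:
        raise ValueError("a task definition needs at least one container")
    if len(images) > MAX_CONTAINERS_PER_TASK_DEFINITION:
        raise ValueError("a task definition can describe at most 10 containers")
    return {
        "family": family,
        "containerDefinitions": [
            # The first container is marked essential; the rest are treated as helpers.
            {"name": f"{family}-{i}", "image": image, "essential": i == 0}
            for i, image in enumerate(images)
        ],
    }


# One task definition per tier (hypothetical image names), so each tier can scale on its own.
tiers = {
    "web-ui": ["example/web-ui:1.0"],
    "api": ["example/api:1.0", "example/api-sidecar:1.0"],
    "middle-tier": ["example/middle-tier:1.0"],
}
task_definitions = [build_task_definition(name, images) for name, images in tiers.items()]

for definition in task_definitions:
    print(json.dumps(definition, indent=2))
```

Keeping each tier in its own dictionary mirrors the recommendation to group only closely related containers together.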

Below, you can find an example of a task definition. It sets up a web server using Linux containers on the Fargate launch type.

{
  "containerDefinitions": [
    {
      "command": [
        "/bin/sh -c \"echo '<html> <head> <title>Amazon ECS Sample App</title> <style>body {margin-top: 40px; background-color: #333;} </style> </head><body> <div style=color:white;text-align:center> <h1>Amazon ECS Sample App</h1> <h2>Congratulations!</h2> <p>Your application is now running on a container in Amazon ECS.</p> </div></body></html>' > /usr/local/apache2/htdocs/index.html && httpd-foreground\""
      ],
      "entryPoint": [
        "sh",
        "-c"
      ],
      "essential": true,
      "image": "httpd:2.4",
      "logConfiguration": {
        "logDriver": "awslogs",
        "options": {
          "awslogs-group": "/ecs/fargate-task-definition",
          "awslogs-region": "us-east-1",
          "awslogs-stream-prefix": "ecs"
        }
      },
      "name": "sample-fargate-app",
      "portMappings": [
        {
          "containerPort": 80,
          "hostPort": 80,
          "protocol": "tcp"
        }
      ]
    }
  ],
  "cpu": "256",
  "executionRoleArn": "arn:aws:iam::012345678910:role/ecsTaskExecutionRole",
  "family": "fargate-task-definition",
  "memory": "512",
  "networkMode": "awsvpc",
  "runtimePlatform": {
    "operatingSystemFamily": "LINUX"
  },
  "requiresCompatibilities": [
    "FARGATE"
  ]
}

Task

When Amazon ECS instantiates a task definition, it creates one or more tasks that run within a cluster. A task is a running instance of a task definition, made up of the containers that the definition describes. The number of tasks to run on the cluster is specified when you run the task or create a service, rather than in the task definition itself.

You can configure tasks to run standalone, causing the container to stop when the task completes, or you can run tasks continuously as a service. When run as part of a service, Amazon ECS maintains a specified number of tasks running simultaneously, replacing failed containers automatically.

Use a standalone task for application code that does not need to run continuously. The code runs once inside the task and then ends, ending the container instance. An example would be batch processing of some data.

Scheduled Task

Use scheduled tasks for application code that needs to run periodically without operator intervention to start the task manually. Example scenarios are code to perform inspection of logs, backup operations, or periodic extract-transform-load (ETL) jobs.

Service

Amazon ECS runs an agent on each container instance in a cluster. You do not need to install or otherwise maintain this agent yourself. The agent reports information on the running tasks and utilization of the container instances, enabling Amazon ECS to detect tasks that fail or stop. When this happens, Amazon ECS replaces the failed tasks with new instances to maintain the specified number of tasks in the service, without requiring you to monitor and take action yourself.

Use a service for application code that needs to run continuously. Examples are a website front-end, or a Web API.
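The replacement behavior described above can be pictured as a simple reconciliation step. The sketch below is a toy model, not how Amazon ECS is implemented: the `Task` class, the `reconcile` function, and the numeric task IDs are all hypothetical and exist only to show the idea of keeping the number of running tasks equal to a desired count.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Task:
    task_id: int
    healthy: bool = True


def reconcile(tasks, desired_count, new_ids):
    """Drop failed tasks, then start or stop tasks to match desired_count."""
    running = [t for t in tasks if t.healthy]
    while len(running) < desired_count:
        # Start a replacement task with a fresh (hypothetical) ID.
        running.append(Task(task_id=next(new_ids)))
    return running[:desired_count]


ids = count(1)
tasks = [Task(next(ids)) for _ in range(3)]  # a service with three tasks: 1, 2, 3
tasks[1].healthy = False                     # simulate task 2 failing
tasks = reconcile(tasks, desired_count=3, new_ids=ids)
print([t.task_id for t in tasks])  # [1, 3, 4]
```

The failed task is not repaired in place; it is discarded and a new task takes its slot, which is why data written inside a container should not be relied on to survive.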

Persisting application data

Running container instances have a writable layer that can store data. However, the writable layer is transient, and is destroyed when the container instance ends, either by user action or because the instance has become unhealthy and Amazon ECS has replaced it. This makes the writable layer an unsuitable approach for long-term data storage, such as data in a database. Further, data in the writable layer is only accessible by code running on the individual container instance, making it unsuitable for data you need to share in an application spanning multiple container instances.

To enable application data to be stored for a period longer than the lifetime of a container instance, or to be shareable across multiple container instances, AWS provides several storage services. These storage services decouple data storage from compute instances. Using storage decoupled from compute instances enables application data to outlive the container instance(s) the application is running on, and makes the data shareable across multiple instances.

Storage services available to containerized .NET applications depend on whether the applications are running on Linux or Windows containers.

Persistent storage options for Linux containers

Linux containers currently support the widest set of storage services for .NET applications when running in Amazon ECS, or Amazon ECS on AWS Fargate. The choice of storage service will depend on whether the application needs shared, concurrent access to the data.

Amazon Elastic Block Store (Amazon EBS)

Amazon Elastic Block Store (Amazon EBS) is a storage service that provides block level storage volumes. An EBS volume provides applications with storage that's mountable to Linux containers, accessed just like a regular drive device. Amazon EBS automatically replicates data in EBS volumes within an Availability Zone, making it a reliable storage solution that helps improve application reliability should a storage volume fail.

EBS volumes are dynamically resizable, support encryption, and also support snapshots to make copies. If required, you can detach volumes from a container and reattach them to a different one. To suit the performance and price requirements of your application, Amazon EBS provides different volume types.

EBS volumes are created in a specific Availability Zone in a Region. The volume is then mountable to a container instance, using settings in the task definition, running in the same zone. To access the same data from a different Availability Zone, snapshot the volume, and use the snapshot to create a new volume elsewhere in the same, or a different, Region. A single snapshot can create many volumes across Availability Zones and Regions. This is an approach to consider for read-only application data that is to be consumed by applications requiring high availability, and that you've deployed to multiple container instances spanning different Availability Zones and Regions.

Amazon EBS is a good storage solution to consider for applications that require fast, low-latency access to data that is not shared concurrently, as applications scale horizontally (i.e., run in multiple container instances). Examples include general file systems and databases that are accessed from a single container instance.

Amazon EBS does not support concurrent access to a volume. For applications that need to share a single file system mounted across multiple containers, consider Amazon Elastic File System (Amazon EFS) or one of the file systems available from Amazon FSx.

Amazon Elastic File System (Amazon EFS)

Amazon EFS provides a scalable file system service, accessed using Network File System (NFS), that doesn't require you to manage storage. File systems managed through Amazon EFS are attachable to multiple Linux-based container instances simultaneously, with read-write consistency and file locking. This provides the ability to share data on a drive, for read and write access, across multiple containers hosting a scaled-out application. Amazon EFS storage is also dynamic, expanding (and shrinking) capacity automatically as application storage needs change.

You only pay for the storage your applications consume. By default, data in file systems created in Amazon EFS is stored across multiple Availability Zones in a Region to provide resilience and durability. Amazon EFS refers to this mode as the Standard storage class. If an application doesn't need full multi-AZ storage, instead use the One Zone storage class to save cost. Standard-Infrequent Access and One Zone-Infrequent Access storage classes are also available to host data that applications access on a non-regular basis to save further cost.

Amazon EFS file systems are suitable for a broad range of applications, including web applications, content management systems, home folders for users, and general file servers. The file systems support authentication, authorization, and encryption. Access control uses standard POSIX permissions.

The example task definition snippet below shows how to mount an EFS file system for a task.

"containerDefinitions": [
  {
    "mountPoints": [
      {
        "containerPath": "/opt/my-app",
        "sourceVolume": "Shared-EFS-Volume"
      }
    ]
  }
]
...
"volumes": [
  {
    "efsVolumeConfiguration": {
      "fileSystemId": "fs-1234",
      "transitEncryption": "DISABLED",
      "rootDirectory": ""
    },
    "name": "Shared-EFS-Volume"
  }
]
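In the snippet above, the container's mount point and the task-level volume are linked only by name: `sourceVolume` must match the `name` of an entry in `volumes`. A small check like the following can catch a mismatch before a task definition is registered. It is a sketch under assumptions: the `undeclared_volumes` helper and the container name `app` are hypothetical, and the check runs locally on a dictionary rather than calling any AWS API.

```python
def undeclared_volumes(task_definition):
    """Return sourceVolume names used by containers but missing from `volumes`."""
    declared = {v["name"] for v in task_definition.get("volumes", [])}
    missing = []
    for container in task_definition.get("containerDefinitions", []):
        for mount in container.get("mountPoints", []):
            if mount["sourceVolume"] not in declared:
                missing.append(mount["sourceVolume"])
    return missing


# Same volume and mount point as the snippet above; the container name is illustrative.
task_definition = {
    "containerDefinitions": [
        {
            "name": "app",
            "mountPoints": [
                {"containerPath": "/opt/my-app", "sourceVolume": "Shared-EFS-Volume"}
            ],
        }
    ],
    "volumes": [
        {
            "name": "Shared-EFS-Volume",
            "efsVolumeConfiguration": {"fileSystemId": "fs-1234"},
        }
    ],
}

print(undeclared_volumes(task_definition))  # []
```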

Amazon FSx for Lustre

Lustre is an open-source file system designed to address the performance needs of machine learning, high-performance computing (HPC), video processing, and financial modeling. For .NET applications addressing those solutions, or other scenarios requiring sub-millisecond latencies, Amazon FSx for Lustre can provide the persistent storage layer for Linux containers.

Note: at the time of writing, tasks running in AWS Fargate do not support FSx for Lustre file systems.

File systems created in FSx for Lustre are POSIX-compliant. This means you can continue to use the same, familiar file access controls you already use for your .NET applications running on Linux. File systems hosted in FSx for Lustre also provide read-write consistency and file locking.

Depending on the needs of the application, a choice of solid state (SSD) and hard drive (HDD) storage is available, optimized for different workload requirements. SSD storage is suitable for IOPS-intensive applications that are sensitive to latency and which typically see small, random access file operations. The HDD storage type is suitable for applications with high-throughput requirements, typically involving large and sequential file operations. With HDD storage, you can also add a read-only SSD cache, sized to 20% of the HDD storage, to allow for sub-millisecond latency and higher IOPS, improving performance for frequently accessed files.

File systems in FSx for Lustre can also link to an Amazon S3 bucket, with full read-write access. This provides your .NET application with the ability to process objects in the S3 bucket as if they were already resident in a file system, an option for applications built to process data from large, cloud-based datasets already in S3 without needing to copy that data into a file system before accessing, and updating, it.

Note that you can also mount Lustre file systems using a command in your Docker container using the lustre-client package; this enables you to mount file systems dynamically within the container.

Persistent storage options for Windows containers

For Windows containers running .NET and .NET Framework applications, file storage provided by Amazon FSx for Windows File Server is available for data persistence and data sharing across one or more containers running in a task.

Amazon FSx for Windows File Server

FSx for Windows File Server uses actual Windows File Server instances, accessible via standard Windows file shares over SMB, to store and serve application data. Standard Windows file shares enable use of features and administrative tools already familiar to Windows File Server admins, such as end-user file restore using shadow copies, user quotas, and Access Control Lists (ACLs). SMB also enables connection to an FSx for Windows File Server share from Linux containers.

File systems in FSx for Windows File Server can help lower storage costs for applications using data deduplication and compression. Additional features include data encryption, auditable file access, and scheduled automatic backups. Access to file system shares is controllable using integration with an on-premises Microsoft Active Directory (AD), or Managed AD in AWS.

FSx for Windows File Server is suitable for migrating on-premises Windows-based file servers to the cloud to work alongside containerized .NET/.NET Framework applications. It’s also suitable for use with .NET and .NET Framework applications needing access to hybrid cloud and on-premises data stores (with Amazon FSx File Gateway). For applications using SQL Server, FSx for Windows File Server enables running these database workloads with no requirement for SQL Server Enterprise licensing.

The example task definition snippet below shows how to mount a file system created in FSx for Windows File Server for a task.

{
  "containerDefinitions": [
    {
      "entryPoint": [
        "powershell",
        "-Command"
      ],
      "portMappings": [],
      "command": [...' -Force"],
      "cpu": 512,
      "memory": 256,
      "image": "mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2019",
      "essential": false,
      "name": "container1",
      "mountPoints": [
        {
          "sourceVolume": "fsx-windows-dir",
          "containerPath": "C:\\fsx-windows-dir",
          "readOnly": false
        }
      ]
    },
    ...
  ],
  "family": "fsx-windows",
  "executionRoleArn": "arn:aws:iam::111122223333:role/ecsTaskExecutionRole",
  "volumes": [
    {
      "name": "fsx-windows-dir",
      "fsxWindowsFileServerVolumeConfiguration": {
        "fileSystemId": "fs-0eeb5730b2EXAMPLE",
        "authorizationConfig": {
          "domain": "example.com",
          "credentialsParameter": "arn:arn-1234"
        },
        "rootDirectory": "share"
      }
    }
  ]
}

Other persistent storage options

AWS provides several other specialized file system services for handling persistent storage needs for tasks in ECS. This course does not cover these file systems and services; instead, refer to the product details below.

- Amazon FSx for OpenZFS provides fully managed file storage using the OpenZFS file system. OpenZFS is an open-source file system for workloads requiring high-performance storage, and features including instant data snapshots, encryption, and cloning. OpenZFS storage is accessible using NFS and enables easy migration of Linux file servers to the cloud for use with .NET application containers.

- Amazon FSx for NetApp ONTAP is another fully managed file storage service providing data access and management capabilities. Applications access NetApp ONTAP file systems using NFS, SMB, and iSCSI protocols.

Introduction to AWS Fargate

A serverless approach to provisioning and managing cloud infrastructure is attractive to many developers and organizations. With serverless, AWS handles the undifferentiated provisioning and management of the infrastructure resources for hosting applications for you. This frees you, the developer, to focus on your application. You specify what the applications need in order to run, and scale. The how is the responsibility of AWS.

AWS Fargate is a serverless approach to hosting containers in the cloud. When you choose Fargate for Amazon ECS or Amazon EKS-based applications, there is no longer a requirement for you to manage servers or clusters of Amazon EC2 instances to host container-based applications. Fargate handles the provisioning, configuration, and scale up and down of the container infrastructure as needed.

As a developer, you concern yourself with defining the build of your container images using a Dockerfile, and the deployment of those built images to Amazon ECR or Docker Hub. For the runtime infrastructure of applications, you simply specify the Operating System, CPU and memory, networking, and IAM policies. Fargate then provisions, and scales, container infrastructure matching those requirements. Fargate supports running .NET and .NET Framework applications as Services, Tasks, and Scheduled Tasks.

.NET developers wanting to make use of Amazon ECS on AWS Fargate can choose from Windows Server or Linux environments. .NET Framework applications must use Windows Server containers. However, applications built with .NET have a choice of Windows Server or Linux environments.

Note: for applications using a mix of Windows Server and Linux containers, separate tasks are required for the different environments.

.NET on Linux Containers in AWS Fargate

.NET-based applications (.NET 6 or higher) can use container infrastructure provisioned and maintained by Fargate. Fargate uses Amazon Linux 2, which is available in either X86_64 or ARM64 architectures. The task definition specifies the required architecture.

Note: It is also possible to run older .NET Core 3.1 and .NET 5-based applications on Fargate. However, both these versions are out of support from Microsoft, or soon to be. .NET 5 was not a long-term support (LTS) release and is now out of support. At the time of writing, .NET Core 3.1 is in the maintenance support phase, meaning it is 6 months or fewer from end-of-support and receiving patches for security issues only.

.NET on Windows Containers in AWS Fargate

Windows containers on Fargate can run both .NET Framework and .NET applications. Fargate currently supports two versions of Windows Server for applications: Windows Server 2019 Full and Windows Server 2019 Core. Whichever version you use, AWS manages the Windows operating system licenses for you.

Note: not all features of Windows Server, and not all AWS features, are available with Windows containers on AWS Fargate. Refer to the service documentation for up-to-date information on feature limitations and considerations. Some examples of unsupported features are listed below.

- Group Managed Service Accounts (gMSA).

- Amazon FSx file systems (other than FSx for Windows File Server).

- Configurable ephemeral storage.

- Amazon Elastic File System (Amazon EFS) volumes.

- Image volumes.

- App Mesh service and proxy integration for tasks.

Choosing between Amazon ECS, and Amazon ECS on AWS Fargate

Use the following to determine whether to select Amazon ECS, or Amazon ECS on AWS Fargate, to host your .NET applications:

- If you prefer to provision, manage, and scale clusters and other infrastructure to host your tasks, or are required to self-manage this infrastructure, choose Amazon ECS.

- If you prefer to allow AWS to provision, manage, and scale the infrastructure supporting your containerized applications, choose AWS Fargate. AWS Fargate supports Windows containers for .NET Framework or .NET applications, or Linux containers for .NET applications.

- For .NET applications that use Amazon FSx for Windows File Server to provide additional persistent storage volumes to your containers, choose Amazon ECS. AWS Fargate does not support this storage option at the time of writing.
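The guidance in the list above, together with the operating system rules from the previous section, can be written down as two small functions. This is only a sketch: the function names and boolean parameters are hypothetical, the return values reuse the `EC2`, `FARGATE`, `WINDOWS`, and `LINUX` strings for readability, and a real decision would weigh more factors than these.

```python
def supported_operating_systems(framework):
    """Container operating systems available for a given .NET flavor."""
    if framework == ".NET Framework":
        return ["WINDOWS"]            # .NET Framework requires Windows Server containers
    if framework == ".NET":
        return ["WINDOWS", "LINUX"]   # .NET can run on either
    raise ValueError(f"unknown framework: {framework}")


def choose_launch_type(self_manage_infrastructure, uses_fsx_windows_file_server):
    """Return "EC2" or "FARGATE" following the guidance in the list above."""
    if self_manage_infrastructure or uses_fsx_windows_file_server:
        return "EC2"
    return "FARGATE"


print(choose_launch_type(False, False), supported_operating_systems(".NET"))
```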

Container images and Amazon Elastic Container Registry (Amazon ECR)

Amazon ECR is a fully managed, secure, and scalable container registry for Docker and Open Container Initiative (OCI) container images. Its features make it easy to store, manage, and deploy container images whether you are using Amazon ECS, or Amazon ECS on AWS Fargate. Being a fully managed service, Amazon ECR provides, manages, and scales the infrastructure needed to support your registries.

Note: you can also use Docker Hub to store your container images when working with Amazon ECS and AWS Fargate.

Amazon ECR provides every account with a default private registry in each AWS Region. The registry is used to manage one or more private repositories that hold container images. Before images are pushed to or pulled from a repository, clients must get an authorization token which is then used to authenticate access to a registry. As images are pushed to repositories, Amazon ECR provides automated vulnerability scanning as an optional feature. Repositories also support encryption through AWS Key Management Service (KMS), with the choice of using an AWS-provided or a custom, user-managed key.

To control access, Amazon ECR integrates with AWS IAM. Fine-grained resource-based permissions enable control of who (or what) can access container images and repositories. Managed policies, provided by Amazon ECR, are also available to control varying levels of access.

Using a per-registry setting, repositories are replicable across Regions and other accounts. Additional image lifecycle policies are also configurable. For example, you can configure (and test) a lifecycle policy that results in the cleanup of unused images in a repository.
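As an illustration of the cleanup example above, the following sketch builds a lifecycle policy document in Python and prints it as JSON. It assumes that "unused" means untagged images older than a threshold; the 14-day value and the `untagged_expiry_policy` helper are hypothetical choices for this example, and the code only produces the policy text without applying it to a repository.

```python
import json


def untagged_expiry_policy(days):
    """Build a lifecycle policy that expires untagged images after `days` days."""
    return {
        "rules": [
            {
                "rulePriority": 1,
                "description": f"Expire untagged images older than {days} days",
                "selection": {
                    "tagStatus": "untagged",
                    "countType": "sinceImagePushed",
                    "countUnit": "days",
                    "countNumber": days,
                },
                "action": {"type": "expire"},
            }
        ]
    }


# 14 days is an illustrative threshold, not a recommendation.
policy_text = json.dumps(untagged_expiry_policy(14), indent=2)
print(policy_text)
```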

Both public and private registries are available in Amazon ECR. A pull-through cache, for container images pulled from other public registries, is also available. Pull-through caches insulate builds and deployments from outages in upstream registries and repositories, and also aid development teams subject to compliance auditing for dependencies.

Review the features below to learn more about public and private registries in Amazon ECR, the repositories they contain, and pull through cache repositories.

Private registry and repositories

AWS provides each account with a single private registry in each AWS Region, and each registry can contain zero or more repositories (at least one repository is required to hold images). Each regional registry in an account is accessible using a URL in the format https://aws_account_id.dkr.ecr.region.amazonaws.com, for example https://123456789012.dkr.ecr.us-west-2.amazonaws.com.
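The registry address format above is what tools use as the prefix of an image name. The sketch below composes a full image reference from its parts; the `private_image_uri` helper, the `dev/my-app` repository name, and the `1.0` tag are hypothetical, and the account ID is the example value from the text.

```python
def private_image_uri(account_id, region, repository, tag="latest"):
    """Compose an image reference for a repository in a private registry."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repository}:{tag}"


print(private_image_uri("123456789012", "us-west-2", "dev/my-app", "1.0"))
# 123456789012.dkr.ecr.us-west-2.amazonaws.com/dev/my-app:1.0
```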

Repositories in a private registry hold both Docker and Open Container Initiative (OCI) images and artifacts. You can use as few or as many repositories as you require for images and artifacts. For example, you could use one repository to hold images for a development stage, another for images in a test stage, and yet another for images released to a production stage.

Image names within a repository are required to be unique. However, Amazon ECR repositories also support namespaces, which allow the same image name to be reused across different environment stages or teams in a single registry.

Accounts have read and write access to the repositories in a private registry by default. However, IAM principals, and tools running in the scope of those principals, must get permissions to make use of Amazon ECR’s API, and to issue pull/push commands using tools such as the Docker CLI against repositories. Many of the tools detailed in module 2 of this course handle this authentication process on your behalf.

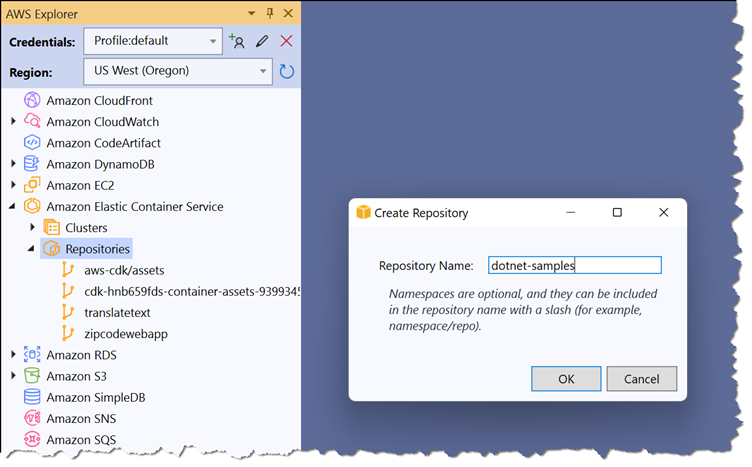

Private repositories can be created using the Amazon ECR dashboard in the AWS Management Console, in the AWS Explorer view of the AWS Toolkit for Visual Studio, or at the command line using the AWS CLI or AWS Tools for PowerShell. The screenshot below shows the creation of a private repository from within Visual Studio. You do this by expanding the Amazon Elastic Container Service entry in the AWS Explorer view, and from the context menu on the Repositories entry, select Create repository:

The AWS Toolkit for Visual Studio does not support working with your ECR public registry, or enabling features such as automated scanning and repository encryption for new repositories in your private registry. If those features are required, create repositories using the AWS Management Console or command-line tools such as the AWS CLI and AWS Tools for PowerShell.

Public registry and repositories

Amazon ECR public registries and repositories are available to anyone to pull images you publish. Each account is provided with a public registry that can contain multiple public repositories. Just as with private repositories, public repositories store Docker and Open Container Initiative (OCI) images and artifacts.

Repositories in public registries are listed in the Amazon ECR Public Gallery. This enables the community to find and pull public images. The AWS account that owns a public registry has full read-write access to the repositories it contains. IAM principals accessing the repositories must get permissions which are supplied in a token, and use that token to authenticate to push images (just as with private repositories). However, anyone can pull images from your public repositories with or without authentication.

Repositories in the gallery are accessed using a URL of the form https://gallery.ecr.aws/registry_alias/repository_name. The registry_alias is created when the first public repository is created, and can be changed. The URI to pull an image from a public repository has the format public.ecr.aws/registry_alias/repository_name:image_tag.
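The two formats above contain the same alias and repository name, so one can be derived from the other. The following sketch parses a public image URI and builds the matching gallery URL; the regular expression, the `parse_public_image_uri` helper, and the `example-alias/my-app:1.0` image are assumptions made for illustration.

```python
import re

# Pattern for public.ecr.aws/registry_alias/repository_name:image_tag
PUBLIC_IMAGE = re.compile(
    r"^public\.ecr\.aws/(?P<registry_alias>[^/]+)/(?P<repository_name>[^:]+):(?P<image_tag>.+)$"
)


def parse_public_image_uri(uri):
    """Split a public image URI into alias, repository name, and tag."""
    match = PUBLIC_IMAGE.match(uri)
    if match is None:
        raise ValueError(f"not a public ECR image URI: {uri}")
    return match.groupdict()


parts = parse_public_image_uri("public.ecr.aws/example-alias/my-app:1.0")
gallery_url = f"https://gallery.ecr.aws/{parts['registry_alias']}/{parts['repository_name']}"
print(parts)
print(gallery_url)  # https://gallery.ecr.aws/example-alias/my-app
```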

Pushing images to a public repository requires permissions and authentication to the public registry. Permissions are provided in a token, which must be supplied when authenticating to the registry. Images can be pulled from a public repository with or without prior authentication.

Pull through cache repositories

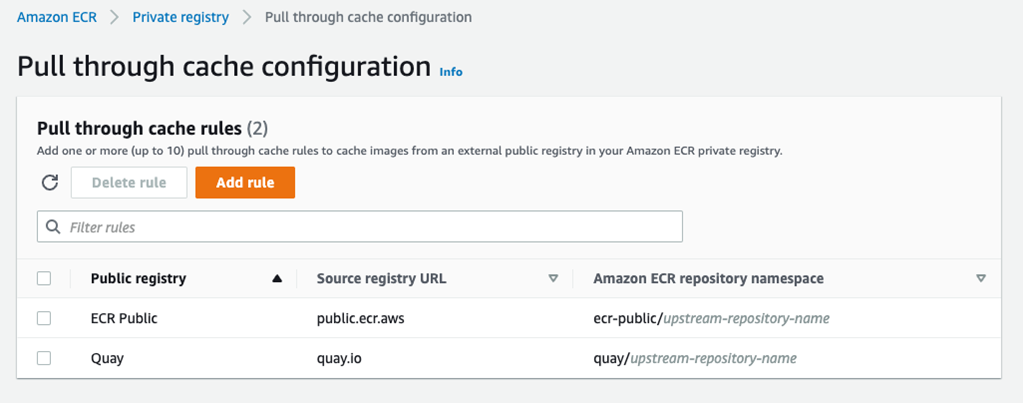

Pull through caches cache images from upstream public registries into your private registry in Amazon ECR. Today, Amazon ECR supports Amazon ECR Public and Quay as upstream registries. Amazon ECR checks the upstream for a new image version, and updates the cache if a new version is available, once every 24 hours. Pull through caches can help insulate your build and deployment processes from outages or other problems affecting upstream registries.

Cache repositories are created automatically the first time an image is pulled from a configured upstream registry. Image pulls use AWS IP addresses and are unaffected by pull rate quotas in place on the upstream registry. Image pulls to cache repositories are configured using rules. A maximum of 10 pull through cache rules may be configured for your private registry.

The image below shows two example rules, one to cache images from Amazon ECR Public and the second from Quay. In this configuration, the first time images are pulled from Amazon ECR Public, a repository will be automatically created under the ecr-public namespace using the name of the upstream repository, and similarly for images pulled from Quay.


Images pulled from upstream registries into pull through caches support additional Amazon ECR features available to your private repositories, such as replication and automated vulnerability scanning.
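As a rough sketch of how a rule's namespace shows up in practice, the snippet below builds the private-registry URI you would pull from when using the ecr-public rule described above. The function name cached_image_uri is hypothetical, and the account ID, Region, and repository path are placeholders rather than real values.

```python
def cached_image_uri(account_id: str, region: str, namespace: str,
                     upstream_repository: str, tag: str = "latest") -> str:
    """Build the private-registry URI for an image served through a
    pull through cache rule.

    Hypothetical helper for illustration; all arguments are placeholders.
    """
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    # The rule's namespace (for example, ecr-public) is prepended to the
    # name of the upstream repository.
    return f"{registry}/{namespace}/{upstream_repository}:{tag}"


print(cached_image_uri("111122223333", "us-east-1", "ecr-public",
                       "registry_alias/repository_name", "image_tag"))
```

Pulling that URI with an authenticated Docker client is what triggers creation of the cache repository on first use.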

Pushing and pulling images

Accounts have read and write access to the repositories in their private and public registries by default. However, IAM principals within those accounts, and tools running in the scope of those IAM principals, must be granted permissions to use push and pull commands and the Amazon ECR API. These permissions are carried in an authorization token, which must be supplied when authenticating to an Amazon ECR private or public registry.

Note: IAM principals need permissions to push and pull images to private repositories, and to push images to public repositories. However, anyone can pull images from public repositories in an account’s public registry without authentication. This is called an unauthenticated pull.

Authorizing repository access at the command line

Many of the AWS tools noted in module 2 of this course will handle getting the token for you and using it to authenticate with your private registry, but you can perform the same steps yourself if required, for example, when accessing a registry from a CI/CD pipeline. Alternatively, an Amazon ECR credential helper utility is available on GitHub – see Amazon ECR Docker Credential Helper for more details (this course does not cover using the helper utility further).

The AWS CLI and the AWS Tools for PowerShell contain commands for getting an authorization token, which is then used with tools such as the Docker client to push and pull images. Both commands process the output from the service and emit the required token. For scenarios where using the command line is not suitable, or for custom tools, the Amazon ECR API operation GetAuthorizationToken is available.

Note: permissions in the authorization token do not exceed the permissions provided to the IAM principal that requests it. The token is valid for 12 hours.
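For custom tools that call GetAuthorizationToken directly, the token in the response is a base64-encoded string of the form username:password, where the username is AWS. The following is a minimal sketch of decoding it in Python. The function name decode_authorization_token is hypothetical, and the sample token is fabricated locally for demonstration rather than obtained from the service.

```python
import base64
from typing import Tuple


def decode_authorization_token(token: str) -> Tuple[str, str]:
    """Split a base64-encoded authorization token into (username, password).

    Hypothetical helper for illustration. With an SDK such as boto3
    (assumed to be installed and configured), the token would come from
    client("ecr").get_authorization_token()["authorizationData"][0]["authorizationToken"].
    """
    decoded = base64.b64decode(token).decode("utf-8")
    # Split on the first colon only, in case the password contains colons.
    username, password = decoded.split(":", 1)
    return username, password


# Fabricated sample token standing in for a real service response.
sample_token = base64.b64encode(b"AWS:example-password").decode("ascii")
username, password = decode_authorization_token(sample_token)
print(username)
```

The decoded password is the value supplied to docker login with the AWS username, as in the command-line examples that follow.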

To authenticate Docker with an Amazon ECR registry using the AWS CLI, use the get-login-password command and pipe the output to docker login, specifying AWS as the username and the URL to the registry:

aws ecr get-login-password --region region | docker login --username AWS --password-stdin aws_account_id.dkr.ecr.region.amazonaws.com

To use the AWS Tools for PowerShell to authenticate a Docker client, use the Get-ECRLoginCommand cmdlet (available in the AWS.Tools.ECR module, or the legacy AWSPowerShell and AWSPowerShell.NetCore modules). Pipe the Password property of the output object to the docker login command, specifying AWS as the username and the URL to the registry:

(Get-ECRLoginCommand -Region region).Password | docker login --username AWS --password-stdin aws_account_id.dkr.ecr.region.amazonaws.com

Once the Docker client is authorized, images can be pushed to or pulled from repositories in the registry. Note that separate authorization tokens are required for registries in different Regions.

Pushing an image

IAM permissions are required in order to push images to both private and public repositories. As a best practice, consider narrowing the scope of permissions for IAM principals to specific repositories. The example policy below shows the Amazon ECR API operations (“Action”) required by a principal to push images, scoped to a specific repository. When repositories are specified, the Amazon Resource Name (ARN) is used to identify them. Note that you can specify multiple repositories (as an array), or use a wildcard (*) to widen the scope to all your repositories.

To use the policy below, replace 111122223333 with your AWS account ID, region with the Region in which the repository exists, and repository-name with the name of the repository.

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "ecr:CompleteLayerUpload",
                "ecr:UploadLayerPart",
                "ecr:InitiateLayerUpload",
                "ecr:BatchCheckLayerAvailability",
                "ecr:PutImage"
            ],
            "Resource": "arn:aws:ecr:region:111122223333:repository/repository-name"
        },
        {
            "Effect": "Allow",
            "Action": "ecr:GetAuthorizationToken",
            "Resource": "*"
        }
    ]
}

The ecr:GetAuthorizationToken action does not support resource-level permissions, so it is granted in its own statement with the resource set to a wildcard (*).

With an IAM policy similar to the above in place, and having authenticated against your registry, you can push images using the Docker CLI or other tools. Before pushing an image to a repository, it must be tagged with the registry, repository, and optional image tag name (if the image tag name is omitted, latest is assumed). The following example illustrates the tag format and command to tag a local image prior to pushing it to Amazon ECR.

Replace 111122223333 with your AWS account ID, region with the identifier of the region containing the repository (us-east-1, us-west-2, etc.), and repository-name with the actual name of the repository.

docker tag ab12345ef 111122223333.dkr.ecr.region.amazonaws.com/repository-name:tag

Finally, push the image:

docker push 111122223333.dkr.ecr.region.amazonaws.com/repository-name:tag
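If you script the tag and push steps above, for example in a build pipeline, the same commands can be assembled programmatically. The sketch below is illustrative only: the function names image_reference and tag_and_push_commands are hypothetical, the values are the placeholders used in this section, and the code only builds the command lines rather than running Docker.

```python
from typing import List


def image_reference(account_id: str, region: str, repository: str,
                    tag: str = "latest") -> str:
    """Build the registry/repository:tag reference for a private repository.

    Hypothetical helper; the tag defaults to latest, mirroring the
    behavior when the image tag name is omitted.
    """
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def tag_and_push_commands(local_image: str, target: str) -> List[List[str]]:
    """Return the docker tag and docker push commands as argument lists."""
    return [
        ["docker", "tag", local_image, target],
        ["docker", "push", target],
    ]


target = image_reference("111122223333", "us-west-2", "repository-name")
for command in tag_and_push_commands("ab12345ef", target):
    print(" ".join(command))
```

Each argument list could be passed to subprocess.run(command, check=True) on a machine where the Docker client is installed and already authenticated with the registry.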

Pulling an image

Images are pulled using the same format that was used to identify the image when it was pushed:

docker pull 111122223333.dkr.ecr.region.amazonaws.com/repository-name:tag

For repositories in your private registry, you must authenticate your client with the registry as described earlier, prior to pulling the image. For public registries, you can pull images with or without authentication.

Knowledge Check

You’ve now completed Module 1, an introduction to Amazon ECS and AWS Fargate. The following test will allow you to check what you’ve learned so far.

Question 1: What is Amazon Elastic Container Registry?

a. A registry for storing container images

b. A registry of running containers

c. A registry of running tasks

d. A registry of storage volumes mapped to containers

Question 2: True or false: persistent storage options are the same for Windows and Linux containers running on Amazon ECS.

a. True

b. False

Question 3: In relation to Amazon ECS, what is a cluster?

a. An instance of a running container

b. A definition of a container that will be run

c. A definition of what your application is made up of

d. A logical group of compute resources

Answers: 1-a, 2-b, 3-d

Conclusion

In this module, you first learned about containers: how they differ from virtual machines, and how Docker Linux containers compare with Windows containers. Containers are lightweight, standardized, and portable, seamless to move, enable you to ship faster, and can save you money. Containers on AWS are secure, reliable, supported by a choice of container services, and deeply integrated with AWS.

Next, you learned about serverless technologies, which allow you to build applications without having to think about servers. Benefits include elimination of operational overhead, automatic scaling, lowered costs, and building applications more easily via built-in integrations with other AWS services. Use cases include web applications, data processing, batch processing, and event ingestion.

You learned about AWS compute services for containers and how to choose a compute service. You learned that AWS App Runner is a fully managed service for hosting containers that is also serverless.