AWS News Blog

NASA JPL, robots and the AWS cloud

NASA Jet Propulsion Lab, NASA’s lead center for robotic exploration of the solar system, is doing some pretty extraordinary things with the AWS cloud. I had the opportunity to meet with their CTO to discuss some of the interesting projects they are working on. Like the early explorers of deep space, they were also early explorers of the AWS cloud. Cloud computing is high on the JPL CTO’s radar, and several teams are using the cloud for a range of mission-related projects.

JPL operates 19 spacecraft and 7 instruments across the solar system. Each instrument sends back tons of data and images, which need to be analyzed and processed. For one such instrument, they processed and analyzed 180,000 images of Saturn in the AWS cloud in less than 5 hours. This would have taken them more than 15 days in their own data center.
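Taking the quoted figures as bounds (under 5 hours in the cloud, over 15 days in-house), the arithmetic shows the cloud run sustained at least 10 images per second and finished more than 72 times faster:

$$
\begin{aligned}
\text{cloud rate} &= \frac{180{,}000\ \text{images}}{5\ \text{h}} = 36{,}000\ \text{images/h} = 10\ \text{images/s} \\
\text{in-house rate} &= \frac{180{,}000\ \text{images}}{15 \times 24\ \text{h}} = \frac{180{,}000\ \text{images}}{360\ \text{h}} = 500\ \text{images/h} \\
\text{speedup} &= \frac{36{,}000\ \text{images/h}}{500\ \text{images/h}} = 72
\end{aligned}
$$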

Last April, I was at EclipseCon and was stunned to see the e4 Mars Rover Application, built by NASA folks and hosted in the AWS cloud, controlling the movement of Mindstorms robots. I started to wonder what would happen when we combine the potential of NASA, the AWS cloud, and robots.

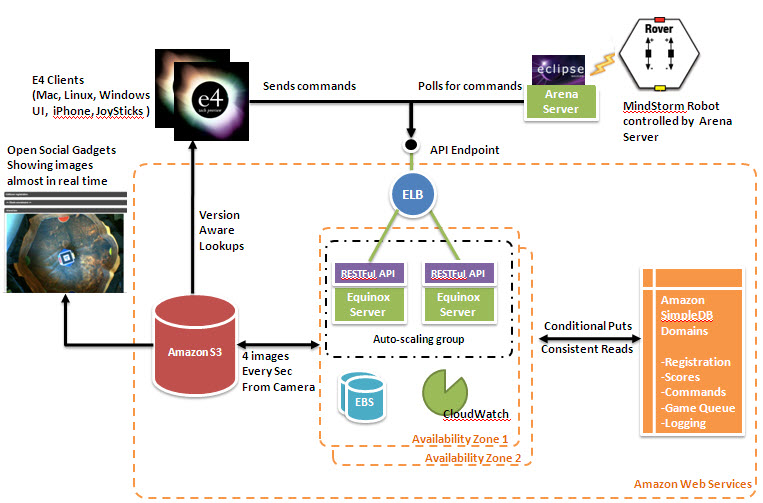

The super smart folks at NASA Jet Propulsion Lab (Jeff Norris, Khawaja Shams and team) developed a system that lets developers interact with a Mindstorms robot in a 4x4 arena. The system exposes a REST API that takes commands from e4 clients and sends them to the app (a RESTful server running on Equinox) hosted on Amazon EC2. The app stores every command in Amazon SimpleDB using conditional PUTs. The Poller application on the Arena server pulls each developer’s commands in sequence, using consistent reads from Amazon SimpleDB so that it picks up new commands immediately, and executes them on the robot, which moves it around the arena. A sample command looks like “Set the velocity of the robot to 5 and move to the left.” In essence, developers can send commands to the app in the AWS cloud and move the robot in the arena. The system also stores all the logs, game queues, registrations, and scores in separate Amazon SimpleDB domains.

The RESTful server app sits behind a fault-tolerant Elastic Load Balancer, which routes traffic to multiple EC2 instances so that the app can serve many concurrent requests. The setup is configured to use the Auto Scaling service, so it is ready to scale if clients send a sudden surge of traffic. So, any developer can send commands using the API and make the robot move, and the app will scale elastically with demand.

Wait, it does not stop here. The arena has a camera that captures 4 images every second (4 FPS). Each image is processed to find the robot’s actual coordinates in the arena and then stored in Amazon S3 using the versioning feature. Millions of versioned images accumulate in Amazon S3, and they can be downloaded in near real time to get a live photo feed of the robot’s location and movement in the 4x4 arena. So, with this, any developer, from anywhere in the world, can write a program that sends commands to the auto-scaling app hosted on EC2 and watch the robot move in the arena.
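The command path described above (conditional PUTs to write, consistent reads to poll) can be sketched as follows. This is a minimal in-memory model, not JPL’s code and not the SimpleDB API: `InMemoryDomain`, `enqueue`, `Poller`, and the `cmd#<developer>#<seq>` item-naming scheme are all hypothetical. The conditional put writes a command into a numbered slot only if that slot is still empty, so two concurrent writers can never overwrite each other’s commands; the poller reads slots in order and stops at the first empty one, so commands execute in the sequence they were accepted. Against the real service, the corresponding boto3 SimpleDB client calls would be `put_attributes` with an `Expected` condition and `get_attributes` with `ConsistentRead=True`.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


class ConditionalCheckFailed(Exception):
    """Raised when a conditional put's expectation does not hold."""


@dataclass
class InMemoryDomain:
    """Hypothetical stand-in for one SimpleDB domain (single-valued attributes only)."""
    items: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def put(self, item: str, attrs: Dict[str, str],
            expected: Optional[Dict[str, Optional[str]]] = None) -> None:
        current = self.items.get(item, {})
        # Check every expectation first; None means "attribute must not exist yet".
        for name, value in (expected or {}).items():
            if current.get(name) != value:
                raise ConditionalCheckFailed(f"{item}.{name}")
        self.items[item] = {**current, **attrs}

    def get(self, item: str) -> Dict[str, str]:
        # Always returns the latest write, i.e. this models a consistent read.
        return dict(self.items.get(item, {}))


def command_key(developer: str, seq: int) -> str:
    # Hypothetical naming scheme: one item per (developer, sequence number).
    return f"cmd#{developer}#{seq:08d}"


def enqueue(domain: InMemoryDomain, developer: str, command: str) -> int:
    """Store a command in the first free slot and return its sequence number."""
    seq = 0
    while True:
        try:
            domain.put(command_key(developer, seq), {"command": command},
                       expected={"command": None})
            return seq
        except ConditionalCheckFailed:
            seq += 1  # slot already taken by an earlier (or concurrent) write


class Poller:
    """Reads one developer's commands in order, resuming where it left off."""

    def __init__(self, domain: InMemoryDomain, developer: str) -> None:
        self.domain = domain
        self.developer = developer
        self.next_seq = 0

    def poll(self) -> List[str]:
        executed: List[str] = []
        while True:
            item = self.domain.get(command_key(self.developer, self.next_seq))
            if "command" not in item:
                break  # no newer command yet
            executed.append(item["command"])  # a real poller would drive the robot here
            self.next_seq += 1
        return executed


if __name__ == "__main__":
    db = InMemoryDomain()
    enqueue(db, "alice", "set velocity 5")
    enqueue(db, "alice", "move left")
    print(Poller(db, "alice").poll())  # prints ['set velocity 5', 'move left']
```

Scanning from slot 0 on every enqueue keeps the sketch short; a real client would more likely start from a cached hint of the last sequence number it used.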

In summary, large numbers of developers can write innovative clients to interact with the robot, because the architecture is scalable and runs on on-demand, auto-scaling infrastructure.

Kudos to the NASA JPL team, who not only conceived this idea but also built the system using some of the most advanced features of AWS. They were already using features released just a few weeks ago, such as consistent reads and conditional puts in Amazon SimpleDB and versioning in Amazon S3. They have what I call the “full house” of AWS: ELB, Auto Scaling, and EC2. They are also planning to assemble a video feed of the robot’s movements from the images and stream it using CloudFront.
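The S3 versioning feature is what lets a single image key carry the whole history of the arena. The following is a minimal in-memory sketch, not the S3 API and not JPL’s code, assuming each processed frame is written to the same key: `VersionedBucket`, `frames_since`, and the key `arena/frame.jpg` are hypothetical, and the sequential IDs (`v0`, `v1`, and so on) are a simplification, since real S3 version IDs are opaque strings. Every write to the key keeps earlier frames as older versions, while a plain read of the key returns the newest one, which is what a live photo feed needs.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional, Tuple


@dataclass
class VersionedBucket:
    """Hypothetical in-memory model of a bucket with versioning enabled."""
    versions: Dict[str, List[Tuple[str, bytes]]] = field(default_factory=dict)
    ids: Iterator[int] = field(default_factory=itertools.count)

    def put(self, key: str, body: bytes) -> str:
        # Every PUT to an existing key adds a new version instead of overwriting.
        version_id = f"v{next(self.ids)}"
        self.versions.setdefault(key, []).append((version_id, body))
        return version_id

    def get(self, key: str, version_id: Optional[str] = None) -> bytes:
        history = self.versions[key]
        if version_id is None:
            return history[-1][1]  # no version given: return the latest frame
        for vid, body in history:
            if vid == version_id:
                return body
        raise KeyError(version_id)

    def list_versions(self, key: str) -> List[str]:
        # Oldest first in this model.
        return [vid for vid, _ in self.versions.get(key, [])]


def frames_since(bucket: VersionedBucket, key: str, last_seen: Optional[str]) -> List[str]:
    """Version IDs that a live-feed viewer has not fetched yet."""
    ids = bucket.list_versions(key)
    if last_seen is None:
        return ids
    return ids[ids.index(last_seen) + 1:]


if __name__ == "__main__":
    bucket = VersionedBucket()
    for n in range(3):
        bucket.put("arena/frame.jpg", f"frame-{n}".encode())
    print(bucket.get("arena/frame.jpg"))  # prints b'frame-2'
```

At 4 FPS that is 4 × 86,400 = 345,600 versions per day, so about three days of continuous capture passes a million stored images.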

The architecture of the system is as follows:

To entice developers to write innovative apps (client apps to manage robot movements, client apps to interpret the messages/logs from the robot, and apps to move the robot itself along a path), they announced a contest at EclipseCon named the “e4 Mars Rover Challenge”. They made the system available only to EclipseCon attendees, using EC2 Security Groups to limit access to EclipseCon’s CIDR IP ranges. I was amazed at the creativity. Contestants built innovative client apps ranging from iPhone apps (which use the accelerometer to control the velocity and direction of the robot’s movement) to intelligent e4 clients that display the telemetry. Winners have been announced. The event at EclipseCon was Mars-themed: the arena was made to look like Mars with panoramas acquired by the Spirit rover (Husband Hill), and it had an orbiter, LED lights, and cool sound effects. This event was the ultimate crowd-pleaser at EclipseCon.
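EC2 Security Groups deny all inbound traffic by default and admit only traffic that matches an allow rule, and a rule’s source can be a CIDR block. The matching decision can be sketched locally with Python’s standard `ipaddress` module. The CIDR blocks below are hypothetical documentation ranges (RFC 5737), not EclipseCon’s real addresses, and the sketch ignores the protocol and port that a real rule also matches on.

```python
import ipaddress
from typing import Iterable

# Hypothetical allow-list: RFC 5737 documentation ranges standing in for the
# conference network's CIDR blocks.
ALLOWED_CIDRS = ("203.0.113.0/24", "198.51.100.0/25")


def is_allowed(source_ip: str, allowed_cidrs: Iterable[str] = ALLOWED_CIDRS) -> bool:
    """True if source_ip falls inside any allowed CIDR block (default deny)."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


if __name__ == "__main__":
    for ip in ("203.0.113.42", "198.51.100.200", "192.0.2.7"):
        print(ip, is_allowed(ip))
```

Note that `198.51.100.200` is rejected: a /25 covers only `198.51.100.0` through `198.51.100.127`.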

Imagine the potential when any developer can actually play with the robot from anywhere in the world and build innovative apps.

The fact that NASA engineers are using cloud computing services to build the contest lends significant credibility to the whole notion of the cloud.

I was so excited after seeing the arena at EclipseCon that I had to interview the masterminds behind this project. See the video below.

–Jinesh