AWS News Blog

Amazon Elastic File System – Production-Ready in Three Regions

The portfolio of AWS storage products has grown increasingly rich and diverse over time. Amazon S3 started out with a single storage class and has grown to include storage classes for regular, infrequently accessed, and archived objects. Similarly, Amazon Elastic Block Store (Amazon EBS) began with a single volume type and now offers a choice of four types of SAN-style block storage, each designed to be a great fit for a particular set of access patterns and data types.

With object storage and block storage capably addressed by S3 and EBS, we turned our attention to the file system. We announced the Amazon Elastic File System (EFS) last year in order to provide multiple EC2 instances with shared, low-latency access to a fully-managed file system.

I am happy to announce that EFS is now available for production use in the US East (N. Virginia), US West (Oregon), and Europe (Ireland) Regions.

We are launching today after an extended preview period that gave us insights into an extraordinarily wide range of customer use cases. The EFS preview was a great fit for large-scale, throughput-heavy processing workloads, along with many forms of content and web serving. During the preview we received a lot of positive feedback about the performance of EFS for these workloads, along with requests to provide equally good support for workloads that are sensitive to latency and/or make heavy use of file system metadata. We’ve been working to address this feedback and today’s launch is designed to handle a very wide range of use cases. Based on what I have heard so far, our customers are really excited about EFS and plan to put it to use right away.

Why We Built EFS

Many AWS customers have asked us for a way to more easily manage file storage on a scalable basis. Some of these customers run farms of web servers or content management systems that benefit from a common namespace and easy access to a corporate or departmental file hierarchy. Others run HPC and Big Data applications that create, process, and then delete many large files, resulting in storage utilization and throughput demands that vary wildly over time. Our customers also insisted on high availability and durability, along with a strongly consistent model for access and modification.

Amazon Elastic File System

EFS lets you create POSIX-compliant file systems and attach them to one or more of your EC2 instances via NFS. The file system grows and shrinks as necessary (there’s no fixed upper limit and you can grow to petabyte scale) and you don’t pre-provision storage space or bandwidth. You pay only for the storage that you use.

EFS protects your data by storing copies of your files, directories, links, and metadata in multiple Availability Zones.

In order to provide the performance needed to support large file systems accessed by multiple clients simultaneously, Amazon EFS performance scales with storage (I’ll say more about this later).

Each Elastic File System is accessible from a single VPC, and is accessed by way of mount targets that you create within the VPC. You have the option to create a mount target in any desired subnet of your VPC. Access to each mount target is controlled, as usual, via Security Groups.

EFS offers two distinct performance modes. The first mode, General Purpose, is the default. You should use this mode unless you expect to have tens, hundreds, or thousands of EC2 instances access the file system concurrently. The second mode, Max I/O, is optimized for higher levels of aggregate throughput and operations per second, but incurs slightly higher latencies for file operations. In most cases, you should start with General Purpose mode and watch the relevant CloudWatch metric (PercentIOLimit). When you begin to push the I/O limit of General Purpose mode, you can create a new file system in Max I/O mode, migrate your files, and enjoy even higher throughput and operations per second.
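As a rough illustration of what "pushing the I/O limit" might look like, here is a minimal Python sketch that inspects a series of PercentIOLimit samples that you have already retrieved from CloudWatch. The function name, the 90% threshold, and the "at least half of the samples" rule are assumptions chosen for the example; they are not values recommended by EFS.

```python
def near_io_limit(samples, threshold=90.0, min_fraction=0.5):
    """Return True when PercentIOLimit samples suggest sustained pressure.

    samples      -- PercentIOLimit values (0-100) already fetched from CloudWatch
    threshold    -- hypothetical cutoff for a "high" sample
    min_fraction -- hypothetical share of samples that must be high
    """
    if not samples:
        return False
    high = sum(1 for value in samples if value >= threshold)
    return high / len(samples) >= min_fraction


# Three of the four samples are at or above 90%, so this reports True.
print(near_io_limit([95.0, 97.0, 40.0, 92.0]))
```

A result of True is only a hint that it may be time to evaluate Max I/O mode; the right threshold depends on your workload.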

Elastic File System in Action

It is very easy to create, mount, and access an Elastic File System. I used the AWS Management Console; I could have used the EFS API, the AWS Command Line Interface (AWS CLI), or the AWS Tools for Windows PowerShell as well.

I opened the console and clicked on the Create file system button:

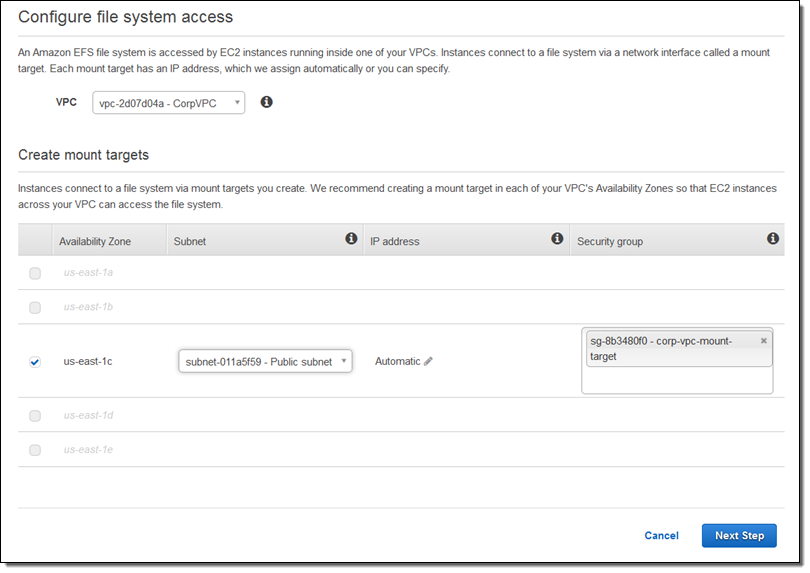

Then I selected one of my VPCs and created a mount target in my public subnet:

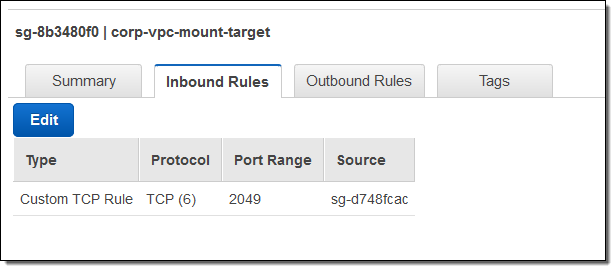

My security group (corp-vpc-mount-target) allows my EC2 instance to access the mount point on port 2049. Here’s the inbound rule; the outbound one is the same:

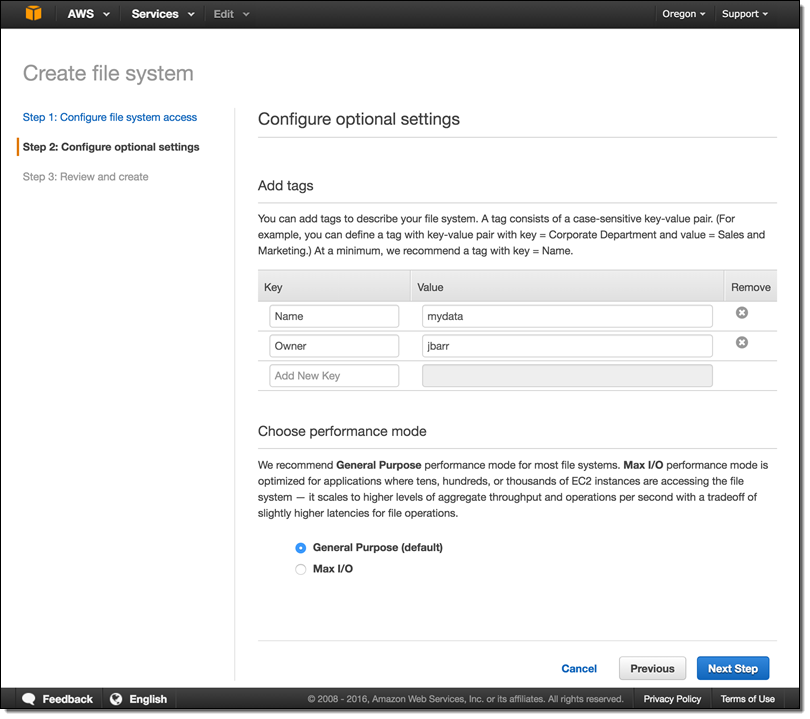

I added Name and Owner tags, and opted for the General Purpose performance mode:

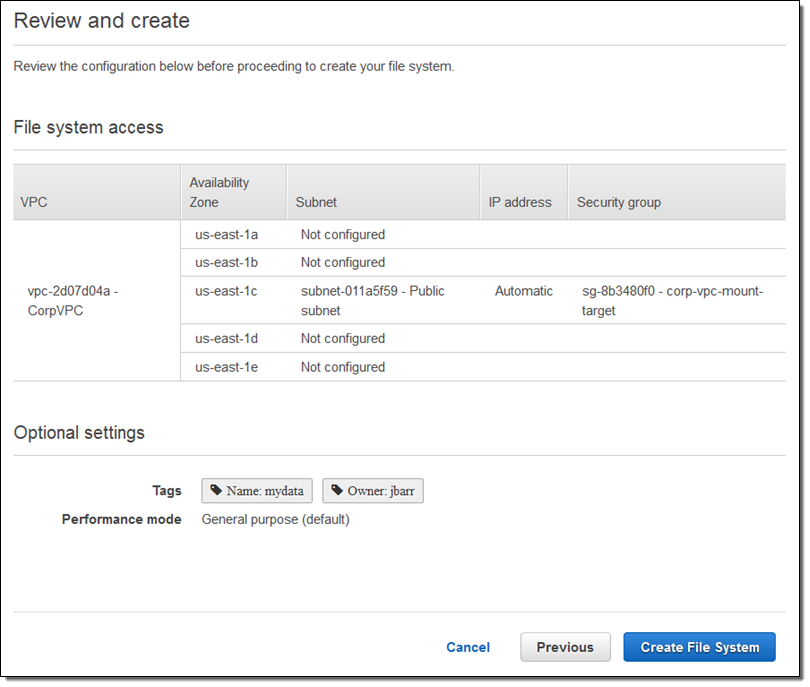

Then I confirmed the information and clicked on Create File System:

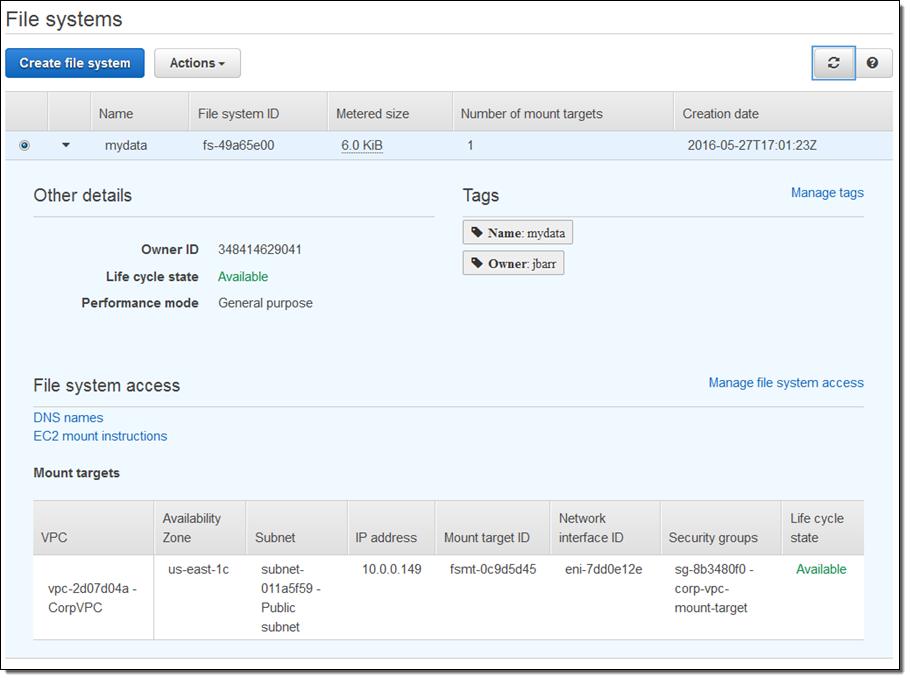

My file system was ready right away (the mount targets took another minute or so):

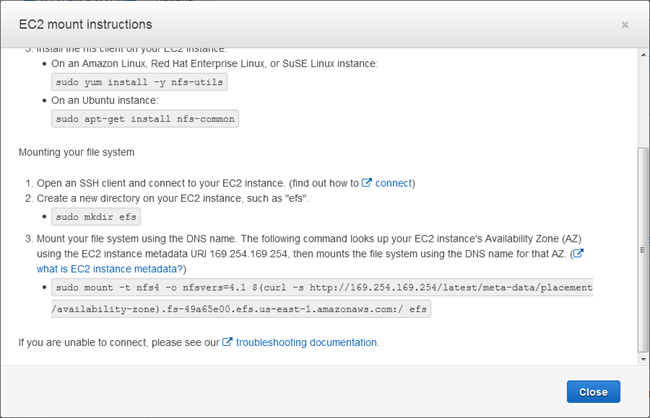

I clicked on EC2 mount instructions to learn how to mount my file system on an EC2 instance:

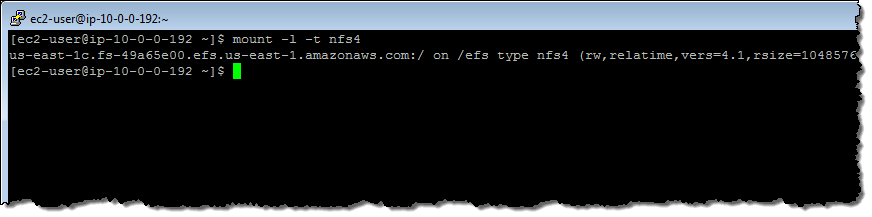

I mounted my file system as /efs, and there it was:

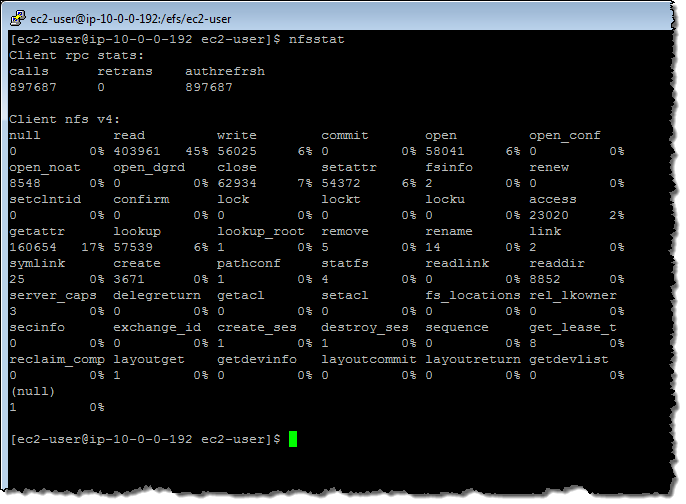

I copied a bunch of files over, and spent some time watching the NFS stats:

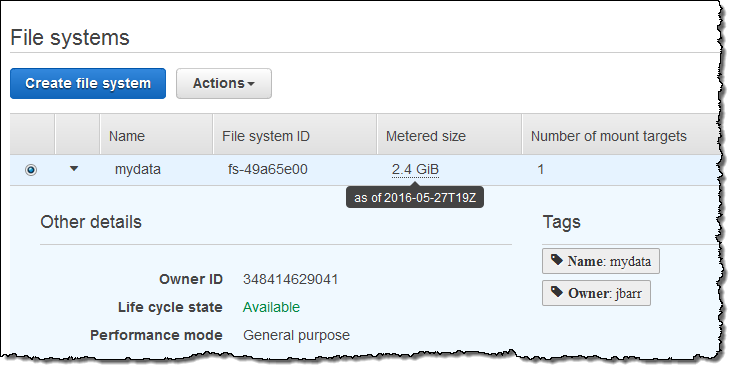

The console reports on the amount of space consumed by my file systems (this information is collected every hour and is displayed 2-3 hours after it is collected):

CloudWatch Metrics

Each file system delivers the following metrics to CloudWatch:

- BurstCreditBalance – The amount of data that can be transferred at the burst level of throughput.

- ClientConnections – The number of clients that are connected to the file system.

- DataReadIOBytes – The number of bytes read from the file system.

- DataWriteIOBytes -The number of bytes written to the file system.

- MetadataIOBytes – The number of bytes of metadata read and written.

- TotalIOBytes -The sum of the preceding three metrics.

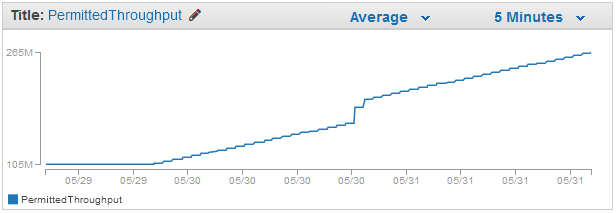

- PermittedThroughput -The maximum allowed throughput, based on file system size.

- PercentIOLimit – The percentage of the available I/O utilized in General Purpose mode.

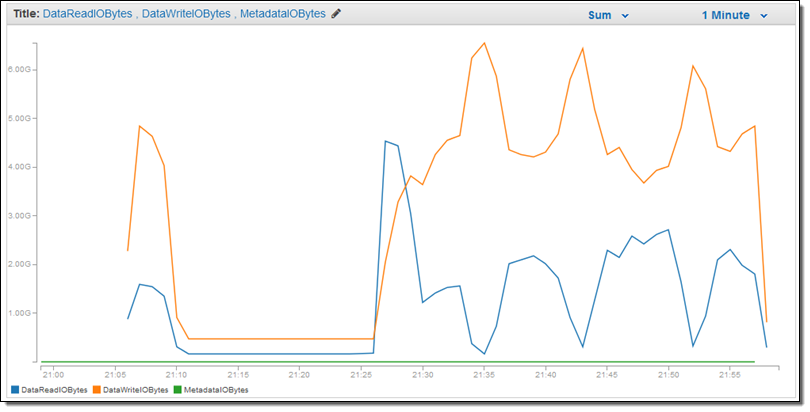

You can see the metrics in the CloudWatch Console:

EFS Bursting, Workloads, and Performance

The throughput available to each of your EFS file systems will grow as the file system grows. Because file-based workloads are generally spiky, with demands for high levels of throughput for short amounts of time and low levels the rest of the time, EFS is designed to burst to high throughput levels on an as-needed basis.

All file systems can burst to 100 MB per second of throughput. Those over 1 TB can burst to an additional 100 MB per second for each TB stored. For example, a 2 TB file system can burst to 200 MB per second and a 10 TB file system can burst to 1,000 MB per second of throughput. File systems larger than 1 TB can always burst for 50% of the time if they are inactive for the other 50%.
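The burst rule above boils down to a one-line calculation. The following Python sketch is only an illustration of that arithmetic; the function name is made up for the example, and it assumes that size is expressed in decimal terabytes.

```python
def burst_throughput_mb_per_s(size_tb):
    """Burst throughput in MB per second for a file system of size_tb terabytes.

    Every file system can burst to 100 MB/s; file systems over 1 TB can
    burst to 100 MB/s for each TB stored.
    """
    if size_tb < 0:
        raise ValueError("size_tb must be non-negative")
    return max(100.0, 100.0 * size_tb)


for size_tb in (0.1, 2, 10):
    print(size_tb, "TB ->", burst_throughput_mb_per_s(size_tb), "MB/s")
```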

EFS uses a credit system to determine when a file system can burst. Each one accumulates credits at a baseline rate (50 MB per second per TB of storage) that is determined by the size of the file system, and spends them whenever it reads or writes data. The accumulated credits give the file system the ability to drive throughput beyond the baseline rate.

Here are some examples to give you a better idea of what this means in practice:

- A 100 GB file system can burst up to 100 MB per second for up to 72 minutes each day, or drive up to 5 MB per second continuously.

- A 10 TB file system can burst up to 1 GB per second for 12 hours each day, or drive 500 MB per second continuously.
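The two examples above can be reproduced with a little arithmetic. This Python sketch assumes a simplified model: credits accrue at the baseline rate (50 MB per second per TB) for a full day and are then spent entirely at the burst rate, with 1 TB counted as 1,000 GB. The function names are hypothetical, and the real credit accounting is described in the EFS documentation.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def baseline_mb_per_s(size_gb):
    # Baseline rate: 50 MB/s per TB stored (1 TB = 1,000 GB assumed).
    return 50.0 * size_gb / 1000.0


def burst_mb_per_s(size_gb):
    # Burst rate: 100 MB/s minimum, or 100 MB/s per TB stored above 1 TB.
    return max(100.0, 100.0 * size_gb / 1000.0)


def burst_minutes_per_day(size_gb):
    # Data "earned" in a day at the baseline rate, spent at the burst rate.
    earned_mb = baseline_mb_per_s(size_gb) * SECONDS_PER_DAY
    return earned_mb / burst_mb_per_s(size_gb) / 60.0


for size_gb in (100, 10_000):
    print(size_gb, "GB:",
          baseline_mb_per_s(size_gb), "MB/s continuous,",
          burst_minutes_per_day(size_gb), "burst minutes per day")
```

Under these assumptions, the 100 GB file system gets 72 minutes of bursting per day and the 10 TB file system gets 720 minutes (12 hours), matching the figures above.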

To learn more about how the credit system works, read about File System Performance in the EFS documentation.

In order to gain a better understanding of this feature, I spent a couple of days copying and concatenating files, ultimately ending up using well over 2 TB of space on my file system. I watched the PermittedThroughput metric grow in concert with my usage as soon as my file collection exceeded 1 TB. Here’s what I saw:

As is the case with any file system, the throughput you’ll see is dependent on the characteristics of your workload. The average I/O size, the number of simultaneous connections to EFS, the file access pattern (random or sequential), the request model (synchronous or asynchronous), the NFS client configuration, and the performance characteristics of the EC2 instances running the NFS clients each have an effect (positive or negative). Briefly:

- Average I/O Size – The work associated with managing the metadata associated with small files via the NFS protocol, coupled with the work that EFS does to make your data highly durable and highly available, combine to create some per-operation overhead. In general, overall throughput will increase in concert with the average I/O size since the per-operation overhead is amortized over a larger amount of data. Also, reads will generally be faster than writes.

- Simultaneous Connections – Each EFS file system can accommodate connections from thousands of clients. Environments that can drive highly parallel behavior (from multiple EC2 instances) will benefit from the ability that EFS has to support a multitude of concurrent operations.

- Request Model – If you enable asynchronous writes to the file system by including the async option at mount time, pending writes will be buffered on the instance and then written to EFS asynchronously. Accessing a file system that has been mounted with the sync option or opening files using an option that bypasses the cache (e.g. O_DIRECT) will, in turn, issue synchronous requests to EFS.

- NFS Client Configuration – Some NFS clients use laughably small (by today’s standards) values for the read and write buffers by default. Consider increasing them to 1 MiB (again, this is an option to the mount command). You can use an NFS 4.0 or 4.1 client with EFS; the latter will provide better performance.

- EC2 Instances – Applications that perform large amounts of I/O sometimes require a large amount of memory and/or compute power as well. Be sure that you have plenty of both; choose an appropriate instance size and type. If you are performing asynchronous reads and writes, the kernel uses additional memory for caching. As a side note, the performance characteristics of EFS file systems are not dependent on the use of EBS-optimized instances.
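To make the Request Model and NFS Client Configuration points more concrete, here is a small Python sketch that assembles a Linux NFS mount option string with 1 MiB read and write buffers, an NFS 4.1 client, and the async option. The helper names are hypothetical, and the mount target name is a placeholder that you would replace with the value shown in the console's EC2 mount instructions. The option names (nfsvers, rsize, wsize, async, sync) are standard Linux NFS mount options; check what your distribution and kernel support.

```python
ONE_MIB = 1024 * 1024


def nfs_mount_options(nfs_version="4.1", buffer_bytes=ONE_MIB, asynchronous=True):
    """Build a comma-separated option string for an NFS mount."""
    if nfs_version not in ("4.0", "4.1"):
        raise ValueError("this sketch only handles NFS 4.0 and 4.1 clients")
    options = [
        f"nfsvers={nfs_version}",
        f"rsize={buffer_bytes}",   # read buffer size in bytes
        f"wsize={buffer_bytes}",   # write buffer size in bytes
        "async" if asynchronous else "sync",
    ]
    return ",".join(options)


def mount_command(target, mount_point, options):
    """Return the mount command as an argument list (it is not executed here)."""
    return ["mount", "-t", "nfs4", "-o", options, f"{target}:/", mount_point]


# MOUNT_TARGET_DNS_NAME is a placeholder, not a real host name.
print(" ".join(mount_command("MOUNT_TARGET_DNS_NAME", "/efs", nfs_mount_options())))
```

The sketch only prints the command so that you can review it before running it with the appropriate privileges.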

Benchmarking of file systems is a blend of art and science. Make sure that you use mature, reputable tools, run them more than once, and make sure that you examine your results in light of the considerations listed above. You can also find some detailed data regarding expected performance on the Amazon Elastic File System (Amazon EFS) page.

Available Now

EFS is available now in the US East (N. Virginia), US West (Oregon), and Europe (Ireland) Regions and you can start using it today. Pricing is based on the amount of data that you store, sampled several times per day and charged by the Gigabyte-month, pro-rated as usual, starting at $0.30 per GB per month in the US East (N. Virginia) Region. There are no minimum fees and no setup costs (see the EFS Pricing page for more information). If you are eligible for the AWS Free Tier, you can use up to 5 GB of EFS storage per month at no charge.
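For a back-of-the-envelope estimate of a monthly bill, you could average your storage samples and multiply by the per-GB price. The Python sketch below assumes that a simple average of the samples is a reasonable stand-in for Gigabyte-months, uses the $0.30 US East (N. Virginia) price from this post, and treats the Free Tier as a flat 5 GB deduction; the function name is hypothetical, and the EFS Pricing page remains the authority on how charges are actually computed.

```python
def estimated_monthly_cost_usd(sampled_gb, price_per_gb_month=0.30, free_tier_gb=0.0):
    """Estimate a monthly charge from storage samples taken during the month.

    sampled_gb   -- storage sizes in GB, one entry per sample
    free_tier_gb -- GB deducted before pricing (5 if you are Free Tier eligible)
    """
    if not sampled_gb:
        return 0.0
    average_gb = sum(sampled_gb) / len(sampled_gb)      # approximate GB-months
    billable_gb = max(0.0, average_gb - free_tier_gb)
    return round(billable_gb * price_per_gb_month, 2)


# A file system that held 0 GB for half the month and 200 GB for the other half.
print(estimated_monthly_cost_usd([0, 200]))
# 100 GB all month with the 5 GB Free Tier allowance.
print(estimated_monthly_cost_usd([100], free_tier_gb=5))
```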

— Jeff;