DeepLens Family Assistant

Inspiration

When thinking of ideas for the DeepLens challenge, we quickly decided that we wanted to create something that could help people in need. We wanted to use technology to bring people together and avoid using technology for technology's sake. After considering many applications of the DeepLens device, we realized that its ability to recognize faces would be invaluable to those who have difficulty recognizing other people. Individuals diagnosed with dementia (patients) have difficulty recognizing friends and even family, which can cause them to become disoriented and confused when speaking with loved ones.

What it does

Patients suffering from memory loss can use our application to help them remember their loved ones. A DeepLens device configured with our application acts as an assistant: it recognizes family members and friends in view of the camera and plays their name and a brief bio aloud. This helps patients with memory loss connect the dots.

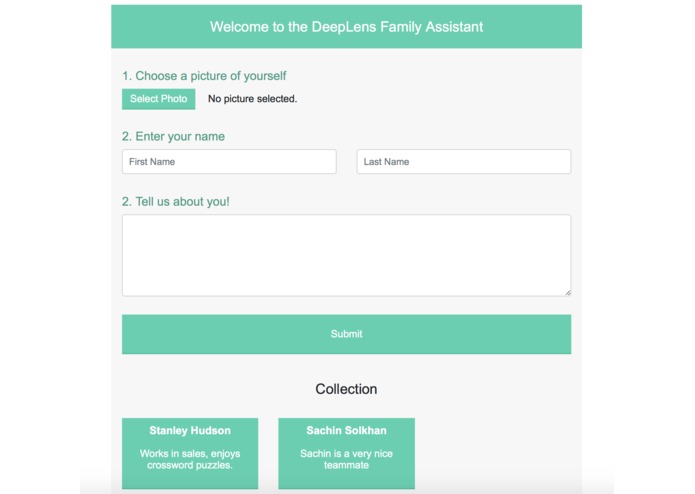

Pictures of family and friends, along with brief information about each person, are uploaded in advance to a data store. Anybody in the patient's family can upload them through the application's simple web UI.

The DeepLens device and application can also be used by patients at home for memory exercises. Studies show that memory exercises can slow the loss of memory. Patients can show the camera pictures of family members and friends stored on their smartphones. The application recognizes the person in the picture and plays audio of their name and bio. Medical practitioners and caregivers can use the device with the patient in the same way.

Created By: Sachin Solkhan, Kyle Kennedy and Christopher Helms

How we built it

The following AWS services were used to build the application: the default face-detection model provided with DeepLens, Elastic Beanstalk, Rekognition, Polly, Lambda, and S3.

UI: Elastic Beanstalk hosts a simple, user-friendly web application for uploading master pictures of family members or friends along with their bios. Each bio contains brief information about the person that helps the patient make the connection. All of this information is stored in a master S3 bucket.

Machine Learning Model: The default face-detection model provided with DeepLens is used as is. The Lambda function associated with this model, which runs within the Greengrass service on the device, was customized to upload image frames to an S3 bucket and to play audio.
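The upload step of the on-device function might look roughly like the sketch below. It is an illustration, not our deployed code: the `frames` key prefix, the function names, and the assumption that each frame has already been JPEG-encoded are all hypothetical. The S3 client is passed in so the function can be exercised without AWS credentials.

```python
import time


def frame_key(prefix: str, timestamp: float) -> str:
    """Build a unique, time-ordered S3 key for one captured frame."""
    return f"{prefix}/frame-{int(timestamp * 1000)}.jpg"


def upload_frame(s3_client, bucket: str, jpeg_bytes: bytes,
                 prefix: str = "frames", now=time.time) -> str:
    """Upload one JPEG-encoded frame and return the key it was stored under.

    `s3_client` is expected to behave like boto3.client("s3").
    The bucket name and key prefix are assumptions for illustration.
    """
    key = frame_key(prefix, now())
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=jpeg_bytes,
        ContentType="image/jpeg",
    )
    return key
```

On the device, this would be called with `boto3.client("s3")` each time the face-detection model reports a face in the current frame.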

Face Recognition: Amazon Rekognition is used to recognize the faces in the frames captured and uploaded by the device.
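One way to do this lookup is sketched below. It assumes the master pictures were indexed into a Rekognition face collection with each person's identifier stored as the `ExternalImageId`; the writeup does not say which Rekognition operation the project used, so treat the collection, the 90% threshold, and the function name as assumptions.

```python
def best_match(rekognition_client, collection_id: str, bucket: str, key: str,
               threshold: float = 90.0):
    """Return the ExternalImageId of the closest known face, or None.

    `rekognition_client` is expected to behave like
    boto3.client("rekognition"). The collection id is hypothetical.
    """
    response = rekognition_client.search_faces_by_image(
        CollectionId=collection_id,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        FaceMatchThreshold=threshold,
        MaxFaces=1,
    )
    matches = response.get("FaceMatches", [])
    if not matches:
        return None  # a face was found, but nobody in the collection matched
    top = max(matches, key=lambda match: match["Similarity"])
    return top["Face"].get("ExternalImageId")
```

Note that `search_faces_by_image` raises an error when the image contains no face at all, so a caller would likely want to catch that case and skip the frame.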

Text-to-Speech: We used the synthesizeSpeech operation of Amazon Polly to turn the stored memory about a friend (the bio text) into an audio file that is played back to the user.
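A minimal sketch of the text-to-speech step follows. The sentence template, the `Joanna` voice, and the MP3 output format are illustrative assumptions rather than the settings we shipped with.

```python
def build_announcement(name: str, bio: str) -> str:
    """Combine a person's name and bio into the sentence to be spoken."""
    return f"This is {name}. {bio.strip()}"


def synthesize_bio(polly_client, name: str, bio: str,
                   voice_id: str = "Joanna") -> bytes:
    """Return MP3 audio bytes announcing the person's name and bio.

    `polly_client` is expected to behave like boto3.client("polly").
    """
    response = polly_client.synthesize_speech(
        Text=build_announcement(name, bio),
        OutputFormat="mp3",
        VoiceId=voice_id,
    )
    # AudioStream is a file-like object; read it fully into memory.
    return response["AudioStream"].read()
```

The returned bytes can then be written to S3 as an `.mp3` object for the device to play.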

End-to-End Flow: S3 buckets and Lambda functions tie everything together asynchronously. Each image frame dropped into the S3 bucket triggers a Lambda function, which calls Amazon Rekognition to recognize the person. The person's information is then retrieved from the master S3 bucket and synthesized to speech using Amazon Polly. The resulting audio is dropped into an audio S3 bucket, where the Lambda function running on the device picks it up and plays it.
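The cloud side of this flow can be sketched as below. This is a simplified outline, not our actual handler: the four callables (`recognize`, `load_bio`, `synthesize`, `store_audio`) are hypothetical stand-ins for the Rekognition, master-bucket, Polly, and audio-bucket steps, and naming the audio object after the person's identifier is an assumption. Only the shape of the S3 notification event follows the documented format.

```python
from urllib.parse import unquote_plus


def frames_from_event(event: dict) -> list:
    """Return (bucket, key) for every object named in an S3 notification."""
    frames = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(s3_info["object"]["key"])
        frames.append((s3_info["bucket"]["name"], key))
    return frames


def process_event(event: dict, recognize, load_bio, synthesize, store_audio) -> list:
    """Run recognize -> load bio -> synthesize -> store for each new frame.

    Returns the keys of the audio objects that were stored.
    """
    stored = []
    for bucket, key in frames_from_event(event):
        person_id = recognize(bucket, key)
        if person_id is None:
            continue  # nobody we know in this frame
        name, bio = load_bio(person_id)
        audio = synthesize(name, bio)
        audio_key = f"{person_id}.mp3"
        store_audio(audio_key, audio)
        stored.append(audio_key)
    return stored
```

Passing the steps in as functions keeps the handler easy to test locally; in a deployed Lambda, each callable would wrap the corresponding boto3 client.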

Challenges

Most of the challenges were related to the DeepLens device. We appreciate the help of the AWS team, who patiently answered our queries on the forums and the Slack channel. The office hours were especially helpful, offering almost one-on-one help from experts on specific issues. Here are some of the device-related challenges:

- Registering the device - Setting up the device took multiple tries because of issues such as the default URL clashing with the Wi-Fi subnet.

- Playing audio - It took a lot of help from the AWS team and fellow participants to get the end-to-end flow working with audio played from the device. The major steps were manually changing the group.json file after each project deployment and making the Python boto modules accessible to ggc_user.

Accomplishments that we're proud of

We are really proud of creating something that will actually help people in need. Our application will help those suffering from memory loss to be more social and to remain part of their circle of family and friends. It puts a tool in their hands that they can use independently.

What we learned

- Working successfully with several AWS services, including Rekognition and Polly.

- Configuring the DeepLens device, setting it up to run machine learning models, and extending it to take action on inferences.

What's next for DeepLens Family Assistant

- Running the Assistant application without an internet connection or a connection to the cloud. This will require creating custom machine learning models that recognize faces, using SageMaker and MXNet, and uploading them to the device so that face recognition inference happens entirely on the device. This will be a major upgrade that makes the device portable. The basic approach and Lambda code could be reused with the current DeepLens device or any similar device that can act as a true companion.

- Improving the end-to-end performance of recognizing faces and playing the matching audio bio. On-device inference (the first item above) should help significantly with this.

- Securing the application so that a device can be associated with a particular patient and family.

Built with

amazon-web-services

lambda

s3

python

rekognition

deeplens

polly