AWS News Blog

Build 3D Streaming Applications with EC2’s New G2 Instance Type


Do you want to build fast 3D applications that run in the cloud and deliver high-performance 3D graphics to mobile devices, TV sets, and desktop computers?

If so, you are going to love our new G2 instance type! The g2.2xlarge instance has the following specs:

- NVIDIA GRID (GK104 “Kepler”) GPU (Graphics Processing Unit) with 1,536 CUDA cores and 4 GB of video (frame buffer) RAM.

- Intel Sandy Bridge processor running at 2.6 GHz with Turbo Boost enabled, providing 8 vCPUs (virtual CPUs).

- 15 GiB of RAM.

- 60 GB of SSD storage.

The instances run 64-bit code and use HVM virtualization; EBS-Optimized instances are also available. They are initially available in the US East (Northern Virginia), US West (Northern California), US West (Oregon), and EU (Ireland) Regions. You can launch them as On-Demand or Spot Instances, and you can also purchase Reserved Instances.
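If you script your launches, a minimal sketch with boto3 (the AWS SDK for Python) might look like the following. It only builds the keyword arguments for the EC2 client's `run_instances` call and checks the region against the four listed above; the helper names `g2_launch_params` and `launch_g2` are hypothetical, and you would substitute your own 64-bit HVM AMI ID (for example, one of the NVIDIA AMIs mentioned later in this post).

```python
# Region codes for the four Regions where g2.2xlarge is initially available.
G2_LAUNCH_REGIONS = {
    "us-east-1": "US East (Northern Virginia)",
    "us-west-1": "US West (Northern California)",
    "us-west-2": "US West (Oregon)",
    "eu-west-1": "EU (Ireland)",
}


def g2_launch_params(ami_id: str, region: str, ebs_optimized: bool = True) -> dict:
    """Build keyword arguments for an EC2 client's run_instances() (hypothetical helper)."""
    if region not in G2_LAUNCH_REGIONS:
        raise ValueError(f"g2.2xlarge is not initially offered in {region}")
    return {
        "ImageId": ami_id,            # must be a 64-bit HVM AMI
        "InstanceType": "g2.2xlarge",
        "MinCount": 1,
        "MaxCount": 1,
        "EbsOptimized": ebs_optimized,
    }


def launch_g2(ec2_client, ami_id: str, region: str) -> str:
    """Launch one g2.2xlarge through an already-configured EC2 client; return its ID."""
    response = ec2_client.run_instances(**g2_launch_params(ami_id, region))
    return response["Instances"][0]["InstanceId"]
```

With credentials configured, you would call something like `launch_g2(boto3.client("ec2", region_name="us-east-1"), "ami-xxxxxxxx", "us-east-1")`, where the AMI ID is a placeholder. Passing the client in keeps the parameter logic testable without touching AWS.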

The g2.2xlarge is another member of our GPU Instance family, joining the existing CG1 instance type. The venerable (and widely used) cg1.4xlarge instance type is a great fit for HPC (High Performance Computing) workloads. The GPGPU (General Purpose Graphics Processing Unit) in the cg1 offers double-precision floating point and error-correcting memory. In contrast, the GPU in the g2.2xlarge is optimized for single-precision floating point values and does not support error-correcting memory.
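To get a feel for what single precision means in practice, here is a small CPU-side illustration (not GPU code). It uses Python's standard `struct` module to round a double-precision value to the nearest IEEE 754 single-precision value; `to_float32` is a hypothetical helper name.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (IEEE 754 double) to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


big = 2.0**24 + 1            # 16777217 is exactly representable as a double
print(big, to_float32(big))  # 16777217.0 16777216.0 (float32 has a 24-bit significand)

third = 1.0 / 3.0
print(abs(third - to_float32(third)))  # rounding error on the order of 1e-8
```

Errors of this size are harmless when computing pixel colors, but they can accumulate in long-running numerical simulations, which is one reason double precision matters for HPC workloads.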

What’s a GPU?

Let’s take a step back and examine the GPU concept in detail.

As you probably know, the memory that holds the image shown on the display of your computer or phone is known as a frame buffer. The color of each pixel on the display is determined by the value in a particular memory location. Back when I was young, this was called memory-mapped video. It was relatively easy to write code to compute the address corresponding to a particular point on the screen and to set the value (color) of a single pixel as desired. If you wanted to draw a line, rectangle, or circle, you (or some graphics functions running on your behalf) would need to compute the address of each pixel in the figure, one at a time. This was easy to implement, but relatively slow.
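Here is a minimal sketch of that old-school approach, assuming a hypothetical 320x200 screen with one byte (a palette color index) per pixel, stored row by row. A `bytearray` stands in for the memory-mapped video region; the function names are illustrative.

```python
WIDTH, HEIGHT = 320, 200  # hypothetical screen size
BYTES_PER_PIXEL = 1       # hypothetical 8-bit palette: one byte holds a color index

framebuffer = bytearray(WIDTH * HEIGHT * BYTES_PER_PIXEL)


def pixel_address(x: int, y: int) -> int:
    """Byte offset of pixel (x, y) in a row-major frame buffer."""
    return (y * WIDTH + x) * BYTES_PER_PIXEL


def set_pixel(x: int, y: int, color: int) -> None:
    framebuffer[pixel_address(x, y)] = color


def fill_rect(x0: int, y0: int, w: int, h: int, color: int) -> None:
    # Compute and write each pixel's address individually: simple, but slow.
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            set_pixel(x, y, color)


fill_rect(10, 20, 5, 3, color=15)  # a 5x3 rectangle, 15 individual writes
```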

Moving ahead, as games (one of the primary drivers of consumer-level 3D processing) became increasingly sophisticated, they implemented advanced rendering features such as texturing, shadows, and anti-aliasing. Each of these features contributed to the realism and the “wow factor” of the game, while requiring ever-increasing amounts of compute power for rendering. Think back just a decade or so, when gamers would routinely compare the FPS (frames per second) their games achieved on various types of hardware.

It turns out that many of these advanced rendering features shared an interesting property. The computations needed to texture or anti-alias a particular pixel are independent of those required for the other pixels in the same scene. Moving some of this computation into specialized, highly parallel hardware (the GPU) reduced the load on the CPU and enabled the development of games that were even more responsive, detailed, and realistic.
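The key property is that each pixel's result depends only on its own inputs. The toy example below makes that concrete: a hypothetical per-pixel `shade` function (a checkerboard) produces the same image whether pixels are computed in order or in a shuffled order across a thread pool. It is an analogy only; Python threads will not speed this up, and real GPUs run shader programs in hardware rather than anything like this.

```python
import random
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 64, 48  # hypothetical tiny image


def shade(x: int, y: int) -> int:
    """Toy per-pixel 'shader': brightness depends only on this pixel's (x, y)."""
    return 255 if (x // 8 + y // 8) % 2 == 0 else 64


coords = [(x, y) for y in range(HEIGHT) for x in range(WIDTH)]

# Sequential, one pixel at a time.
sequential = {xy: shade(*xy) for xy in coords}

# The same work in a shuffled order, spread across worker threads.
shuffled = coords[:]
random.shuffle(shuffled)
with ThreadPoolExecutor(max_workers=8) as pool:
    values = list(pool.map(lambda xy: shade(*xy), shuffled))  # results keep input order
parallel = dict(zip(shuffled, values))

assert parallel == sequential  # no pixel depended on any other pixel
```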

The game (or other application) sends high-level operations to the GPU, the GPU does its magic for hundreds or thousands of pixels at a time, and the results end up in the frame buffer, where they are copied to the video display, with a refresh rate that is generally around 30 frames per second.

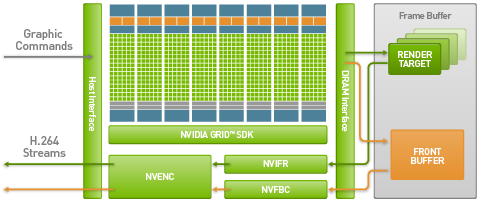

Here’s a block diagram of the NVIDIA GRID GPU in the g2 instance:

GPU in the Cloud?

Recall from the explanation above that the GPU deposits the final pixels in the frame buffer for display. This is wonderful if you are running the application on your desktop or mobile device, but does you very little good if your application is running in the cloud.

The GRID GPU incorporates an important feature that makes it ideal for building cloud-based applications. If you examine the diagram above, you will see that the NVIFR and NVFBC components are connected to the frame buffer and to the NVENC component. When used together (NVIFR + NVENC or NVFBC + NVENC), you can create an H.264 video stream of your application using dedicated, hardware-accelerated video encoding. This stream can be displayed on any client device that has a compatible video codec. A single GPU can support up to eight real-time HD video streams (720p at 30 fps) or up to four real-time FHD video streams (1080p at 30 fps).
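A quick back-of-the-envelope calculation (our own arithmetic, not an NVIDIA specification) shows why the two stream counts quoted above go together: eight 720p streams and four 1080p streams at 30 fps ask the encoder to process a similar number of pixels per second.

```python
def pixel_rate(width: int, height: int, fps: int, streams: int) -> int:
    """Pixels per second the encoder must process for `streams` identical streams."""
    return width * height * fps * streams


hd = pixel_rate(1280, 720, 30, 8)    # eight 720p streams at 30 fps
fhd = pixel_rate(1920, 1080, 30, 4)  # four 1080p streams at 30 fps
print(f"{hd:,} vs {fhd:,} pixels/s")  # 221,184,000 vs 248,832,000 pixels/s
```

Raw pixel count is only a rough proxy; actual encoder capacity also depends on factors such as encoding settings.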

Put it all together and your applications can now run in the cloud, combining the CPU power of the g2.2xlarge and the 3D rendering of the GRID GPU with AWS storage, messaging, and database resources to generate interactive content that can be viewed in a wide variety of environments!

The GPU Software Stack

You have access to a wide variety of 3D rendering technologies when you use g2 instances; your application can do its drawing using OpenGL or DirectX.

If you would like to make the technical investment, you can also use the NVIDIA GRID SDK for low-level frame grabbing and video encoding. At the next level up, you have two options:

- You can grab the entire display using NVIDIA’s NVFBC (Full-frame Buffer Capture) APIs. In this model you generate one video stream per instance. It is relatively easy to modify existing GPU-aware applications to make use of this option.

- You can grab an individual render target using NVIDIA’s NVIFR (In-band Frame Readback) APIs. You can generate multiple streams per instance, but you will spend more time adapting your application to this programming model.
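To compare the two options, here is a rough capacity-planning sketch. It assumes (our assumption, not NVIDIA guidance) that NVFBC gives one stream per instance, as described above, and that NVIFR is bounded only by the per-GPU encoder limits quoted earlier: eight 720p or four 1080p streams at 30 fps. The function name and mode strings are hypothetical.

```python
import math

# Per-GPU real-time H.264 encode limits quoted earlier in this post.
MAX_STREAMS_PER_GPU = {"720p30": 8, "1080p30": 4}


def instances_needed(sessions: int, mode: str, resolution: str) -> int:
    """Rough count of g2.2xlarge instances (one GRID GPU each) for `sessions` viewers.

    Assumptions (ours): NVFBC captures the whole display, so each instance serves
    one stream; NVIFR is limited only by the encoder figures above.
    """
    encoder_limit = MAX_STREAMS_PER_GPU[resolution]  # KeyError for unsupported resolutions
    if mode == "nvfbc":
        per_instance = 1
    elif mode == "nvifr":
        per_instance = encoder_limit
    else:
        raise ValueError(f"unknown capture mode: {mode!r}")
    return math.ceil(sessions / per_instance)


print(instances_needed(20, "nvfbc", "1080p30"))  # 20
print(instances_needed(20, "nvifr", "1080p30"))  # 5
print(instances_needed(20, "nvifr", "720p30"))   # 3
```

This is the tradeoff in a nutshell: NVFBC is easier to adopt, while NVIFR can pack more sessions onto each instance at the cost of more porting work.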

To simplify the startup process, NVIDIA has put together AMIs for Windows and Amazon Linux and has made them available in the AWS Marketplace:

- Amazon Linux AMI with NVIDIA Drivers

- Windows 2008 AMI with NVIDIA Drivers

- Windows 2012 AMI with NVIDIA Drivers

You can also download the NVIDIA drivers and install them on your own Windows or Linux instances.
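After installing the drivers yourself, one quick sanity check is `nvidia-smi -L`, the NVIDIA driver utility's option that lists the GPUs it can see. The sketch below wraps it with Python's `subprocess` module and returns an empty list if the tool is missing or fails; the function names are hypothetical. It only confirms that the driver sees the GPU, not that a particular rendering context (for example, one created inside a remote desktop session) will use it.

```python
import shutil
import subprocess


def parse_gpu_list(output: str) -> list[str]:
    """Keep the lines of `nvidia-smi -L` output that describe a GPU ("GPU <n>: ...")."""
    return [line.strip() for line in output.splitlines() if line.startswith("GPU ")]


def list_nvidia_gpus() -> list[str]:
    """Return GPUs reported by `nvidia-smi -L`, or [] if the tool is unavailable."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return []  # driver utilities not installed, or not on PATH
    result = subprocess.run([exe, "-L"], capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []  # e.g. the driver is installed but cannot talk to a GPU
    return parse_gpu_list(result.stdout)


print(list_nvidia_gpus() or "No NVIDIA GPU detected")
```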

G2 In Action

We’ve been working with technology providers to lay the groundwork for this very exciting launch. Here’s what they have to offer:

- Autodesk Inventor, Revit, Maya, and 3ds Max 3D design tools can now be accessed from a web browser. Developers can now do full-fledged 3D design, engineering, and entertainment work without needing a top-end desktop computer (this is an industry first!).

- OTOY’s ORBX.js is a pure JavaScript framework that allows you to stream 3D applications to thin clients and to any HTML5 browser without plug-ins, codecs, or client-side software installation.

- The Agawi True Cloud application streaming platform now takes advantage of the g2 instance type. It can be used to stream graphically rich, interactive applications to mobile devices.

- The Playcast Media AAA cloud gaming service has been deployed to a fleet of g2 instances and will soon be used to stream video games for consumer-facing media brands.

- The Calgary Scientific ResolutionMD application for visualization of medical imaging data can now be run on g2 instances. The PureWeb SDK can be used to build applications that run on g2 instances and render on any mobile device.

Here are some Marketplace products to get you started:

- OTOY ORBX Cloud Game Console (Windows, Linux)

- OTOY Cloud Workstation (Windows, Linux)

- OTOY Octane Cloud Workstation – Autodesk Edition

I generally don’t include quotations in my blog posts, but today I had to make an exception. Brendan Eich (the inventor of JavaScript) definitely grasps the power of this model. Here’s what he had to say when he saw a demo of ORBX.js:

“Think of the amazing 3D games that we have on PCs, consoles, and handheld devices thanks to the GPU. Now think of hundreds of GPUs in the cloud, working for you to over-detail, ray/path-trace in realtime, encode video, do arbitrary (GPGPU) computation.”

I think that pretty much sums it up!

Go Forth and Render

As I noted earlier, you can launch g2.2xlarge instances in four AWS Regions today! To connect to them remotely, use a product based on the Remote Framebuffer Protocol (RFB), such as a member of the VNC family; TeamViewer is another good choice. If you use a product based on RDP, your code will not be able to detect the presence of the GPU.

I am really looking forward to seeing some cool new applications (and perhaps even entirely new classes of applications) running on these instances. Build something cool and let me know about it!

— Jeff;