AWS News Blog

Sneak Preview – DynamoDB Streams

I would like to give you a quick sneak preview of a new Amazon DynamoDB feature. We will be demonstrating it at AWS re:Invent and making it available to curious developers and partners for testing and feedback.

Many AWS customers are using DynamoDB as the primary storage mechanism for their applications. Some of them use it as a key-value store, some of them denormalize their relational schemas in accordance with the NoSQL philosophy, and others make use of the new support for JSON documents.

DynamoDB Streams

Many of our customers have let us know that they would like to track the changes made to their DynamoDB tables. They would like to build and update caches, run business processes, drive real-time analytics, and create global replicas.

The new DynamoDB Streams feature is designed to address these use cases. Once you enable it for a DynamoDB table, all changes (puts, updates, and deletes) made to the table are tracked on a rolling 24-hour basis. You can retrieve this stream of update records with a single API call and use the results to build tools and applications that function as described above. You control what each record in the DynamoDB Stream carries: no values, all values, or changed values.
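To make the three record options more concrete, here is a minimal sketch in plain Python. It does not call DynamoDB; the constants `NO_VALUES`, `ALL_VALUES`, and `CHANGED_VALUES`, the `make_record` helper, and the record layout are all hypothetical names chosen for illustration, and the real API may shape its records differently.

```python
from copy import deepcopy

# Hypothetical labels for the three options named in the post; these are
# not actual DynamoDB API constants.
NO_VALUES, ALL_VALUES, CHANGED_VALUES = "no_values", "all_values", "changed_values"


def make_record(kind, key, old_item, new_item, view):
    """Build one illustrative change record for a put, update, or delete."""
    record = {"kind": kind, "key": key}
    if view == ALL_VALUES:
        # Carry the complete item as it looks after the change (None for deletes).
        record["item"] = deepcopy(new_item)
    elif view == CHANGED_VALUES:
        old, new = old_item or {}, new_item or {}
        # Keep only attributes whose value differs; removed ones map to None.
        record["changed"] = {
            name: new.get(name)
            for name in sorted(set(old) | set(new))
            if old.get(name) != new.get(name)
        }
    return record


before = {"id": "u1", "score": 10, "level": 3}
after = {"id": "u1", "score": 25, "level": 3}

for view in (NO_VALUES, ALL_VALUES, CHANGED_VALUES):
    print(view, make_record("update", {"id": "u1"}, before, after, view))
```

With "no values" a consumer learns only which key changed and must read the table to see the item; the other two options trade larger records for fewer follow-up reads.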

If you are building mobile, ad-tech, gaming, web, or IoT applications, you can use the DynamoDB Streams capability to make your applications respond to high-velocity data changes without having to track the changes yourself. With the recent free tier increase (now including 25 GB of storage and over 200 million requests per month), you can try DynamoDB for your new applications at little or no cost.

This feature will be accessible from the AWS Management Console. You’ll be able to enable it for existing tables by clicking Modify Table. You will also be able to enable it when you create a new table. Once enabled, changes will be visible in a new Streams tab.

You will be able to retrieve updates at roughly twice the rate of the provisioned write capacity of your table. If you have provisioned enough capacity to perform 1,000 updates per second to a table, you can retrieve up to 2,000 per second. The stream will contain every update made to the table. If multiple updates are made to the same item in the table, those updates will show up in the stream in the same order in which they are made. Updates will be stored for 24 hours, and will stick around for the same amount of time after a table has been deleted.
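The numbers and guarantees in this paragraph can be modeled with a small in-memory sketch. It is a toy stand-in written for this post, not the DynamoDB Streams API: `ToyStream`, `max_read_rate`, and the integer timestamps are assumptions made for illustration.

```python
from collections import deque

RETENTION_SECONDS = 24 * 60 * 60  # the rolling 24-hour window from the post


def max_read_rate(provisioned_writes_per_second):
    """Approximate retrieval ceiling: about twice the provisioned write rate."""
    return 2 * provisioned_writes_per_second


class ToyStream:
    """In-memory stand-in for a stream; not the DynamoDB API."""

    def __init__(self):
        self._records = deque()  # (timestamp, key, change), oldest first

    def append(self, timestamp, key, change):
        # Writes arrive in time order, so appending preserves per-item order.
        self._records.append((timestamp, key, change))

    def trim(self, now):
        # Drop records that have fallen outside the retention window.
        while self._records and now - self._records[0][0] >= RETENTION_SECONDS:
            self._records.popleft()

    def changes_for(self, key):
        """Return the surviving changes for one item, oldest first."""
        return [change for _, k, change in self._records if k == key]


stream = ToyStream()
stream.append(0, "item-1", "put")
stream.append(10, "item-2", "put")
stream.append(20, "item-1", "update")
stream.append(30, "item-1", "delete")

print(max_read_rate(1000))           # 2000
print(stream.changes_for("item-1"))  # ['put', 'update', 'delete']
stream.trim(now=RETENTION_SECONDS + 5)
print(stream.changes_for("item-1"))  # ['update', 'delete']
```

A consumer that falls more than 24 hours behind would miss the trimmed records, so in this model it needs to read at least as fast as the table is written.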

We will be updating the Kinesis Client Library with support for DynamoDB Streams. We also plan to provide connectors for Amazon CloudSearch, Amazon Redshift, and other relevant AWS services.

Get Started Today

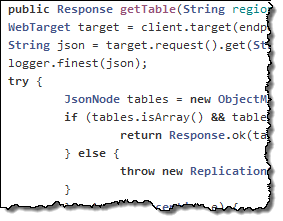

The preliminary documentation contains more information about the newest additions to the DynamoDB API along with a download link for a version of DynamoDB Local that already includes an initial implementation of DynamoDB Streams. It also includes references to an updated AWS SDK for Java, the updated Kinesis Client Library, and a cool library that will show you how to implement Multi-Master Cross Region Replication.

The Cross Region Replication Library is a preview release of an application that uses DynamoDB Streams to keep DynamoDB tables in sync across multiple regions in near real time. When you write to a DynamoDB table in one region, those changes are automatically propagated by the Cross Region Replication Library to your tables in other regions.
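The core idea of stream-driven replication, replaying each change in order against other copies of the table, can be sketched as follows. This is not the Cross Region Replication Library's implementation: tables are modeled as Python dicts, the record layout and the `apply_record` and `replicate` helpers are hypothetical, and the sketch covers only one-way propagation, leaving out the conflict handling that a multi-master setup would need.

```python
def apply_record(replica, record):
    """Apply one change record to a replica table modeled as a dict."""
    key = record["key"]
    if record["kind"] == "delete":
        replica.pop(key, None)
    else:
        # "put" and "update" both carry the full new item in this sketch.
        replica[key] = dict(record["item"])


def replicate(records, replicas):
    """Replay records, in stream order, against every replica."""
    for record in records:
        for replica in replicas.values():
            apply_record(replica, record)


changes = [
    {"kind": "put", "key": "u1", "item": {"score": 10}},
    {"kind": "update", "key": "u1", "item": {"score": 25}},
    {"kind": "put", "key": "u2", "item": {"score": 7}},
    {"kind": "delete", "key": "u2"},
]
replicas = {"region-a": {}, "region-b": {}}
replicate(changes, replicas)
print(replicas)
```

Because updates to the same item appear in the stream in the order they were made, replaying them in order leaves each replica with the same final item as the source.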

I will share additional news about availability of DynamoDB Streams just as soon as I have it. If you would like to get an early start, please contact us and tell us more. We are especially interested in speaking to anyone who’s interested in integrating this feature with other storage, processing, and analytical tools.

— Jeff;