
Accelerate generative AI development with Amazon SageMaker AI and MLflow

Track experiments, evaluate models, and trace AI applications with no infrastructure management

Why use Amazon SageMaker AI with MLflow?

Building and customizing AI models is an iterative process, often involving hundreds of training runs to find the best algorithm, architecture, and parameters for optimal model accuracy. Amazon SageMaker AI offers a managed, serverless MLflow capability that makes it easy for AI developers to track experiments, observe behavior, and evaluate the performance of their AI models and applications without the need to manage any infrastructure. SageMaker AI with MLflow is also integrated with familiar SageMaker AI model development tools like SageMaker AI JumpStart, Model Registry, Pipelines, and serverless model customization capabilities to help you connect every step of the AI lifecycle, from experimentation to deployment.

Benefits of Amazon SageMaker AI with MLflow

Begin tracking experiments and tracing AI applications with no infrastructure to provision and no tracking servers to configure. You get instant access to a serverless MLflow capability that lets your team focus on building AI applications instead of managing infrastructure.

SageMaker AI with MLflow automatically scales to match your needs, whether you're running a single experiment or managing hundreds of parallel fine-tuning jobs. It scales up during intensive experimentation bursts and back down during quiet periods without manual intervention, maintaining consistent performance without the operational burden of managing servers.

With a single interface, you can visualize in-progress training jobs, collaborate with team members during experimentation, and maintain version control for each model and application. MLflow also offers advanced tracing capabilities to help you quickly identify the source of bugs or unexpected behaviors.

As the MLflow project evolves, SageMaker AI customers will continue to benefit from open-source community innovation while enjoying serverless support from AWS.

Amazon SageMaker AI with MLflow automatically upgrades to the latest version of MLflow, giving you access to the newest features and capabilities without maintenance windows or migration effort.

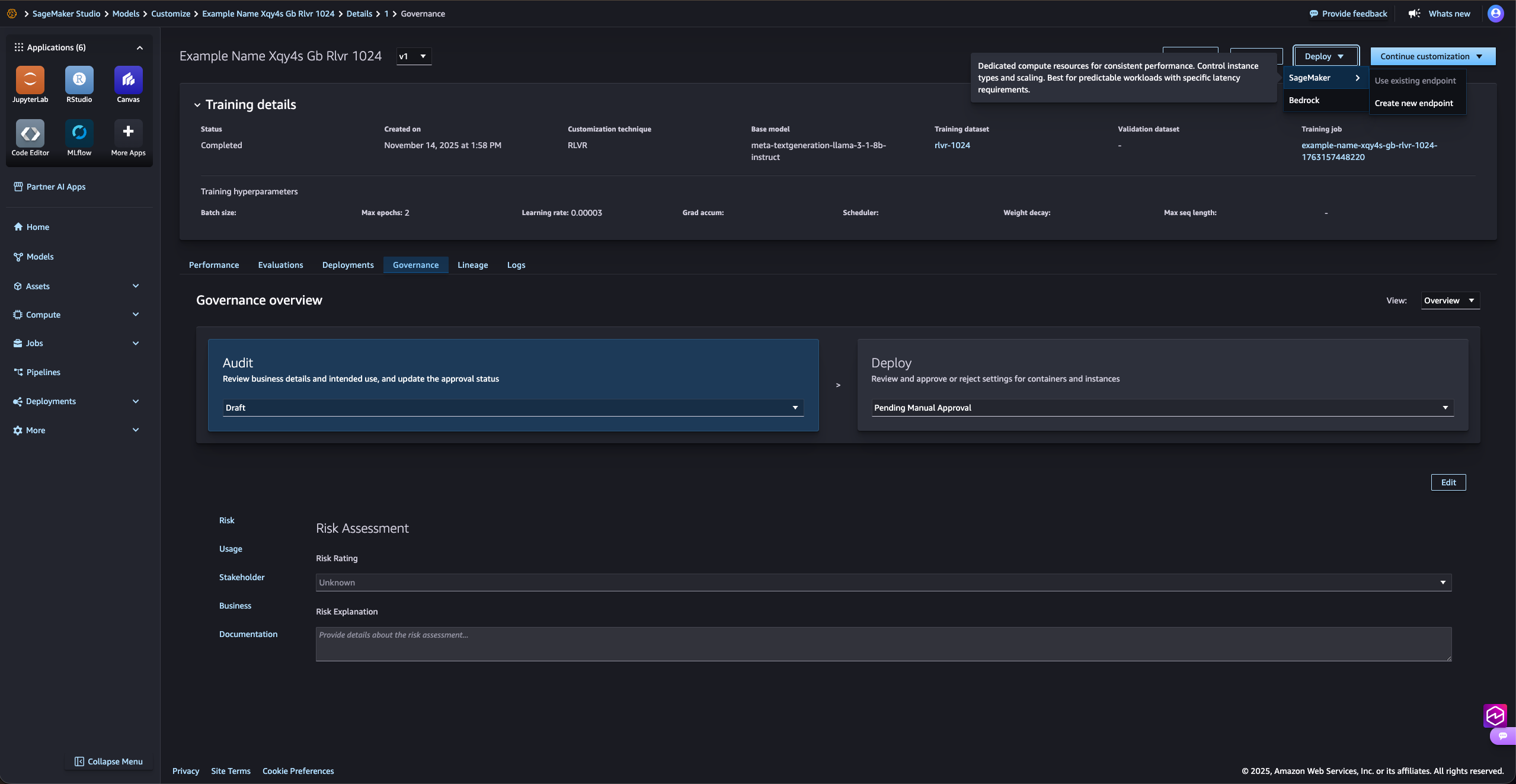

Streamline AI model customization

With MLflow on SageMaker AI, you can track, organize, and compare experiments to identify your best-performing models. MLflow is integrated with Amazon SageMaker AI serverless model customization capabilities for popular models like Amazon Nova, Llama, Qwen, DeepSeek, and GPT-OSS, enabling you to visualize in-progress training jobs and evaluations through a single interface.

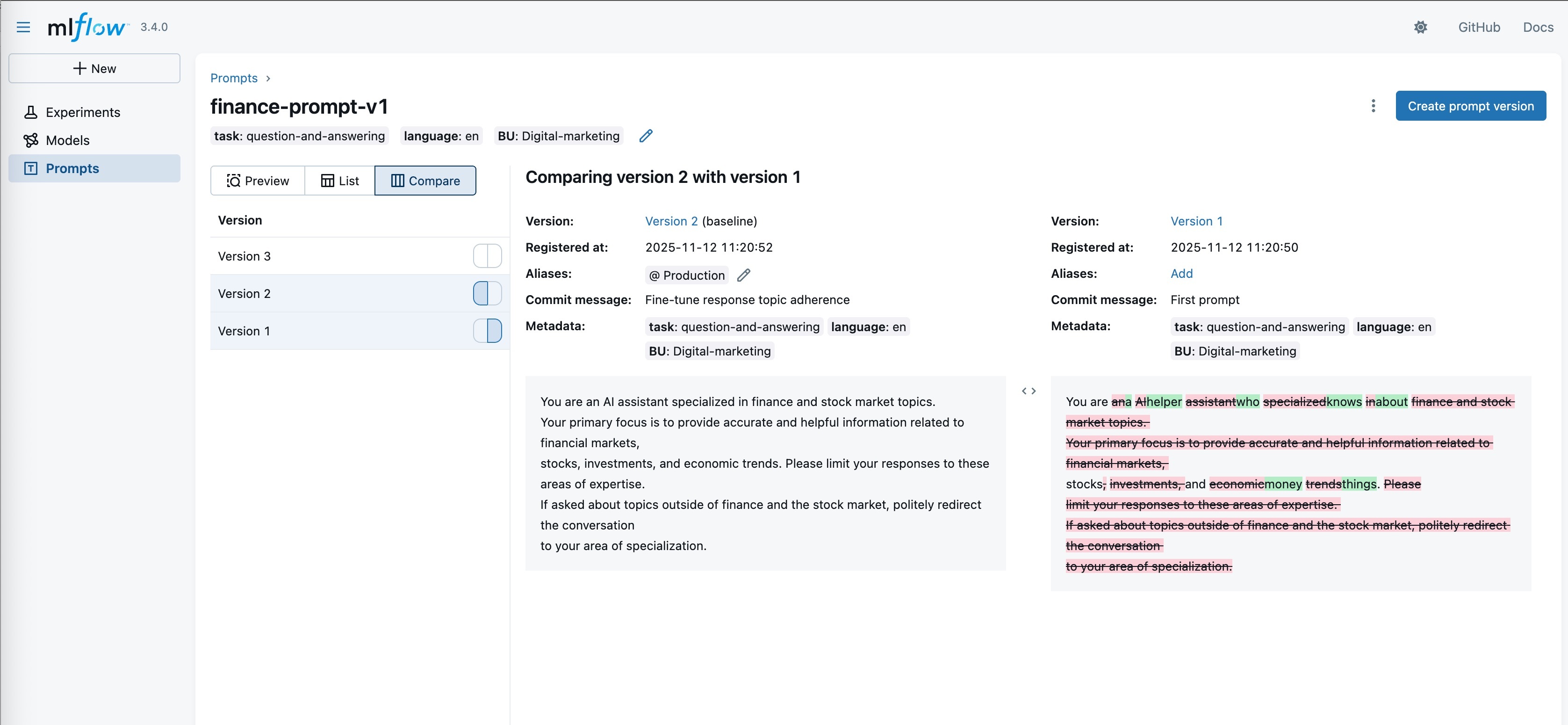

Maintain consistency with prompt management

You can streamline prompt engineering and management in your AI applications with MLflow Prompt Registry, a powerful capability that enables you to version, track, and reuse prompts across your organization, helping maintain consistency and improve collaboration in prompt development.

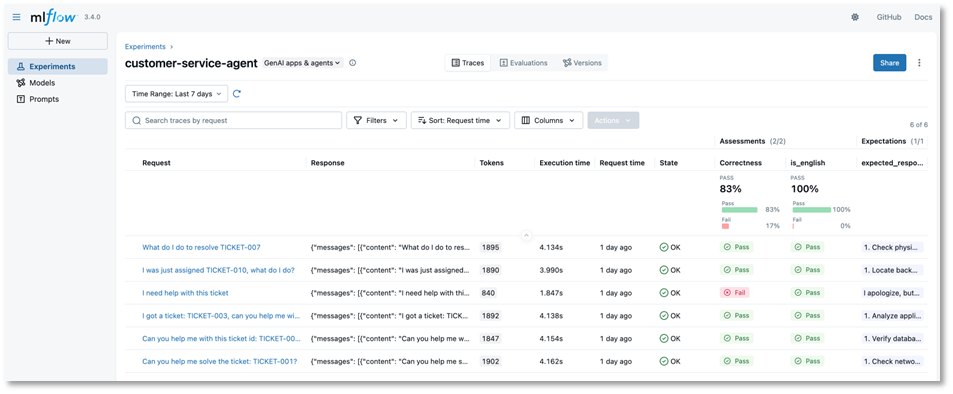

Trace AI applications and agents in real-time

SageMaker AI with MLflow records the inputs, outputs, and metadata at every step of your AI application so you can quickly identify bugs or unexpected behaviors. With advanced tracing for agentic workflows and multi-step applications, you’ll gain the visibility you need for debugging complex generative AI systems and optimizing performance.

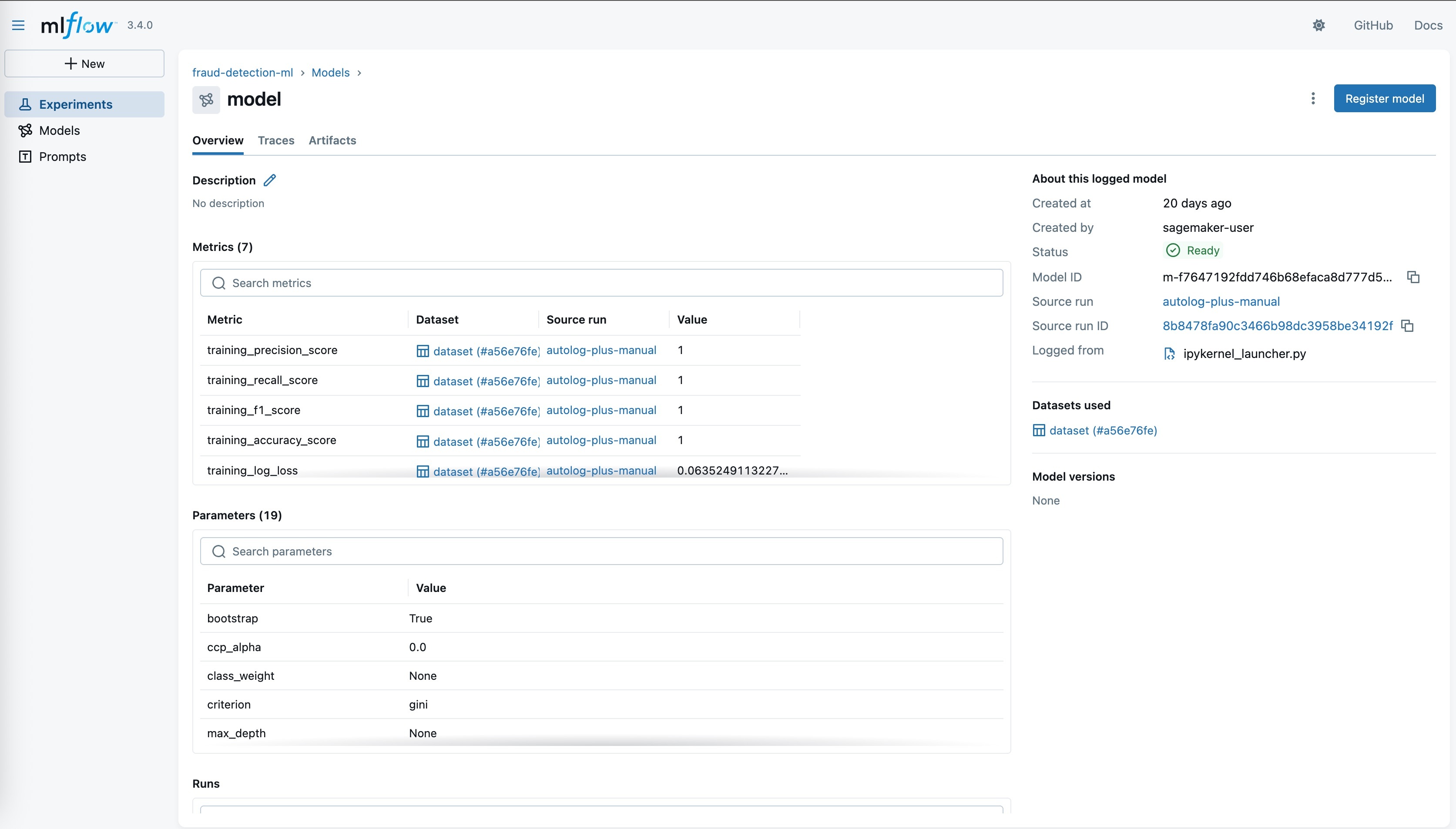

Centralize model governance with SageMaker Model Registry

Organizations need an easy way to keep track of all candidate models across development teams to make informed decisions about which models proceed to production. Managed MLflow includes a purpose-built integration that automatically synchronizes models registered in MLflow with SageMaker Model Registry. This empowers AI model development teams to use distinct tools for their respective tasks: MLflow for experimentation and SageMaker Model Registry for managing the production lifecycle with comprehensive model lineage.

Seamlessly deploy models to SageMaker AI endpoints

Once you have achieved your desired model accuracy and performance goals, you can deploy models from SageMaker Model Registry to SageMaker AI inference endpoints in a few clicks. This integration eliminates the need to build custom containers for model storage and allows customers to leverage SageMaker AI’s optimized inference containers while retaining the user-friendly experience of MLflow for logging and registering models.

Wildlife Conservation Society

"WCS is advancing global coral reef conservation through MERMAID, an open-source platform that uses ML models to analyze coral reef photos from scientists around the world. Amazon SageMaker with MLflow has enhanced our productivity by eliminating the need to configure MLflow tracking servers or manage capacity as our infrastructure needs change. By enabling our team to focus entirely on model innovation, we’re accelerating our time-to-deployment to deliver critical cloud-driven insights to marine scientists and managers."

Kim Fisher, MERMAID

Lead Software Engineer, WCS