Guidance for Scalable Model Inference and Agentic AI on Amazon EKS

Overview

This Guidance demonstrates how to build a robust, enterprise-grade AI architecture on Amazon EKS that helps organizations get more value from their machine learning (ML) investments. It shows how to distribute ML workloads efficiently by allocating compute resources according to demand, and it provides a unified model inference API gateway for streamlined access to, and management of, deployed models. The Guidance also shows how to implement agentic AI capabilities that let models interact with external APIs, which expands what can be automated. Finally, it demonstrates how to improve large language model (LLM) responses by combining specialized tools with Retrieval Augmented Generation (RAG). The result is a more context-aware AI system that can better understand and respond to complex business scenarios.
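To make the RAG idea concrete, here is a minimal, self-contained sketch of the retrieve-then-augment step. It assumes a toy bag-of-words cosine similarity in place of a real embedding model and vector store. The documents and the `retrieve` and `build_prompt` helpers are hypothetical and for illustration only; this is not the Guidance's own implementation.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base. A real deployment would use an embedding model
# and a vector store instead of this in-memory list.
DOCUMENTS = [
    "Karpenter provisions right-sized nodes for pending pods.",
    "Graviton instances use Arm-based processors.",
    "Inferentia chips accelerate deep learning inference.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts; a stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (stable on ties)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user question with retrieved context before calling the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "What accelerates inference?"
    print(build_prompt(question, retrieve(question, DOCUMENTS, k=1)))
```

The augmented prompt, not the bare question, is what gets sent to the model. Grounding the answer in retrieved context is where the "more context-aware" behavior comes from.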

Benefits

Deploy cost-effective AI workloads using AWS Graviton processors and intelligent auto-scaling. Karpenter automatically provisions the right-sized compute resources based on actual workload demands, reducing unnecessary infrastructure expenses while maintaining performance.
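As an illustration of how Graviton capacity and right-sizing can be expressed to Karpenter, the sketch below generates a NodePool manifest as JSON, which `kubectl apply -f` accepts. It assumes the Karpenter v1 API (`karpenter.sh/v1`) and an existing `EC2NodeClass` named `default`. The pool name and CPU limit are hypothetical values, not settings taken from the Guidance.

```python
import json


def graviton_nodepool(name: str = "graviton-inference", cpu_limit: str = "1000") -> dict:
    """Build a Karpenter NodePool restricted to Arm64 (AWS Graviton) instances."""
    return {
        "apiVersion": "karpenter.sh/v1",
        "kind": "NodePool",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "spec": {
                    "requirements": [
                        # Only Arm64 instance types, i.e. Graviton.
                        {"key": "kubernetes.io/arch", "operator": "In", "values": ["arm64"]},
                        # Allow Spot as well as On-Demand capacity to reduce cost.
                        {
                            "key": "karpenter.sh/capacity-type",
                            "operator": "In",
                            "values": ["spot", "on-demand"],
                        },
                    ],
                    # Hypothetical EC2NodeClass name; it must already exist in the cluster.
                    "nodeClassRef": {
                        "group": "karpenter.k8s.aws",
                        "kind": "EC2NodeClass",
                        "name": "default",
                    },
                }
            },
            # Upper bound on the total vCPUs this pool may provision.
            "limits": {"cpu": cpu_limit},
            # Let Karpenter remove or replace empty and underused nodes.
            "disruption": {
                "consolidationPolicy": "WhenEmptyOrUnderutilized",
                "consolidateAfter": "1m",
            },
        },
    }


if __name__ == "__main__":
    # Usage: python nodepool.py | kubectl apply -f -
    print(json.dumps(graviton_nodepool(), indent=2))
```

Karpenter picks instance types from the allowed set that fit pending pods, so the requirements above act as a cost guardrail rather than a fixed instance choice.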

Implement production-ready infrastructure that scales dynamically across multiple Availability Zones. The architecture handles varying inference workloads by automatically provisioning GPU, AWS Graviton, and AWS Inferentia-based compute resources as needed, helping maintain consistent performance during demand spikes.
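The following sketch shows one way an inference workload can express both multi-AZ placement and an accelerator requirement. It builds a Kubernetes Deployment that spreads replicas across zones with `topologySpreadConstraints` and requests one accelerator. The `nvidia.com/gpu` resource name assumes the NVIDIA device plugin is installed. For Inferentia, the AWS Neuron device plugin is assumed to expose `aws.amazon.com/neuron`. The deployment name and image are hypothetical.

```python
import json


def inference_deployment(name: str, image: str, replicas: int = 3,
                         accelerator: str = "nvidia.com/gpu") -> dict:
    """Build a Deployment spread across Availability Zones that requests one accelerator."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Keep replica counts per zone within 1 of each other when possible.
                    "topologySpreadConstraints": [{
                        "maxSkew": 1,
                        "topologyKey": "topology.kubernetes.io/zone",
                        "whenUnsatisfiable": "ScheduleAnyway",
                        "labelSelector": {"matchLabels": labels},
                    }],
                    "containers": [{
                        "name": name,
                        "image": image,
                        # For extended resources, a limit alone also sets the request.
                        # If no node offers this resource, the pod is unschedulable,
                        # which is the signal Karpenter acts on to add capacity.
                        "resources": {"limits": {accelerator: 1}},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image; pipe the output to `kubectl apply -f -`.
    print(json.dumps(inference_deployment("llm-server", "example.com/llm-server:latest"), indent=2))
```

Karpenter can only satisfy the accelerator request if a NodePool permits instance types that provide that resource.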

Reduce operational complexity with pre-configured observability and security controls. The guidance provides ready-to-deploy infrastructure with managed services for monitoring, logging, and security, allowing your team to focus on model development rather than infrastructure management.

How it works

Deploy an Amazon EKS cluster with a best-practice configuration and critical add-ons for AI workloads

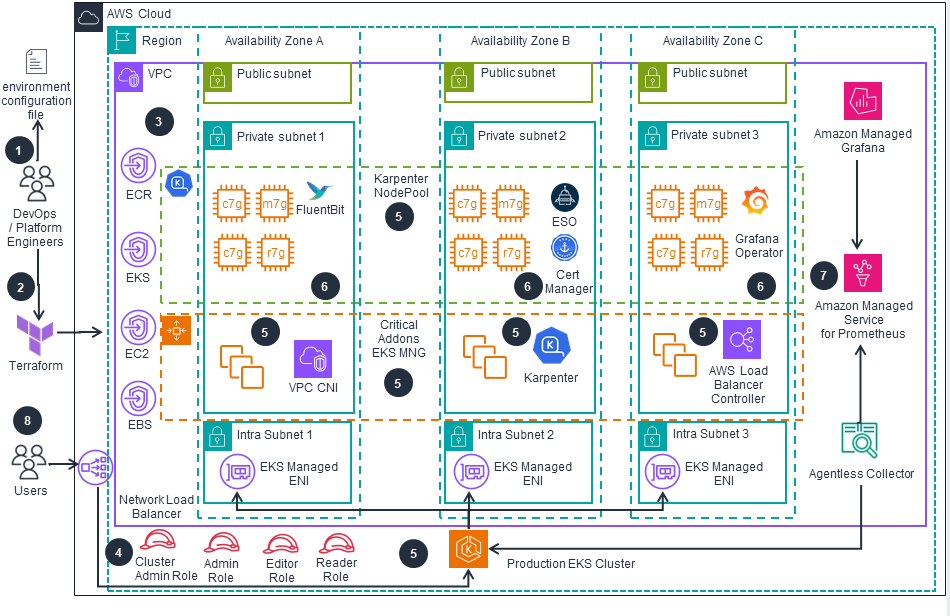

This reference architecture demonstrates how to provision an Amazon Elastic Kubernetes Service (Amazon EKS) cluster with a best-practice configuration and the critical add-ons that AI workloads require.

Deploy and run model inference and agentic AI applications on the EKS cluster

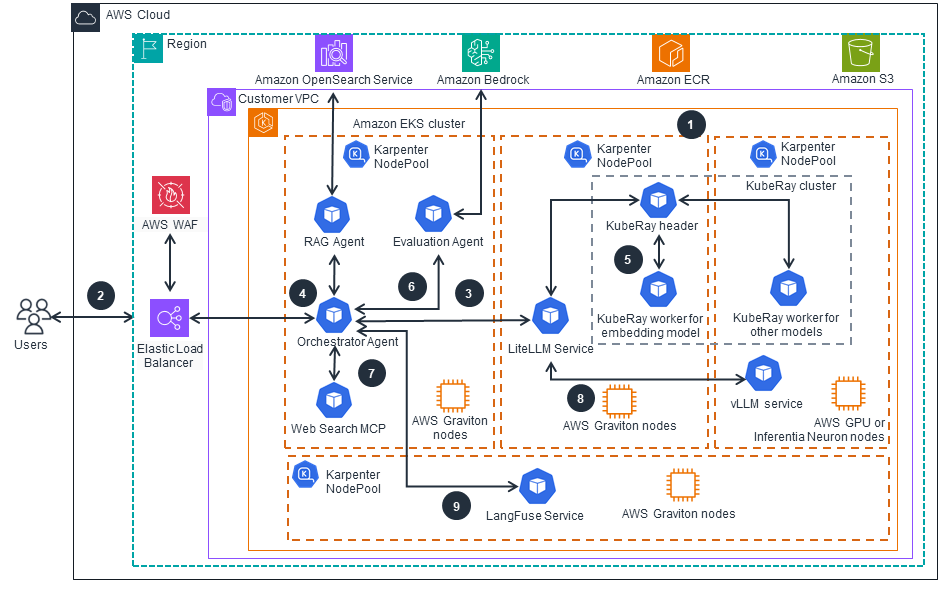

This reference architecture demonstrates how to deploy ML models, a Model Context Protocol (MCP) server, and an agent on the EKS cluster. It also shows how to expose the models through a unified model inference API gateway and how to implement agentic AI capabilities that let models interact with external tools and APIs.
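To illustrate the two message flows an agent typically uses here, the sketch below builds, without sending, a chat request to a model gateway and an MCP tool-call message. It assumes the gateway exposes an OpenAI-compatible `/v1/chat/completions` endpoint; the page does not confirm this. `GATEWAY_URL`, the model name, and the `get_weather` tool are hypothetical. The MCP message follows the protocol's JSON-RPC 2.0 `tools/call` shape.

```python
import json
import urllib.request

# Hypothetical in-cluster gateway address; assumes an OpenAI-compatible API.
GATEWAY_URL = "http://model-gateway.example.internal/v1/chat/completions"


def chat_request(model: str, messages: list[dict], api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request to the gateway."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        GATEWAY_URL,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
    )


def mcp_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


if __name__ == "__main__":
    req = chat_request("my-model", [{"role": "user", "content": "Weather in Seattle?"}], "token")
    print(req.get_method(), req.full_url)
    # If the model decides to use a tool, the agent forwards a call like this to the MCP server.
    print(mcp_tool_call(1, "get_weather", {"city": "Seattle"}))
```

Sending the request would use `urllib.request.urlopen(req)` (or any HTTP client). Routing all model traffic through one gateway keeps authentication and model selection in a single place.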

Deploy with confidence

Everything you need to launch this Guidance in your account is right here

We'll walk you through it

Dive deep into the implementation guide for additional customization options and service configurations to tailor to your specific needs.

Let's make it happen

Ready to deploy? Review the sample code on GitHub for detailed deployment instructions to deploy as-is or customize to fit your needs.

Disclaimer

The sample code; software libraries; command line tools; proofs of concept; templates; or other related technology (including any of the foregoing that are provided by our personnel) is provided to you as AWS Content under the AWS Customer Agreement, or the relevant written agreement between you and AWS (whichever applies). You should not use this AWS Content in your production accounts, or on production or other critical data. You are responsible for testing, securing, and optimizing the AWS Content, such as sample code, as appropriate for production grade use based on your specific quality control practices and standards. Deploying AWS Content may incur AWS charges for creating or using AWS chargeable resources, such as running Amazon EC2 instances or using Amazon S3 storage.
