- IBC 2025 Demo Showcase

Generative AI: Apply generative AI and agentic AI to address the most complex M&E challenges

Enhance viewing experiences, streamline operations, and optimize advertising and monetization opportunities using AWS’s generative AI tools, including Amazon Bedrock, Amazon Nova foundation models, and Amazon Bedrock AgentCore.

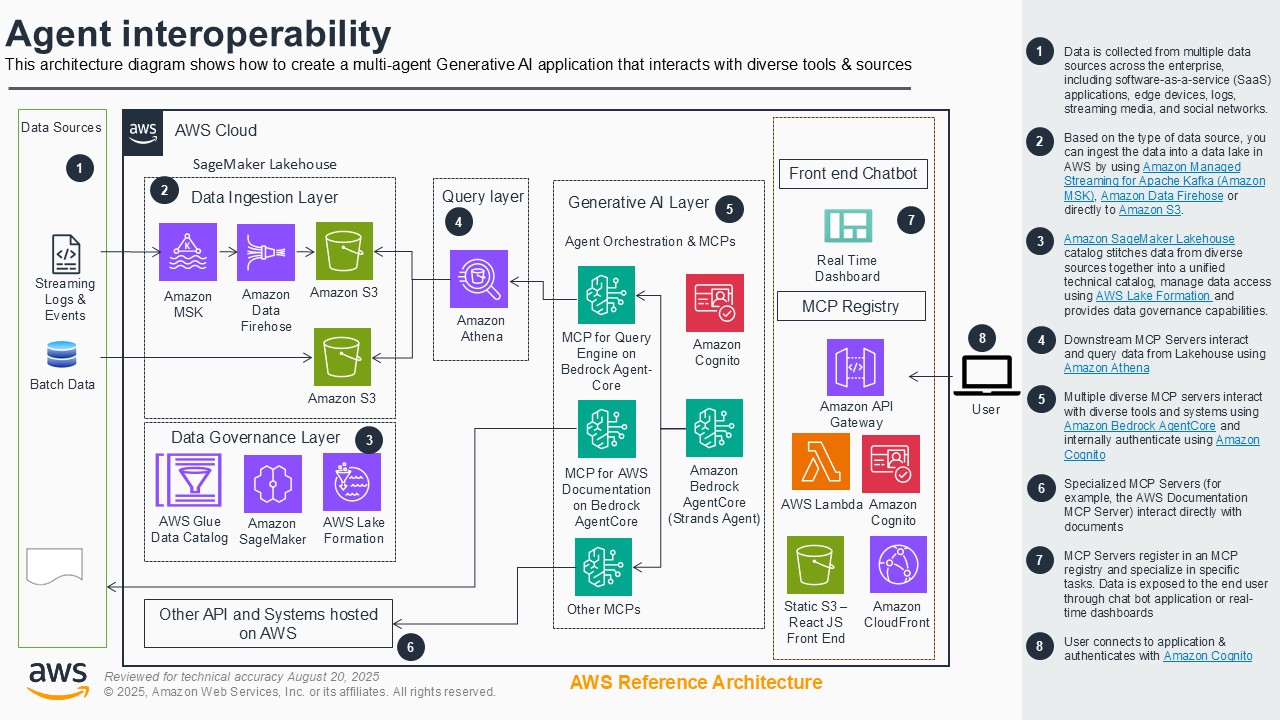

Agent interoperability

AI developers and organizations need standardized protocols to connect AI agents with tools, data sources, and other agents. The agent interoperability demonstration implements the Model Context Protocol (MCP), an open protocol that standardizes how applications provide context to large language models (LLMs). MCP enables two-way JSON-RPC communication, dynamic tool discovery, and real-time context management for complex workflows. Powered by Amazon Bedrock, the demonstration features a server registry for managing media tools at scale and integrates with platforms such as Blender and GIMP. Teams can use it to streamline agent ecosystems, accelerate AI application development, and simplify cross-platform collaboration by turning fragmented systems into interoperable AI networks.
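To make the JSON-RPC exchange and tool discovery more concrete, here is a minimal sketch of how a server might answer MCP-style `tools/list` and `tools/call` requests. It is not the demonstration's implementation: the registry contents, the tool names (`render_scene`, `resize_image`), and the `handle_request` helper are hypothetical, and a real server would use an MCP SDK and a proper transport rather than plain strings.

```python
import json

# Hypothetical in-memory tool registry; names and schemas are illustrative only.
TOOLS = {
    "render_scene": {
        "description": "Render a scene file to an image (placeholder).",
        "inputSchema": {
            "type": "object",
            "properties": {"scene_path": {"type": "string"}},
            "required": ["scene_path"],
        },
    },
    "resize_image": {
        "description": "Resize an image to a target width (placeholder).",
        "inputSchema": {
            "type": "object",
            "properties": {"width": {"type": "integer"}},
            "required": ["width"],
        },
    },
}


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request with a JSON-RPC 2.0 response."""
    request = json.loads(raw)
    response = {"jsonrpc": "2.0", "id": request.get("id")}
    method = request.get("method")

    if method == "tools/list":
        # Dynamic tool discovery: the client learns what is available at runtime.
        response["result"] = {
            "tools": [{"name": name, **spec} for name, spec in TOOLS.items()]
        }
    elif method == "tools/call":
        params = request.get("params", {})
        name = params.get("name")
        if name not in TOOLS:
            response["error"] = {"code": -32602, "message": f"Unknown tool: {name}"}
        else:
            # A real server would run the tool here; this sketch only echoes the call.
            args = json.dumps(params.get("arguments", {}), sort_keys=True)
            response["result"] = {
                "content": [{"type": "text", "text": f"called {name} with {args}"}],
                "isError": False,
            }
    else:
        response["error"] = {"code": -32601, "message": "Method not found"}

    return json.dumps(response)


if __name__ == "__main__":
    listing = handle_request(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
    print(listing)
```

A client would typically send `tools/list` once to discover what a server offers, then issue `tools/call` requests with a tool `name` and an `arguments` object that matches the advertised `inputSchema`.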

Human-agent collaboration

Media companies need efficient ways to automate content creation while maintaining editorial control. The human-agent collaboration demonstration enables AI-powered highlight reel and trailer generation with human oversight, allowing operators to configure, supervise, and refine automated workflows through intuitive interfaces. Powered by Amazon Bedrock, this demo shows how media teams can use a combination of AI and human operators to accelerate production timelines, reduce repetitive tasks, and maintain creative quality in content operations.

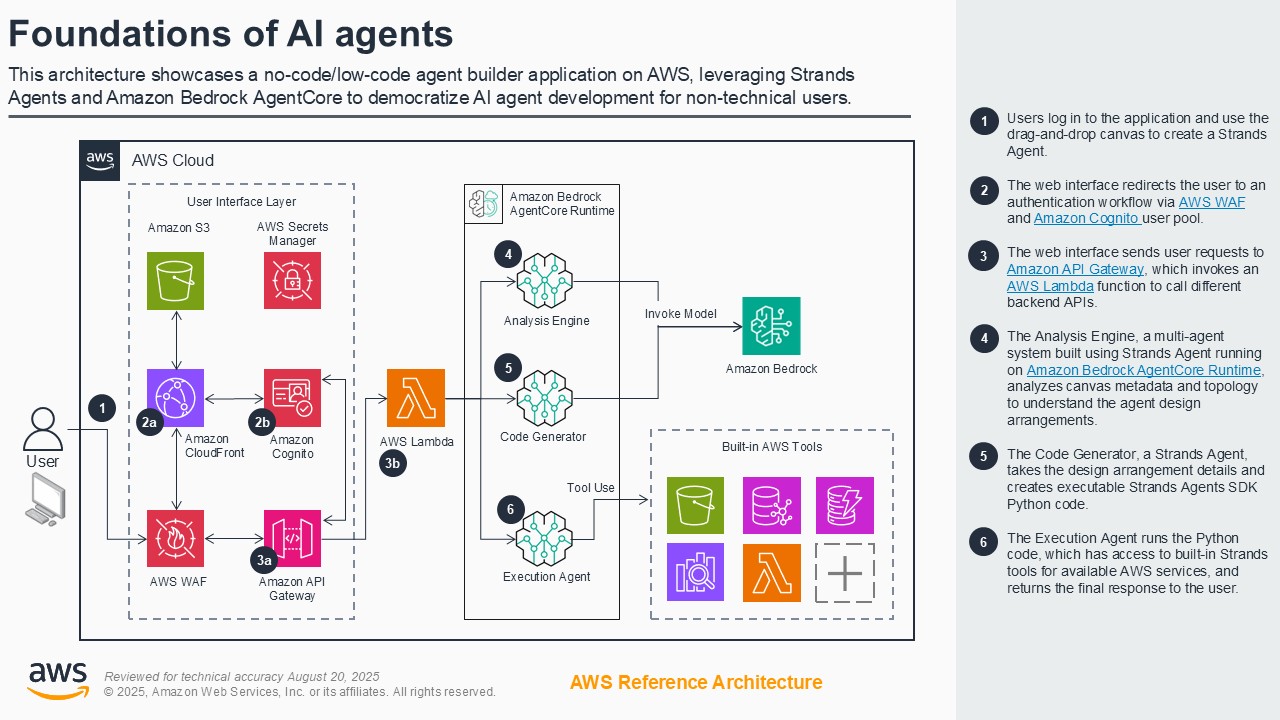

Foundations of AI agents

Developers and media companies need intuitive ways to build and deploy AI agent systems. The foundations of AI agents demonstration provides a visual drag-and-drop interface for creating multi-agent workflows using the Strands Agents SDK. It features AI-powered topology analysis, pattern suggestions, and automatic code generation for production-ready agent systems. Powered by Amazon Bedrock and deployable on AWS, this demo shows how organizations can rapidly prototype, customize, and deploy sophisticated AI agents without complex coding, accelerating time-to-market for intelligent automation solutions.

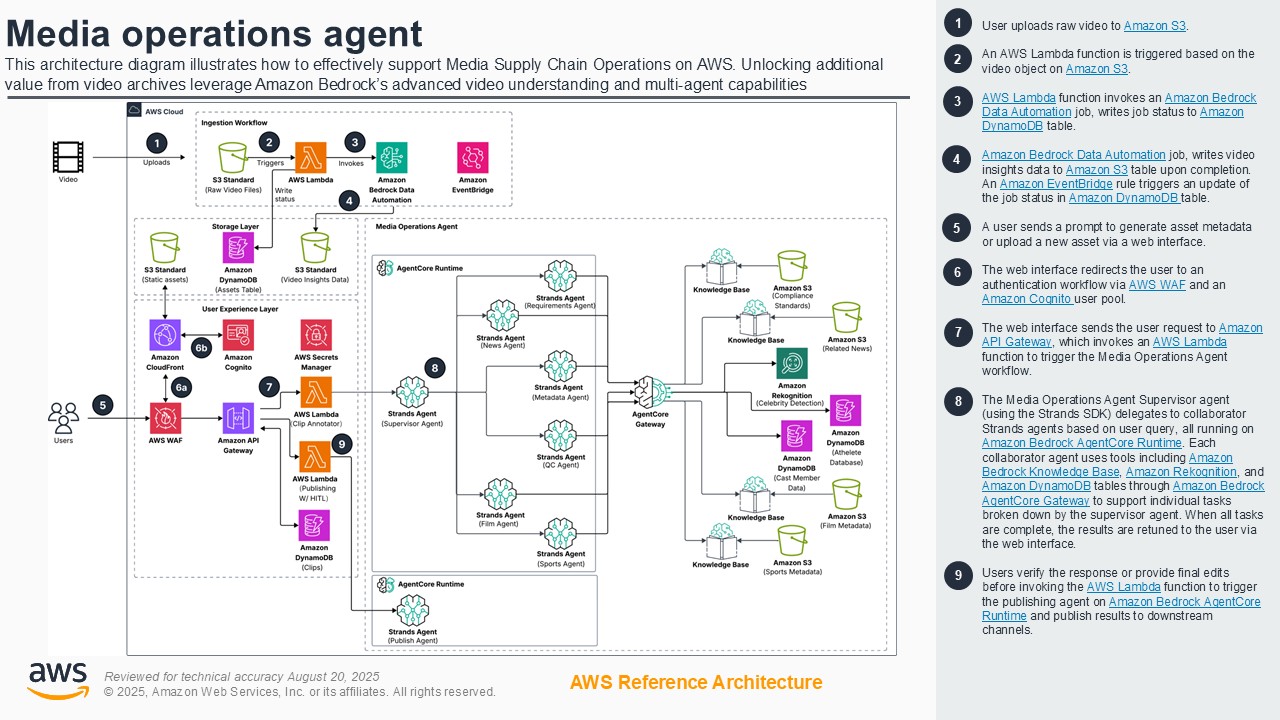

Media operations agent

Media companies need efficient ways to prepare video content for multiple distribution channels. The media operations agent demonstration showcases a multi-agent solution that automates video asset preparation and distribution workflows. Using the Strands Agents framework, specialized agents handle metadata generation, compliance checks, and channel-specific formatting for news and media content. Powered by Amazon Bedrock, the demo shows how broadcasters and content distributors can reduce manual processing time, ensure consistency across platforms, and accelerate content delivery to audiences.

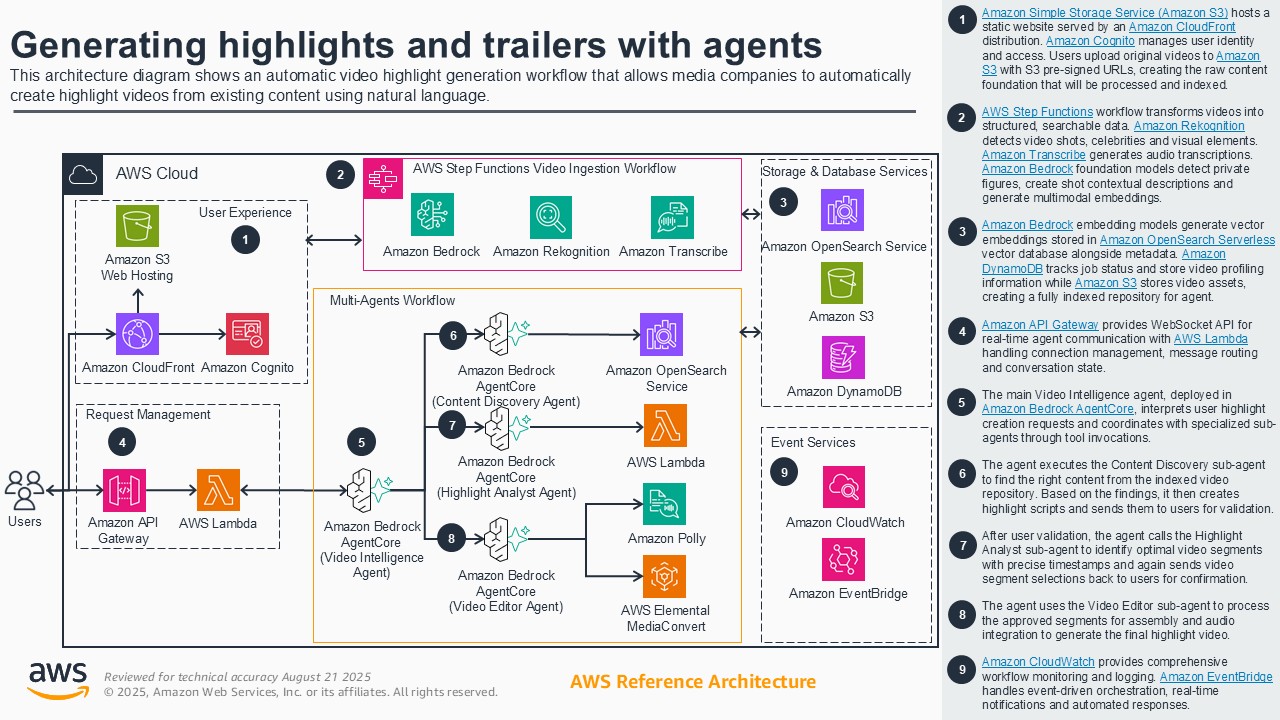

Generating highlights and trailers with agents

Media companies and content marketers need faster ways to create promotional videos from existing content. The generating highlights and trailers with agents demonstration uses natural language prompts to automatically generate trailers, promos, and highlight reels. Specialized agents orchestrate content extraction, scene selection, and assembly across news, episodic, and sports content while incorporating human validation at creative decision points. Powered by Amazon Bedrock, the demo shows how teams can transform resource-intensive editing into scalable operations, accelerating campaign creation and maximizing audience engagement.

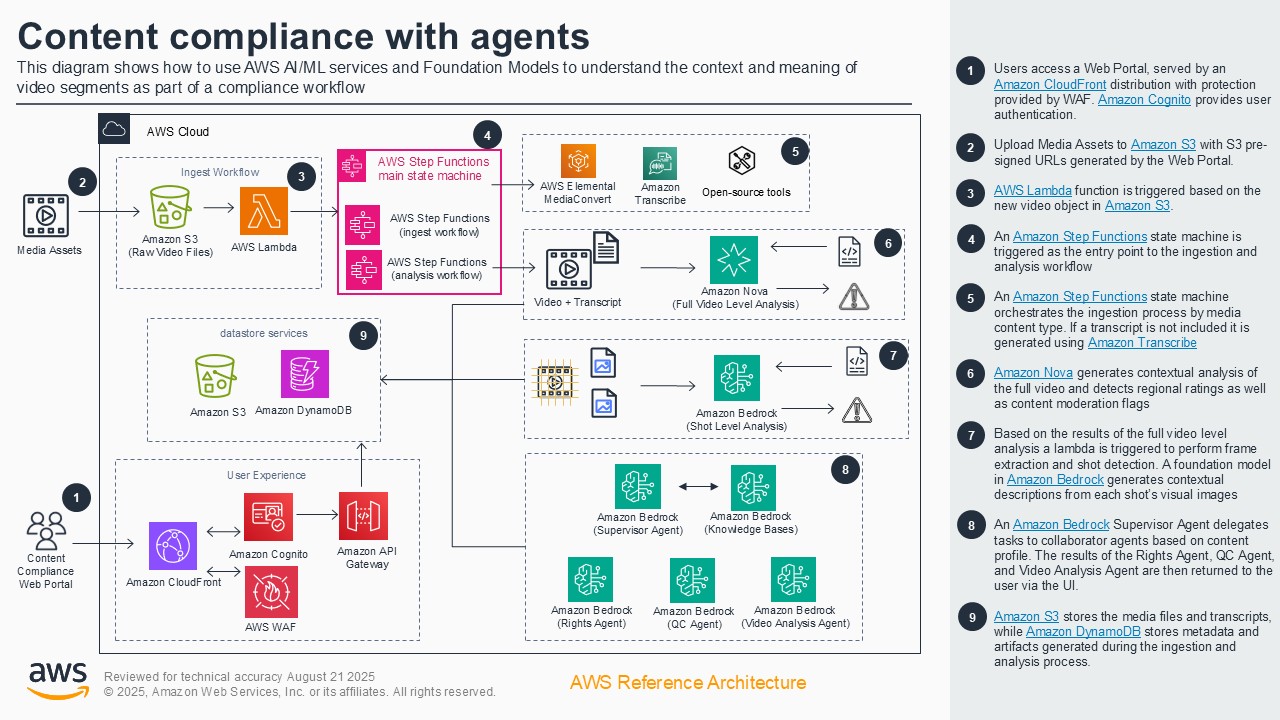

Content compliance with agents

Broadcasters and streaming platforms face challenges with manual content compliance reviews. The content compliance with agents demonstration applies agentic AI to analyze media content against compliance standards. Powered by Amazon Bedrock and Amazon Nova models, the demonstration shows how agentic AI-based compliance processing reduces manual effort, saves time, and keeps reviews consistent. It also highlights how agentic AI benefits both small and large content creators by enabling efficient scaling and turning compliance review into a streamlined, cost-effective process.

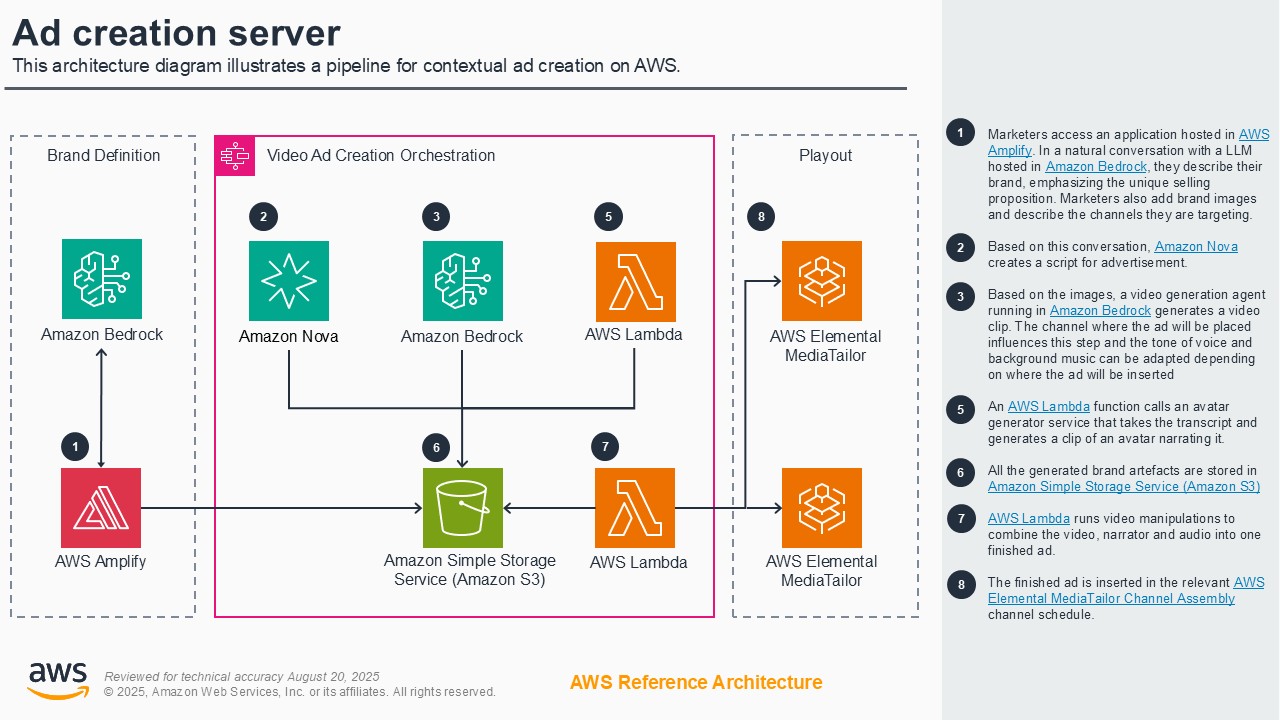

Ad creation server

Media companies and advertising platforms need accessible ways to help small businesses create television advertisements. The ad creation server demonstration shows business owners how to generate professional ads through natural language chat, simply by describing their brand. Publishers provide placement context, allowing ads to be tailored to specific slots at minimal cost. Powered by Amazon Bedrock and Amazon Nova models, the workflows in the demo enable media companies to tap into the small and medium business (SMB) market, democratizing TV advertising while creating new revenue streams from customers that previously focused their ad spend elsewhere.

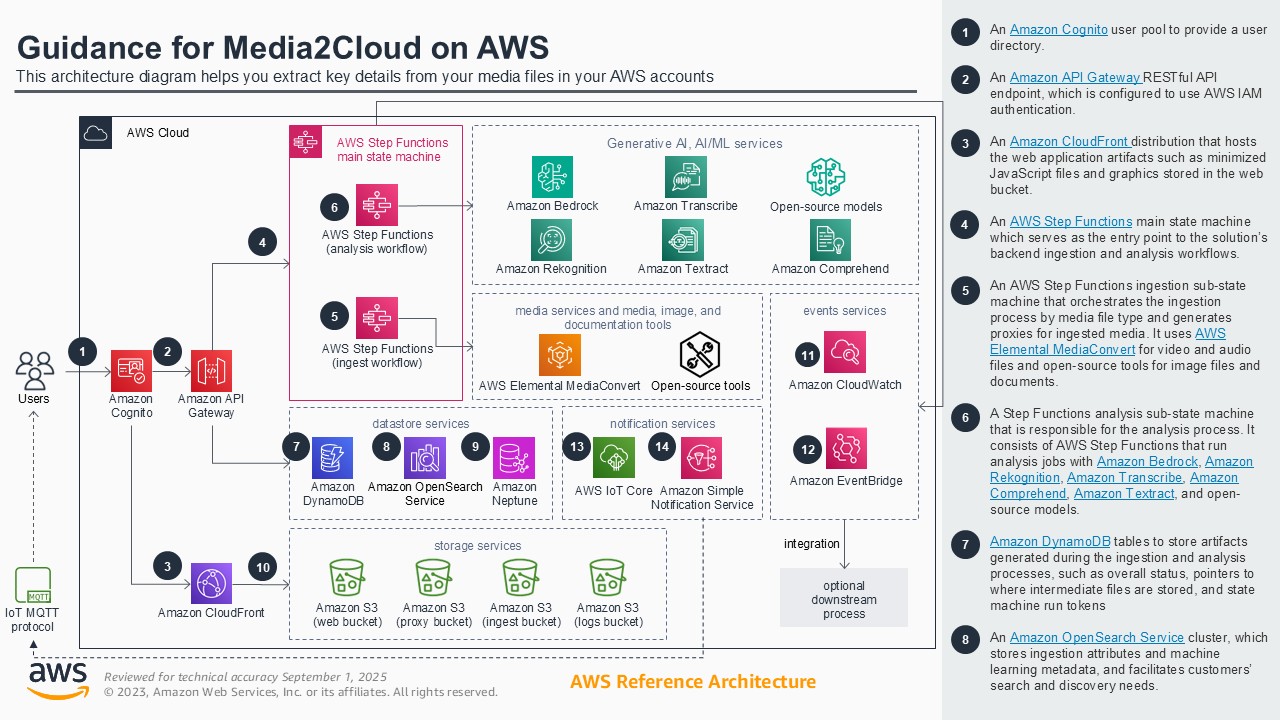

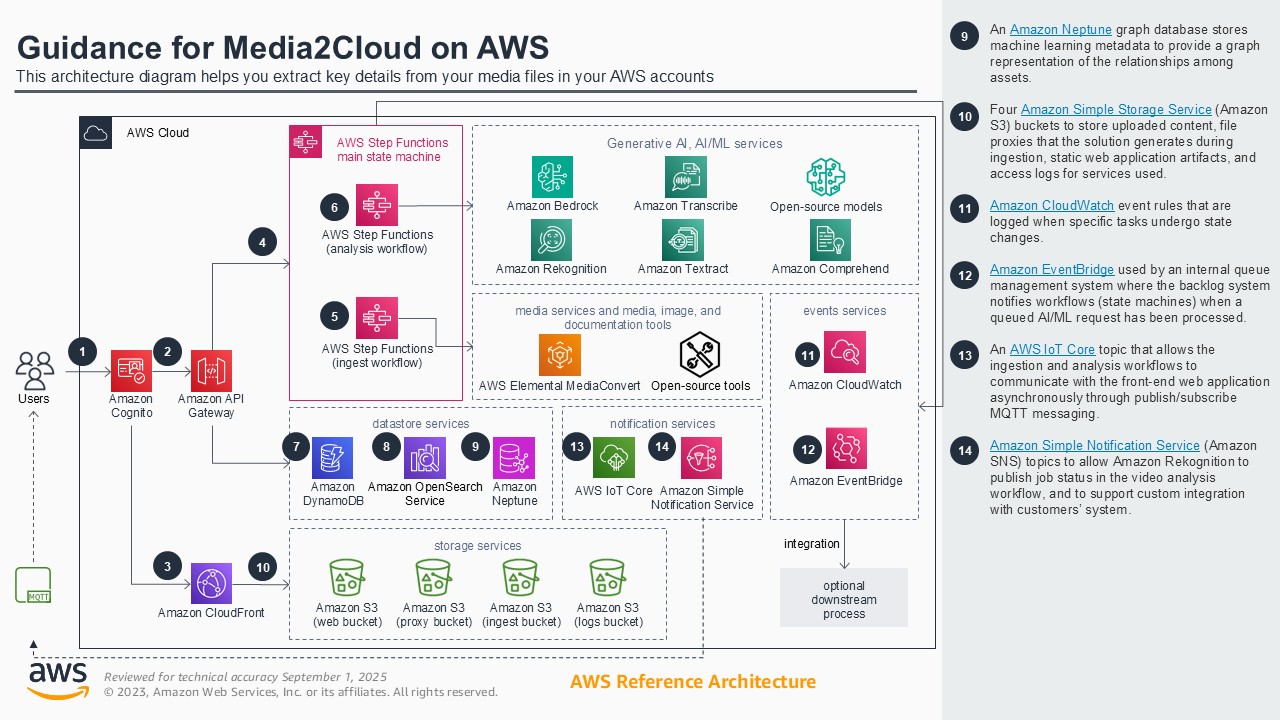

Media2Cloud V5

Media companies need efficient video analysis to detect ads, map faces, generate transcripts, and manage content libraries. The Media2Cloud V5 demonstration uses vector embeddings to automate frame-level metadata extraction, ad-break identification, and voice-to-face mapping across video archives. Powered by Amazon Bedrock, Amazon Nova models, Amazon Rekognition, and Amazon Transcribe, it enables cost-effective detection of segments, credits, and reusable clips while maintaining accuracy. Media2Cloud V5 transforms unstructured video into searchable assets, helping organizations streamline content workflows, reduce manual tagging efforts, and unlock revenue opportunities through faster discovery of archival material.
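As a rough illustration of how vector embeddings can support segment detection, the sketch below compares consecutive frame embeddings with cosine similarity and flags a boundary wherever similarity drops below a threshold. This is an assumed, simplified technique and not a description of how Media2Cloud V5 works internally: the three-dimensional toy vectors, the `find_boundaries` helper, and the `0.8` threshold are all hypothetical, and real embeddings from a model would have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_boundaries(embeddings, threshold=0.8):
    """Return each index i where frame i looks unlike frame i - 1."""
    return [
        i
        for i in range(1, len(embeddings))
        if cosine_similarity(embeddings[i - 1], embeddings[i]) < threshold
    ]


# Toy frame embeddings (hypothetical): two similar frames, then a visual change.
frames = [
    [1.0, 0.0, 0.1],
    [0.9, 0.1, 0.1],
    [0.0, 1.0, 0.0],
    [0.1, 0.9, 0.0],
]

if __name__ == "__main__":
    print(find_boundaries(frames))  # prints [2]
```

The same similarity function could, under similar assumptions, rank archived clips against a query embedding to support search over a content library.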

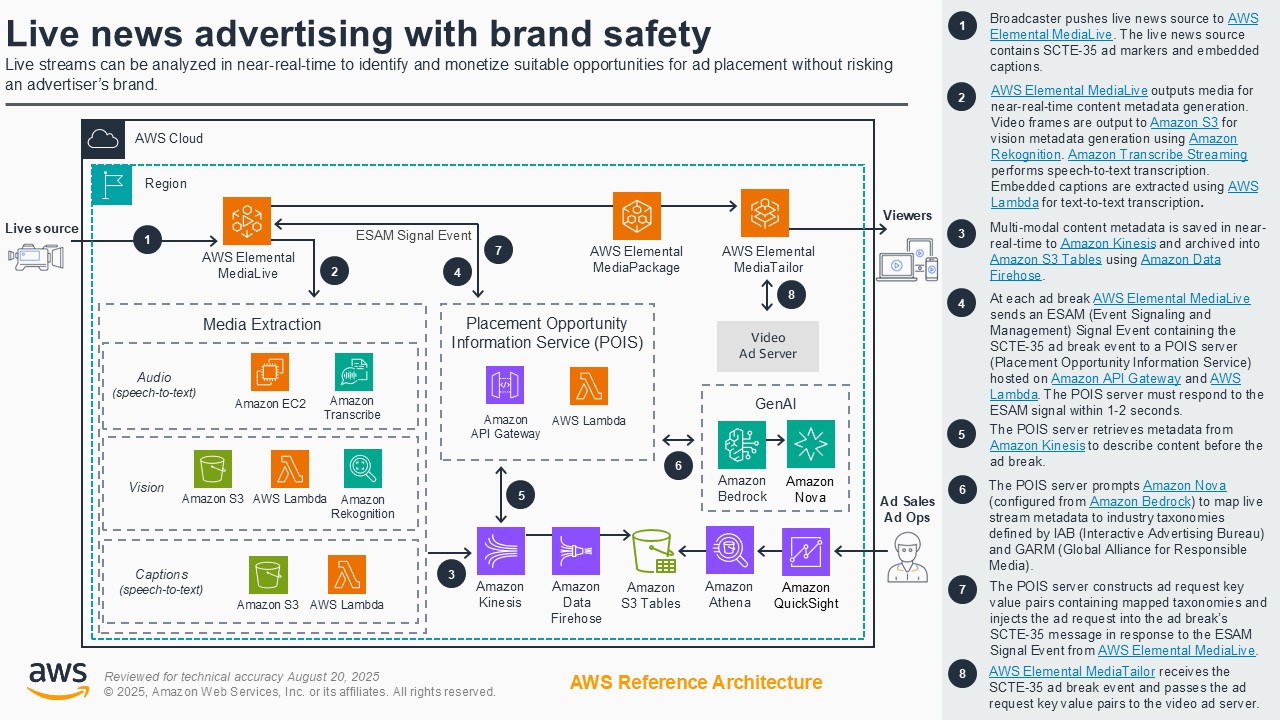

Live news advertising with brand safety

Media companies and broadcasters need to monetize news content while avoiding poorly timed ad breaks and potentially inappropriate ad placements during a broadcast or live stream. The live news advertising with brand safety demonstration analyzes live streams in near real time and maps content to IAB and GARM taxonomies to create video ad server signals that indicate ad break suitability before programmatic ad insertion. Powered by Amazon AI/ML services, Amazon Bedrock, AWS Elemental Media Services, Amazon S3 Tables, and Amazon QuickSight, the demonstration shows how media organizations can monetize unrealized ad inventory by giving advertisers a way to ensure brand safety.
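The step from classified content to an ad server signal can be sketched as follows. This is a minimal, assumed model and not the demonstration's logic: the `SegmentLabel` type, the `ad_break_signal` function, the category strings, and the output field names are hypothetical, and the four risk tiers are loosely modeled on the low, medium, high, and floor levels used in brand suitability frameworks.

```python
from dataclasses import dataclass

# Assumed ordering of risk tiers, from most to least suitable for advertising.
RISK_ORDER = {"low": 0, "medium": 1, "high": 2, "floor": 3}
RISK_NAME = {rank: name for name, rank in RISK_ORDER.items()}


@dataclass
class SegmentLabel:
    category: str  # hypothetical taxonomy category detected in the segment
    risk: str      # one of the keys in RISK_ORDER


def ad_break_signal(labels, max_risk="medium"):
    """Summarize a segment's labels into a suitability signal for an ad break."""
    worst = max((RISK_ORDER[label.risk] for label in labels), default=0)
    return {
        "suitable": worst <= RISK_ORDER[max_risk],
        "worst_risk": RISK_NAME[worst],
        "categories": sorted(label.category for label in labels),
    }


if __name__ == "__main__":
    segment = [SegmentLabel("weather", "low"), SegmentLabel("armed conflict", "high")]
    print(ad_break_signal(segment))
```

Taking the worst risk across all labels is a deliberately conservative choice: a single high-risk topic in a segment is enough to mark the upcoming break as unsuitable unless the advertiser's tolerance (`max_risk`) allows it.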

Disclaimer

References to third-party services or organizations on this page do not imply an endorsement, sponsorship, or affiliation between Amazon or AWS and the third party. Guidance from AWS is a technical starting point, and you can customize your integration with third-party services when you deploy them.

The sample code; software libraries; command line tools; proofs of concept; templates; or other related technology (including any of the foregoing that are provided by our personnel) is provided to you as AWS Content under the AWS Customer Agreement, or the relevant written agreement between you and AWS (whichever applies). You should not use this AWS Content in your production accounts, or on production or other critical data. You are responsible for testing, securing, and optimizing the AWS Content, such as sample code, as appropriate for production grade use based on your specific quality control practices and standards. Deploying AWS Content may incur AWS charges for creating or using AWS chargeable resources, such as running Amazon EC2 instances or using Amazon S3 storage.
