Guidance for Multi-Region Serverless Batch Applications on AWS

Overview

How it works

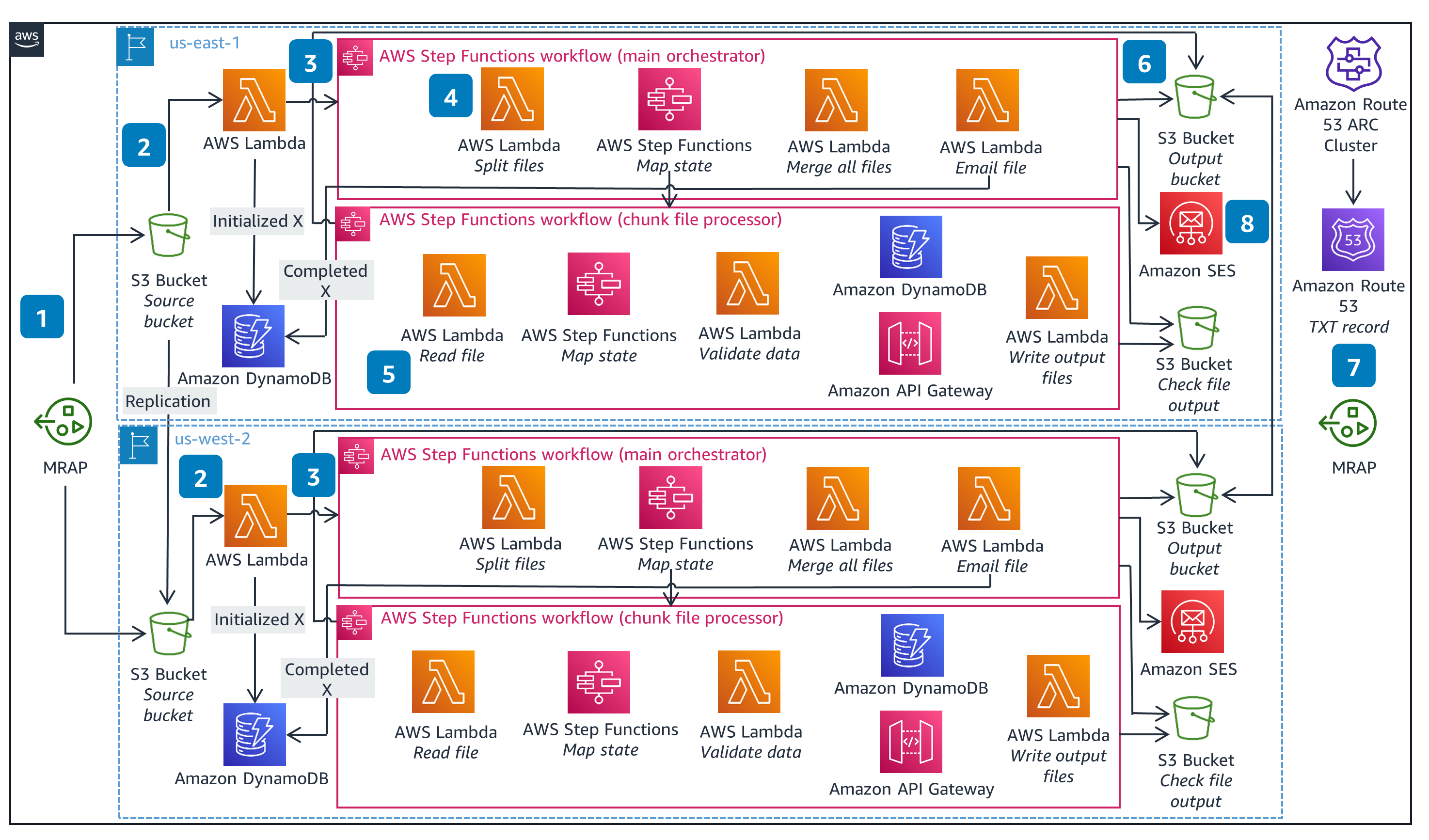

Primary Region

This architecture shows the multi-Region, event-driven workload when running in the primary Region.

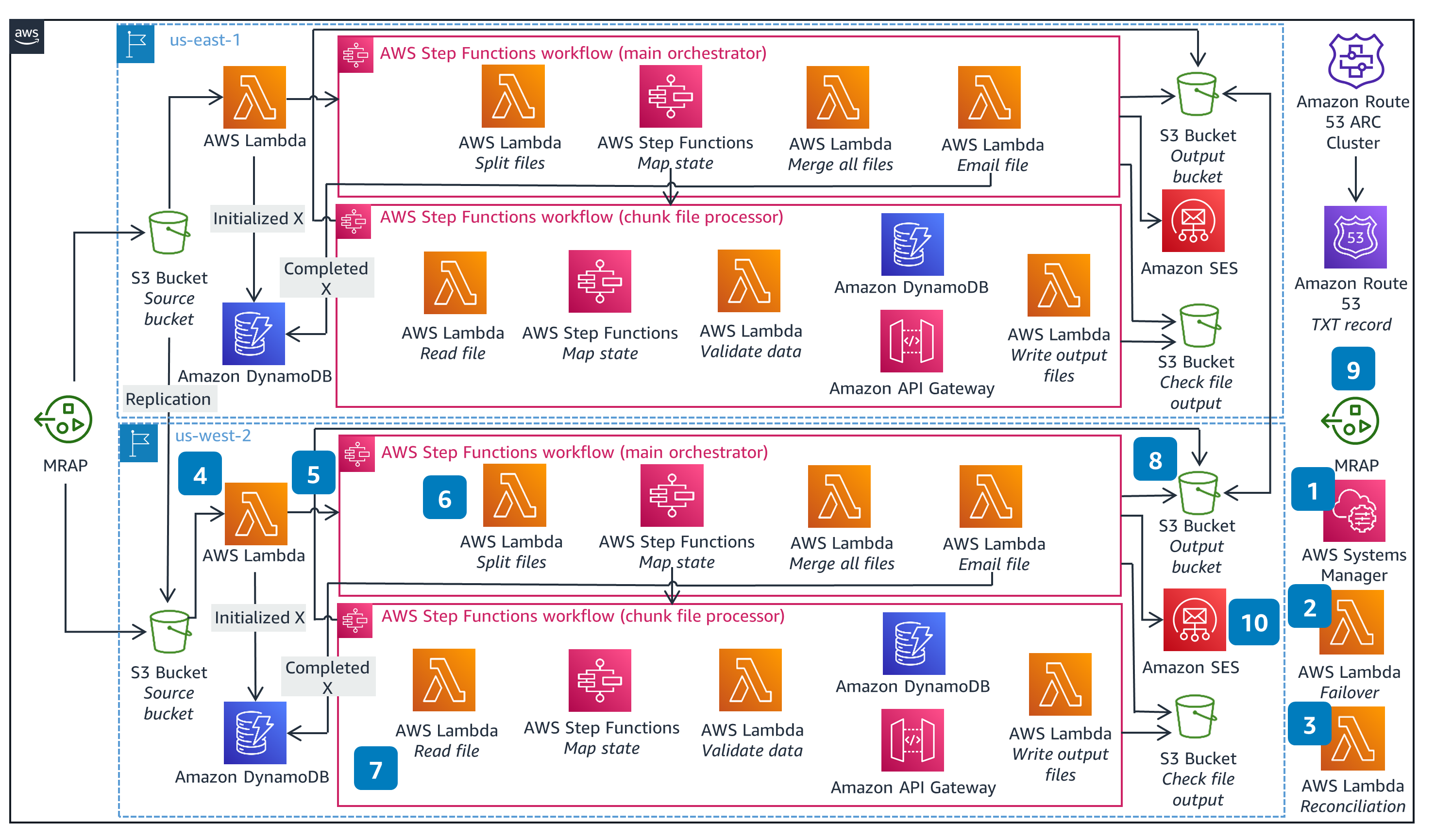

Standby Region

This architecture shows the multi-Region, event-driven workload when failing over to the standby Region.
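The two diagrams differ in one routing decision. New batch jobs go to the primary Region while it is healthy and to the standby Region otherwise. The Python sketch below shows that decision under stated assumptions. The Region names, the `RegionPair` type, and the `is_healthy` callback are all hypothetical. The Guidance itself may trigger failover differently, for example through an operator action rather than an automatic health check.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical Region names: substitute the Regions you actually deploy to.
PRIMARY_REGION = "us-east-1"
STANDBY_REGION = "us-west-2"


@dataclass(frozen=True)
class RegionPair:
    """The primary and standby Regions of a multi-Region deployment."""
    primary: str
    standby: str


def select_active_region(pair: RegionPair, is_healthy: Callable[[str], bool]) -> str:
    """Return the Region that should receive new batch jobs.

    The primary Region is preferred; the standby Region is used only when the
    primary is reported unhealthy. If neither is healthy, raise an error
    instead of silently dropping work.
    """
    if is_healthy(pair.primary):
        return pair.primary
    if is_healthy(pair.standby):
        return pair.standby
    raise RuntimeError("no healthy Region available for batch processing")


# Example: the primary Region's (hypothetical) health check is failing.
health = {PRIMARY_REGION: False, STANDBY_REGION: True}
active = select_active_region(
    RegionPair(PRIMARY_REGION, STANDBY_REGION), lambda region: health[region]
)
print(active)  # us-west-2
```

Raising when both Regions are unhealthy is a deliberate choice in this sketch. It surfaces the outage to the caller instead of routing jobs to a Region that cannot process them.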

Well-Architected Pillars

The architecture diagrams above are an example of a solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

Operational Excellence

You can deploy this Guidance with infrastructure as code (IaC), which makes it straightforward to modify. We also provide a dashboard that helps you understand performance and iterate on the Guidance until you achieve your desired performance characteristics.
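The following Python sketch illustrates the kind of dashboard mentioned above. It builds an Amazon CloudWatch dashboard body that charts invocations, errors, and duration for a set of Lambda functions. The helper `lambda_dashboard_body`, the function names, the Region, the layout, and the chosen metrics are all assumptions. The dashboard that ships with the Guidance may track different metrics.

```python
import json
from typing import List


def lambda_dashboard_body(function_names: List[str], region: str) -> str:
    """Return a CloudWatch dashboard body (a JSON string) with one metric
    widget per Lambda function, laid out two widgets per row."""
    widgets = []
    for i, name in enumerate(function_names):
        widgets.append({
            "type": "metric",
            "x": (i % 2) * 12,   # left or right half of the 24-unit-wide grid
            "y": (i // 2) * 6,   # a new row every two widgets
            "width": 12,
            "height": 6,
            "properties": {
                "title": name,
                "region": region,
                "view": "timeSeries",
                "stat": "Sum",       # default statistic for every metric below
                "period": 300,       # seconds
                "metrics": [
                    ["AWS/Lambda", "Invocations", "FunctionName", name],
                    ["AWS/Lambda", "Errors", "FunctionName", name],
                    # Override the default statistic for duration.
                    ["AWS/Lambda", "Duration", "FunctionName", name, {"stat": "Average"}],
                ],
            },
        })
    return json.dumps({"widgets": widgets})
```

You could pass the returned string as the `DashboardBody` argument of the CloudWatch `PutDashboard` API, for example through boto3's `put_dashboard`. You could also embed the same JSON in your IaC template so that the dashboard is versioned with the rest of the stack.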

Security

We implemented least-privilege access on the AWS Identity and Access Management (IAM) roles attached to the AWS Lambda functions, so each role can access only the resources it needs. You can use presigned Amazon S3 URLs to grant temporary access to objects in S3 buckets. In this Guidance, these URLs expire after sixty minutes, which protects resources from unrestricted access.
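The following minimal Python sketch illustrates both controls. The first helper builds a least-privilege IAM policy document. The second builds the arguments for a presigned GET URL that expires after sixty minutes. It assumes a hypothetical batch function that only reads from an input bucket and only writes to an output bucket. The bucket names and helper names are illustrative and are not taken from the Guidance's code.

```python
import json

# The sixty-minute expiry described above; boto3's ExpiresIn is in seconds.
PRESIGNED_URL_TTL_SECONDS = 60 * 60


def least_privilege_policy(input_bucket: str, output_bucket: str) -> dict:
    """IAM policy for a hypothetical batch Lambda function that may only read
    objects from one bucket and only write objects to another."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{input_bucket}/*"],
            },
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{output_bucket}/*"],
            },
        ],
    }


def presigned_get_kwargs(bucket: str, key: str) -> dict:
    """Keyword arguments for the boto3 S3 client's generate_presigned_url().
    The URL it returns grants GET access to a single object and expires
    after PRESIGNED_URL_TTL_SECONDS."""
    return {
        "ClientMethod": "get_object",
        "Params": {"Bucket": bucket, "Key": key},
        "ExpiresIn": PRESIGNED_URL_TTL_SECONDS,
    }


print(json.dumps(least_privilege_policy("example-input", "example-output"), indent=2))
# With boto3 installed and AWS credentials configured:
#   import boto3
#   url = boto3.client("s3").generate_presigned_url(
#       **presigned_get_kwargs("example-output", "results/summary.csv"))
```

A URL signed with temporary credentials can stop working before `ExpiresIn` elapses if those credentials expire first. A Lambda execution role's credentials are one example of temporary credentials.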

Reliability

This Guidance replicates data across Regions to provide full redundancy in the standby Region. This multi-Region approach lets you fail over to another Region in disaster recovery scenarios. Within a Region, you can use retry logic and decoupled processing.
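Within a Region, a common way to implement retry logic is exponential backoff with jitter. Transient failures are retried, and the random delay keeps many workers from retrying in lockstep. The following minimal Python sketch assumes the operation is safe to repeat (idempotent). The name `retry_with_backoff` and its default delays are illustrative and are not taken from the Guidance.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.2,
    max_delay: float = 5.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(), retrying failures with exponential backoff and full jitter.

    Before retry n (1-based), sleep for a random time in
    [0, min(max_delay, base_delay * 2 ** (n - 1))] seconds. Once max_attempts
    calls have failed, the last exception is re-raised to the caller.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter keeps the helper testable without real delays. Where a managed service already retries for you, prefer that mechanism over adding another retry layer. For example, Lambda retries failed asynchronous invocations.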

Performance Efficiency

We chose the services in this Guidance for their ability to reduce cost and complexity and to improve performance. You can test the Guidance with the provided example files and adapt the processing steps to your specific use case.

Cost Optimization

This Guidance uses serverless services, so you pay only for the resources you consume during batch processing. As a result, your costs are directly tied to the number of items processed in each batch job.
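To see why cost tracks the number of processed items, consider how Lambda is billed. It combines a per-request charge with a compute charge measured in GB-seconds (memory multiplied by duration). The following Python sketch estimates compute cost under two assumptions: each item is handled by one invocation, and every invocation has the same average duration. The unit prices are placeholders that you supply, not actual AWS prices. The names `UnitPrices` and `estimate_batch_compute_cost` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitPrices:
    """Placeholder unit prices: look up the current prices for your Region."""
    per_request: float    # price per function invocation
    per_gb_second: float  # price per GB-second of compute


def estimate_batch_compute_cost(
    items: int,
    avg_duration_seconds: float,
    memory_mb: int,
    prices: UnitPrices,
) -> float:
    """Estimate the compute cost of one batch job, assuming one invocation per item.

    Both terms scale linearly with `items`, and nothing is billed between jobs,
    so doubling the batch size doubles the estimate.
    """
    gb_seconds = items * avg_duration_seconds * (memory_mb / 1024)
    return items * prices.per_request + gb_seconds * prices.per_gb_second


# Illustrative numbers only (not real prices).
example = estimate_batch_compute_cost(
    1000, 2.0, 512, UnitPrices(per_request=0.001, per_gb_second=0.01)
)
```

With these placeholder prices, 1,000 items at 2 seconds and 512 MB amount to 1,000 GB-seconds. The estimate is 1,000 × 0.001 + 1,000 × 0.01 = 11 currency units. A real estimate would also include other services in the pipeline, such as storage and orchestration.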

Sustainability

The serverless and managed services scale to meet changes in demand, and AWS handles provisioning of the underlying resources. This helps you avoid provisioning unneeded resources.

Deploy with confidence

Ready to deploy? Review the sample code on GitHub for detailed instructions on deploying the Guidance as-is or customizing it to fit your needs.
