Optimizing storage costs using Amazon S3

Save with cost-efficient storage classes and lifecycle management tools

Overview

On-premises storage can be costly and complex, with expensive hardware refresh cycles and data migrations driven by system upgrades. It is also difficult to gain insights when your data is siloed across multiple storage systems.

With cloud storage, you can adjust on the fly, use only the storage you need now, and avoid getting locked into another hardware refresh. Moving to Amazon S3 keeps you agile, reduces costs by eliminating over-provisioning, and provides unlimited scale, while also tearing down data silos so you can gain insights from your data.

With Amazon S3, you can lower your storage costs without sacrificing performance. Amazon S3 lets you take control of costs and continuously optimize your spend while building modern, scalable applications. Amazon S3 storage classes give you the flexibility to manage your costs yourself or have them optimized automatically, by providing different data access levels at corresponding costs, including the lowest-cost storage in the cloud.

Benefits

Automatic savings with S3 Intelligent-Tiering, which optimizes storage costs for you.

S3 storage classes optimize costs and performance for all workloads.

Designed for 11 9s (99.999999999%) of data durability across all storage classes.

Lowest-cost storage in the cloud with S3 Glacier Deep Archive.

Amazon S3 storage classes

Amazon S3 storage classes are purpose-built to provide the lowest-cost storage for different access patterns. They include:

- S3 Standard for general-purpose storage of frequently accessed data

- S3 Intelligent-Tiering to automatically optimize costs for data with unknown or changing access patterns

- S3 Standard-Infrequent Access (S3 Standard-IA) and S3 One Zone-Infrequent Access (S3 One Zone-IA) for long-lived but less frequently accessed data

- S3 Glacier Instant Retrieval for archive data that needs immediate access

- S3 Glacier Flexible Retrieval (formerly S3 Glacier) for archive data that does not require immediate access but needs the flexibility to retrieve large sets of data at no cost

- S3 Glacier Deep Archive for long-term archive and digital preservation at the lowest storage cost in the cloud
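When you upload through the S3 API, each class above is selected with a `StorageClass` identifier. The sketch below maps the class names used on this page to those identifiers, as we understand them from the S3 API reference, and builds keyword arguments for boto3's `put_object`. The helper `put_object_kwargs` and the bucket and key values are hypothetical; the function only builds a dictionary, so it runs without AWS credentials.

```python
# Storage class names used on this page -> StorageClass values accepted by the
# S3 API (for example, boto3's put_object). Verify against the current API reference.
STORAGE_CLASS_IDS = {
    "S3 Standard": "STANDARD",
    "S3 Intelligent-Tiering": "INTELLIGENT_TIERING",
    "S3 Standard-IA": "STANDARD_IA",
    "S3 One Zone-IA": "ONEZONE_IA",
    "S3 Glacier Instant Retrieval": "GLACIER_IR",
    "S3 Glacier Flexible Retrieval": "GLACIER",
    "S3 Glacier Deep Archive": "DEEP_ARCHIVE",
}


def put_object_kwargs(bucket: str, key: str, body: bytes, storage_class: str) -> dict:
    """Build arguments for uploading directly into the named storage class (hypothetical helper)."""
    try:
        class_id = STORAGE_CLASS_IDS[storage_class]
    except KeyError:
        raise ValueError(f"unknown storage class: {storage_class!r}") from None
    return {"Bucket": bucket, "Key": key, "Body": body, "StorageClass": class_id}


kwargs = put_object_kwargs("example-bucket", "scans/img-001.dcm", b"...", "S3 Glacier Instant Retrieval")
print(kwargs["StorageClass"])
# With boto3 installed and credentials configured (not executed here):
#   import boto3
#   boto3.client("s3").put_object(**kwargs)
```

Keeping the mapping in one table makes the class choice a data decision rather than scattered string literals.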

Amazon S3 Intelligent-Tiering

The Amazon S3 Intelligent-Tiering storage class is designed to optimize storage costs by automatically moving data to the most cost-effective access tier when access patterns change. For a small monthly object monitoring and automation charge, S3 Intelligent-Tiering monitors access patterns and automatically moves objects that have not been accessed to lower-cost access tiers. S3 Intelligent-Tiering delivers automatic storage cost savings in three low-latency and high-throughput access tiers. For data that can be accessed asynchronously, you can activate automatic archiving capabilities within the S3 Intelligent-Tiering storage class.

- Automatic access tiers: the Frequent, Infrequent, and Archive Instant Access tiers deliver millisecond latency and high-throughput performance

- The Infrequent Access tier saves up to 40% on storage costs

- The Archive Instant Access tier saves up to 68% on storage costs

- Opt-in asynchronous archive capabilities for objects that become rarely accessed

- Archive Access and Deep Archive Access tiers have the same performance as S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive and save up to 95% for rarely accessed objects

- Designed for durability of 99.999999999% of objects across multiple Availability Zones and for 99.9% availability over a given year

- No operational overhead, no lifecycle charges, no retrieval charges, and no minimum storage duration

- No additional tiering charges apply when objects are moved between access tiers within the S3 Intelligent-Tiering storage class
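How much the "up to" figures above save in practice depends on how much of your data cools down. As a rough sketch, assuming each tier's storage price is exactly the Frequent Access price minus the quoted maximum saving (real prices may differ) and ignoring the monitoring and automation charge, the blended saving for a mix of tiers works out as follows. `blended_savings` and the tier shares are hypothetical.

```python
# Storage price of each automatic tier relative to Frequent Access, derived from the
# "up to" savings quoted above (40% and 68%). Upper bounds, not published prices;
# the monitoring and automation charge is not included.
RELATIVE_PRICE = {
    "frequent": 1.00,
    "infrequent": 1.00 - 0.40,
    "archive_instant": 1.00 - 0.68,
}


def blended_savings(shares: dict) -> float:
    """Return the fractional saving versus keeping all data in Frequent Access.

    `shares` maps tier name -> fraction of stored bytes in that tier (must sum to 1).
    """
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("tier shares must sum to 1")
    relative_cost = sum(RELATIVE_PRICE[tier] * share for tier, share in shares.items())
    return 1.0 - relative_cost


# Half the data stays hot, 30% cools to Infrequent, 20% to Archive Instant Access.
saving = blended_savings({"frequent": 0.5, "infrequent": 0.3, "archive_instant": 0.2})
print(f"{saving:.1%}")  # 25.6%
```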

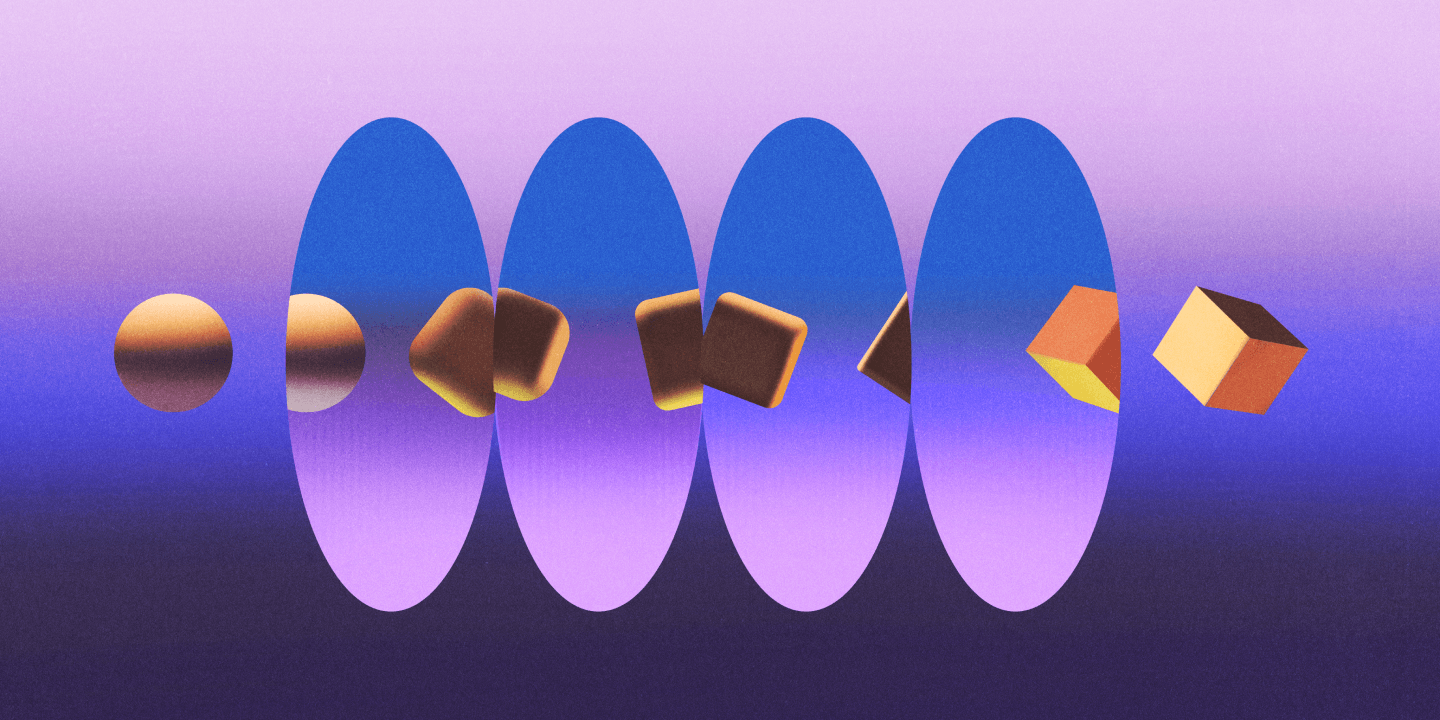

How it works — S3 Intelligent-Tiering

Automatic Access Tiers

The Amazon S3 Intelligent-Tiering storage class is designed to optimize storage costs by automatically moving data to the most cost-effective access tier when access patterns change. S3 Intelligent-Tiering automatically stores objects in three access tiers: one tier optimized for frequent access, a lower-cost tier optimized for infrequent access, and a very-low-cost tier optimized for rarely accessed data. For a small monthly object monitoring and automation charge, S3 Intelligent-Tiering moves objects that have not been accessed for 30 consecutive days to the Infrequent Access tier for savings of 40%, and after 90 days of no access, it moves them to the Archive Instant Access tier for savings of 68%. If the objects are accessed later, S3 Intelligent-Tiering moves them back to the Frequent Access tier. To save even more on rarely accessed storage, you can activate the opt-in asynchronous Archive Access and Deep Archive Access tiers, described below.

There are no retrieval charges in S3 Intelligent-Tiering. S3 Intelligent-Tiering has no minimum billable object size, but objects smaller than 128 KB are not eligible for automatic tiering. These smaller objects can be stored, but they are always charged at Frequent Access tier rates and don't incur the monitoring and automation charge. For more information, see the Amazon S3 Pricing page and the S3 Intelligent-Tiering user guide.
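The automatic tiering rules above are simple enough to model directly. The following sketch is a simplification: it treats the 30- and 90-day thresholds as exact cut-offs, ignores the opt-in archive tiers, and assumes 128 KB means 128 * 1,024 bytes. It returns the tier an object would occupy given its size and how long it has gone without access. `automatic_tier` is a hypothetical helper, not part of any AWS SDK.

```python
MIN_AUTO_TIERING_BYTES = 128 * 1024  # assumption: 128 KB = 128 * 1,024 bytes


def automatic_tier(size_bytes: int, days_since_last_access: int) -> str:
    """Return the automatic access tier an object would occupy (simplified model).

    Any access resets days_since_last_access to 0, which moves the object
    back to the Frequent Access tier.
    """
    if size_bytes < MIN_AUTO_TIERING_BYTES:
        # Small objects are stored at Frequent Access rates and never tiered or monitored.
        return "Frequent Access"
    if days_since_last_access >= 90:
        return "Archive Instant Access"
    if days_since_last_access >= 30:
        return "Infrequent Access"
    return "Frequent Access"


for size, idle_days in [(5_000_000, 10), (5_000_000, 45), (5_000_000, 120), (64 * 1024, 400)]:
    print(size, idle_days, automatic_tier(size, idle_days))
```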

Opt-in asynchronous Deep Archive Access tier

To save more on data that doesn't require immediate retrieval, you can activate the optional asynchronous Deep Archive Access tier in addition to the automatic tiers described above. When it is turned on, objects not accessed for 180 days are moved to the Deep Archive Access tier, with up to 95% in storage cost savings. If the objects are accessed later, S3 Intelligent-Tiering moves them back to the Frequent Access tier. Before you can retrieve an object stored in the optional Deep Archive Access tier, you must first restore a copy using RestoreObject. For information about restoring archived objects, see Restoring Archived Objects.
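Restoring from the Deep Archive Access tier (or from the Archive Access tier, described next) goes through RestoreObject. The sketch below builds the `RestoreRequest` argument for boto3's `restore_object`. It assumes, as we read the S3 user guide, that a restore of an S3 Intelligent-Tiering object names only a retrieval tier and omits `Days`, and that Expedited retrieval is not offered from the Deep Archive Access tier; verify both points against current documentation. `it_restore_request`, the bucket, and the key are hypothetical.

```python
# Retrieval options assumed to be available from each opt-in archive tier.
# The keys match the ArchiveStatus values S3 reports for an object (for example,
# in a HeadObject response), as we understand the API.
IT_RETRIEVAL_TIERS = {
    "ARCHIVE_ACCESS": ("Expedited", "Standard", "Bulk"),
    "DEEP_ARCHIVE_ACCESS": ("Standard", "Bulk"),
}


def it_restore_request(archive_status: str, tier: str = "Standard") -> dict:
    """Build a RestoreRequest for an object in an opt-in archive tier (hypothetical helper)."""
    allowed = IT_RETRIEVAL_TIERS.get(archive_status)
    if allowed is None:
        raise ValueError(f"not an opt-in archive tier: {archive_status!r}")
    if tier not in allowed:
        raise ValueError(f"{tier} retrieval is not available from {archive_status}")
    # Assumed: no "Days" field, because the restored object moves back to the
    # Frequent Access tier, so there is no expiry to set.
    return {"GlacierJobParameters": {"Tier": tier}}


request = it_restore_request("DEEP_ARCHIVE_ACCESS")
# With boto3 (not executed here):
#   boto3.client("s3").restore_object(
#       Bucket="example-bucket", Key="logs/2021.tar", RestoreRequest=request)
```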


Both opt-in asynchronous Archive Access tiers

To save more on data that doesn't require immediate retrieval, you can activate both optional asynchronous tiers, Archive Access and Deep Archive Access, in addition to the automatic tiers described above. When they are turned on, objects not accessed for 90 days are moved directly to the Archive Access tier (bypassing the automatic Archive Instant Access tier) for savings of 71%, and to the Deep Archive Access tier after 180 days, with up to 95% in storage cost savings. If the objects are accessed later, S3 Intelligent-Tiering moves them back to the Frequent Access tier. Before you can retrieve an object stored in the optional Archive Access or Deep Archive Access tier, you must first restore a copy using RestoreObject. For information about restoring archived objects, see Restoring Archived Objects.
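The opt-in tiers are turned on per bucket with an S3 Intelligent-Tiering archive configuration. The sketch below builds the configuration dictionary in the shape that boto3's `put_bucket_intelligent_tiering_configuration` accepts, with `Tierings` entries for `ARCHIVE_ACCESS` and `DEEP_ARCHIVE_ACCESS`. It enforces only the 90- and 180-day minimums described above; the helper name, configuration IDs, and prefix are hypothetical, and the configuration applies only to objects already stored in S3 Intelligent-Tiering.

```python
def intelligent_tiering_config(config_id, prefix=None, archive_days=None, deep_archive_days=None):
    """Build an S3 Intelligent-Tiering archive configuration (hypothetical helper).

    archive_days / deep_archive_days: consecutive days without access before objects
    move to the opt-in Archive Access / Deep Archive Access tier; None leaves it off.
    """
    tierings = []
    if archive_days is not None:
        if archive_days < 90:
            raise ValueError("Archive Access tier requires at least 90 days without access")
        tierings.append({"Days": archive_days, "AccessTier": "ARCHIVE_ACCESS"})
    if deep_archive_days is not None:
        if deep_archive_days < 180:
            raise ValueError("Deep Archive Access tier requires at least 180 days without access")
        tierings.append({"Days": deep_archive_days, "AccessTier": "DEEP_ARCHIVE_ACCESS"})
    if not tierings:
        raise ValueError("enable at least one opt-in archive tier")

    config = {"Id": config_id, "Status": "Enabled", "Tierings": tierings}
    if prefix is not None:
        config["Filter"] = {"Prefix": prefix}  # limit the configuration to one key prefix
    return config


# Both opt-in tiers, using the 90- and 180-day thresholds described above.
both = intelligent_tiering_config("archive-both", archive_days=90, deep_archive_days=180)
# Deep Archive Access tier only, limited to one prefix.
deep_only = intelligent_tiering_config("deep-only", prefix="logs/", deep_archive_days=180)

# With boto3 (not executed here):
#   s3 = boto3.client("s3")
#   s3.put_bucket_intelligent_tiering_configuration(
#       Bucket="example-bucket", Id=both["Id"], IntelligentTieringConfiguration=both)
```

Passing only `deep_archive_days` reproduces the Deep Archive-only setup; passing both thresholds reproduces the two-tier setup.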


Amazon S3 Glacier storage classes

The Amazon S3 Glacier storage classes are purpose-built for data archiving and are designed to provide you with the highest performance, the most retrieval flexibility, and the lowest-cost archive storage in the cloud. You can choose from three archive storage classes optimized for different access patterns and storage durations. For archive data that needs immediate access, such as medical images, news media assets, or genomics data, choose S3 Glacier Instant Retrieval, an archive storage class that delivers the lowest-cost storage with millisecond retrieval. For archive data that does not require immediate access but needs the flexibility to retrieve large sets of data at no cost, such as backup or disaster recovery use cases, choose S3 Glacier Flexible Retrieval (formerly S3 Glacier), with retrieval in minutes or free bulk retrievals in 5-12 hours. To save even more on long-lived archive storage such as compliance archives and digital media preservation, choose S3 Glacier Deep Archive, the lowest-cost storage in the cloud, with data retrieval within 12-48 hours.

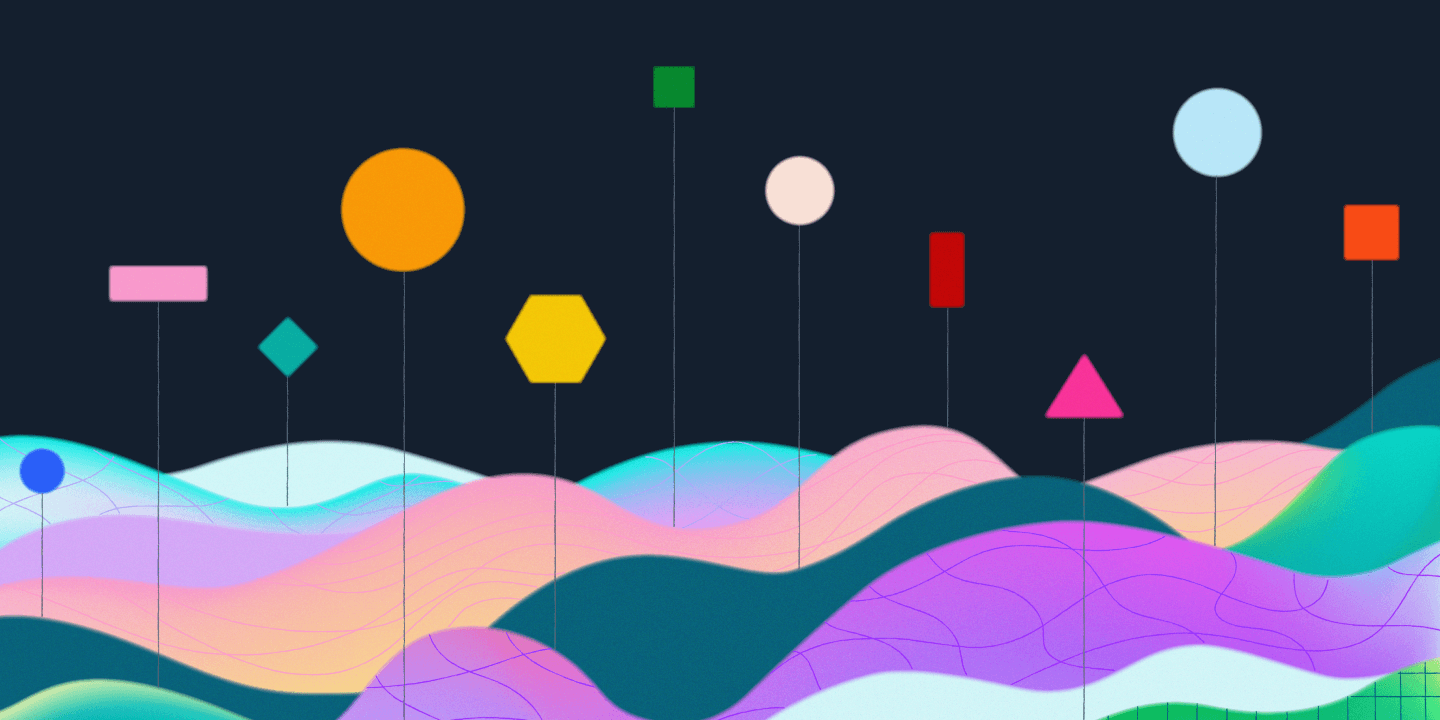

How it works — S3 Glacier storage classes

Amazon S3 Glacier Instant Retrieval

Amazon S3 Glacier Instant Retrieval is an archive storage class that delivers the lowest-cost storage for long-lived data that is rarely accessed and requires retrieval in milliseconds. With S3 Glacier Instant Retrieval, you can save up to 68% on storage costs compared to the S3 Standard-Infrequent Access (S3 Standard-IA) storage class when your data is accessed once per quarter. S3 Glacier Instant Retrieval delivers the fastest access to archive storage, with the same throughput and millisecond access as the S3 Standard and S3 Standard-IA storage classes. It is ideal for archive data that needs immediate access, such as medical images, news media assets, or user-generated content archives. For more information, visit the Amazon S3 Glacier Instant Retrieval page.

- Data retrieval in milliseconds with the same performance as S3 Standard

- Designed for durability of 99.999999999% of objects across multiple Availability Zones

- Designed for 99.9% data availability in a given year

- S3 PUT API for direct uploads to S3 Glacier Instant Retrieval, and S3 Lifecycle management for automatic migration of objects

Amazon S3 Glacier Flexible Retrieval (Formerly S3 Glacier)

S3 Glacier Flexible Retrieval delivers low-cost storage, at up to 10% lower cost than S3 Glacier Instant Retrieval, for archive data that is accessed 1-2 times per year and is retrieved asynchronously. It is the ideal storage class for archive data that does not require immediate access but needs the flexibility to retrieve large sets of data at no cost, such as backup or disaster recovery use cases. S3 Glacier Flexible Retrieval delivers the most flexible retrieval options, balancing cost with access times that range from minutes to hours, and includes free bulk retrievals.

- Designed for durability of 99.999999999% of objects across multiple Availability Zones

- Ideal for backup and disaster recovery use cases where large sets of data occasionally need to be retrieved in minutes, without concern for costs

- Configurable retrieval times, from minutes to hours, with free bulk retrievals

- S3 PUT API for direct uploads to S3 Glacier Flexible Retrieval, and S3 Lifecycle management for automatic migration of objects
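For S3 Glacier Flexible Retrieval, the retrieval time is chosen per request when you restore an object. The sketch below builds the `RestoreRequest` argument that boto3's `restore_object` accepts: `Days` sets how long the temporary restored copy stays available, and `GlacierJobParameters.Tier` picks Expedited, Standard, or Bulk retrieval. It assumes S3 Glacier Deep Archive offers only Standard and Bulk retrieval; `restore_request`, the bucket, and the key are hypothetical.

```python
# Retrieval options assumed to be accepted by RestoreObject for each archive class.
RETRIEVAL_TIERS = {
    "GLACIER": ("Expedited", "Standard", "Bulk"),  # S3 Glacier Flexible Retrieval
    "DEEP_ARCHIVE": ("Standard", "Bulk"),          # S3 Glacier Deep Archive
}


def restore_request(storage_class: str, days: int, tier: str = "Bulk") -> dict:
    """Build a RestoreRequest for a temporary copy of an archived object (hypothetical helper)."""
    if tier not in RETRIEVAL_TIERS.get(storage_class, ()):
        raise ValueError(f"{tier!r} retrieval is not available for {storage_class!r}")
    if days < 1:
        raise ValueError("the restored copy must be kept for at least one day")
    return {"Days": days, "GlacierJobParameters": {"Tier": tier}}


# Bulk retrievals are free, so they suit large restores that are not urgent.
req = restore_request("GLACIER", days=7)
print(req)
# With boto3 (not executed here):
#   boto3.client("s3").restore_object(
#       Bucket="example-bucket", Key="backups/db.dump", RestoreRequest=req)
```

Defaulting to Bulk reflects the free bulk retrievals described above; pass `tier="Expedited"` only when minutes matter.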

Amazon S3 Glacier Deep Archive

S3 Glacier Deep Archive is Amazon S3's lowest-cost storage class and supports long-term retention and digital preservation for data that may be accessed once or twice a year. It is designed for customers, particularly those in highly regulated industries such as financial services, healthcare, and the public sector, that retain data sets for 7-10 years or longer to meet regulatory compliance requirements. S3 Glacier Deep Archive can also be used for backup and disaster recovery use cases, and it is a cost-effective, easy-to-manage alternative to magnetic tape systems, whether on-premises libraries or off-premises services. S3 Glacier Deep Archive complements S3 Glacier Flexible Retrieval, which is ideal for archives where data is retrieved more regularly and some of it may be needed in minutes. All objects stored in S3 Glacier Deep Archive are replicated and stored across at least three geographically dispersed Availability Zones, are designed for 99.999999999% durability, and can be restored within 12 hours.

- Designed for durability of 99.999999999% of objects across multiple Availability Zones

- Lowest-cost storage class, designed for long-term retention of data kept for 7-10 years

- Ideal alternative to magnetic tape libraries

- Retrieval time within 12 hours

- S3 PUT API for direct uploads to S3 Glacier Deep Archive, and S3 Lifecycle management for automatic migration of objects

Performance across the S3 storage classes

| Storage Class | S3 Standard | S3 Intelligent-Tiering* | S3 Standard-IA | S3 One Zone-IA† | S3 Glacier Instant Retrieval | S3 Glacier Flexible Retrieval | S3 Glacier Deep Archive |
|---|---|---|---|---|---|---|---|
| Designed for durability | 99.999999999% (11 9s) | 99.999999999% (11 9s) | 99.999999999% (11 9s) | 99.999999999% (11 9s) | 99.999999999% (11 9s) | 99.999999999% (11 9s) | 99.999999999% (11 9s) |
| Designed for availability | 99.99% | 99.9% | 99.9% | 99.5% | 99.9% | 99.99% | 99.99% |
| Availability SLA | 99.9% | 99% | 99% | 99% | 99% | 99.9% | 99.9% |
| Availability Zones | ≥3 | ≥3 | ≥3 | 1 | ≥3 | ≥3 | ≥3 |
| Minimum capacity charge per object | N/A | N/A | 128 KB | 128 KB | 128 KB | 40 KB | 40 KB |
| Minimum storage duration charge | N/A | N/A | 30 days | 30 days | 90 days | 90 days | 180 days |
| Retrieval charge | N/A | N/A** | per GB retrieved | per GB retrieved | per GB retrieved | per GB retrieved | per GB retrieved |
| First byte latency | milliseconds | milliseconds*** | milliseconds | milliseconds | milliseconds | select minutes or hours | select hours |

† Because S3 One Zone-IA stores data in a single AWS Availability Zone, data stored in this storage class will be lost in the event of Availability Zone destruction.

* S3 Intelligent-Tiering has a small monitoring and automation charge and a minimum eligible object size of 128 KB for auto-tiering. Smaller objects can be stored, but they are always charged at Frequent Access tier rates and are not charged the monitoring and automation charge. See the Amazon S3 Pricing page for more information.

** Standard retrievals from the S3 Intelligent-Tiering Archive Access and Deep Archive Access tiers are free. If you need faster access to objects in the Archive Access tiers, you can pay for expedited retrieval using the S3 console.

*** S3 Intelligent-Tiering first byte latency is milliseconds for the Frequent and Infrequent Access tiers, and minutes or hours for the Archive Access and Deep Archive Access tiers.
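The minimum capacity and minimum storage duration charges in the table mean that small or short-lived objects can be billed for more than they actually use. The simplified sketch below applies both minimums to a single object and returns billed kilobyte-days. It treats the table's figures as flat minimums, which may simplify how the Amazon S3 Pricing page itemizes them, and assumes an object deleted early is billed as if stored for the full minimum duration. `billable_kb_days` is a hypothetical helper.

```python
# Per-object minimums from the table above: (minimum billable size in KB, minimum billable days).
MINIMUMS = {
    "S3 Standard": (0, 0),
    "S3 Intelligent-Tiering": (0, 0),
    "S3 Standard-IA": (128, 30),
    "S3 One Zone-IA": (128, 30),
    "S3 Glacier Instant Retrieval": (128, 90),
    "S3 Glacier Flexible Retrieval": (40, 90),
    "S3 Glacier Deep Archive": (40, 180),
}


def billable_kb_days(storage_class: str, size_kb: float, days_stored: int) -> float:
    """Return billed KB-days for one object after applying both minimums (simplified)."""
    min_kb, min_days = MINIMUMS[storage_class]
    return max(size_kb, min_kb) * max(days_stored, min_days)


# A 10 KB object deleted after 15 days:
print(billable_kb_days("S3 Standard", 10, 15))     # 150
print(billable_kb_days("S3 Standard-IA", 10, 15))  # 3840 (billed as 128 KB for 30 days)
```

Per-GB prices still differ by class, so multiply the KB-days by the prices on the Amazon S3 Pricing page before comparing classes.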

Data lifecycle management tools to optimize costs

Amazon S3 has various features you can use to organize and manage your data in ways that enable cost efficiencies, letting you optimize your storage for access patterns and cost.
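S3 Lifecycle rules are one of the tools that apply these savings automatically. The sketch below builds a lifecycle configuration in the shape that boto3's `put_bucket_lifecycle_configuration` accepts, stepping objects under a prefix from S3 Standard to S3 Standard-IA, S3 Glacier Instant Retrieval, and S3 Glacier Deep Archive. The 30/90/365-day schedule, the optional expiration, the prefix, and the helper name `archive_lifecycle_config` are hypothetical; the schedule is chosen so each class is held at least as long as its minimum storage duration charge.

```python
def archive_lifecycle_config(rule_id: str, prefix: str, expire_after_days=None) -> dict:
    """Build a lifecycle configuration that steps objects down to cheaper classes (hypothetical)."""
    transitions = [
        {"Days": 30, "StorageClass": "STANDARD_IA"},    # held 60 days, above the 30-day minimum
        {"Days": 90, "StorageClass": "GLACIER_IR"},     # held 275 days, above the 90-day minimum
        {"Days": 365, "StorageClass": "DEEP_ARCHIVE"},  # held until expiration, if any
    ]
    rule = {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": transitions,
    }
    if expire_after_days is not None:
        # Our own sanity check, not an S3 rule: expire only after the final
        # transition plus S3 Glacier Deep Archive's 180-day minimum duration.
        if expire_after_days < 365 + 180:
            raise ValueError("expiration falls inside Deep Archive's minimum storage duration")
        rule["Expiration"] = {"Days": expire_after_days}
    return {"Rules": [rule]}


# Keep compliance records for about 10 years (3,650 days), then delete them.
config = archive_lifecycle_config("compliance-archive", "records/", expire_after_days=3650)
# With boto3 (not executed here):
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-bucket", LifecycleConfiguration=config)
```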


Customers

Sysco

"With S3, we were able to reduce storage costs by over 40%."

Zalando

Founded in 2008, Zalando is Europe's leading online platform for fashion and lifestyle, with over 32 million active customers. Amazon S3 is the cornerstone of Zalando's data infrastructure, and the company has used S3 storage classes to optimize its storage costs.

We are saving 37% annually in storage costs by using Amazon S3 Intelligent-Tiering to automatically move objects that have not been touched within 30 days to the infrequent-access tier.

Max Schultze, Lead Data Engineer - Zalando

Teespring

Teespring, an online platform that lets creators turn unique ideas into custom merchandise, experienced rapid business growth, and its data grew exponentially to a petabyte and kept increasing. Like many cloud-native companies, Teespring addressed the problem by using AWS, specifically by storing its data on Amazon S3.

By using Amazon S3 Glacier and S3 Intelligent-Tiering, Teespring now saves more than 30 percent on its monthly storage costs.

Photobox

Photobox is an online, personalized photo-products company that serves millions of customers each year in more than ten markets. It wanted to get out of the business of owning and maintaining its own IT infrastructure so it could redeploy resources toward innovation in artificial intelligence and other areas to create a better customer experience.

By migrating from its EMC Isilon and IBM Cleversafe on-premises storage arrays to Amazon S3 using AWS Snowball Edge, Photobox significantly reduced the storage costs for its 10 PB of photos.

Pomelo


Pomelo is a fintech company that develops digital account and credit card solutions for fintechs and companies in the process of digital transformation, helping them launch and scale financial services in Latin America in an agile and secure way. Pomelo built a data lake on Amazon S3 and used a combination of native features and other AWS services to stay secure, manage access, and help ensure compliance at scale, with analytics-driven cost optimization resulting in substantial savings.

Pomelo saves 95% on their encryption costs using Amazon S3 Bucket Keys and projects to save 40-50% on data storage using the Amazon S3 Glacier storage classes.

To save on storage costs, customers choose Amazon S3.

Start saving today: migrate your storage to Amazon S3

The AWS Migration Acceleration Program for Storage provides AWS services, best practices, tools, and incentives to help customers save costs and reach their storage migration goals faster. Workloads that are well suited for storage migration include on-premises data lakes, large unstructured data repositories, file shares, home directories, backups, and archives.

AWS offers more ways to help you reduce storage costs and more options to migrate your data, which is why more customers choose AWS storage as the foundation for their cloud IT environment. Learn more about the Storage Migration Acceleration Program.

Cost optimization resources

Durability and Global Resiliency

Amazon S3 is designed to deliver 99.999999999% data durability. S3 automatically creates copies of all uploaded objects and stores them across at least three Availability Zones (AZs), except in S3 One Zone-IA. This means your data is protected by a multi-AZ resiliency model, including against site-level failures.
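To make the 11 9s figure concrete, assume it is read as an independent, per-object annual probability of loss of 1 - 0.99999999999. That is an interpretation for illustration, not an S3 guarantee. Under that reading, the expected number of objects lost per year scales linearly with the object count. Exact fractions avoid floating-point rounding in the subtraction; `expected_objects_lost_per_year` is a hypothetical helper.

```python
from fractions import Fraction

# 99.999999999% written as an exact fraction to avoid float rounding.
DESIGNED_DURABILITY = Fraction(99_999_999_999, 100_000_000_000)


def expected_objects_lost_per_year(object_count: int) -> Fraction:
    """Expected annual object losses, assuming an independent per-object annual loss probability."""
    return object_count * (1 - DESIGNED_DURABILITY)


loss = expected_objects_lost_per_year(10_000_000)
print(loss)      # 1/10000 objects per year
print(1 / loss)  # 10000, i.e. one object every 10,000 years on average
```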