Guidance for Building a High-Performance Numerical Weather Prediction System on AWS

Overview

How it works

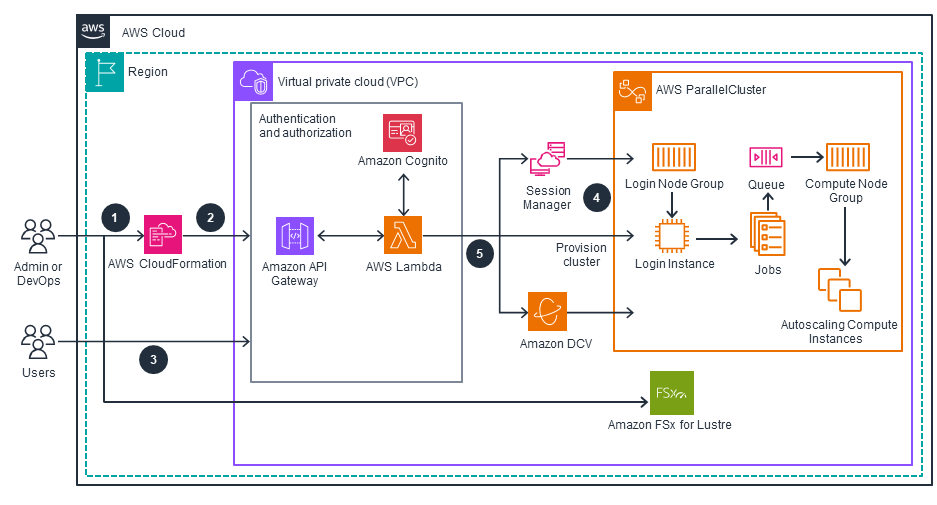

HPC Cluster Deployment

This architecture diagram shows how to provision the AWS ParallelCluster user interface (UI) and configure a high performance computing (HPC) cluster with compute and storage capabilities.
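The YAML-based cluster configuration that AWS ParallelCluster consumes can be sketched in Python as a plain dictionary. The key names below are intended to follow the AWS ParallelCluster 3 configuration schema; the Region, subnet ID, key pair name, instance types, node counts, and FSx for Lustre settings are all hypothetical placeholders, not the values this Guidance deploys.

```python
import json

# Hypothetical placeholders: replace with resources from your own account.
SUBNET_ID = "subnet-0123456789abcdef0"
KEY_NAME = "my-keypair"


def build_cluster_config(region: str, max_nodes: int) -> dict:
    """Return an AWS ParallelCluster 3 style configuration as a dict.

    Instance types, counts, and storage settings are illustrative
    assumptions, not the values used by this Guidance.
    """
    return {
        "Region": region,
        "Image": {"Os": "alinux2"},
        "HeadNode": {
            "InstanceType": "c6a.2xlarge",
            "Networking": {"SubnetId": SUBNET_ID},
            "Ssh": {"KeyName": KEY_NAME},
            "Dcv": {"Enabled": True},  # remote desktop access through NICE DCV
        },
        "Scheduling": {
            "Scheduler": "slurm",
            "SlurmQueues": [
                {
                    "Name": "compute",  # becomes the Slurm partition name
                    "ComputeResources": [
                        {
                            "Name": "hpc6a",
                            "InstanceType": "hpc6a.48xlarge",
                            "MinCount": 0,  # no compute nodes while idle
                            "MaxCount": max_nodes,
                            "Efa": {"Enabled": True},
                        }
                    ],
                    "Networking": {
                        "SubnetIds": [SUBNET_ID],
                        "PlacementGroup": {"Enabled": True},
                    },
                }
            ],
        },
        "SharedStorage": [
            {
                "MountDir": "/fsx",
                "Name": "fsx",
                "StorageType": "FsxLustre",
                "FsxLustreSettings": {
                    "StorageCapacity": 1200,  # GiB
                    "DeploymentType": "SCRATCH_2",
                },
            }
        ],
    }


if __name__ == "__main__":
    config = build_cluster_config("us-east-2", max_nodes=16)
    print(json.dumps(config, indent=2))
```

Because JSON output like this generally also parses as YAML, you could save the printed result as `cluster.yaml` and pass it to `pcluster create-cluster --cluster-name <name> --cluster-configuration cluster.yaml`, after checking each key against the ParallelCluster documentation for your version.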

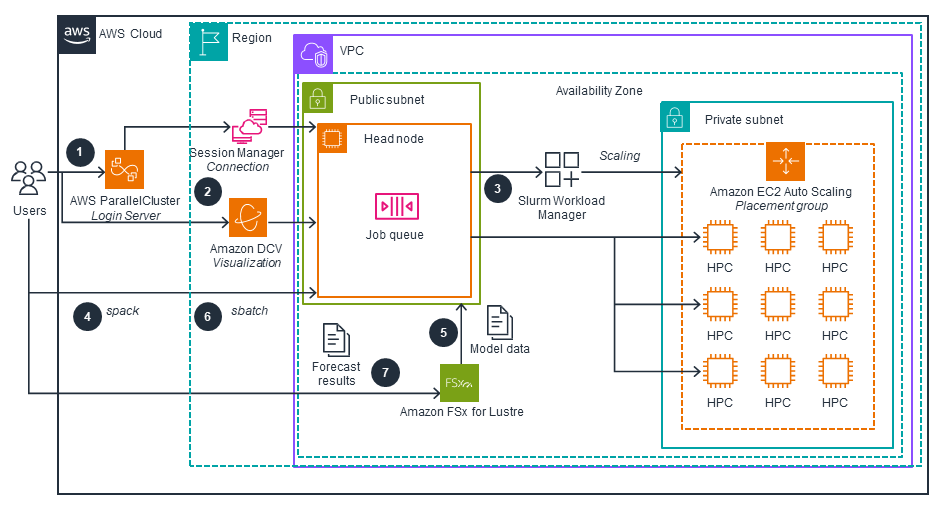

Prediction Workflow

This architecture diagram shows how to predict the weather for the contiguous United States (CONUS) by deploying the Weather Research and Forecasting (WRF) model on AWS and setting up the numerical weather prediction workflow.
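A WRF forecast usually runs as a fixed sequence: the WRF Preprocessing System (WPS) programs `geogrid.exe`, `ungrib.exe`, and `metgrid.exe` prepare geographic and meteorological input, then `real.exe` builds initial and boundary conditions and `wrf.exe` runs the forecast. The sketch below only orders those stages and builds a shell command for each. The `/fsx/WPS` and `/fsx/WRF/run` directories and the rank count are hypothetical, and steps such as linking GRIB input files and editing `namelist.wps` and `namelist.input` are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    directory: str   # hypothetical subdirectory under the shared file system
    executable: str
    parallel: bool   # True if the stage is normally launched with MPI


# Standard WPS/WRF run order; ungrib.exe runs as a single process.
PIPELINE = [
    Stage("geogrid", "WPS", "geogrid.exe", parallel=True),
    Stage("ungrib", "WPS", "ungrib.exe", parallel=False),
    Stage("metgrid", "WPS", "metgrid.exe", parallel=True),
    Stage("real", "WRF/run", "real.exe", parallel=True),
    Stage("wrf", "WRF/run", "wrf.exe", parallel=True),
]


def commands(ranks: int, root: str = "/fsx") -> list[str]:
    """Return one shell command per stage, in execution order."""
    out = []
    for stage in PIPELINE:
        launcher = f"mpirun -np {ranks} " if stage.parallel else ""
        out.append(f"cd {root}/{stage.directory} && {launcher}./{stage.executable}")
    return out


if __name__ == "__main__":
    for cmd in commands(ranks=192):
        print(cmd)
```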

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

Operational Excellence

This Guidance combines fully managed services (including Amazon API Gateway, Amazon Cognito, and AWS Lambda) with self-managed services (including Amazon FSx for Lustre and Amazon EC2). The self-managed services are deployed to a configurable HPC cluster from a template and can be reconfigured or updated if your cluster performance requirements change. You can use Amazon CloudWatch to monitor all of these services through event logging.
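As a rough illustration of turning on CloudWatch monitoring in the cluster template, the following sketch adds a `Monitoring` section to a ParallelCluster-style configuration dictionary. The section layout is intended to follow the ParallelCluster 3 schema, and the 14-day log retention is an assumed value, not one taken from this Guidance.

```python
import copy
import json


def with_monitoring(config: dict, retention_days: int = 14) -> dict:
    """Return a copy of a ParallelCluster-style config with CloudWatch log
    collection and the CloudWatch dashboard enabled."""
    updated = copy.deepcopy(config)  # leave the caller's dict untouched
    updated["Monitoring"] = {
        # Send cluster logs to CloudWatch Logs and keep them for retention_days.
        "Logs": {"CloudWatch": {"Enabled": True, "RetentionInDays": retention_days}},
        # Create the per-cluster CloudWatch dashboard.
        "Dashboards": {"CloudWatch": {"Enabled": True}},
    }
    return updated


if __name__ == "__main__":
    base = {"Region": "us-east-2", "Scheduling": {"Scheduler": "slurm"}}
    print(json.dumps(with_monitoring(base), indent=2))
```

After editing a deployed cluster's configuration, changes are applied with `pcluster update-cluster`; some settings can only be changed after the compute fleet has been stopped.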

Security

Amazon Cognito and API Gateway provide secure authentication, authorization, and API access management. You can then log in to the HPC cluster’s head node to deploy and manage applications, either through an AWS Systems Manager Session Manager shell, which provides greater security, or through NICE DCV. FSx for Lustre encrypts data both in transit and at rest. By scoping AWS Identity and Access Management (IAM) policies to the minimum permissions required, you can limit unauthorized access to resources.
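To make "minimum permissions" concrete, here is a sketch of a least-privilege IAM policy document that lets cluster nodes list and read objects under a single prefix of a single Amazon S3 bucket and nothing else. The bucket name and prefix are hypothetical, and storing model input in S3 is an assumption for illustration; this Guidance does not prescribe this policy.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy document allowing list and read access to one
    prefix of one S3 bucket. Anything not allowed here is implicitly denied."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket applies to the bucket ARN; the condition limits
                # listing to keys under the given prefix.
                "Sid": "ListInputPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                # GetObject applies to object ARNs under the prefix.
                "Sid": "ReadInputObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket and prefix.
    print(json.dumps(read_only_prefix_policy("my-wrf-input-data", "gfs"), indent=2))
```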

Reliability

AWS ParallelCluster uses the Slurm Workload Manager to schedule jobs and run computational tasks in parallel on the HPC cluster. Slurm allocates resources based on job requirements, priorities, and user-defined policies, which reduces the chance of application failure and helps your weather simulations run without downtime. Additionally, this Guidance deploys EC2 instances in different Availability Zones for increased reliability, and FSx for Lustre provides highly reliable storage for your HPC clusters.
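A job reaches Slurm as a batch script whose `#SBATCH` directives state what it needs. The sketch below generates such a script for `wrf.exe` with one MPI rank per core. The partition name `compute`, the run directory, and the wall-clock limit are hypothetical; 96 cores per node matches an `hpc6a.48xlarge` instance. Launching with plain `mpirun` assumes an MPI library built with Slurm support, which takes its rank count from the allocation.

```python
def sbatch_script(nodes: int, cores_per_node: int = 96, hours: int = 2,
                  run_dir: str = "/fsx/WRF/run") -> str:
    """Return a Slurm batch script that runs wrf.exe on `nodes` whole nodes."""
    lines = [
        "#!/bin/bash",
        "#SBATCH --job-name=wrf",
        "#SBATCH --partition=compute",              # hypothetical queue name
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={cores_per_node}",
        "#SBATCH --exclusive",                      # do not share nodes with other jobs
        f"#SBATCH --time={hours:02d}:00:00",        # wall-clock limit
        f"cd {run_dir}",
        "mpirun ./wrf.exe",                         # rank count comes from the allocation
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    with open("run_wrf.sbatch", "w") as fh:
        fh.write(sbatch_script(nodes=4))
```

The file would then be submitted with `sbatch run_wrf.sbatch`, and Slurm holds the job until four nodes are available (or have been launched).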

Performance Efficiency

This Guidance lets you manage and provision HPC clusters efficiently using AWS ParallelCluster and a YAML-based configuration. AWS ParallelCluster scales horizontally (the number of instances) and vertically (the CPU and memory of each instance) to handle increased workloads. This Guidance also uses the Message Passing Interface (MPI) for efficient parallel and distributed data processing. Additionally, FSx for Lustre provides a high-performance storage layer for the HPC clusters.
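With MPI, WRF splits the horizontal grid into rectangular patches, one per rank, arranged on a two-dimensional process grid (WRF exposes this as `nproc_x` and `nproc_y` in the `&domains` section of `namelist.input`). The sketch below picks the most nearly square factorization of the rank count and estimates the resulting patch size. It is similar in spirit to, but not a copy of, WRF's own decomposition logic, and the grid dimensions in the example are hypothetical.

```python
import math


def process_grid(ranks: int) -> tuple[int, int]:
    """Return (nproc_x, nproc_y) with nproc_x * nproc_y == ranks and the two
    factors as close to each other as possible (nproc_x <= nproc_y)."""
    best = (1, ranks)
    for x in range(1, math.isqrt(ranks) + 1):
        if ranks % x == 0:
            best = (x, ranks // x)  # later divisors are closer to sqrt(ranks)
    return best


def patch_size(e_we: int, e_sn: int, ranks: int) -> tuple[int, int]:
    """Approximate per-rank patch size (west-east, south-north) in grid points."""
    nx, ny = process_grid(ranks)
    return math.ceil(e_we / nx), math.ceil(e_sn / ny)


if __name__ == "__main__":
    # Hypothetical 1000 x 800 grid on two 96-core nodes.
    print(process_grid(192), patch_size(1000, 800, 192))
```

Smaller patches mean more halo exchange between neighboring ranks relative to the computation each rank performs, which is why adding ranks eventually stops reducing runtime.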

Cost Optimization

As a managed service, Amazon Cognito provides cost-effective user authentication and authorization. Additionally, Amazon EC2 Auto Scaling scales cluster node instances horizontally or vertically based on workload demand, so you don’t have to provision and pay for unused resources. FSx for Lustre also provides a cost-efficient storage layer that makes it easy to launch, run, and scale storage for your HPC tasks.
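The saving from scaling compute nodes with demand can be estimated with simple node-hour arithmetic. This sketch compares a fleet that launches nodes per job and terminates them after an idle window against a fleet kept running for the whole period. The 10-minute idle window, the job mix, and the assumptions that jobs do not overlap and that boot time is negligible are all hypothetical; multiply by your own hourly instance price to get a cost.

```python
def billed_node_hours(jobs: list[tuple[int, float]], idle_minutes: float = 10.0) -> float:
    """Node-hours billed when each job's nodes are launched on demand and
    terminated after `idle_minutes` of idleness.

    jobs: (nodes, runtime_hours) pairs; assumes jobs never share nodes and
    ignores instance boot time.
    """
    return sum(nodes * (hours + idle_minutes / 60) for nodes, hours in jobs)


def always_on_node_hours(max_nodes: int, period_hours: float) -> float:
    """Node-hours billed when the full fleet runs for the whole period."""
    return max_nodes * period_hours


if __name__ == "__main__":
    daily_runs = [(16, 1.5)] * 4  # hypothetical: four 1.5-hour runs per day
    print(billed_node_hours(daily_runs), always_on_node_hours(16, 24))
```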

Sustainability

This Guidance uses specialized Amazon EC2 instances, including Hpc6a instances powered by third-generation AMD EPYC processors, that offer high performance for compute-intensive HPC workloads. This performance, combined with the elasticity and scalability of serverless AWS services, helps you use resources efficiently and avoid overprovisioning. Additionally, FSx for Lustre supports concurrent access to the same files and directories from thousands of compute instances, further helping you minimize your workloads’ environmental impact.

Deploy with confidence

Everything you need to launch this Guidance in your account is right here

We'll walk you through it

Dive deep into the implementation guide for additional customization options and service configurations to tailor to your specific needs.

Let's make it happen

Ready to deploy? Review the sample code on GitHub for detailed deployment instructions to deploy as-is or customize to fit your needs.
