
How Proto is bringing cutting-edge avatars to life with Amazon Bedrock

A patient in Australia needs urgent specialist care from a doctor in the United Kingdom. A robotics company needs to demonstrate its latest invention to potential investors without worrying about shipping machinery. A new hotel wants to wow its guests with a premium experience. Proto is making all of this, and much more, possible with holograms powered by generative AI.

Proto uses generative artificial intelligence (AI) to create lifelike avatars that are projected into an impressive holographic display roughly 2 meters tall, where they can hold real conversations, provide information, assist with tasks, and entertain. They are already deployed in industries including advertising and retail, entertainment, transportation, hospitality, education, training, and healthcare, working for Fortune 500 companies and for some of the world's best-known sports teams and celebrities.

So the patient who needs urgent care can be assessed by a specialist thousands of kilometers away. The robotics company can show off its latest product without the cost and complexity of shipping expensive equipment abroad. And the hotel can greet guests with life-size chatbots and offer a personalized digital concierge service that sets it apart from the competition.

Each of these use cases has its own requirements (for example cost, latency, and accuracy) that call for a particular foundation model (FM). Proto therefore needed a solution that made it easy to experiment with, test, and adapt its AI-powered avatars to each specific use case. Migrating to Amazon Bedrock provided that solution, giving Proto access to a broad choice of FMs that can easily be evaluated, experimented with, deployed, and customized.

Improving and optimizing with Amazon Bedrock

Although Proto is a leader in its field, market demand for lower latency, greater realism, and higher accuracy in interactions pushed it to find a way to improve its AI avatars. Proto's avatars can be broadcast to thousands of viewers or personalized for a single person, deliver real-time information, or provide high-level training. Whatever their purpose or industry, they must offer a sophisticated level of realism, accuracy, and safety while remaining tailored to the use case. For example, a virtual hotel concierge would need specific tuning to improve the guest experience, while a virtual art teacher needs the flexibility to turn language into images.

Crucially, Proto also wanted to simplify how it built and managed its applications. In the past this had been complicated by its use of many different services and tools: it relied on a suite of AWS services but built its applications with another provider.

Generative AI is a fast-moving space, and discerning players keep innovating their products to serve their customers better. That takes human time and resources, backed by flexible tools that can help with the heavy lifting. Proto's legacy environment, fragmented across multiple solutions, made it hard to manage its existing AWS services and to adopt new ones. With an approach built on Amazon Bedrock, Proto can continuously iterate on its avatars and adapt them to user interactions in real time. This helps ensure that content is always appropriate for a given context. For example, Proto can decide on the spot whether a given topic is appropriate and set guidelines, known as guardrails, during the interaction to protect these exchanges.

These factors, together with the need for the latest generative AI tools, led Proto to migrate to Anthropic's Claude on Amazon Bedrock. This allowed the company to improve both its AI avatars and the processes used to build them.

Why Amazon Bedrock?

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) through a single API, along with a broad set of capabilities needed to build generative AI applications with security, privacy, and responsible AI.

With Amazon Bedrock, you can easily experiment with and evaluate top FMs for your use case, privately customize them with your own data using techniques such as fine-tuning and Retrieval Augmented Generation (RAG), and build agents that execute tasks using your enterprise systems and data sources.

Amazon Bedrock is also serverless, which means startups don't have to manage any infrastructure and can securely integrate and deploy generative AI capabilities into their applications using the AWS services they already know.

The mechanics of the migration

Proto migrated to Amazon Bedrock and chose Anthropic's Claude Instant, an FM available on Amazon Bedrock, to showcase a conversational avatar at a major conference. It tuned this model to optimize its AI avatar and put guardrail rules in place to ensure that interactions were appropriate to the setting, the audience, and the context.
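The article does not say how Proto's guardrails are implemented. As a minimal sketch, assuming Amazon Bedrock Guardrails and the bedrock-runtime `apply_guardrail` call, each user turn could be screened before it reaches the model. The guardrail ID and the fallback message are hypothetical placeholders, and the client is passed in so that a real `boto3.client("bedrock-runtime")` or a test double can be used.

```python
from typing import Any


def check_user_turn(client: Any, text: str, guardrail_id: str,
                    guardrail_version: str = "DRAFT") -> tuple[bool, str]:
    """Screen one user utterance with a Bedrock guardrail before calling the model.

    Returns (allowed, text_to_use). When the guardrail intervenes, the
    guardrail's configured replacement message is returned instead.
    """
    response = client.apply_guardrail(
        guardrailIdentifier=guardrail_id,  # hypothetical ID of a guardrail you created
        guardrailVersion=guardrail_version,
        source="INPUT",  # we are checking what the user said, not the model output
        content=[{"text": {"text": text}}],
    )
    if response.get("action") == "GUARDRAIL_INTERVENED":
        # Hypothetical fallback in case the guardrail returns no replacement text.
        outputs = response.get("outputs") or [{"text": "Let's talk about something else."}]
        return False, outputs[0]["text"]
    return True, text
```

Keeping the check outside the model call means the same rules apply no matter which FM the avatar is using.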

Amazon Bedrock let Proto test the performance of various FMs before moving to the next stage of development, which meant it could choose the most cost-effective solution for each use case. The process was simple, so it had no impact on the workflow or the deployment pipelines, both of which are critical for startups that want to stay at the forefront of AI development. As Raffi Kryszek, Chief Product and AI Officer at Proto, explains: “Amazon Bedrock allowed us to test our avatar's performance across different foundation models by changing just one line of code.”
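To illustrate what "one line of code" can mean in practice, here is a minimal sketch that assumes the bedrock-runtime Converse API, which uses one request shape across supported models (Converse may postdate Proto's migration, and this is not Proto's code). Comparing FMs then comes down to changing `model_id`; check that the models you try support Converse in your Region.

```python
from typing import Any


def ask_avatar(client: Any, model_id: str, question: str,
               temperature: float = 0.5, max_tokens: int = 300) -> str:
    """Send one user question to a Bedrock model through the Converse API."""
    response = client.converse(
        modelId=model_id,  # the only thing that changes between FMs
        messages=[{"role": "user", "content": [{"text": question}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": temperature},
    )
    # Converse returns the assistant message under output.message.content.
    return response["output"]["message"]["content"][0]["text"]
```

Usage, with a real client: `client = boto3.client("bedrock-runtime")`, then call `ask_avatar(client, "anthropic.claude-instant-v1", "Where is the spa?")` and swap the model ID (for example to `"anthropic.claude-3-haiku-20240307-v1:0"`) to compare answers.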

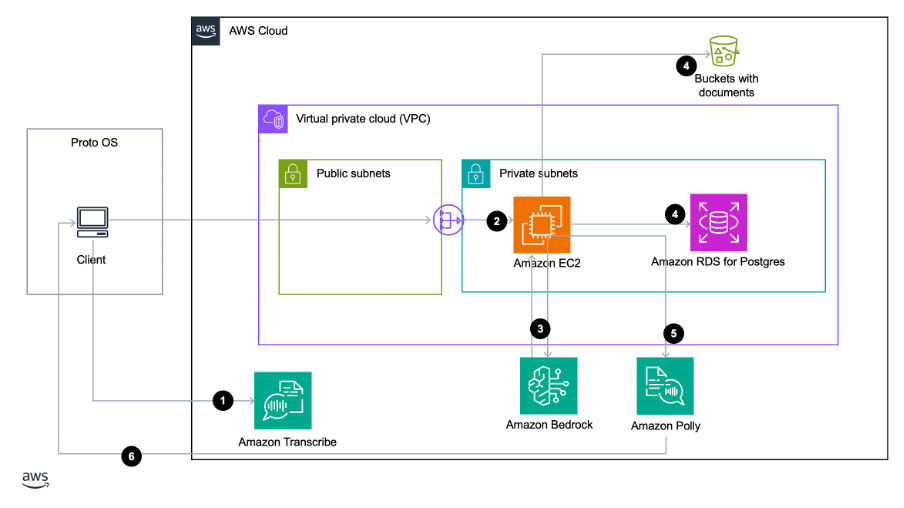

Proto also adopted Amazon Polly, a cloud service that turns text into lifelike speech, and Amazon Transcribe, a speech recognition service that automatically converts speech to text. Together they let Proto adapt its solution to support conversations in different languages, such as Japanese, Korean, and Spanish.
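One way to keep the speech layer multilingual is a small per-language table that pairs an Amazon Transcribe `LanguageCode` with an Amazon Polly `VoiceId`. This is a hypothetical sketch; the voice choices are examples, and any Polly voice for the same language would work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeechConfig:
    transcribe_language_code: str  # passed as LanguageCode to Amazon Transcribe
    polly_voice_id: str            # passed as VoiceId to Amazon Polly


# Example pairings (hypothetical choices, not Proto's configuration).
SPEECH_CONFIGS = {
    "ja": SpeechConfig("ja-JP", "Takumi"),
    "ko": SpeechConfig("ko-KR", "Seoyeon"),
    "es": SpeechConfig("es-ES", "Lucia"),
    "en": SpeechConfig("en-US", "Joanna"),
}


def polly_request(language: str, text: str) -> dict:
    """Build keyword arguments for polly.synthesize_speech in the given language."""
    cfg = SPEECH_CONFIGS[language]
    return {"Text": text, "VoiceId": cfg.polly_voice_id, "OutputFormat": "mp3"}
```

The same key (`"ja"`, `"ko"`, ...) selects both the recognizer language and the reply voice, so the avatar listens and answers in one language.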

Beyond the range of services on offer, specific Amazon Bedrock features ensured a smooth migration and have let Proto adapt quickly and take advantage of the latest generative AI tools as it grows.

The core of the migration was reconfiguring the APIs. This required a deep analysis of the inner workings of both systems to ensure seamless communication between Proto's user interface and the Amazon Bedrock services. Proto's engineering team was able to quickly refactor its code against the Amazon Bedrock APIs and used Claude's specific prompt-formatting technique to improve the quality of the avatars' responses.
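The article does not show Proto's prompts. As an assumption-labeled sketch, this is the formatting Claude Instant's legacy text-completions API on Bedrock expects: the prompt opens with a `Human:` turn and ends with an `Assistant:` turn, each preceded by a blank line, and XML-style tags separate the persona, retrieved context, and question. The tag names and instructions are hypothetical.

```python
import json


def build_claude_instant_body(persona: str, context: str, question: str,
                              max_tokens: int = 300) -> str:
    """Build an InvokeModel request body in Claude's text-completions format."""
    prompt = (
        f"\n\nHuman: {persona}\n"
        f"<context>\n{context}\n</context>\n"  # hypothetical tag names
        f"<question>{question}</question>\n"
        "Answer using only the information in <context>."
        "\n\nAssistant:"  # the model continues from here
    )
    return json.dumps({
        "prompt": prompt,
        "max_tokens_to_sample": max_tokens,
        "stop_sequences": ["\n\nHuman:"],  # stop before the model invents a user turn
    })
```

With boto3, the body would be sent as `client.invoke_model(modelId="anthropic.claude-instant-v1", body=body)`, and the reply read from the `completion` field of the decoded response body.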

The migration marked a fundamental shift in how Proto customizes inference parameters, the settings that are adjusted to control the model's responses. The team made full use of the Amazon Bedrock API and its extensive configuration options to fine-tune how responses are generated, including the temperature and top K settings.

Finer control of temperature allows a more calibrated trade-off between creativity and faithfulness. For an informational AI avatar, such as one a healthcare company uses to provide medical information, Proto can choose a lower temperature, prioritizing accuracy and relevance. Being able to adjust temperature this way lets Proto take a more calibrated approach to meeting the needs of different customers using different kinds of AI avatars. Importantly, its team can now do this much faster and more easily than before, because Amazon Bedrock lowers the technical burden on its users.
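Bedrock applies temperature on the server side, but the underlying math is a short formula: each candidate token's score $z_i$ is divided by the temperature $T$ before the softmax, so $p_i = e^{z_i/T} / \sum_j e^{z_j/T}$. The sketch below only illustrates that standard technique; it is not Bedrock's or Claude's internal implementation.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened (T < 1) or flattened (T > 1)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

For scores `[2.0, 1.0, 0.1]`, a temperature of 0.2 puts almost all of the probability on the first token, which suits a medical-information avatar, while 2.0 spreads it out and makes less likely words more common.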

Proto can also calibrate more precisely through the top K setting. Like temperature, top K is an inference parameter that can be adjusted to constrain or influence the model's response.

Top K is the number of most likely options the model considers for the next token in a sequence. That token might be the next word in a sentence, which makes top K key to controlling text generation and keeping the output coherent and accurate. Lowering the value shrinks the pool of candidates the model can choose from to only the most likely ones. This suits more predictable, focused output, such as technical documentation. Raising the value enlarges the pool and lets the model consider less likely options. This suits creative storytelling, where a wider variety of word choices adds richness and unpredictability to the narrative.
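A minimal sketch of top-K sampling as described above: keep only the K highest-scoring candidates, renormalize them with a temperature-scaled softmax, and draw one. This is the generic technique, not the model's actual decoder, and the seeded `random.Random` is there only to make the behavior reproducible.

```python
import math
import random
from typing import Optional


def sample_top_k(logits: list[float], k: int, temperature: float = 1.0,
                 rng: Optional[random.Random] = None) -> int:
    """Sample a token index, considering only the k highest-scoring candidates."""
    if k < 1 or temperature <= 0:
        raise ValueError("need k >= 1 and temperature > 0")
    rng = rng or random.Random()
    # Indices of the k largest scores; every other candidate is discarded.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Temperature-scaled softmax weights over the surviving candidates only.
    m = max(logits[i] for i in top)
    weights = [math.exp((logits[i] - m) / temperature) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]
```

With `k=1` the sampler always returns the single most likely token (fully predictable); with a larger `k` it can pick any of the top candidates, which is where the extra variety for storytelling comes from.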

Using this capability let Proto optimize performance and quality by carefully tailoring the output of each AI avatar to its use case.
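Tailoring output per avatar can be as simple as keeping a sampling preset for each avatar type and writing it into the request. This sketch assumes the Anthropic Claude Messages API body format on Bedrock (`anthropic_version`, `system`, `messages`, `temperature`, `top_k`); the avatar types and numbers are hypothetical, since the article does not publish Proto's values.

```python
import json

# Hypothetical presets: low randomness for facts, high for storytelling.
PRESETS = {
    "medical_info": {"temperature": 0.1, "top_k": 10},
    "hotel_concierge": {"temperature": 0.5, "top_k": 50},
    "storyteller": {"temperature": 0.9, "top_k": 250},
}


def messages_body(avatar_type: str, system_prompt: str, user_text: str,
                  max_tokens: int = 400) -> str:
    """Build a Claude Messages API body for Bedrock InvokeModel with per-avatar sampling."""
    preset = PRESETS[avatar_type]
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": system_prompt,
        "messages": [{"role": "user", "content": [{"type": "text", "text": user_text}]}],
        "temperature": preset["temperature"],
        "top_k": preset["top_k"],
    })
```

Keeping the presets in data rather than code means a new avatar type only needs a new table entry.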

Finally, Proto benefited from Claude's prompt engineering capabilities. The team developed a set of best practices for parameter tuning that improved the AI's responsiveness and relevance. This tailored approach underscores the importance of understanding an AI model's capabilities and constraints, so that developers can make full use of the technology to meet the specific requirements of the AI avatar they are deploying and of its audience.

Implementing the high-level architecture

Using Amazon Bedrock allowed Proto to improve how its AI avatars answer users' questions. The architecture starts with a user asking a question, which is then routed to Amazon Bedrock. The RAG process combines the user's real-time input with in-depth information from Proto's proprietary data and from external data stores. This helps produce precise, relevant prompts, resulting in a conversation personalized to the user asking the question.
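The retrieve-then-augment step can be sketched in a few lines. This is a toy illustration, not Proto's pipeline: `embed` is a hypothetical bag-of-words stand-in for a real embedding model (for example one hosted on Bedrock), and the "data store" is a plain Python list.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], top_n: int = 2) -> list[str]:
    """Return the top_n stored chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:top_n]


def augment(question: str, chunks: list[str], top_n: int = 2) -> str:
    """Merge the user's live question with retrieved context into one prompt."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, top_n))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

The augmented string is what would then be sent to the chosen FM; swapping the toy `embed` for a real embedding model and the list for a vector store does not change the shape of the flow.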

Proto was able to choose the chunk size used for its embeddings, which determines how much or how little information its AI avatars can draw on in their responses. Smaller chunks work best for applications such as a personal assistant, because many memories can be added to the prompt. Larger chunks are more useful when documents are well separated, so that related information is not scattered across many pieces.
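Fixed-size chunking with optional overlap is one common way to control chunk size before embedding. This is a hypothetical sketch that counts words for simplicity; production systems, including managed knowledge bases, usually count tokens instead.

```python
def chunk_words(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most chunk_size words, sharing `overlap` words."""
    if chunk_size < 1 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size >= 1 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Small `chunk_size` values produce many short "memories" that fit side by side in a prompt; larger values keep a self-contained section together, and `overlap` reduces the chance of splitting a fact across two chunks.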

Once augmented, the prompt is processed by a selection of advanced AI models, including Claude. Within each Proto application, a unique avatar ID routes these questions to the appropriate database, enabling precise, context-aware answers.
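A hypothetical sketch of avatar-ID routing: a registry maps each avatar ID to the data source, system prompt, and model that serve it. The class names, fields, and default model ID are illustrative assumptions, not Proto's schema; the in-memory list stands in for the avatar's own database.

```python
from dataclasses import dataclass, field


@dataclass
class AvatarProfile:
    knowledge_base: list[str]  # stand-in for the avatar's own database
    system_prompt: str
    model_id: str = "anthropic.claude-instant-v1"


@dataclass
class AvatarRouter:
    profiles: dict[str, AvatarProfile] = field(default_factory=dict)

    def register(self, avatar_id: str, profile: AvatarProfile) -> None:
        self.profiles[avatar_id] = profile

    def route(self, avatar_id: str) -> AvatarProfile:
        """Look up which data source, prompt, and model serve this avatar."""
        try:
            return self.profiles[avatar_id]
        except KeyError:
            raise KeyError(f"unknown avatar id: {avatar_id}") from None
```

Each incoming question carries its avatar ID, so a hotel concierge and a medical-information avatar can share one application while querying different data.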

The responses are then sent to Amazon Polly, so that every word the avatars speak is both rendered visually with precise lip sync and delivered at high speed, making the responses visually and interactively fluid. The AI avatar comes across as more human when it talks to its user, and the conversation feels as instantaneous as possible.
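The article does not describe how Proto drives lip sync. One plausible approach, sketched here as an assumption, uses Amazon Polly viseme speech marks: requesting `OutputFormat="json"` with `SpeechMarkTypes=["viseme"]` returns newline-delimited JSON with a millisecond offset and mouth shape per entry, and a second, ordinary call (for example with `OutputFormat="mp3"`) returns the audio to play alongside it.

```python
import json
from typing import Any


def request_visemes(polly: Any, text: str, voice_id: str = "Joanna") -> list[dict]:
    """Ask Amazon Polly for viseme speech marks (timing data, not audio)."""
    response = polly.synthesize_speech(
        Text=text, VoiceId=voice_id, OutputFormat="json", SpeechMarkTypes=["viseme"]
    )
    return parse_speech_marks(response["AudioStream"].read().decode("utf-8"))


def parse_speech_marks(raw: str) -> list[dict]:
    """Speech marks arrive as one JSON object per line."""
    return [json.loads(line) for line in raw.splitlines() if line.strip()]


def viseme_timeline(marks: list[dict]) -> list[tuple[int, str]]:
    """(milliseconds from start of audio, mouth shape) pairs to animate the avatar."""
    return [(m["time"], m["value"]) for m in marks if m.get("type") == "viseme"]
```

The renderer can then switch the avatar's mouth shape at each timestamp while the audio plays.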

Conclusion

Proto is now using cutting-edge generative AI tools to deliver innovative generative AI applications to its customers. Because Amazon Bedrock is a fully managed service, the move spared Proto's team from having to re-architect its solutions to support multiple foundation models. The team is now free to focus on what matters: building, scaling, and optimizing products to fit end users' needs and, in turn, growing the startup.

The results are already clear: by focusing on API reconfiguration as part of the managed migration, Proto has improved its avatars' capabilities and kept them at the forefront of conversational AI technology. Being able to adapt them to different industries, and quickly, lets the company better serve a broad customer base and expand into more industries at a competitive pace.

Migrating workloads and applications to AWS is only the beginning. Proto went a step further, adopting AWS generative AI capabilities and using them across its entire workflow: from experimenting with tools from industry leaders to deploying technology that creates meaningful interactions for its customers.

By migrating to AWS, Proto has lightened its team's technical load while expanding its creative capabilities, and it can now more easily build, scale, and deploy its own generative AI applications with security, privacy, and responsible AI. Whether you are a startup looking to begin your generative AI journey or want to optimize and improve your current workflows and products, the AWS Migration Acceleration Program can help you explore your options and learn more about how Amazon Bedrock can work for you.

With written contributions from Shaun Wang and Tony Gabriel Silva

Aymen Saidi

Aymen is a Principal Solutions Architect in the AWS EC2 team, where he specializes in cloud transformation, service automation, network analytics, and 5G architecture. He’s passionate about developing new capabilities for customers to help them be at the forefront of emerging technologies. In particular, Aymen enjoys exploring applications of AI/ML to drive greater automation, efficiency, and insights. By leveraging AWS's AI/ML services, he works with customers on innovative solutions that utilize these advanced techniques to transform their network and business operations.

Hrushikesh Gangur

Hrushikesh Gangur is a Principal Solutions Architect for AI/ML startups, with expertise in both machine learning and AWS networking services. He helps startups building generative AI, autonomous vehicles, and ML platforms run their businesses on AWS efficiently and effectively.

Nolan Cassidy

Nolan Cassidy is the Lead R & D Engineer at Proto Hologram, specializing in holographic spatial technology. His pioneering work integrates AI and advanced communication systems to develop low-latency, highly interactive experiences, enabling users to feel present in one location while physically being in another.