AWS News Blog

New Network Load Balancer – Effortless Scaling to Millions of Requests per Second

Elastic Load Balancing (ELB) has been an important part of AWS since 2009, when it was launched as part of a three-pack that also included Auto Scaling and Amazon CloudWatch. Since that time we have added many features, and also introduced the Application Load Balancer. Designed to support application-level, content-based routing to applications that run in containers, Application Load Balancers pair well with microservices, streaming, and real-time workloads.

Elastic Load Balancing (ELB) has been an important part of AWS since 2009, when it was launched as part of a three-pack that also included Auto Scaling and Amazon CloudWatch. Since that time we have added many features, and also introduced the Application Load Balancer. Designed to support application-level, content-based routing to applications that run in containers, Application Load Balancers pair well with microservices, streaming, and real-time workloads.

Over the years, our customers have used ELB to support web sites and applications that run at almost any scale — from simple sites running on a T2 instance or two, all the way up to complex applications that run on large fleets of higher-end instances and handle massive amounts of traffic. Behind the scenes, ELB monitors traffic and automatically scales to meet demand. This process, which includes a generous buffer of headroom, has become quicker and more responsive over the years and works well even for our customers who use ELB to support live broadcasts, “flash” sales, and holidays. However, in some situations such as instantaneous fail-over between regions, or extremely spiky workloads, we have worked with our customers to pre-provision ELBs in anticipation of a traffic surge.

New Network Load Balancer

Today we are introducing the new Network Load Balancer (NLB). It is designed to handle tens of millions of requests per second while maintaining high throughput at ultra low latency, with no effort on your part. The Network Load Balancer is API-compatible with the Application Load Balancer, including full programmatic control of Target Groups and Targets. Here are some of the most important features:

Static IP Addresses – Each Network Load Balancer provides a single IP address for each Availability Zone in its purview. If you have targets in us-west-2a and other targets in us-west-2c, NLB will create and manage two IP addresses (one per AZ); connections to that IP address will spread traffic across the instances in all the VPC subnets in the AZ. You can also specify an existing Elastic IP for each AZ for even greater control. With full control over your IP addresses, Network Load Balancer can be used in situations where IP addresses need to be hard-coded into DNS records, customer firewall rules, and so forth.

Zonality – The IP-per-AZ feature reduces latency with improved performance, improves availability through isolation and fault tolerance and makes the use of Network Load Balancers transparent to your client applications. Network Load Balancers also attempt to route a series of requests from a particular source to targets in a single AZ while still providing automatic failover should those targets become unavailable.

Source Address Preservation – With Network Load Balancer, the original source IP address and source ports for the incoming connections remain unmodified, so application software need not support X-Forwarded-For, proxy protocol, or other workarounds. This also means that normal firewall rules, including VPC Security Groups, can be used on targets.

Long-running Connections – NLB handles connections with built-in fault tolerance, and can handle connections that are open for months or years, making them a great fit for IoT, gaming, and messaging applications.

Failover – Powered by Route 53 health checks, NLB supports failover between IP addresses within and across regions.

Creating a Network Load Balancer

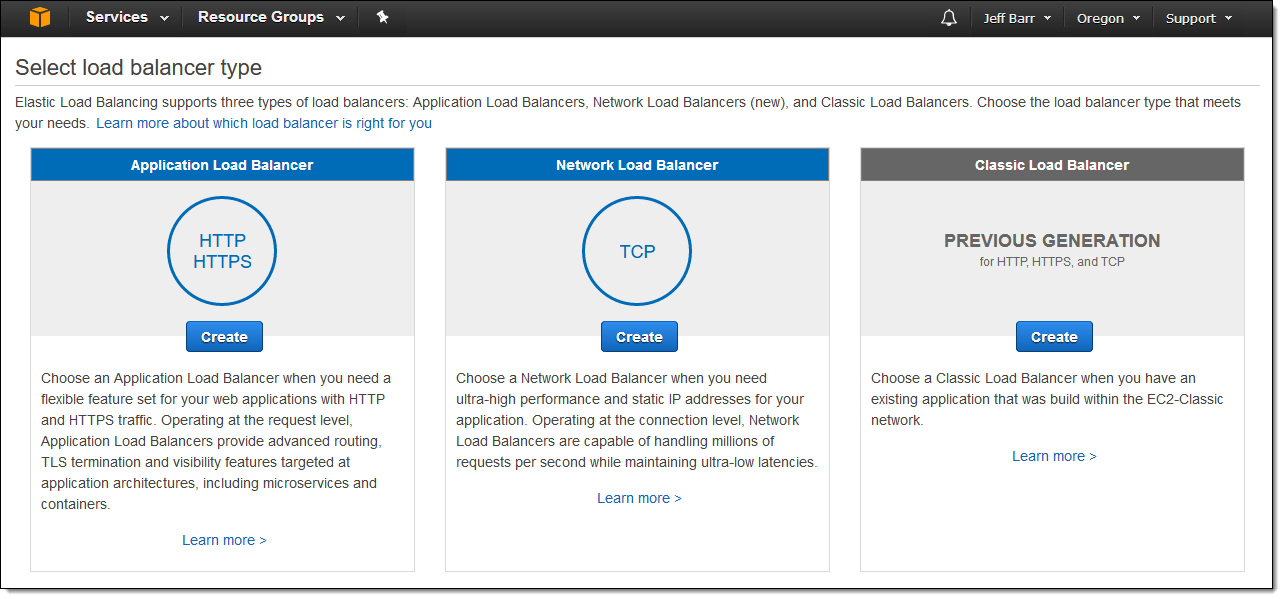

I can create a Network Load Balancer opening up the EC2 Console, selecting Load Balancers, and clicking on Create Load Balancer:

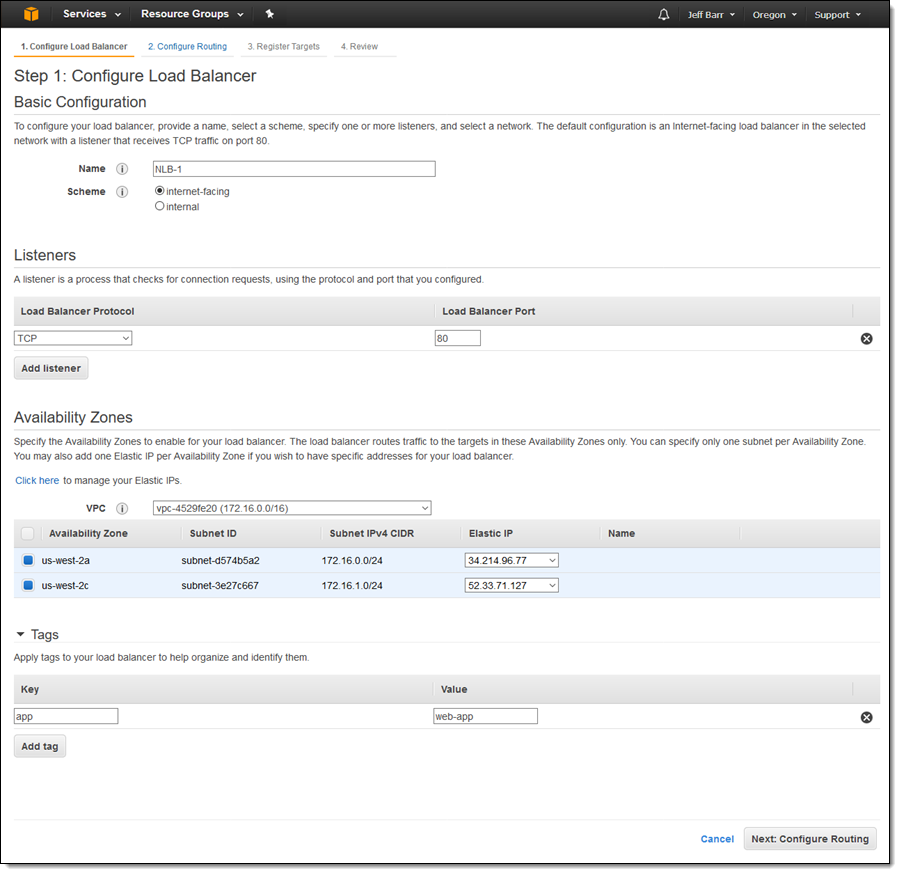

I choose Network Load Balancer and click on Create, then enter the details. I can choose an Elastic IP address for each subnet in the target VPC and I can tag the Network Load Balancer:

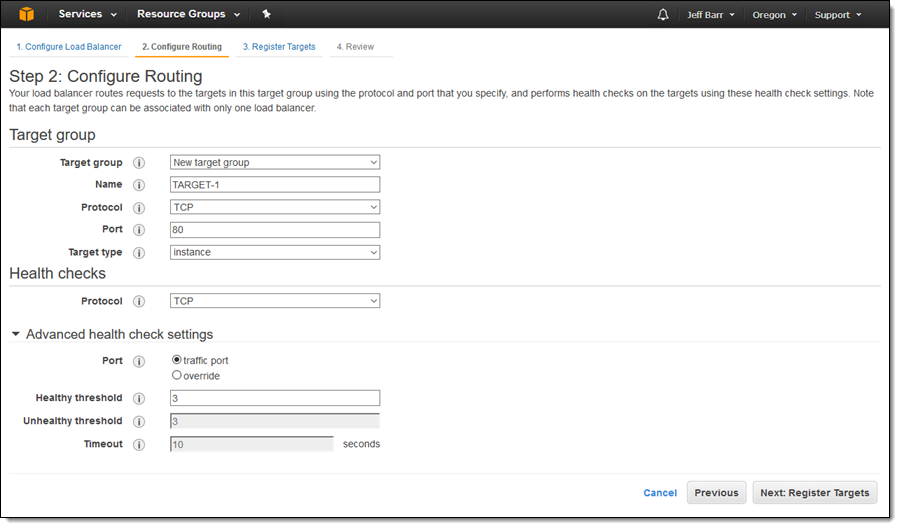

Then I click on Configure Routing and create a new target group. I enter a name, and then choose the protocol and port. I can also set up health checks that go to the traffic port or to the alternate of my choice:

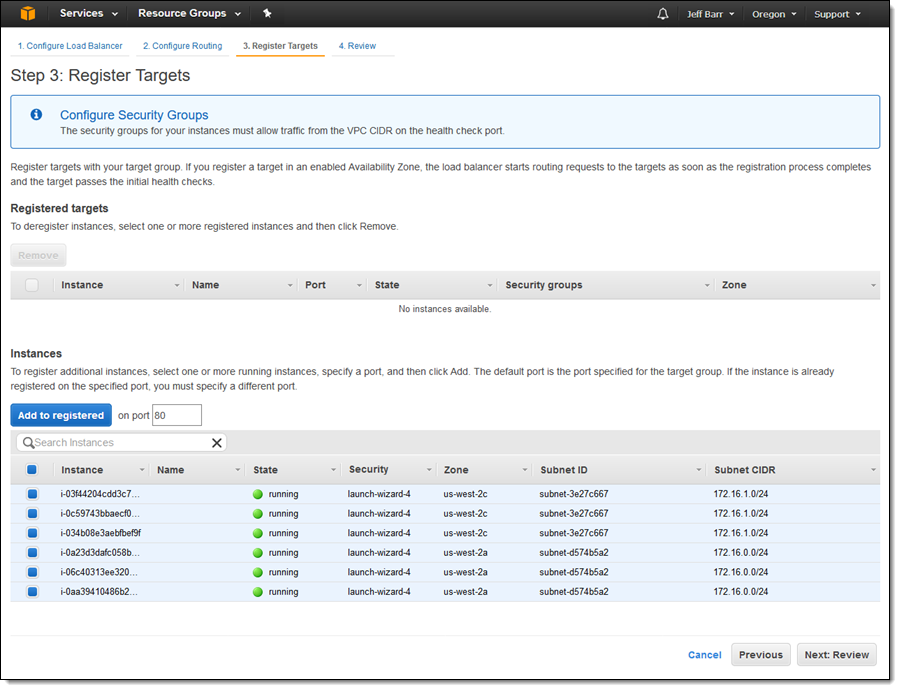

Then I click on Register Targets and the EC2 instances that will receive traffic, and click on Add to registered:

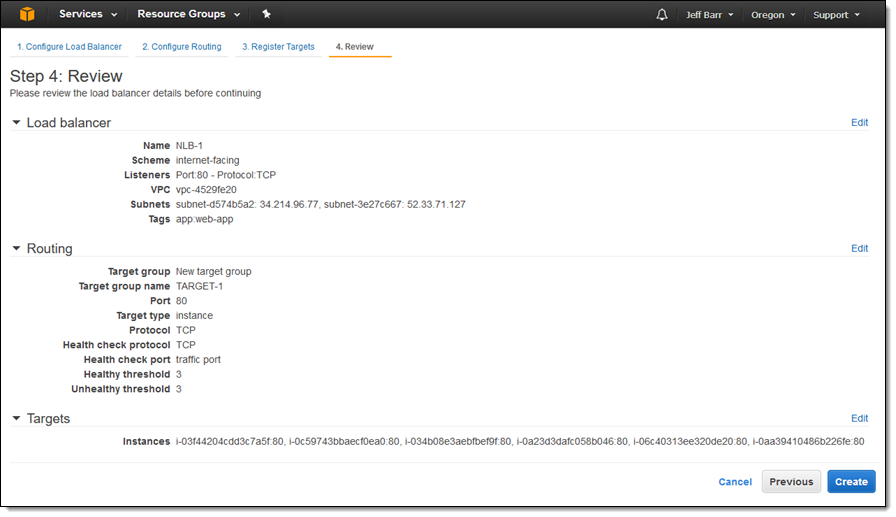

I make sure that everything looks good and then click on Create:

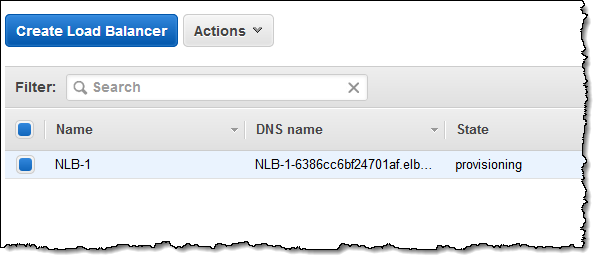

The state of my new Load Balancer is provisioning, switching to active within a minute or so:

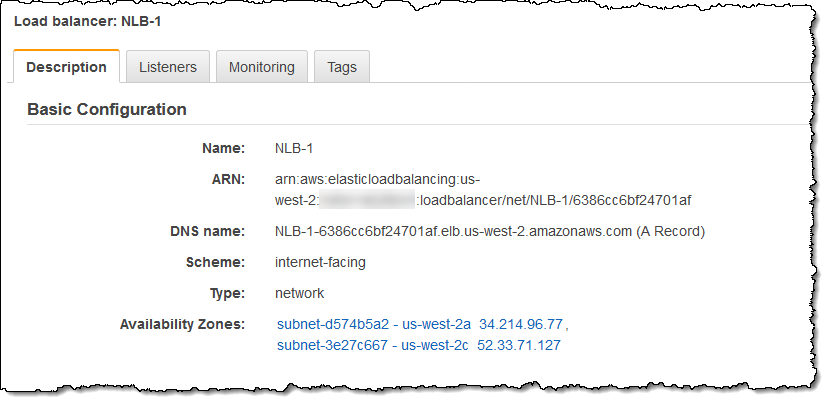

For testing purposes, I simply grab the DNS name of the Load Balancer from the console (in practice I would use Amazon Route 53 and a more friendly name):

Then I sent it a ton of traffic (I intended to let it run for just a second or two but got distracted and it created a huge number of processes, so this was a happy accident):

$ while true;

> do

> wget http://nlb-1-6386cc6bf24701af.elb.us-west-2.amazonaws.com/phpinfo2.php &

> done

A more disciplined test would use a tool like Bees with Machine Guns, of course!

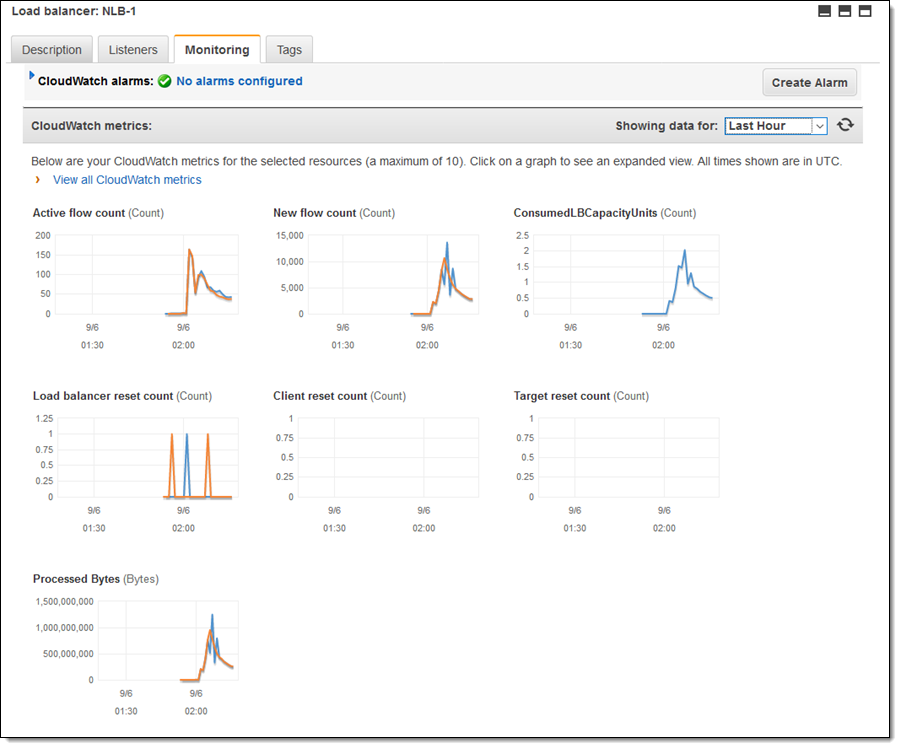

I took a quick break to let some traffic flow and then checked the CloudWatch metrics for my Load Balancer, finding that it was able to handle the sudden onslaught of traffic with ease:

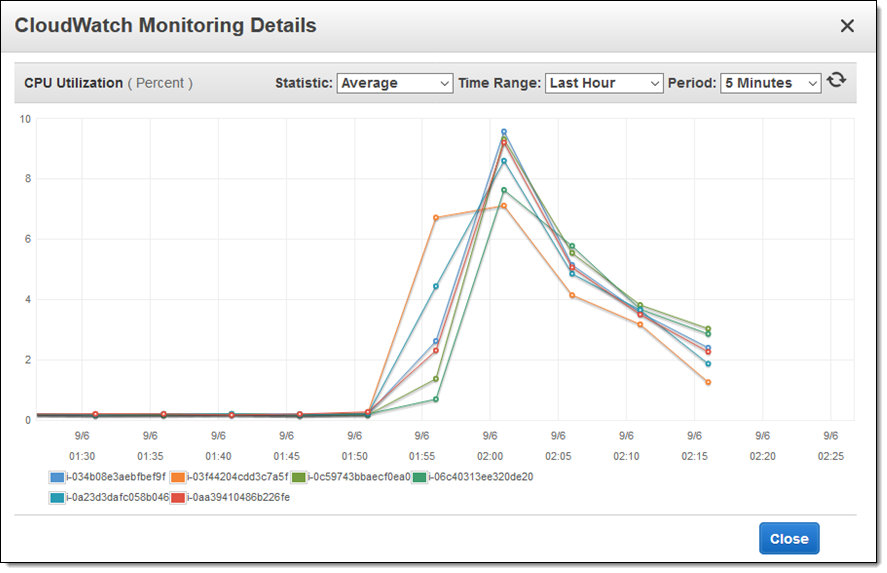

I also looked at my EC2 instances to see how they were faring under the load (really well, it turns out):

It turns out that my colleagues did run a more disciplined test than I did. They set up a Network Load Balancer and backed it with an Auto Scaled fleet of EC2 instances. They set up a second fleet composed of hundreds of EC2 instances, each running Bees with Machine Guns and configured to generate traffic with highly variable request and response sizes. Beginning at 1.5 million requests per second, they quickly turned the dial all the way up, reaching over 3 million requests per second and 30 Gbps of aggregate bandwidth before maxing out their test resources.

Choosing a Load Balancer

As always, you should consider the needs of your application when you choose a load balancer. Here are some guidelines:

Network Load Balancer (NLB) – Ideal for load balancing of TCP traffic, NLB is capable of handling millions of requests per second while maintaining ultra-low latencies. NLB is optimized to handle sudden and volatile traffic patterns while using a single static IP address per Availability Zone.

Application Load Balancer (ALB) – Ideal for advanced load balancing of HTTP and HTTPS traffic, ALB provides advanced request routing that supports modern application architectures, including microservices and container-based applications.

Classic Load Balancer (CLB) – Ideal for applications that were built within the EC2-Classic network.

For a side-by-side feature comparison, see the Elastic Load Balancer Details table.

If you are currently using a Classic Load Balancer and would like to migrate to a Network Load Balancer, take a look at our new Load Balancer Copy Utility. This Python tool will help you to create a Network Load Balancer with the same configuration as an existing Classic Load Balancer. It can also register your existing EC2 instances with the new load balancer.

Pricing & Availability

Like the Application Load Balancer, pricing is based on Load Balancer Capacity Units, or LCUs. Billing is $0.006 per LCU, based on the highest value seen across the following dimensions:

- Bandwidth – 1 GB per LCU.

- New Connections – 800 per LCU.

- Active Connections – 100,000 per LCU.

Most applications are bandwidth-bound and should see a cost reduction (for load balancing) of about 25% when compared to Application or Classic Load Balancers.

Network Load Balancers are available today in all AWS commercial regions except China (Beijing), supported by AWS CloudFormation, Auto Scaling, and Amazon Elastic Container Service (Amazon ECS).

— Jeff;