Guidance for Deploying Rancher RKE2 at the Edge on AWS

Overview

How it works

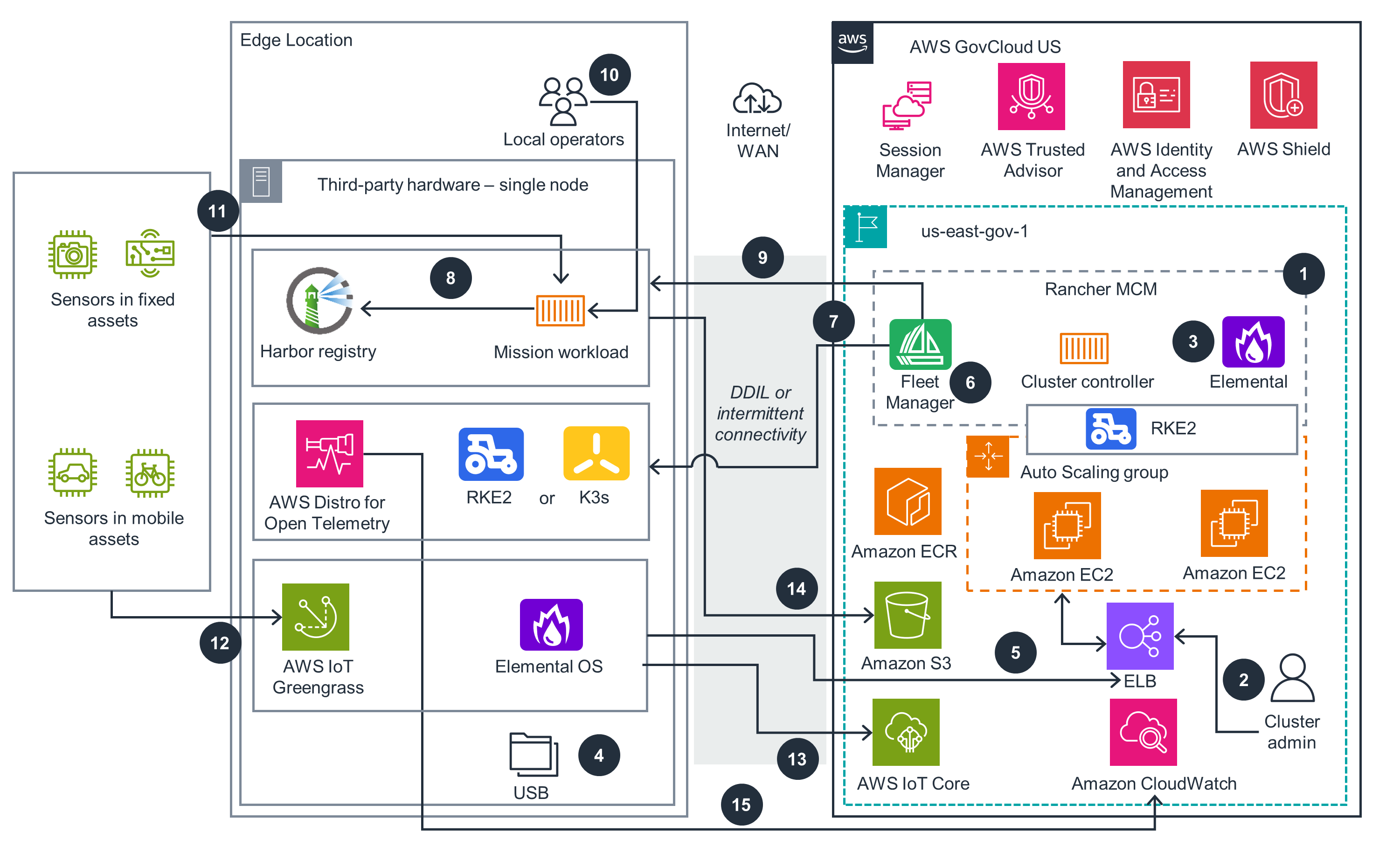

Single-node cluster

This architecture diagram shows an edge-and-cloud pattern for deploying containerized workloads on a single-node cluster at the edge, using RKE2 on any third-party hardware in denied, disrupted, intermittent, and limited (DDIL) environments.

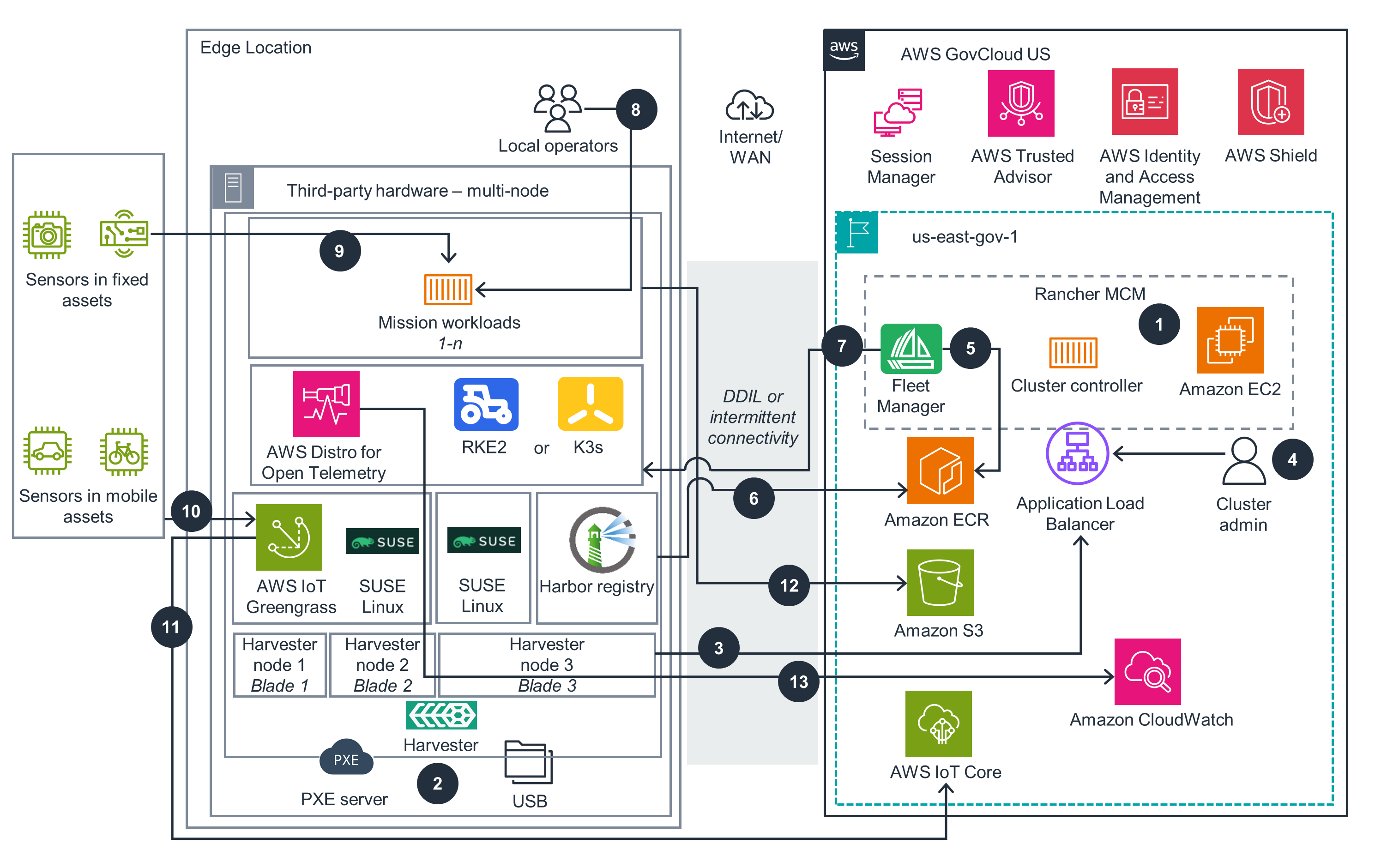

Multi-node cluster

This architecture diagram shows two distinct edge-to-cloud patterns for managing applications in tactical edge scenarios, illustrating how mission-critical workloads can be deployed on RKE2 in DDIL environments.

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

For consistent, continuous deployment of edge applications through a DevOps pipeline, use Rancher Multi-Cluster Manager (MCM) and Rancher Fleet to automate the deployment and updating of RKE2 container workloads at the edge. Gain visibility into the performance and status of your edge components by integrating AWS Distro for OpenTelemetry with Amazon CloudWatch, which gives you the metrics you need to optimize your edge operations.
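As a minimal sketch of what a Fleet-driven deployment could look like, the following Python builds a Fleet `GitRepo` manifest that points edge clusters at a Git repository of workload definitions. The repository URL, path, cluster label, and resource name are hypothetical placeholders, and the `fleet-default` namespace is an assumption about where the downstream RKE2 clusters are registered; adjust all of them to your own environment.

```python
import json


def fleet_gitrepo(name, repo_url, branch, paths, cluster_labels,
                  namespace="fleet-default"):
    """Build a Fleet GitRepo manifest as a plain dictionary.

    Fleet watches the given repository and deploys the listed paths to
    every registered cluster whose labels match `cluster_labels`.
    """
    return {
        "apiVersion": "fleet.cattle.io/v1alpha1",
        "kind": "GitRepo",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "repo": repo_url,
            "branch": branch,
            "paths": list(paths),
            "targets": [
                {
                    "name": "edge",  # hypothetical target name
                    "clusterSelector": {"matchLabels": dict(cluster_labels)},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = fleet_gitrepo(
        name="edge-apps",                                   # hypothetical
        repo_url="https://example.com/org/edge-apps.git",   # hypothetical
        branch="main",
        paths=["workloads/"],                               # hypothetical
        cluster_labels={"env": "edge"},                     # hypothetical
    )
    # JSON is a subset of YAML, so this output can be saved to a file
    # and applied to the Rancher management cluster like any manifest.
    print(json.dumps(manifest, indent=2))
```

Selecting clusters by label rather than by name means that a newly registered edge cluster carrying the same label would pick up the workloads without a change to the manifest.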

Secure your edge infrastructure by using Session Manager, a capability of AWS Systems Manager, to establish secure connections to your EC2 instances running Rancher MCM. AWS Identity and Access Management (IAM) roles and policies control access, and IAM instance roles enable your EC2 instances to interact with other AWS services. Use the Harbor registry to scan your container images for vulnerabilities and to enforce code compliance. AWS Shield Standard protects your environment from distributed denial of service (DDoS) attacks.
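To make the instance-role idea concrete, here is a hedged sketch of the two pieces an EC2 instance typically needs before Session Manager can reach it: a trust policy that lets the EC2 service assume the role, and the AWS managed policy `AmazonSSMManagedInstanceCore`. The role name and the shape of the `instance_role_spec` dictionary are illustrative assumptions, not part of this Guidance; the code only builds the documents and makes no AWS calls.

```python
import json

# AWS managed policy that grants an instance the permissions
# Systems Manager (including Session Manager) needs.
SSM_CORE_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"


def ec2_trust_policy():
    """Trust policy that allows the EC2 service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def instance_role_spec(role_name):
    """Describe an instance role (hypothetical structure for illustration)."""
    return {
        "RoleName": role_name,
        "AssumeRolePolicyDocument": json.dumps(ec2_trust_policy()),
        "ManagedPolicyArns": [SSM_CORE_POLICY_ARN],
    }


if __name__ == "__main__":
    # "rancher-mcm-instance-role" is a made-up name.
    print(json.dumps(instance_role_spec("rancher-mcm-instance-role"), indent=2))
```

With a role like this attached through an instance profile, operators can open sessions without inbound SSH ports or long-lived keys on the Rancher MCM instances.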

Amazon EC2 Auto Scaling helps keep your edge applications highly available and resilient by maintaining the capacity they need. Elastic Load Balancing (ELB) distributes traffic across multiple EC2 instances in different Availability Zones. Use multi-AZ and multi-Region configurations to make your edge solution more reliable and fault-tolerant.

Monitor the performance of your edge solution using CloudWatch metrics, and use Amazon EC2 Auto Scaling to optimize resource utilization. By analyzing performance data to identify and address bottlenecks, you can help ensure that your edge applications and Rancher MCM operate efficiently.
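One way to turn this monitoring into something actionable is a CloudWatch alarm on the average CPU utilization of the Auto Scaling group. The sketch below only assembles the alarm parameters; the group name, alarm name, threshold, and evaluation window are assumptions chosen for illustration. The resulting dictionary uses the parameter names accepted by the CloudWatch `PutMetricAlarm` API, so it could be passed to an SDK call such as boto3's `put_metric_alarm(**params)`.

```python
def cpu_alarm_params(asg_name, threshold=80.0, period_seconds=300,
                     evaluation_periods=3):
    """Build PutMetricAlarm parameters for sustained high CPU on a group."""
    return {
        "AlarmName": f"{asg_name}-high-cpu",  # hypothetical naming scheme
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "AutoScalingGroupName", "Value": asg_name}],
        "Statistic": "Average",
        "Period": period_seconds,
        "EvaluationPeriods": evaluation_periods,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
    }


if __name__ == "__main__":
    # "rancher-mcm-asg" is a made-up Auto Scaling group name.
    for key, value in cpu_alarm_params("rancher-mcm-asg").items():
        print(f"{key}: {value}")
```

Requiring several consecutive periods above the threshold helps separate a short spike from a sustained bottleneck that is worth investigating.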

Amazon EC2 Auto Scaling automatically adjusts your capacity based on demand, expanding during peak periods and scaling down during slower times. AWS Trusted Advisor provides recommendations on cost optimization opportunities, while the open-source Harbor registry eliminates the need to purchase commercial licenses.
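Demand-based scaling of this kind is commonly expressed as a target tracking policy, which adds or removes instances to hold a metric near a target value. The following sketch assembles such a policy for average CPU utilization; the group name, policy name, and the 50 percent target are illustrative assumptions rather than values from this Guidance. The keys match the Amazon EC2 Auto Scaling `PutScalingPolicy` API, so the dictionary could be passed to boto3's `put_scaling_policy(**params)`.

```python
def target_tracking_policy(asg_name, target_cpu_percent=50.0):
    """Build PutScalingPolicy parameters that track average CPU utilization."""
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-cpu-target",  # hypothetical naming scheme
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            "TargetValue": float(target_cpu_percent),
        },
    }


if __name__ == "__main__":
    # "rancher-mcm-asg" is a made-up Auto Scaling group name.
    policy = target_tracking_policy("rancher-mcm-asg", target_cpu_percent=50)
    print(policy["PolicyName"], policy["TargetTrackingConfiguration"])
```

A lower target leaves more headroom for bursts at a higher cost, while a higher target runs instances hotter and cheaper, so the value is a trade-off to tune per workload.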

Reduce your environmental impact by leveraging the wide variety of EC2 instance types to choose the right-sized resources for your workloads. Combine this with Amazon EC2 Auto Scaling to automatically scale resources up and down, minimizing unused capacity and lowering your carbon footprint.
