How was this content?

- Learn

- Startup’s guide to GenAIOps on AWS part 1: Future-proof your AI stack from day one

Startup’s guide to GenAIOps on AWS part 1: Future-proof your AI stack from day one

Startups are investing heavily in generative AI, with initiatives promising to empower and transform. However, many are still in the early phases of extracting value from these investments. CIOs and CTOs face the challenge of navigating a rapidly evolving landscape of technologies and methodologies, while ensuring their decisions support both immediate requirements and long-term strategic objectives.

Generative AI Operations or GenAIOps, is an emerging framework that is helping startups like yours overcome these challenges. GenAIOps builds upon established practices of Machine Learning Operations (MLOps) but broadens the focus to span the entire lifecycle of generative AI, from development and training to deployment and continuous monitoring.

By integrating GenAIOps into your operations from day one, you can streamline your workflows and position yourself for long-term success in an increasingly AI-driven world. This is thanks to three key, overarching benefits. First, early implementation gives you a clean slate advantage over established companies burdened with legacy systems. This provides the flexibility to build efficient AI pipelines from scratch using best practices in GenAIOps. Second, implementing GenAIOps early helps avoid technical debt by preventing inefficiencies building up and hindering scalability or innovation later. And finally, demonstrating a robust GenAIOps framework from day one signals your preparedness and scalability to potential stakeholders, aka investor appeal!

When you’re building on AWS, mastering GenAIOps isn't just a technical consideration—it's a cornerstone of business success. In today’s competitive landscape, being able to efficiently use generative AI capabilities can mean the difference between your startup rapidly scaling versus its growth stalling.

This three-part series will serve as your practical guide to implementing GenAIOps at every stage of your startup journey. We'll explore how GenAIOps practices evolve with the growth of your organization, providing actionable frameworks and tools to maintain innovation velocity while ensuring well-architected AI deployment.

The startup imperative: Why GenAIOps matters from day one

When you’re operating in a fast-paced, resource-constrained environment, adopting GenAIOps from the outset can provide a significant competitive edge. While implementing structured AI operations might seem like a luxury for larger companies, startups that establish these practices early often outpace competitors who manage AI systems manually. Here's how GenAIOps delivers measurable advantages:

1. Accelerating time-to-market

- Rapid prototyping and iteration: GenAIOps enables you to quickly develop, test, and deploy generative AI applications, reducing product development cycles by automating workflows and streamlining processes.

- Agility in market adaptation: Respond swiftly to market trends and customer demands by leveraging GenAIOps for faster iterations and feedback loops, keeping you ahead of your competitors.

2. Enhanced decision-making with data-driven insights

- Actionable intelligence: GenAIOps enables you to monitor system performance, user interactions, and AI model behavior, automatically synthesizing this data into actionable insights that accelerate product roadmap decisions, feature prioritization, and go-to-market strategies.

- Risk mitigation: Leverage GenAIOps to automatically identify unusual patterns in AI model performance, user engagement drops, or resource usage spikes, providing decision-makers with early warning signals that prevent costly issues and inform corrective strategies.

3. Competitive differentiation

- Personalized customer experiences: GenAIOps enables you to combine standardized AI workflows with real-time customer data, creating hyper-personalized products and services at scale while your competitors are still manually managing their AI operations.

- AI moat through operational excellence: GenAIOps lets you rapidly experiment with new features—such as AI agents—by automating the operational aspects of generative AI. While competitors spend weeks manually configuring and deploying each new AI feature, your standardized workflows let you launch experiments in days.

4. Building a future-proof foundation

- Seamless adoption of emerging AI technologies: When new AI models or tools emerge, GenAIOps pipelines let you evaluate and deploy them without rebuilding your entire system. Startups with ad-hoc AI implementations often face months of technical debt cleanup that GenAIOps-enabled teams complete in weeks.

- Compliance readiness from day one: GenAIOps embeds monitoring, audit trails, and ethical guardrails directly into your AI operations, ensuring you meet regulatory requirements and maintain responsible AI practices as you scale—avoiding the expensive retrofitting that many startups face later.

.jpg)

The initial investment in GenAIOps pays dividends as your team and user base grow. Startups that establish these foundations early avoid the expensive migrations and system overhauls that plague companies trying to scale ad-hoc AI implementations.

Core components of GenAIOps: An application-centric approach

Our GenAIOps pipeline takes a holistic, application-centric approach. It prioritizes end-to-end applications rather than the commonly used method of focusing on isolated foundation models operation. In doing so, you can directly address the challenges of integrating generative AI into your production systems.

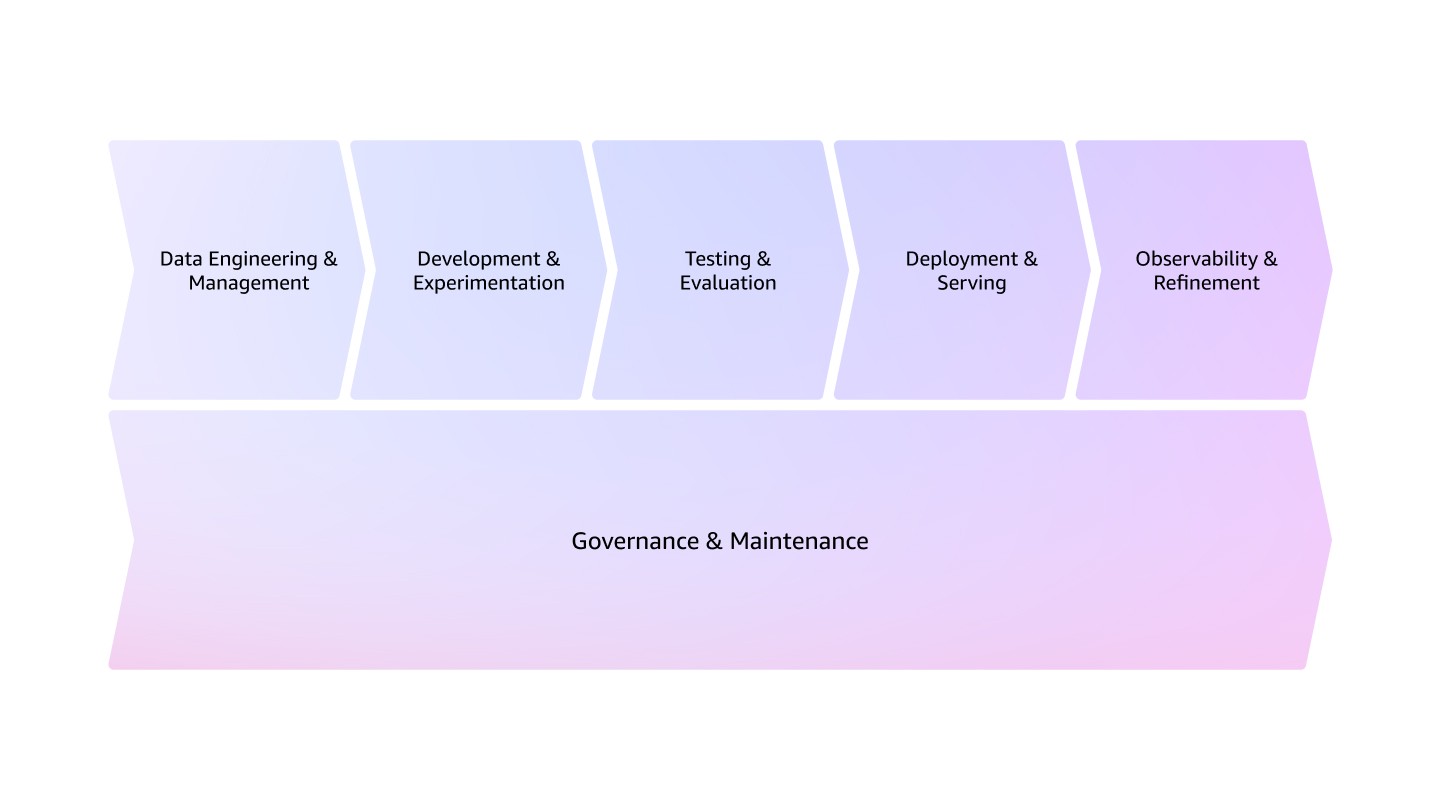

The AWS GenAIOps pipeline encompasses five interconnected stages shown in the figure below. The entire workflow is underpinned by robust governance and maintenance practices that span the complete application lifecycle.

The complexity and focus within each pipeline stage evolves with your startup's maturity. For example, if you're an early-stage startup, your teams will be building MVPs that typically prioritize rapid experimentation and basic safety guardrails, whereas if you're a scaling startup, you'll need more sophisticated observability systems, governance frameworks, and cost optimization strategies.

Below, we provide a high-level overview of each stage. Parts 2 and 3 of this series will dive deep into the specific practices, tools, and services for implementing these stages based on your startup's maturity level.

Data engineering and management

Building a strong data foundation is essential for GenAIOps, ensuring your generative AI systems are powered by high-quality, well-organized data. This allows your applications to evolve alongside business needs and helps you prepare various dataset types to support later stages in the GenAIOps pipeline. Having high quality datasets enables rapid experimentation during development, ensures evaluation and deployment consistency, and establishes the foundation for comprehensive observability and continuous improvement.

Development and experimentation

Here you’ll use the curated datasets you’ve developed in the previous phase to develop and refine AI solutions tailored to your specific business challenges. Through experimentation and iteration, you can identify the most effective components and architecture choices before committing to full implementation—and all of the investment and resources this requires!

This will help you mitigate the risks associated with adopting suboptimal designs early in the development process, establishing a strong foundation for successful deployment and long-term maintenance of AI solutions.

Testing and evaluation

Rigorous testing serves as the critical quality gate in AI application development, ensuring that all components work together reliably and effectively. It ensures your application meets business requirements, performs consistently, and handles edge cases.

This stage also establishes performance benchmarks for the production deployment and defines the initial set of metrics that you’ll monitor in production.

Deployment and serving

Moving into production marks the critical transition where your AI solution transforms from experimental capabilities into practical, accessible functionality for your end users.

While previous stages focus on capabilities and validation, deployment and serving focuses on reliability, performance, and integration at scale. It also feeds directly into the observability and refinement stage by establishing the monitoring touchpoints and performance baselines needed for continuous improvement.

Observability and refinement

Production observability ensures your AI applications remain effective, reliable, and aligned with evolving business objectives. By feeding the observability insights back to earlier pipeline stages, you create a true feedback loop where production data drives the next cycle of improvements—whether that means refining prompts, updating knowledge bases, or adjusting model selection.

Governance and maintenance

As the overarching layer that spans all stages of the GenAIOps pipeline, this stage ensures that your AI systems adhere to governance frameworks and meet necessary compliance standards.

Integrating governance into every phase means you can proactively mitigate risks, ensuring that your generative AI systems remain trustworthy, compliant, and aligned with organizational values as they scale and evolve. This holistic approach not only enhances the quality and reliability of AI outputs but also fosters a culture of responsible AI development, crucial for your startup’s long-term success and for fostering stakeholder trust.

Building on AWS from day one

Whether you're a pre-seed startup building your first AI prototype or a Series B company managing complex AI workflows, AWS provides the complete toolkit to implement this GenAIOps pipeline from day one. Amazon Bedrock eliminates infrastructure overhead with managed foundation models and built-in safety guardrails, while Amazon SageMaker handles everything from experimentation to production deployment. AWS's serverless architecture automatically scales your AI applications from prototype to production without upfront costs—you only pay for what you use, preserving critical runway. AWS Activate is a program for startups that provides not just credits, but dedicated technical support and architecture guidance to help lean teams implement enterprise-grade AI operations. This integrated approach lets you focus on building differentiated AI features while AWS handles the underlying complexity of model management, monitoring, and governance—turning GenAIOps from a future aspiration into an immediate competitive advantage.

Looking ahead

GenAIOps plays a critical role in your startup’s operations and by adopting this framework from day one, you’ll be setting yourself up for long-term success. In the upcoming parts of this series, we'll take a deeper dive into practical, stage-specific implementations of GenAIOps on AWS.

Part 2 focuses on essential GenAIOps practices for startups in earlier stages of their journey, helping you establish the right foundation while maintaining agility. Finally, Part 3 explores advanced GenAIOps strategies for when you’re entering the scale stage, ensuring robust, efficient, and sustainable AI operations to support your startup’s growth.

Whether you're just starting your AI journey or looking to optimize your existing operations, this series will provide actionable insights and AWS-specific recommendations for each stage of growth.

Nima Seifi

Nima Seifi is a Senior Solutions Architect at AWS, based in Southern California, where he specializes in SaaS and GenAIOps. He serves as a technical advisor to startups building on AWS. Prior to AWS, he worked as a DevOps architect in the ecommerce industry for over 5 years, following a decade of R&D work in mobile internet technologies. Nima has 20+ publications in prominent technical journals and conferences and holds 7 US patents. Outside of work, he enjoys reading, watching documentaries, and taking beach walks.

Anu Jayanthi

Anu Jayanthi works with Startup customers, providing advocacy and strategic technical guidance to help plan and build solutions using AWS best practices.

.jpg)

Pat Santora

Pat Santora is a GenAI Labs Cloud Architect and Technologist with over 25 years of experience implementing solutions across the cloud for both enterprises and startups. He has successfully launched numerous products from inception, led analytical re-architecture projects, and managed remote teams with a philosophy centered on transparency and trust. His technical expertise spans strategic planning, systems management, and architectural redesign, complemented by interests in GenAI, Analytics, and Big Data.

.jpg)

Clement Perrot

Clement Perrot helps top-tier startups accelerate their AI initiatives by providing strategic guidance on model selection, responsible AI implementation, and optimized machine learning operations. A serial entrepreneur and Inc 30 Under 30 honoree, he brings deep expertise in building and scaling AI companies, having founded and successfully exited multiple ventures in consumer technology and enterprise AI.

How was this content?