Guidance for Multi-Cluster Application Management with Karmada and Amazon EKS

Centralized Kubernetes multi-cluster management and deployment

Overview

This Guidance shows how to use Karmada with Amazon Elastic Kubernetes Service (Amazon EKS) to manage and run your cloud-native applications across multiple Kubernetes clusters on AWS. Karmada provides ready-to-deploy automation for multi-cluster application management, offering features like centralized multi-cloud management, high availability, failure recovery, and traffic scheduling. You can centrally manage and control multiple Kubernetes clusters through a single entry point without modifying your applications.

This Guidance is compatible with the Kubernetes API, so it integrates with your existing Kubernetes tooling. It also provides built-in policy sets for a variety of deployment scenarios, including active-active configurations, remote disaster recovery, and geo-redundancy. These policies let you manage applications across multiple clusters with high availability and resilience.
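As an illustration of what such a policy can look like, the sketch below builds a Karmada `PropagationPolicy` manifest that splits a Deployment's replicas evenly across two member clusters, roughly an active-active layout. The policy name, Deployment name, and cluster names (`demo-policy`, `demo-app`, `eks-member-1`, `eks-member-2`) are hypothetical placeholders and do not come from this Guidance.

```python
import json


def propagation_policy(name, deployment, weights, namespace="default"):
    """Build a Karmada PropagationPolicy manifest as a plain dict.

    `weights` maps a member-cluster name to its static weight.
    All names passed in are caller-supplied placeholders.
    """
    return {
        "apiVersion": "policy.karmada.io/v1alpha1",
        "kind": "PropagationPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Select the workload that the policy should propagate.
            "resourceSelectors": [
                {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment}
            ],
            "placement": {
                # Restrict placement to the listed member clusters.
                "clusterAffinity": {"clusterNames": sorted(weights)},
                # Divide replicas between clusters according to static weights.
                "replicaScheduling": {
                    "replicaSchedulingType": "Divided",
                    "replicaDivisionPreference": "Weighted",
                    "weightPreference": {
                        "staticWeightList": [
                            {"targetCluster": {"clusterNames": [cluster]}, "weight": weight}
                            for cluster, weight in sorted(weights.items())
                        ]
                    },
                },
            },
        },
    }


# Hypothetical example: equal weights give an even split across both clusters.
policy = propagation_policy(
    "demo-policy", "demo-app", {"eks-member-1": 1, "eks-member-2": 1}
)
manifest = json.dumps(policy, indent=2)
print(manifest)
```

Because `kubectl` accepts JSON manifests, you could save the printed output to a file and apply it against the Karmada API server using the Karmada kubeconfig. Treat the field values as a starting point and check them against the Karmada version you deploy.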

How it works

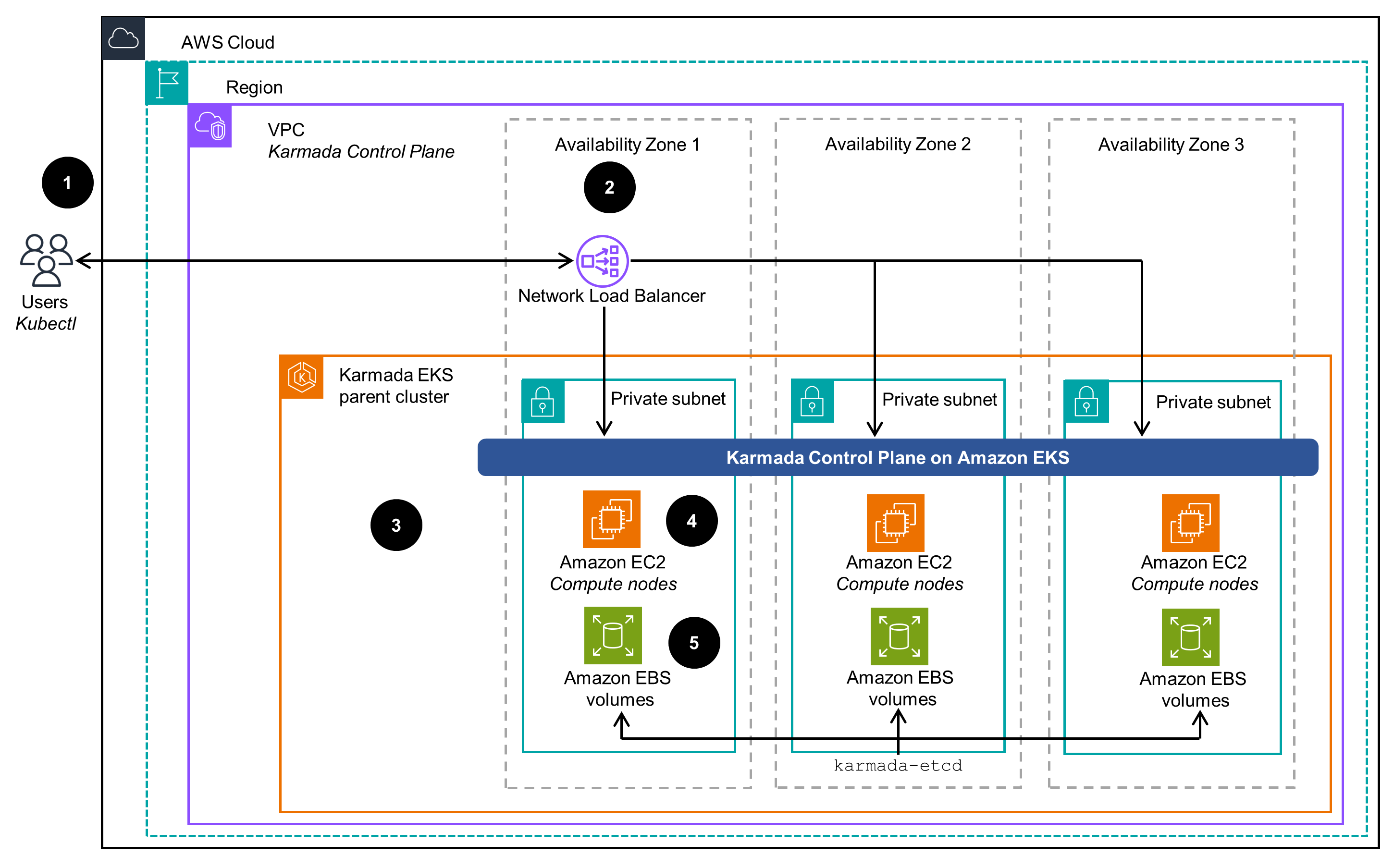

Karmada Control Plane

This architecture diagram shows how to deploy a Karmada Control Plane on an Amazon EKS parent cluster.

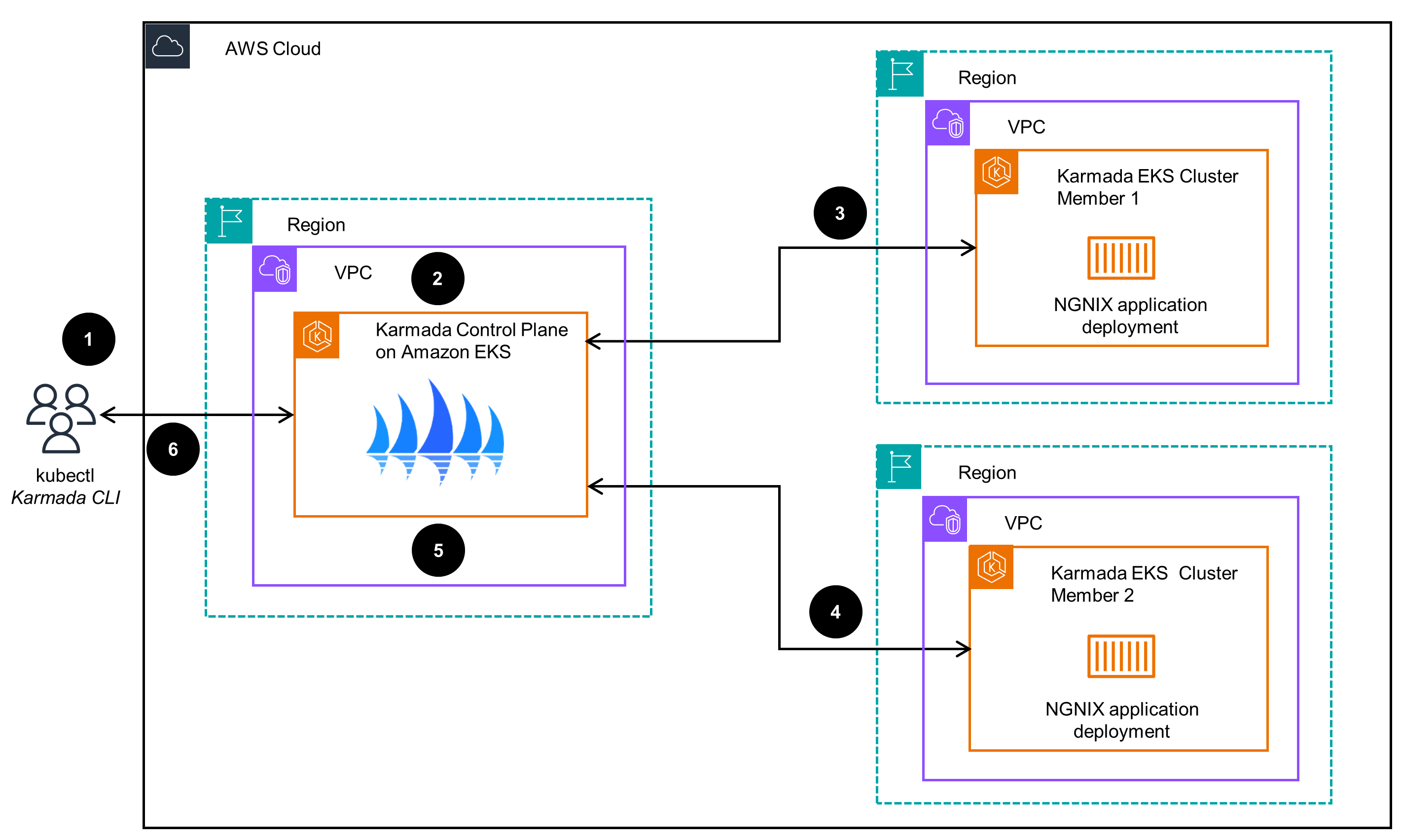

Application deployment to Karmada-managed EKS clusters

This diagram shows how to deploy applications to Karmada-managed Amazon EKS clusters.

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

Operational Excellence

Amazon EKS provides dynamic scaling of compute nodes across Availability Zones, enabling reliable and consistent Kubernetes cluster deployment, management, and upgrades using infrastructure as code (IaC). It also integrates with Amazon CloudWatch Container Insights for monitoring.

Additionally, Karmada simplifies multi-cluster and multi-cloud Kubernetes management from a centralized portal, reducing operational overhead by applying policies and configurations uniformly across clusters for consistent application deployment and networking. Karmada also supports hybrid deployments across AWS accounts, allowing you to choose the most suitable infrastructure.

Security

AWS Identity and Access Management (IAM) lets you create policies with granular controls that specify which actions are permitted and which are denied. Using IAM policies helps you follow the principle of least privilege for resource access. IAM also integrates with AWS CloudTrail to log user activity, which improves auditing and visibility into the actions performed.
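To make the least-privilege idea concrete, here is a minimal sketch of an IAM policy document that allows a single read-only Amazon EKS action on one named cluster and nothing else. The Region, account ID, and cluster name are hypothetical placeholders, and the action you grant in practice depends on what your operators actually need.

```python
import json


def describe_cluster_policy(region, account_id, cluster_name):
    """Return an IAM policy document that only allows eks:DescribeCluster
    on one specific cluster (all arguments are caller-supplied placeholders)."""
    cluster_arn = f"arn:aws:eks:{region}:{account_id}:cluster/{cluster_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DescribeSingleCluster",
                "Effect": "Allow",
                # One read-only action, scoped to one resource ARN.
                "Action": ["eks:DescribeCluster"],
                "Resource": cluster_arn,
            }
        ],
    }


# Hypothetical values; 111122223333 is a documentation-style account ID.
iam_policy = describe_cluster_policy("us-east-1", "111122223333", "karmada-parent")
print(json.dumps(iam_policy, indent=2))
```

Anything not explicitly allowed by a policy like this is implicitly denied, which is what keeps the grant narrow.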

Additionally, Amazon EKS integrates with Amazon Virtual Private Cloud (Amazon VPC) to provide logical network isolation for the Kubernetes nodes. This network-level isolation, combined with granular access controls, strengthens the security posture of the Amazon EKS environment, which is built on the open-source Kubernetes API. Together, IAM and Amazon VPC apply industry-standard security and encryption practices to both the platform and application layers.

Reliability

This Guidance supports a highly available topology in several ways. First, Amazon EKS deploys the Kubernetes control and compute planes across multiple Availability Zones. Second, Amazon EKS uses Elastic Load Balancing (ELB) to route application traffic to healthy nodes. Third, Amazon EKS sends cluster metrics to CloudWatch, so you can configure custom alerts based on thresholds. Fourth, Kubernetes has built-in high availability features, including a distributed etcd database and the ability to run the control plane on multiple servers across Availability Zones.
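A threshold-based alert of this kind can be sketched as follows. The example assumes that Container Insights is enabled on the cluster and uses its `node_cpu_utilization` metric in the `ContainerInsights` namespace; the cluster name, alarm name, and 80 percent threshold are hypothetical choices. The parameters are built separately from the API call so that you can inspect them without AWS credentials.

```python
def cpu_alarm_params(cluster_name, threshold_percent):
    """Build keyword arguments for CloudWatch put_metric_alarm.

    Assumes Container Insights publishes node_cpu_utilization for the cluster.
    """
    return {
        "AlarmName": f"{cluster_name}-node-cpu-high",
        "Namespace": "ContainerInsights",
        "MetricName": "node_cpu_utilization",
        "Dimensions": [{"Name": "ClusterName", "Value": cluster_name}],
        "Statistic": "Average",
        "Period": 300,               # evaluate 5-minute averages
        "EvaluationPeriods": 3,      # require 3 consecutive breaching periods
        "Threshold": float(threshold_percent),
        "ComparisonOperator": "GreaterThanThreshold",
    }


def create_alarm(params):
    """Create the alarm; requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the sketch loads without boto3 installed

    boto3.client("cloudwatch").put_metric_alarm(**params)


# Hypothetical cluster name and threshold.
alarm_params = cpu_alarm_params("karmada-member-1", 80)
print(alarm_params["AlarmName"])
```

Calling `create_alarm(alarm_params)` would register the alarm. To receive notifications, you would typically also pass `AlarmActions` with the ARN of a notification target.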

Lastly, Karmada enhances reliability through cross-cluster load balancing and auto-scaling, dynamically scheduling workloads based on utilization. Karmada itself is designed for high availability with multi-master clustering and redundancy.
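The idea of spreading replicas across clusters in proportion to available capacity can be illustrated with a small weighted-division routine. This is a simplified sketch for intuition only, not Karmada's scheduler implementation; the cluster names and weights are hypothetical, and the largest-remainder rounding used here is an assumption chosen to keep the example short.

```python
def divide_replicas(total, weights):
    """Split `total` replicas across clusters in proportion to `weights`.

    Uses largest-remainder rounding so the parts always sum to `total`.
    Ties are broken alphabetically by cluster name for determinism.
    """
    weight_sum = sum(weights.values())
    if total < 0 or weight_sum <= 0:
        raise ValueError("total must be >= 0 and weights must sum to > 0")

    # Integer share per cluster, plus the remainder left over by floor division.
    base = {c: total * w // weight_sum for c, w in weights.items()}
    remainder = {c: total * w % weight_sum for c, w in weights.items()}

    # Hand out the leftover replicas to the clusters with the largest remainders.
    leftover = total - sum(base.values())
    for cluster in sorted(weights, key=lambda c: (-remainder[c], c))[:leftover]:
        base[cluster] += 1
    return base


# Hypothetical weights, for example derived from spare capacity per cluster.
print(divide_replicas(10, {"eks-a": 2, "eks-b": 1, "eks-c": 1}))
```

If a cluster's weight drops because its utilization rises, rerunning the division shifts replicas toward the clusters with more headroom, which is the behavior the paragraph above describes at a high level.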

Performance Efficiency

Amazon EKS supports auto-scaling of compute nodes, which matches capacity to demand and improves performance efficiency. You can choose from various Amazon EC2 instance types to better match the workloads you are running.

Karmada can deploy workloads closer to end-users on Amazon EKS clusters in different geographical locations, which reduces latency and improves the user experience. Integrated with Kubernetes native auto-scaling features, Karmada can help in automatically scaling applications based on the demand for efficient resource use.

Cost Optimization

Amazon EKS charges a fixed per-cluster rate for the control plane, and you can use Kubernetes capabilities to optimize your pod resource requests and lower costs. For example, Amazon EKS can automatically scale compute nodes based on workload demand to reduce over-provisioning.

In addition, Karmada simplifies the management and deployment of multiple Amazon EKS clusters and workloads across different clusters from a central location. This reduces the overhead costs related to the administration and management of multiple Amazon EKS clusters and their associated workloads.

Sustainability

Amazon EKS runs on AWS cloud infrastructure, which has been engineered with sustainability in mind. This includes custom-designed, Arm-based AWS Graviton processors that deliver significantly higher performance per watt than traditional x86 chips. Beyond energy-efficient hardware, the AWS data centers hosting Amazon EKS also use innovative cooling and power distribution systems to maximize efficiency. By running on this foundation, Amazon EKS can provide the compute resources that containerized applications require with a smaller environmental footprint than on-premises deployments.
