
Accelerating AI/ML scaling and AI development with Anyscale and AWS

Building a cloud-distributed and scalable artificial intelligence (AI) application is a cross-team effort. It requires complicated resource management and raises numerous production concerns, such as code changes, refactoring, infrastructure setup, and complex developer operations (DevOps). These can complicate the development process, slow down time-to-market, and keep developers from focusing on product innovation.

“With the lack of established development-to-production pathways, some estimates place the AI development project failure rate at nearly 50%,” explains Rob Ferguson, Amazon Web Services (AWS) global head of AI and machine learning (ML) for startups.

That’s where Anyscale comes in. Ray (the first unified, distributed compute framework for scaling ML or Python workloads) and the Anyscale Platform (a fully managed Ray platform provided by the creators of Ray) help AI teams speed up development and experimentation and scale applications with far less effort, improving the odds that their AI projects succeed.

Ray was conceived at the University of California Berkeley in the RISELab and open-sourced in 2017. Today, Ray is widely adopted by organizations globally for developing AI and Python applications at scale.

Improve scalability and developer ergonomics with the Anyscale Platform

To advance AI development and scaling for every organization, the Anyscale Platform extends the capabilities of Ray by enabling developers and cross-functional teams to accelerate experimentation and speed up the development of ML applications at scale. The Anyscale Platform and Ray increase developer velocity by providing scalable compute for data ingestion and preprocessing, ML training, hyperparameter tuning, model serving, and more, all while integrating seamlessly with the rest of the ML ecosystem.

The Anyscale Platform extends Ray in several ways. Anyscale Workspaces gives developers a unified development experience for scaling ML workloads from a laptop to the cloud with no code changes. Developers can use a single environment to build, test, and deploy workloads to production while keeping the tools they are familiar with. The Anyscale Platform also improves iteration speed by reducing cluster setup time by 5X compared with Ray. Finally, the Anyscale Platform provides observability, monitoring, and job scheduling out of the box.

“The capabilities of the Anyscale Platform go far beyond the capabilities of Ray and make AI/ML and Python workload development, experimentation, and scaling even easier,” said Ion Stoica, co-founder and executive chairman of Anyscale. “Thousands of organizations rely on Ray to scale their ML and Python applications, and a growing number of organizations are leveraging the Anyscale Platform to build, test, and deploy these applications to production faster than ever.”

From AI developers to ML practitioners to data scientists and engineers, all contributors can benefit from the ease, scalability, and developer ergonomics that the Anyscale Platform provides for the full AI journey, from model development, tuning, and training to inference and scalable model serving.

Anyscale’s mission is to ease the building and scaling of AI/ML and Python workloads, as well as AI applications. Their team democratizes AI by making it easier for data scientists and ML engineers to work with project structures that make sense for building production ML systems. Scalable, unified, and open distributed computing, such as what Anyscale offers for Ray, helps lead to the mass democratization and industrialization of AI.

Anyscale’s commitment to open source and the freedom of shared technology is evident: The Anyscale team leads the Ray open source community by managing operations, reviewing contributions, and growing and developing the community. Additionally, they are the biggest contributor to the Ray open source software (OSS) project and recently released Ray 2.1.

Building mature, reliable, and easily scalable ML models

With 90% of new applications expected to be cloud-native by 2025, Anyscale envisions the cloud as the default substrate on which the next generation of applications will be developed. They expect the next generation of cloud infrastructure to consist of optimized frameworks that ease the development and management of applications.

Anyscale uses AWS’ mature suite of products as components for rapid development of their platform. Anyscale and Ray are full-featured on AWS, with highly efficient performance and scaling. AWS’ scale is a tremendous asset to Anyscale and Ray, and because some of Anyscale’s largest customers have a long history of using AWS, working with those customers is easier.

“Inference is 90% of any ML project. Machine learning teams need novel ways of working with distributed computing to deliver the next generation of ML at scale,” explains Rob. “The golden era of machine learning is taking place and AWS and Anyscale help companies to build ML models the way they’re supposed to be: mature, reliable, and easily scalable.”

Amazon.com and AWS have worked with the Ray community to integrate Ray with many AWS services, including:

- AWS Identity and Access Management (IAM) for fine-grained access control

- AWS Certificate Manager (ACM) for SSL/TLS in-transit encryption

- AWS Key Management Service (AWS KMS) for at-rest encryption

- Amazon Simple Storage Service (Amazon S3) for object storage

- Amazon Elastic File System (Amazon EFS) for distributed-file access

- Amazon CloudWatch for observability

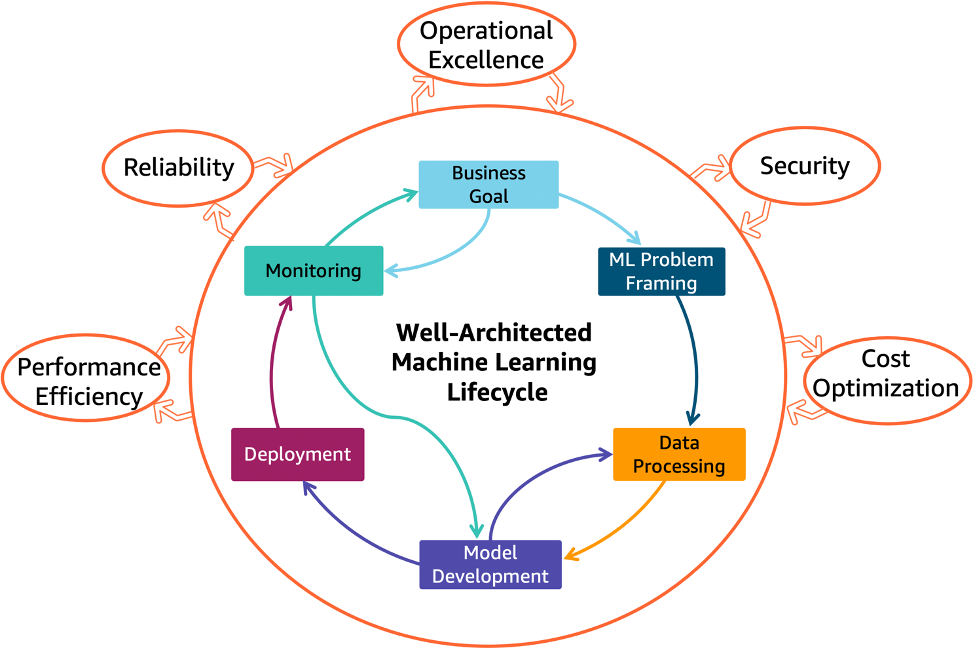

Solutions that fit within the AWS Well-Architected Machine Learning Lifecycle, such as the Anyscale Platform for the deployment stage, can help companies spend more time on product development rather than on resource provisioning and management.

The AWS Well-Architected Framework, which provides operational and architectural best practices for designing and operating workloads in the cloud, includes pillars for operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability. The Anyscale Platform primarily helps developers with the performance efficiency pillar.

“Anyscale simplifies a typical Python ML code and helps it fit within the framework within as little as two lines of code,” explains Rob. “AWS is an incredible home for Anyscale with its industry-leading tools for production systems as well as broadest and deepest compute options.”

Rob Ferguson

Rob is the Global Head of Artificial Intelligence and Machine Learning for Startups and Venture Capital at AWS, based in San Francisco. Prior to AWS, he worked as a CTO/VPE of two Y Combinator startups that raised over $150M, with expertise in AI/ML as well as consumer electronics and media. As CTO of Automatic Labs, Rob earned one of Y Combinator’s 10 biggest exits as well as a 4.5+ star product on Amazon.

Ion Stoica

Stoica has been a Berkeley professor since 2000. His research includes cloud computing, networking, distributed systems, and big data. He has authored or co-authored more than 100 peer-reviewed papers in various areas of computer science. In 2006, Stoica co-founded Conviva, a company that came out of the End System Multicast project at CMU, and served as its Chief Technology Officer (CTO). Stoica then co-invented Apache Spark in the Berkeley AMPLab. In 2013, Stoica co-founded Databricks, based on Spark, and served as its chief executive officer until 2016, when he became executive chairman. Stoica then co-invented Ray in the Berkeley RISELab. Ray is now the fastest-growing unified compute framework to scale AI/ML workloads and applications. In 2019, Stoica co-founded Anyscale, the company that offers the Anyscale Platform, a fully managed Ray platform. Ion Stoica is currently Executive Chairman of Anyscale in addition to Executive Chairman of Databricks.

Megan Crowley

Megan Crowley is a Senior Technical Writer on the Startup Content Team at AWS. With an earlier career as a high school English teacher, she is driven by a relentless enthusiasm for contributing to content that is equal parts educational and inspirational. Sharing startups’ stories with the world is the most rewarding part of her role at AWS. In her spare time, Megan can be found woodworking, in the garden, and at antique markets.
