We could develop our services on AWS with a lot of peace of mind, not worrying about flexibility, reliability, or availability of AWS services.

Uri Cohen

Vice President of Product Management, Platform Engineering, Elastic

About Elastic

In 2012, Dutch-American company Elastic was formed around the open-source project Elasticsearch. Following expansion, the software business now offers a Search AI platform along with self-managed and SaaS solutions for search, logging, security, observability, and analytics.

Opportunity | Using AWS to Build Hassle-Free Search and AI Infrastructure

Founded in 2012, Elastic is well known for its distributed search and analytics engine, Elasticsearch. In 2015, the company added a cloud offering, Elastic Cloud, alongside its on-premises offering. Elastic Cloud was initially built on top of AWS but was later made available on all public clouds.

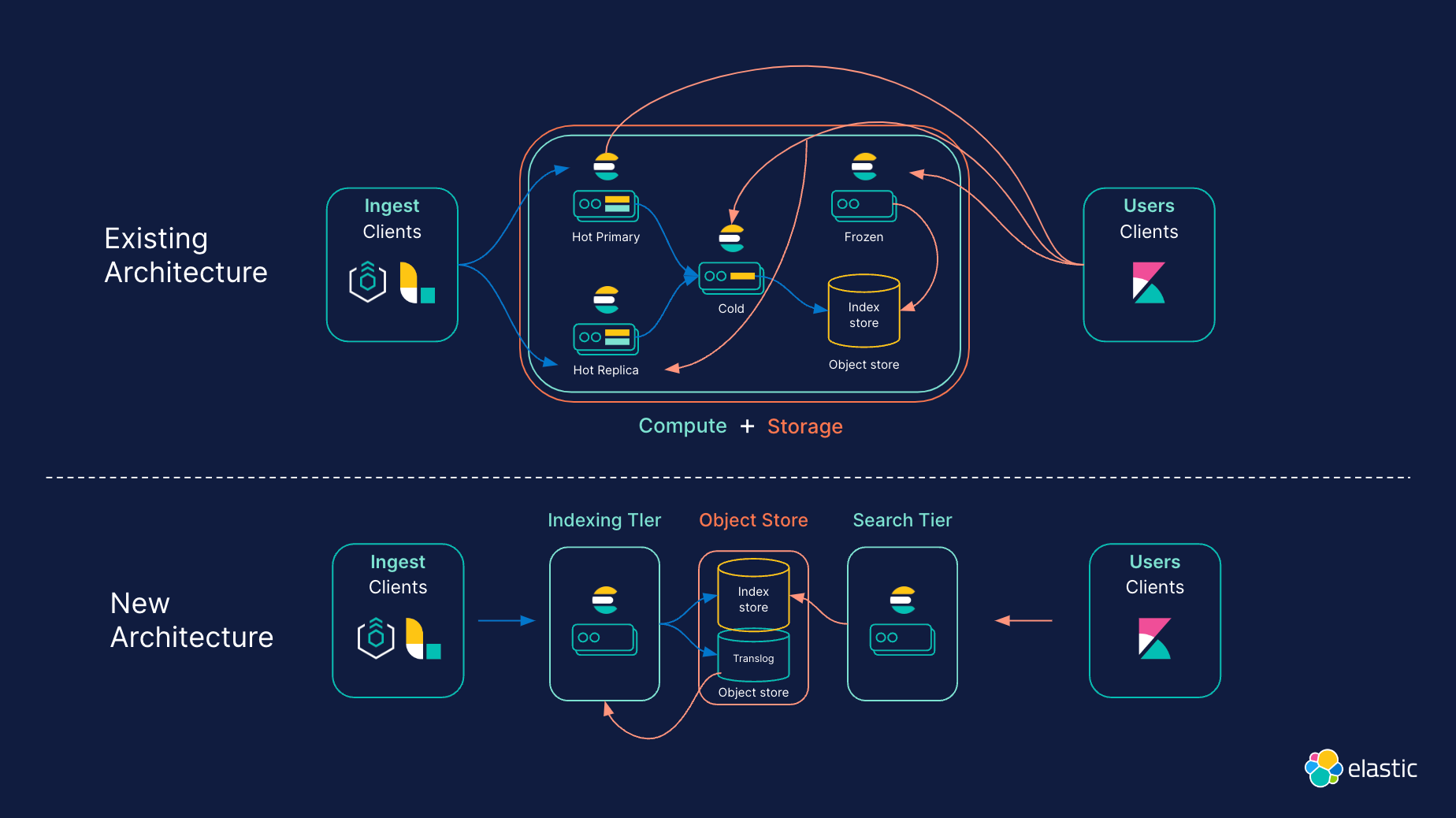

As Elastic expanded into generative AI solutions, it developed Search AI Lake—a cloud-optimized architecture that offers vast storage and search capabilities through Elasticsearch for low-latency querying of large volumes of data.

In 2022, the company began developing Elastic Cloud Serverless on Search AI Lake, which takes away the administrative burden of managing capacity, sizing, and upgrades, making it simpler for customers to use the solutions’ features for generative AI and other applications. Elastic used AWS to build this serverless offering. “AWS is the most-used cloud environment in the world, and a large portion of our customers are on AWS,” says Uri Cohen, vice president of product management for platform engineering at Elastic.

Solution | Developing Elastic Cloud Serverless in 1 Year from Concept to Solution

Elastic uses a stateless architecture to attain the manageability, scalability, and efficiency of Elastic Cloud Serverless. The team architected the solution to make sure that customers did not run into operational challenges and that the system would right-size itself to customers’ workloads, using services like Amazon Elastic Compute Cloud (Amazon EC2), which provides secure and resizable compute capacity for virtually any workload. For even more optimization, Elastic uses AWS Graviton processors, a family of processors that are designed to deliver the best price-performance for cloud workloads running on Amazon EC2, with locally attached NVMe solid-state drives. “The performance we get from those NVMe drives for the price we’re paying for them in our existing cloud offering is unparalleled, so it was only natural for us to use AWS Graviton instances for our serverless offering as well,” says Cohen. Elastic can store hundreds of petabytes of data on the drives while managing tens of thousands of compute instances daily.

To help customers store massive amounts of data at very low costs, Elastic Cloud Serverless uses Amazon Simple Storage Service (Amazon S3)—an object storage service—as the system of record for customer data. The company uses Amazon S3 to store and retrieve large amounts of data with high availability and durability. “With our new Search AI Lake architecture, we’re giving customers the ability to store massive amounts of data, like a data lake, but one that is interactively searchable,” says Cohen. “The new architecture supports all the amazing stuff you can do with Elasticsearch by searching vast amounts of data and getting responses immediately.”

Additionally, Elastic uses Amazon Elastic Kubernetes Service (Amazon EKS)—the most trusted way to start, run, and scale Kubernetes—as the substrate for all of its services. Elastic also uses its own observability and security solutions to monitor and secure the serverless offering.

In October 2023, Elastic released a private preview of Elastic Cloud Serverless. In April 2024, the offering was released for public preview in four AWS Regions, and over 1,000 customers tested it and provided feedback. During the preview period, SAP Concur highlighted the solution's simplicity of use and impressive autoscaling capabilities. Two Six Technologies also praised the simple setup process, noting that it could provision new projects without technical expertise; the company experienced near-zero latency when ingesting and querying data.

Elastic found that 20 percent of its workloads in the current cloud offering were in one AWS Region. To make sure that the new serverless solution would be sufficiently scalable, it planned its operational capacity across AWS Regions accordingly. It also compartmentalized workloads in each AWS Region into multiple Kubernetes clusters, which could be managed independently, with more Kubernetes clusters being added for scaling. “If one Kubernetes cluster gets broken, the impact is constrained only to the workloads on that cluster,” says Cohen. “It’s a few hundred customer workloads compared to thousands of workloads when the whole region is operated as one unit.” That helped Elastic minimize the impact of operational issues and simplify scaling.
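The blast-radius reasoning behind this compartmentalization can be illustrated with a small sketch. Everything in it is hypothetical: the per-cluster cap of 300 workloads, the 3,000 workloads in the Region, and the names `Cluster`, `place_workloads`, and `blast_radius` are assumptions chosen to match the "few hundred versus thousands" framing in the quote, not Elastic's actual placement logic.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """A hypothetical Kubernetes cluster with a fixed workload cap."""
    name: str
    capacity: int
    workloads: list = field(default_factory=list)


def place_workloads(workload_ids, cluster_capacity):
    """Fill clusters in order, adding a new cluster when the current one is full."""
    clusters = []
    for workload_id in workload_ids:
        if not clusters or len(clusters[-1].workloads) >= cluster_capacity:
            clusters.append(
                Cluster(name=f"cluster-{len(clusters)}", capacity=cluster_capacity)
            )
        clusters[-1].workloads.append(workload_id)
    return clusters


def blast_radius(clusters, failed_cluster_name):
    """Return (affected, total) workload counts if one cluster fails."""
    total = sum(len(c.workloads) for c in clusters)
    affected = sum(
        len(c.workloads) for c in clusters if c.name == failed_cluster_name
    )
    return affected, total


# Assumed numbers: 3,000 workloads in one Region, at most 300 per cluster.
workloads = [f"project-{i}" for i in range(3000)]
clusters = place_workloads(workloads, cluster_capacity=300)
affected, total = blast_radius(clusters, "cluster-0")
print(f"{len(clusters)} clusters; one failure affects {affected} of {total} workloads")
```

Under these assumed numbers, scaling means appending another cluster once the last one is full, and a single cluster failure touches one tenth of the Region's workloads rather than all of them.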

It took 1 year to complete the project, which is one of the largest projects Elastic has undertaken since its inception. “We re-architected everything—the cloud platform, the core Elasticsearch product, the operational and pricing models, and the services around Elasticsearch,” says Cohen. “The fact that we went live in 1 year at such scale is pretty mind-blowing to me.”

By using AWS, Elastic could focus on building its offering without worrying about the underlying infrastructure. “We can innovate and generate business value without operational distractions, and given the maturity and vast capacity, we rarely encounter capacity or reliability issues,” says Cohen. “We could develop our services on AWS with a lot of peace of mind, not worrying about flexibility, reliability, or availability of AWS services.”

Outcome | Preparing for General Availability Across Numerous AWS Regions and Multiple Cloud Providers

In December 2024, Elastic Cloud Serverless was released for general availability on AWS. Elastic collaborated closely with the AWS team to plan availability and capacity for deployment in many more AWS Regions.

Elastic’s solutions, including the new serverless offering, are available on AWS Marketplace. Elastic is committed to continuing to use AWS Marketplace for its offerings, ensuring its customers have a reliable platform to discover, deploy, and manage software that runs on AWS. “We experience strong growth annually in the opportunities we bring to and launch alongside AWS,” says Alyssa Fitzpatrick, global vice president for partner sales at Elastic.

Elastic is looking forward to customers using its serverless offering. “Users, especially if they operate in search, observability, security, or building generative AI applications—as well as monitoring services and infrastructure—will find that with serverless, it is all so much easier,” says Cohen.

Elastic Cloud serverless architecture