Guidance for Building a Sustainability Data Fabric with Snowflake on AWS

How it works

Overview

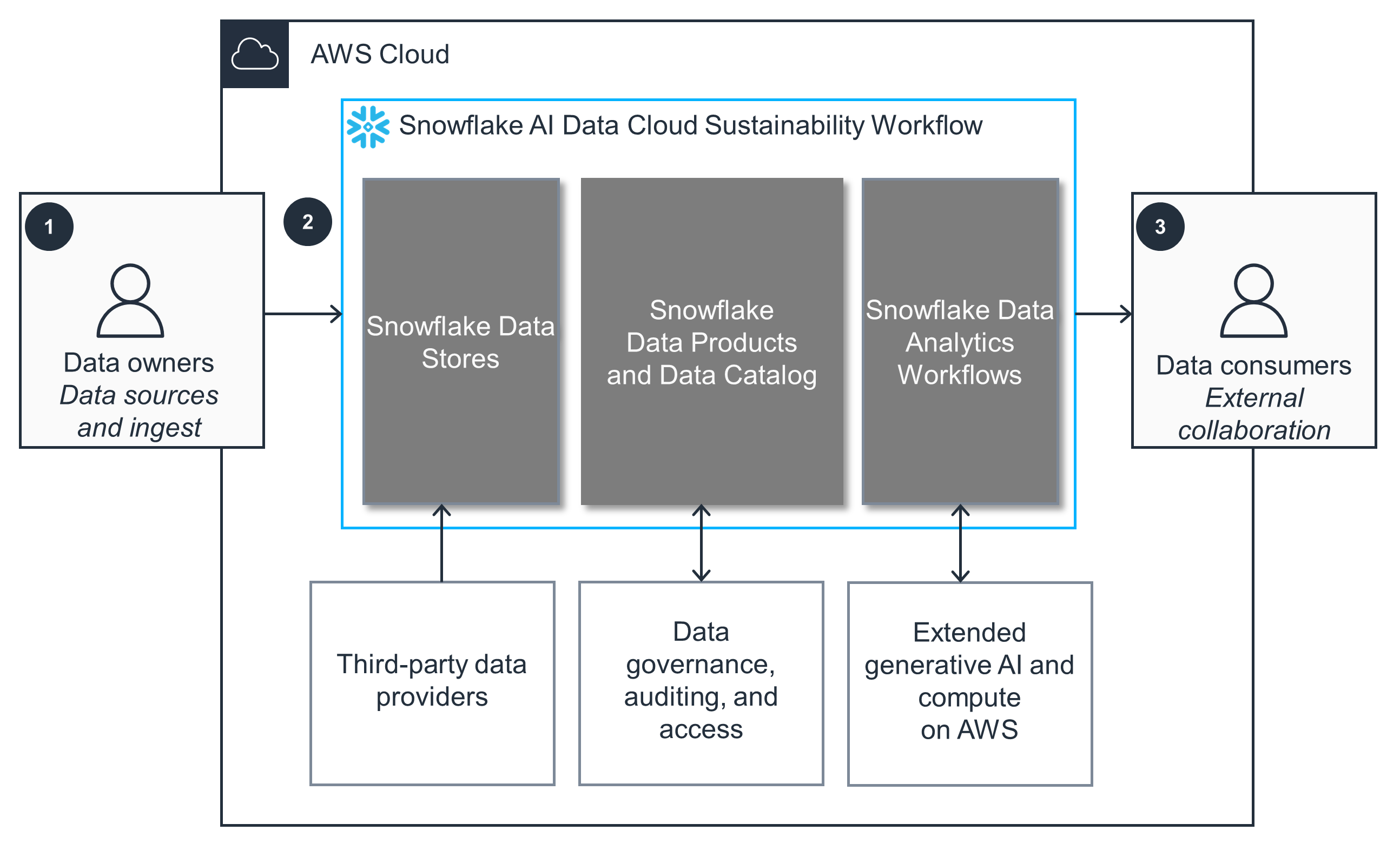

This architecture diagram illustrates how to use Snowflake to integrate, process, and analyze sustainability data on a unified platform that spans carbon accounting, energy optimization, and supply chain transparency. The sections that follow depict the data ingestion and data management processes.

Data sources and ingestion

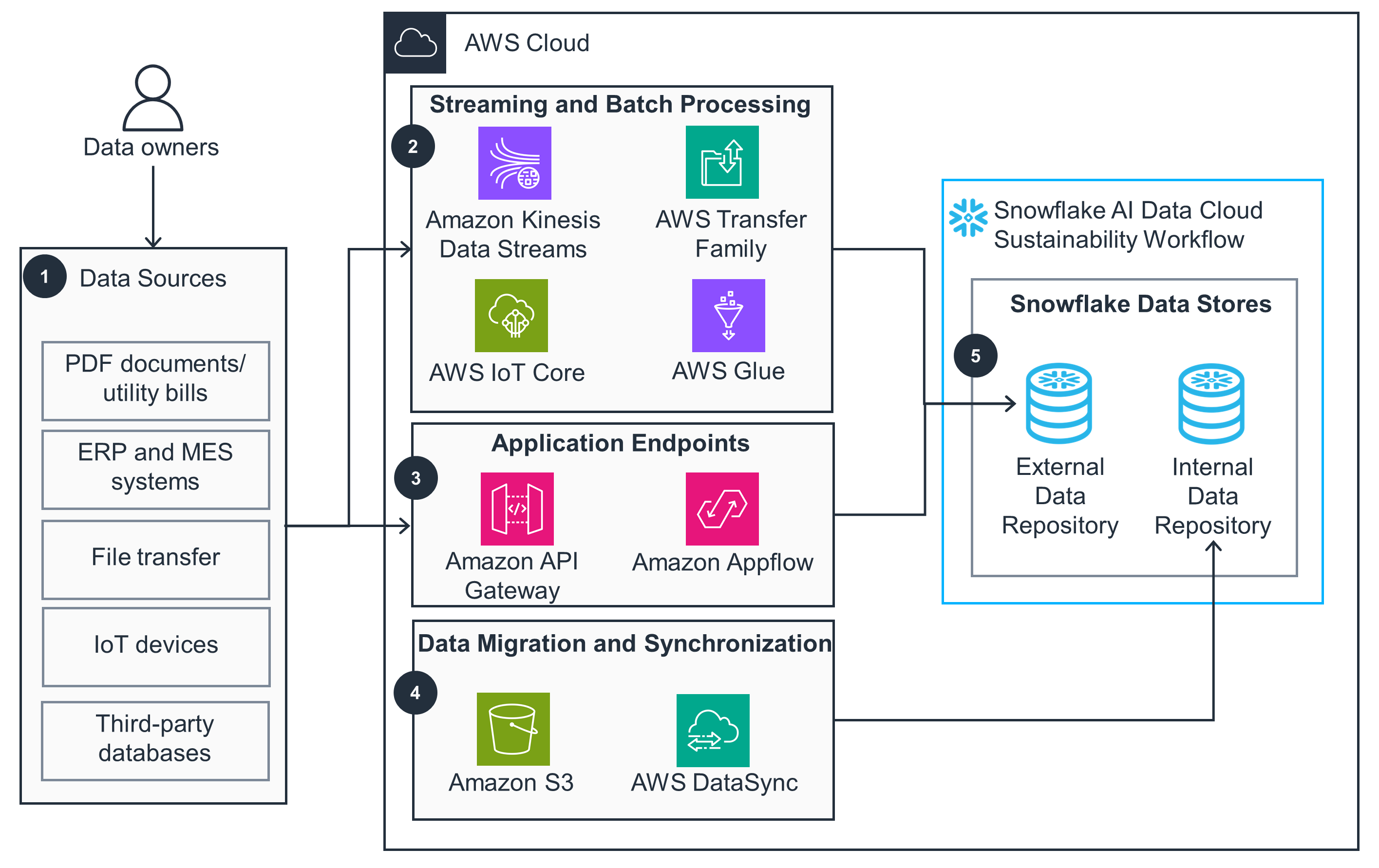

This architecture diagram illustrates the ingestion, preparation, and storage of unstructured sustainability data using AWS services.

Snowflake AI Data Cloud sustainability workflow

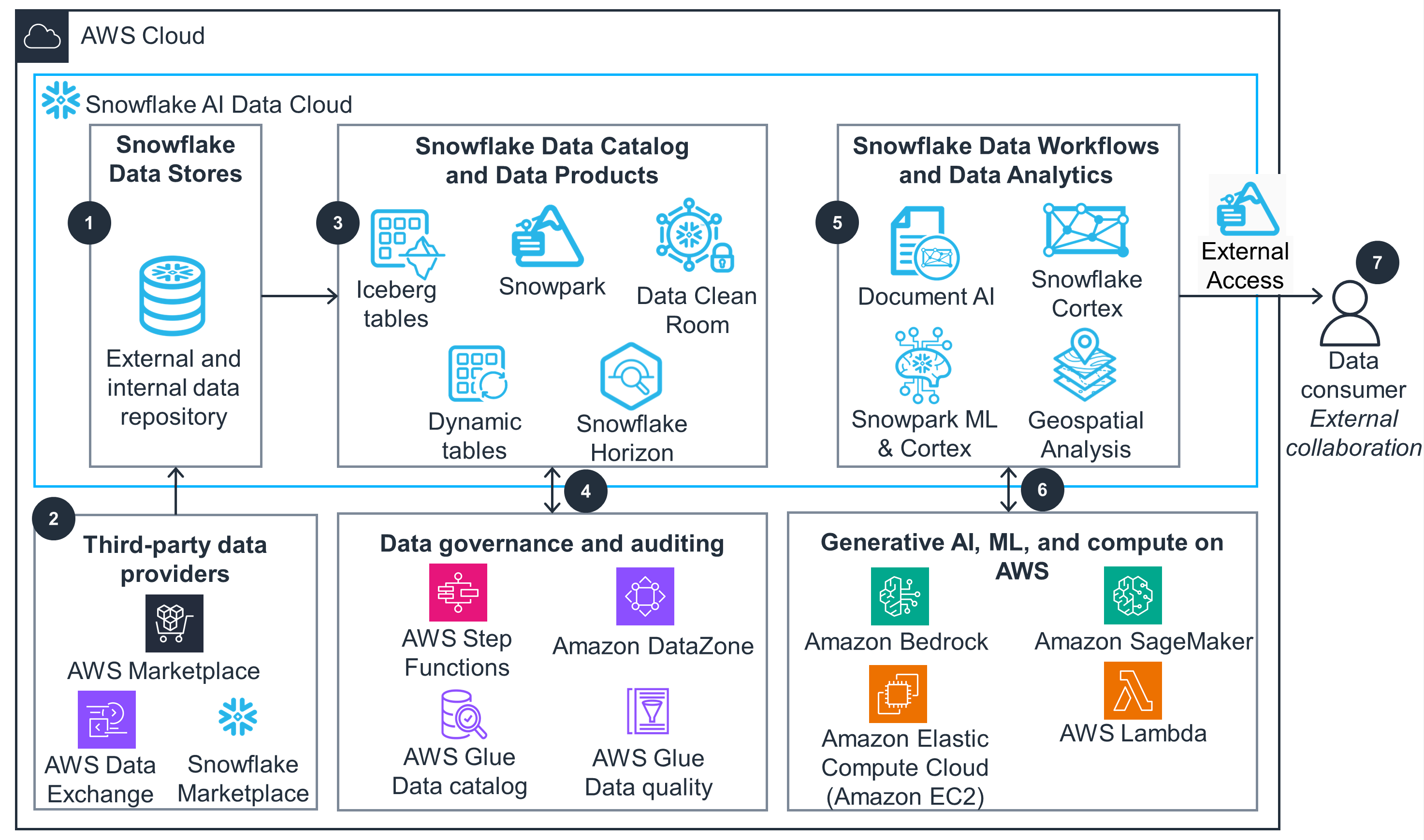

This architecture diagram shows how you can manage sustainability workloads across your organization by applying data governance best practices, integrating workflows with third-party data, and using compute instances to extract additional insights.

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

Amazon Bedrock and AWS Lambda provide generative AI and compute capabilities without requiring you to upgrade or patch virtual machine images or operating system versions. Amazon Elastic Compute Cloud (Amazon EC2), with a selection of over 750 instance types, helps you match processor, storage, networking, and operating system options to each workload. This range helps you achieve strong price performance across diverse computing workloads, including high-performance computing (HPC), ML, and Windows-based applications.

AWS Identity and Access Management (IAM) integrates with all AWS services, including Lambda and Amazon EC2. This lets application code running in Lambda authenticate with other services, such as Amazon Bedrock and Amazon API Gateway, without storing long-lived credentials. IAM identity-based policies also define and manage access permissions, determining whether a user can create, access, or delete Amazon Kendra resources. For instance, an IAM policy can deny a user within your AWS account access to query a specific Amazon Kendra index.
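The deny example above can be sketched as an identity-based policy document. This is a minimal illustration, not the policy this Guidance deploys: the function name `deny_kendra_query_policy`, the Region, the account ID, and the index ID are all placeholders chosen for the example.

```python
import json


def deny_kendra_query_policy(region: str, account_id: str, index_id: str) -> dict:
    """Build an identity-based policy that denies querying one Amazon Kendra index.

    All three arguments are supplied by the caller; nothing here is taken
    from the Guidance itself.
    """
    index_arn = f"arn:aws:kendra:{region}:{account_id}:index/{index_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyQueryOnIndex",
                "Effect": "Deny",          # an explicit deny overrides any allow
                "Action": "kendra:Query",  # only the query action is blocked
                "Resource": index_arn,     # only this one index is affected
            }
        ],
    }


# Placeholder values for illustration only.
policy = deny_kendra_query_policy("us-east-1", "111122223333", "example-index-id")
print(json.dumps(policy, indent=2))
```

The resulting JSON could be attached to a user, group, or role. Because the statement is an explicit deny scoped to a single index ARN, other Amazon Kendra permissions granted elsewhere would remain unaffected.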

This Guidance uses fully managed serverless offerings like Amazon Bedrock, Lambda, and Amazon S3, which run across multiple Availability Zones (AZs) by default. These services do not involve long-running compute or database resources that require maintenance, leading to fewer points of failure within the overall architecture. Amazon EC2 is the exception: an instance runs in a single AZ, so any EC2-based compute needs to be placed in more than one AZ to achieve comparable resilience.

Amazon Bedrock is a fully managed generative AI service that offers a choice of foundation models (FMs) through a single API. You can quickly experiment with a variety of FMs and use a single API for inference regardless of the models you choose, giving you the flexibility to use FMs from different providers and keep current with the latest model versions with minimal code changes.
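The single-API idea can be sketched with the Amazon Bedrock Converse operation, where only the `modelId` value changes between models. This is an assumption-laden sketch: the helper names `ask_model` and `compare_models` are hypothetical, the `client` argument is assumed to be a boto3 `bedrock-runtime` client created by the caller, and the model IDs are whatever your account has access to.

```python
from typing import Any, Iterable


def ask_model(client: Any, model_id: str, prompt: str, max_tokens: int = 512) -> str:
    """Send one user prompt to a foundation model and return the text of its reply.

    `client` is assumed to expose the Bedrock Runtime `converse` operation.
    """
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    # The Converse response nests the reply under output -> message -> content.
    return response["output"]["message"]["content"][0]["text"]


def compare_models(client: Any, model_ids: Iterable[str], prompt: str) -> dict[str, str]:
    """Run the same prompt against several models; only the model ID differs."""
    return {model_id: ask_model(client, model_id, prompt) for model_id in model_ids}
```

With boto3 installed and credentials configured, a caller could pass `boto3.client("bedrock-runtime")` as `client`. Swapping or upgrading a model then amounts to changing the strings in `model_ids`, which is the "minimal code changes" property described above.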

Additionally, Amazon AppFlow is a fully managed integration service that helps you securely transfer data between SaaS applications, such as Salesforce, SAP, Google Analytics, and various AWS services. Amazon AppFlow is used here as an entry point to connect to various Snowflake services.

Lambda and Amazon Bedrock automatically scale and allocate resources based on demand. These fully managed services also reduce the operational burden on DevOps teams, lowering the costs associated with infrastructure management and maintenance. Additionally, Lambda and Amazon Bedrock offer a pay-as-you-go pricing model, so you’re charged only when these services are actively processing requests. For example, Amazon Bedrock offers on-demand and batch modes that let you use FMs on a pay-as-you-go basis without making any time-based term commitments.
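To make the pay-as-you-go model concrete, the following sketch estimates a Lambda bill from its two usage-based components: a per-request charge and a duration charge measured in GB-seconds. The function name `estimate_lambda_cost` is hypothetical, and the rates in the example call are placeholders rather than published prices; actual rates vary by Region and architecture and should be taken from the current pricing page.

```python
def estimate_lambda_cost(
    requests: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Estimate a usage-based Lambda charge (free tier and other fees ignored).

    Both price arguments must be supplied by the caller; no real prices
    are embedded in this sketch.
    """
    if requests < 0 or avg_duration_ms < 0 or memory_mb <= 0:
        raise ValueError("usage figures must be non-negative and memory positive")

    # Duration is billed on allocated memory (in GB) multiplied by run time (in seconds).
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)

    request_charge = (requests / 1_000_000) * price_per_million_requests
    duration_charge = gb_seconds * price_per_gb_second
    return request_charge + duration_charge


# Placeholder rates for illustration only; they are NOT actual AWS prices.
monthly = estimate_lambda_cost(
    requests=1_000_000,
    avg_duration_ms=200,
    memory_mb=512,
    price_per_million_requests=0.20,
    price_per_gb_second=0.00001,
)
print(f"Estimated charge: {monthly:.2f}")
```

Because every term is multiplied by `requests`, an idle month produces a charge of zero, which is the property the paragraph above describes.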

Amazon S3 is also used here to provide you with the flexibility to choose storage tiers based on your workload and data retention requirements.
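One way to act on storage tiers is an S3 Lifecycle configuration that moves objects to colder storage classes as they age. The sketch below only builds the configuration document; the function name `tiering_lifecycle_config`, the `raw/` prefix, and the day thresholds are assumptions chosen for illustration, not values taken from this Guidance.

```python
def tiering_lifecycle_config(prefix: str, ia_after_days: int, archive_after_days: int) -> dict:
    """Build an S3 Lifecycle configuration with two storage-class transitions.

    Objects under `prefix` move to S3 Standard-IA first and to
    S3 Glacier Flexible Retrieval later.
    """
    if not 0 < ia_after_days < archive_after_days:
        raise ValueError("expected 0 < ia_after_days < archive_after_days")

    return {
        "Rules": [
            {
                "ID": "tier-down-aging-objects",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": archive_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


# Hypothetical prefix and thresholds for illustration only.
config = tiering_lifecycle_config(prefix="raw/", ia_after_days=30, archive_after_days=365)
print(config)
```

With boto3, a dictionary of this shape could be passed as the `LifecycleConfiguration` argument of the S3 client's `put_bucket_lifecycle_configuration` call. The thresholds should follow your own data retention requirements.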

Lambda, Amazon Bedrock, and Amazon Kinesis enable more efficient operations by shifting the responsibility of maintaining your hardware infrastructure to AWS. Learn more about Amazon and AWS Sustainability efforts.
