AWS News Blog

AWS DeepLens – Get Hands-On Experience with Deep Learning With Our New Video Camera

As I have mentioned a time or two in the past, I am a strong believer in life-long learning. Technological change is coming along faster than ever and you need to do the same in order to keep your skills current.

For most of my career, artificial intelligence has been an academic topic, with practical applications and real-world deployment “just around the corner.” I think it is safe to say, with the number of practical applications for machine learning, including computer vision and deep learning, that we’ve turned the corner and that now is the time to start getting some hands-on experience and polishing your skills! Also, while both are more recent and spent far less time gestating, it is safe to say that IoT and serverless computing are here to stay and should be on your list.

New AWS DeepLens

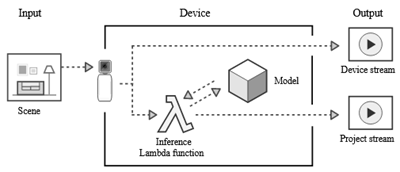

Today I would like to tell you about AWS DeepLens, a new video camera that runs deep learning models directly on the device, out in the field. You can use it to build cool apps while getting hands-on experience with AI, IoT, and serverless computing. AWS DeepLens combines leading-edge hardware and sophisticated on-board software, and lets you make use of AWS IoT Greengrass, AWS Lambda, and other AWS AI and infrastructure services in your app.

Let’s start with the hardware. We have packed a lot of power into this device. There’s a 4 megapixel camera that can capture 1080p video, accompanied by a 2D microphone array. An Intel Atom® Processor provides over 100 GFLOPS of compute power, enough to run tens of frames of incoming video through on-board deep learning models every second. DeepLens is well-connected, with dual-band Wi-Fi, USB, and micro HDMI ports. Rounding it all out, 8 gigabytes of memory for your pre-trained models and your code make this a powerful yet compact device.
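To put the compute figure in perspective, here is a back-of-the-envelope sketch of how much work is available per frame. The 100 GFLOPS number comes from the spec above; the frame rates are illustrative assumptions, the function name is hypothetical, and real-world throughput will be lower than this peak-rate arithmetic suggests.

```python
# Rough per-frame compute budget, assuming the device sustains its peak rate.
# 100 GFLOPS is the figure quoted above; the frame rates are assumptions.


def gflop_budget_per_frame(device_gflops: float, frames_per_second: float) -> float:
    """Return the GFLOPs of work available for each frame at a given frame rate."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return device_gflops / frames_per_second


if __name__ == "__main__":
    for fps in (10, 20, 30):
        budget = gflop_budget_per_frame(100.0, fps)
        print(f"{fps} fps -> {budget:.1f} GFLOP available per frame")
```

A model that needs more work per frame than this budget allows would have to run at a lower frame rate or be made smaller.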

On the software side, the AWS DeepLens runs Ubuntu 16.04 and is preloaded with the Greengrass Core (Lambda runtime, message manager, and more). There’s also a device-optimized version of MXNet, and the flexibility to use other frameworks such as TensorFlow and Caffe2. The Intel® clDNN library provides a set of deep learning primitives for computer vision and other AI workloads, taking advantage of special features of the Intel Atom® Processor for accelerated inferencing.

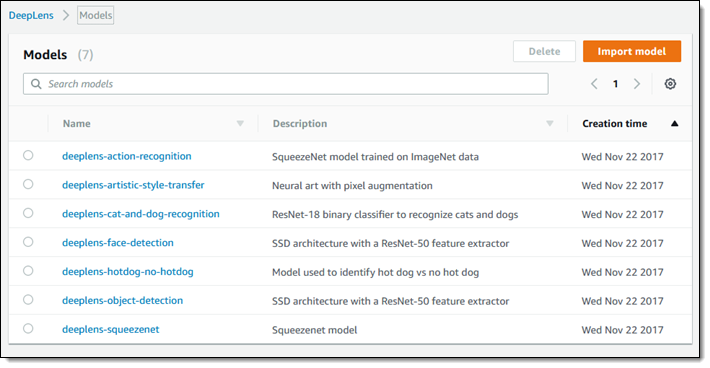

We also give you data! When you build an app that runs on your AWS DeepLens, you can take advantage of a set of pre-trained models for image detection and recognition. These models will help you detect cats and dogs, faces, a wide array of household and everyday objects, motions and actions, and even hot dogs. We will continue to train these models, making them better and better over time. Here’s the initial set of models:

All of this hardware, software, and data come together to make the AWS DeepLens a prime example of an edge device. With eyes, ears, and a fairly powerful brain that are all located out in the field and close to the action, it can run incoming video and audio through on-board deep learning models quickly and with low latency, making use of the cloud for more compute-intensive higher-level processing. For example, you can do face detection on the DeepLens and then let Amazon Rekognition take care of the face recognition.
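The edge/cloud split described above can be sketched roughly as follows. This is a minimal illustration, not code from the DeepLens samples: the `FaceDetection` class, the `recognize_detected_faces` function, and the 0.8 threshold are all hypothetical, and the cloud step is passed in as a callable so the sketch runs without AWS credentials.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class FaceDetection:
    """Hypothetical result of on-device face detection."""
    face_bytes: bytes   # cropped face image, encoded as bytes
    confidence: float   # local model's confidence that this is a face


def recognize_detected_faces(
    detections: List[FaceDetection],
    recognize_in_cloud: Callable[[bytes], str],
    min_confidence: float = 0.8,  # assumed threshold, tune for your model
) -> List[str]:
    """Send only confident on-device detections to a cloud recognizer.

    In a real setup, recognize_in_cloud might wrap a call to Amazon
    Rekognition; here it is injected so the logic can be tested locally.
    """
    names = []
    for detection in detections:
        # Cheap filter on the device: weak detections never leave the camera.
        if detection.confidence < min_confidence:
            continue
        # Expensive step in the cloud: work out whose face this is.
        names.append(recognize_in_cloud(detection.face_bytes))
    return names


if __name__ == "__main__":
    def fake_recognizer(face_bytes: bytes) -> str:
        # Stand-in for the cloud call; returns a made-up identifier.
        return "face-with-{}-bytes".format(len(face_bytes))

    sample = [FaceDetection(b"abc", 0.95), FaceDetection(b"de", 0.40)]
    print(recognize_detected_faces(sample, fake_recognizer))
```

Filtering on the device keeps the number of cloud calls small, which is the point of doing detection at the edge and recognition in the cloud.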

This is an epic learning opportunity in a box! We’ve also included tons of sample code (Lambda functions) that you can use as-is, pick apart and study, and use as the basis for your own functions. Once you have built something cool and useful, you can deploy it in production form. We’ve made sure that AWS DeepLens is robust and secure, with unique certificates for each device and fine-grained, IAM-powered control over access to AWS services and resources.

Registering a Device

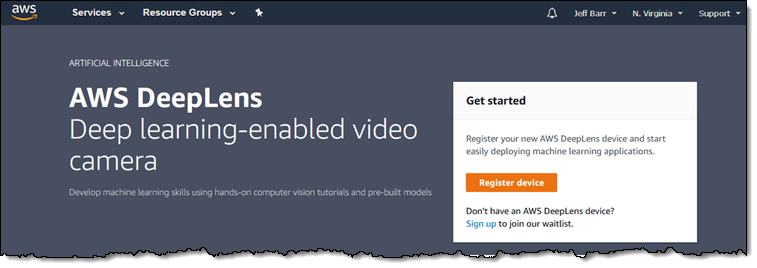

Let’s walk through the process of registering a device and getting it ready for use, starting from the DeepLens Console. I open it up and click on Register device:

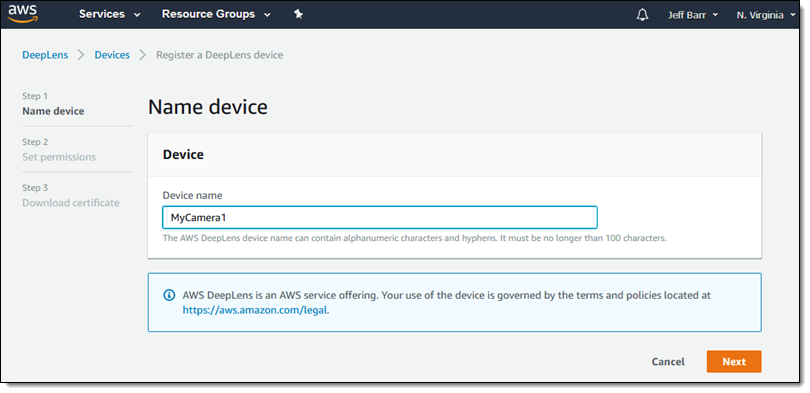

Then I give my camera a name and click on Next:

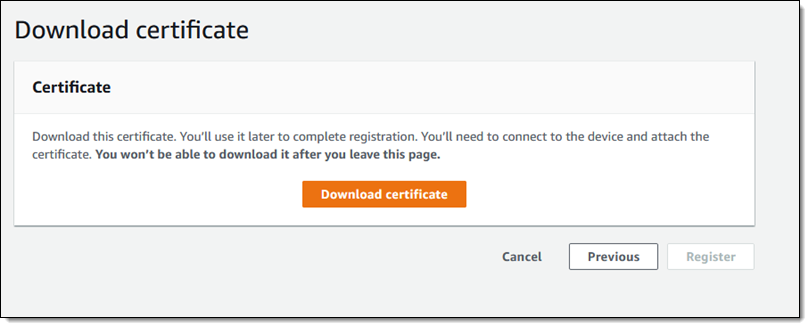

Then I click on Download certificate and save it away in a safe place:

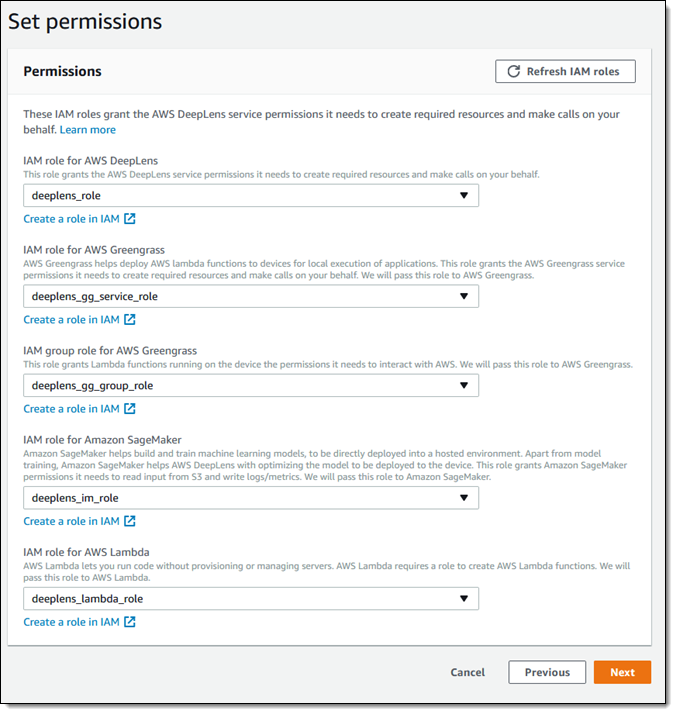

Next, I create the necessary IAM roles (the console makes it easy) and select each one in the appropriate menu:

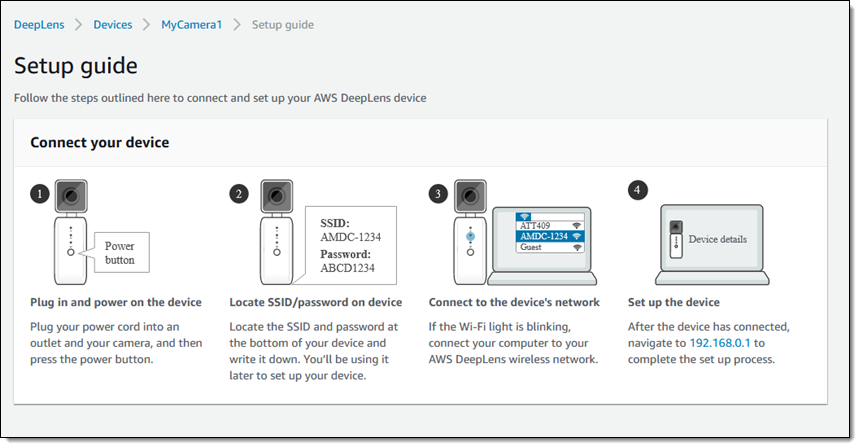

Now I am ready to go hands-on with my DeepLens! I power up, connect my laptop to the device’s network, and access the built-in portal to complete the process. The console outlines the steps:

At this point my DeepLens is a fully-functional edge device. The certificate on the device allows it to make secure, signed calls to AWS. The Greengrass Core is running, ready to accept and run a Lambda function.

Creating a DeepLens Project

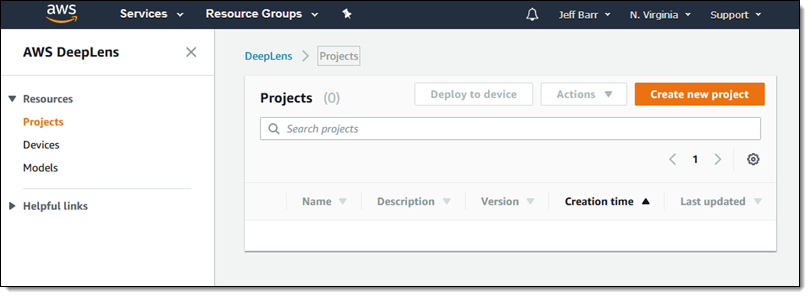

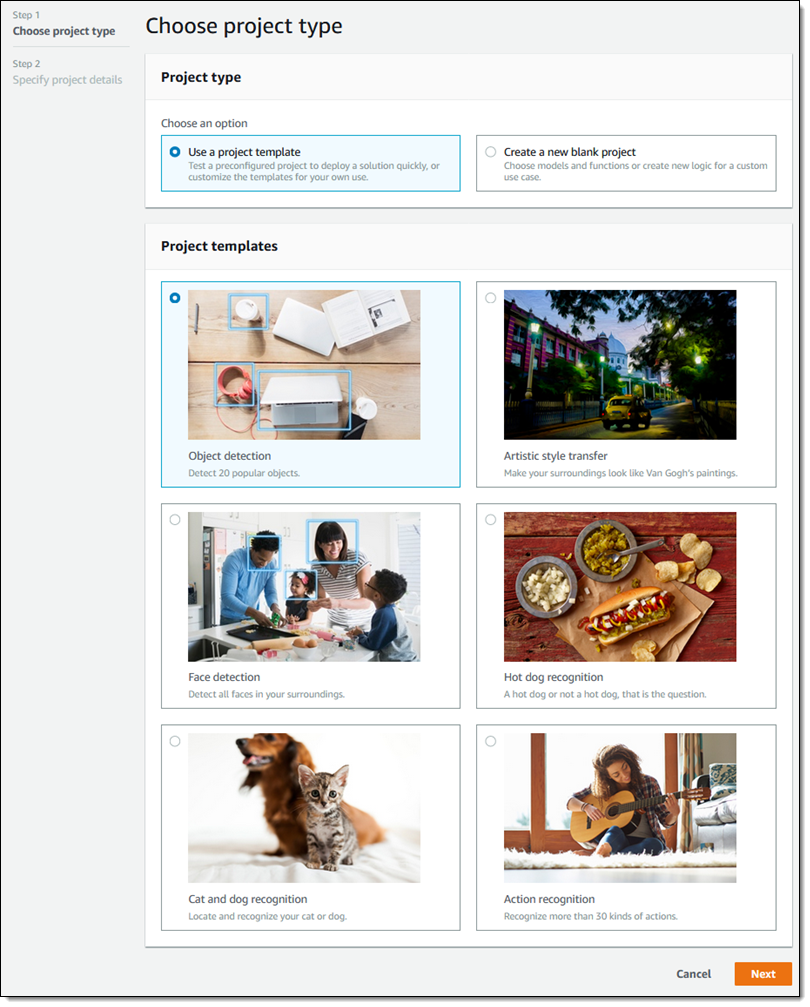

With everything connected and set up, I can create my first project. I navigate to Projects and click on Create new project:

Then I choose a project template or start with a blank project. I’ll go for cat and dog recognition, selecting it and clicking on Next:

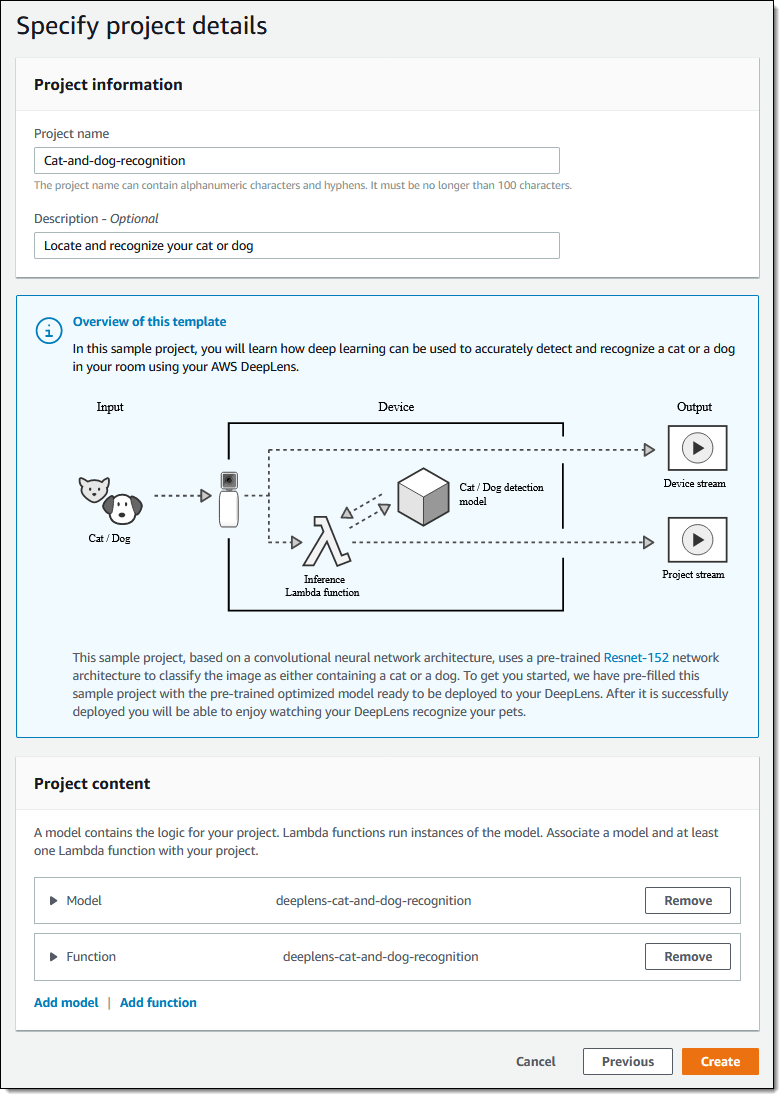

The console gives me the opportunity to name and customize the project. As you can see, the project refers to a Lambda function and one of the pre-trained models that I listed above. The defaults are all good, so I simply click on Create:

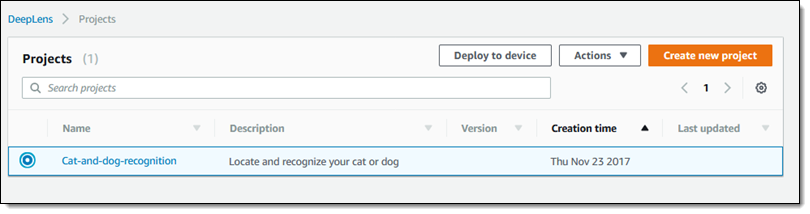

Now I simply deploy the project to my camera:

Training Cats and Dogs

The functions run on the camera and publish their output to an MQTT topic. Here’s an excerpt from the inner loop of the cat and dog recognition function (I removed some error handling):

    while doInfer:
        # Get a frame from the video stream
        ret, frame = awscam.getLastFrame()
        numFrames += 1

        # Resize frame to fit model input requirement
        frameResize = cv2.resize(frame, (224, 224))

        # Run model inference on the resized frame
        inferOutput = model.doInference(frameResize)

        # Publish a message to the cloud for every 10 frames
        if numFrames >= 10:
            msg = "Infinite inference is running. Sample result of the last frame is\n"

            # Output inference result of the last frame to cloud
            # The awscam module can parse the output from some known models
            outputProcessed = model.parseResult(modelType, inferOutput)

            # Get the top 2 results with the highest probabilities
            topResults = outputProcessed[modelType][0:2]
            msg += "label prob"
            for obj in topResults:
                msg += "\n{} {}".format(outMap[obj["label"]], obj["prob"])

            client.publish(topic=iotTopic, payload=msg)
            numFrames = 0

Like I said, you can modify this or you can start from scratch. Either way, as you can see, it is pretty easy to get started.
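As one example of a modification, the message-building step from the excerpt can be pulled into a small function that is easy to test off the device, paired with a parser for whatever subscribes to the MQTT topic. This is a sketch under assumptions: `build_message` and `parse_message` are hypothetical names, the results are assumed to be a list of dicts with `label` and `prob` keys (as the excerpt implies), and `out_map` stands in for the excerpt’s `outMap` with made-up contents.

```python
from typing import Dict, List, Tuple

HEADER = "Infinite inference is running. Sample result of the last frame is\n"


def build_message(results: List[Dict], out_map: Dict[int, str], top_n: int = 2) -> str:
    """Format the top_n most probable results in the same layout as the excerpt."""
    # Sort explicitly instead of assuming the parsed output is already ordered.
    ranked = sorted(results, key=lambda r: r["prob"], reverse=True)[:top_n]
    msg = HEADER + "label prob"
    for obj in ranked:
        msg += "\n{} {}".format(out_map[obj["label"]], obj["prob"])
    return msg


def parse_message(payload: str) -> List[Tuple[str, float]]:
    """Recover (label, probability) pairs from a message made by build_message."""
    # Skip the banner line and the "label prob" column header.
    rows = payload.splitlines()[2:]
    pairs = []
    for row in rows:
        # Split on the last space so labels containing spaces survive.
        label, prob = row.rsplit(" ", 1)
        pairs.append((label, float(prob)))
    return pairs


if __name__ == "__main__":
    # Made-up label map and inference results, for illustration only.
    sample_map = {0: "cat", 1: "dog"}
    sample_results = [{"label": 1, "prob": 0.08}, {"label": 0, "prob": 0.92}]
    message = build_message(sample_results, sample_map)
    print(message)
    print(parse_message(message))
```

Keeping the formatting in one place means the publisher and the subscriber agree on the layout, and both halves can be checked on a laptop before deploying to the camera.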

I can’t wait to see what you come up with once you get a DeepLens in your hands. To learn more and to have the opportunity to take an AWS DeepLens home with you, be sure to attend one of the sixteen DeepLens Workshops at AWS re:Invent.

Pre-Order One Today

We’ll start shipping the AWS DeepLens in 2018, initially in the US. To learn more about pricing and availability, or to pre-order one of your own, visit the DeepLens page.

— Jeff;