Best Practices in Evaluating Elastic Load Balancing

This article describes the features and unique architecture of the Amazon Web Services (AWS) Elastic Load Balancing service. It provides you with best practices, so that you can avoid common pitfalls when you test and evaluate Elastic Load Balancing. The intended audience for this whitepaper is developers who have minimal experience using Elastic Load Balancing, but it will be especially relevant if you have used software or hardware load balancers in the past.

Submitted By: SpencerD@AWS

AWS Products Used: Amazon EC2

Created On: February 27, 2012


Abstract

To best evaluate Elastic Load Balancing, you need to understand its architecture.

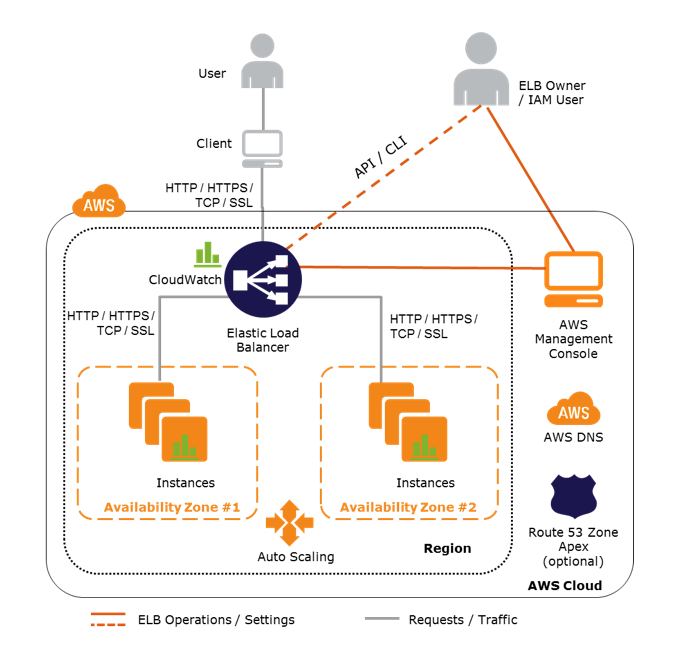

Overview of Elastic Load Balancing

Elastic Load Balancing automatically distributes incoming application traffic across multiple Amazon Elastic Compute Cloud (Amazon EC2) instances. You can set up an elastic load balancer to distribute incoming application traffic across Amazon EC2 instances in a single Availability Zone or in multiple Availability Zones. Elastic Load Balancing increases the fault tolerance of your applications, and it seamlessly provides the load balancing capacity needed to handle incoming application traffic.

You can build fault-tolerant applications by placing your Amazon EC2 instances in multiple Availability Zones. To achieve even more fault tolerance with less manual intervention, you can use Elastic Load Balancing. When you place your compute instances behind an elastic load balancer, you improve fault tolerance because the load balancer automatically balances traffic across multiple instances and multiple Availability Zones. Because the load balancer also checks the health of each instance, only healthy EC2 instances receive traffic.

Elastic Load Balancing also detects the health of EC2 instances. When it detects unhealthy Amazon EC2 instances, it no longer routes traffic to them. Instead, it spreads the load across the remaining healthy EC2 instances. If you have set up your EC2 instances in multiple Availability Zones, and all of your EC2 instances in one Availability Zone become unhealthy, Elastic Load Balancing will route traffic to your healthy EC2 instances in the other zones. When the unhealthy EC2 instances have been restored to a healthy state, Elastic Load Balancing will resume load balancing to those instances. Additionally, Elastic Load Balancing is itself a distributed system that is fault tolerant and actively monitored.

Elastic Load Balancing also integrates with Auto Scaling, which ensures that you have the back-end capacity available to meet varying traffic levels. For example, suppose that you want to make sure that the number of healthy EC2 instances behind an elastic load balancer is never fewer than two. You can use Auto Scaling to set this condition, and when Auto Scaling detects that the condition has been met, it automatically adds the requisite number of EC2 instances to your Auto Scaling group. As another example, if you want to add EC2 instances when the latency of any one of your instances exceeds 4 seconds over any 15-minute period, you can set that condition. Auto Scaling will take the appropriate action on your EC2 instances, even when they are running behind an elastic load balancer. Auto Scaling works equally well for scaling EC2 instances whether or not you use Elastic Load Balancing.

One of the major benefits of Elastic Load Balancing is that it abstracts out the complexity of managing, maintaining, and scaling load balancers. The service is designed to automatically add and remove capacity as needed, without needing any manual intervention.

Architecture of the Elastic Load Balancing Service and How It Works

There are two logical components in the Elastic Load Balancing service architecture: load balancers and a controller service. The load balancers are resources that monitor traffic and handle requests that come in through the Internet. The controller service monitors the load balancers, adds and removes capacity as needed, and verifies that load balancers are behaving properly.

Scaling Elastic Load Balancers

After you create an elastic load balancer, you must configure it to accept incoming traffic and route requests to your EC2 instances. The controller stores these configuration parameters and ensures that all of the load balancers are operating with the correct configuration. The controller also monitors the load balancers and manages the capacity used to handle client requests. It increases capacity by using either larger resources (resources with higher performance characteristics) or more individual resources. When the load balancer scales, the Elastic Load Balancing service updates the load balancer's Domain Name System (DNS) record so that the IP addresses of the new resources are registered in DNS. The DNS record has a Time-to-Live (TTL) of 60 seconds, with the expectation that clients will look up the record again at least every 60 seconds. By default, Elastic Load Balancing returns multiple IP addresses when clients resolve the DNS name, and the records are randomly ordered on each DNS resolution request. As the traffic profile changes, the controller service scales the load balancers to handle more requests, scaling equally in all Availability Zones.
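To see this DNS behavior from a client's point of view, you can resolve the load balancer's DNS name repeatedly and compare the answers. The following Python sketch uses only the standard library `socket.getaddrinfo` call; the function name `resolve_all` is our own, and it assumes IPv4 (A) records.

```python
from __future__ import annotations

import socket


def resolve_all(hostname: str, port: int = 80) -> list[str]:
    """Return each distinct IPv4 address that DNS currently returns for hostname."""
    infos = socket.getaddrinfo(hostname, port, socket.AF_INET, socket.SOCK_STREAM)
    addresses: list[str] = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]  # for AF_INET, sockaddr is (ip, port)
        if ip not in addresses:
            addresses.append(ip)  # keep the resolver's order, drop duplicates
    return addresses
```

For example, calling `resolve_all` with your load balancer's DNS name once a minute during a test should show the set of addresses (and their order) changing as the service scales. Your operating system or a local resolver may cache answers, so results can lag behind the published record.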

Your load balancer also performs health checks on the EC2 instances that are registered with it. An instance must pass the number of successful health checks defined in the Elastic Load Balancing configuration before it is considered healthy and in service. For example, if you set the health check interval to 20 seconds and the number of required successful health checks to 10, it will take at least 200 seconds (20 × 10) before Elastic Load Balancing routes traffic to a newly registered instance. The health check also defines a failure threshold. For example, if you set the interval to 20 seconds and the failure threshold to 4, then when an instance stops responding to requests, at least 80 seconds (20 × 4) will elapse before it is taken out of service. If an instance is terminated, traffic will no longer be sent to it, but there can be a delay before the load balancer becomes aware of the termination. For this reason, it is important to de-register your instances before terminating them; de-registered instances are removed from service much sooner.
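The de-register-then-terminate order can be scripted. The sketch below assumes the AWS SDK for Python (boto3) and its `elb` client, which targets the load balancers this article describes (now called Classic Load Balancers). The clients are passed in rather than created inside the function, and the function name `retire_instance` is hypothetical.

```python
from typing import Any


def retire_instance(elb_client: Any, ec2_client: Any, lb_name: str, instance_id: str) -> None:
    """De-register an instance from its load balancer, then terminate it."""
    # Step 1: take the instance out of the load balancer's pool explicitly, so
    # traffic stops promptly instead of after the service notices the termination.
    elb_client.deregister_instances_from_load_balancer(
        LoadBalancerName=lb_name,
        Instances=[{"InstanceId": instance_id}],
    )
    # Step 2: only now terminate the EC2 instance.
    ec2_client.terminate_instances(InstanceIds=[instance_id])
```

A call might look like `retire_instance(boto3.client("elb"), boto3.client("ec2"), "my-test-lb", "i-0123456789abcdef0")`, where the load balancer name and instance ID are placeholders.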

Planning Your Load Test Scenario

Many developers have used load testing tools in a virtual private server (VPS) or in a physical hardware environment, and they understand how to evaluate the behavior of load balancers in these contexts. They evaluate the load balancers to make the following determinations:

- How many application servers do I need to support various traffic levels?

- How many load balancers do I need to distribute the traffic without a decrease in response time?

- Will my application continue to operate in the event of a hardware or network failure?

Although all of these questions are important, depending on how you configure your application, questions one and two might not be as important in the EC2 environment. If you use Auto Scaling, then Auto Scaling will add and remove application servers as needed, so that you do not have to make this decision up front. When you use Elastic Load Balancing, you do not need to be concerned with question two, because you only pay for the hours that Elastic Load Balancing is running and the data it processes, not the actual capacity that may be needed to service your application.

Because the goal of Elastic Load Balancing is to scale to meet the demands of your application, and because this is done by updating the DNS records for your Elastic Load Balancer, you need to be aware of how some of the features of the Elastic Load Balancing service will affect your test scenario.

DNS Resolution

There are a variety of load testing tools, and most of them were designed to answer the question of how many servers a business must procure, based on the amount of traffic each server can handle. To test server load in that situation, it was logical to ramp up the traffic quickly to find the point at which a server became saturated, and then to repeat the tests with different request and response sizes to determine the factors affecting the saturation point.

When you create an elastic load balancer, a default level of capacity is allocated and configured. As Elastic Load Balancing sees changes in the traffic profile, it will scale up or down. The time required for Elastic Load Balancing to scale can range from 1 to 7 minutes, depending on the changes in the traffic profile. When Elastic Load Balancing scales, it updates the DNS record with the new list of IP addresses. To ensure that clients are taking advantage of the increased capacity, Elastic Load Balancing uses a TTL setting on the DNS record of 60 seconds. It is critical that you factor this changing DNS record into your tests. If you do not ensure that DNS is re-resolved or use multiple test clients to simulate increased load, the test may continue to hit a single IP address when Elastic Load Balancing has actually allocated many more IP addresses. Because your end users will not all be resolving to that single IP address, your test will not be a realistic sampling of real-world behavior.
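If your load generator lets you choose the target address for each request, one way to honor the 60-second TTL is to cache the resolved addresses for that long and rotate through them. This Python sketch is a hypothetical helper, not part of any load testing tool; the injectable `resolver` and `clock` parameters exist only so that its behavior can be checked without a real load balancer.

```python
import itertools
import socket
import time
from typing import Callable, Iterator, List, Optional


class ReResolvingTarget:
    """Hand out load balancer IPs per request, re-resolving DNS after the TTL expires."""

    def __init__(self, hostname: str, ttl: float = 60.0,
                 resolver: Optional[Callable[[str], List[str]]] = None,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.hostname = hostname
        self.ttl = ttl  # 60 seconds matches the TTL on the load balancer's DNS record
        self.resolver = resolver or self._system_resolve
        self.clock = clock
        self.lookups = 0
        self._expires_at = float("-inf")  # forces a lookup on first use
        self._cycle: Optional[Iterator[str]] = None

    @staticmethod
    def _system_resolve(hostname: str) -> List[str]:
        infos = socket.getaddrinfo(hostname, 80, socket.AF_INET, socket.SOCK_STREAM)
        # Keep the order DNS returned, dropping duplicates.
        return list(dict.fromkeys(info[4][0] for info in infos))

    def next_ip(self) -> str:
        now = self.clock()
        if now >= self._expires_at:
            addresses = self.resolver(self.hostname)
            if not addresses:
                raise RuntimeError(f"no addresses returned for {self.hostname}")
            self._cycle = itertools.cycle(addresses)  # rotate across every returned IP
            self._expires_at = now + self.ttl
            self.lookups += 1
        return next(self._cycle)
```

When you connect to an IP address chosen this way, send the load balancer's DNS name in the HTTP `Host` header so that the requests look the same as those from ordinary clients.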

Sticky Sessions

Elastic Load Balancing supports sticky sessions (also known as session affinity) using cookies. By default, this feature is disabled, and enabling it is a simple change. There are two types of stickiness policies that you can use, but the net effect is the same: if the elastic load balancer has sticky sessions enabled, a user's requests will be routed to the same back-end instance as the user continues to access your application. If you use sticky sessions, decide how your load tests will exercise this feature, and consider how sticky sessions can be an issue both in load testing and in the real world.

For example, consider an application that normally has four instances serving a site. Each user receives a cookie that instructs Elastic Load Balancing to send that user's requests to one of the instances for a duration of ten minutes. When a major change in traffic occurs and the number of users coming to your site triples, all of these new users will get cookies that direct their requests to one of the original four instances. No matter how much capacity you add to the back end, a large number of users will continue to have their requests sent to the original four instances until their cookies expire.
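The effect in this example can be made concrete with a small model. The sketch below is hypothetical and simplified: it assumes that every user received a ten-minute cookie at the moment of the surge, that new instances come online immediately afterward, and that users are assigned round-robin. It is not how Elastic Load Balancing itself chooses instances.

```python
from collections import Counter
from typing import Dict, List


def pinned_users_per_instance(users: int, original: List[str], added: List[str],
                              minutes_after_surge: float,
                              cookie_minutes: float = 10.0) -> Dict[str, int]:
    """Count users served by each instance in a simplified sticky-session model."""
    if minutes_after_surge < cookie_minutes:
        pool = original          # cookies still valid: users stay on the original instances
    else:
        pool = original + added  # cookies expired: users are spread across all instances
    load = Counter({name: 0 for name in original + added})
    for user in range(users):
        load[pool[user % len(pool)]] += 1  # round-robin assignment (an assumption)
    return dict(load)
```

With 3,000 users, four original instances, and eight added instances, this model pins 750 users to each original instance and none to the new ones until the cookies expire, after which the load evens out at 250 users per instance.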

Although sticky sessions can be a powerful feature, it is important to think about how they fit into your architecture and whether they are a good solution for your scenario.
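If you decide to enable sticky sessions for a test, the change can be scripted. This sketch assumes boto3's `elb` client and uses the load-balancer-generated (duration-based) cookie policy; the other policy type, created with `create_app_cookie_stickiness_policy`, follows a cookie that your application sets. The function name, load balancer name, port, and policy name are placeholders.

```python
from typing import Any


def enable_lb_cookie_stickiness(elb_client: Any, lb_name: str, listener_port: int,
                                policy_name: str, expiration_seconds: int) -> None:
    """Enable duration-based (load balancer cookie) stickiness on one listener."""
    # Create a policy whose stickiness cookie expires after expiration_seconds.
    elb_client.create_lb_cookie_stickiness_policy(
        LoadBalancerName=lb_name,
        PolicyName=policy_name,
        CookieExpirationPeriod=expiration_seconds,
    )
    # Attach it to the listener. This call replaces the listener's policy list,
    # so this sketch leaves only the new stickiness policy on that port.
    elb_client.set_load_balancer_policies_of_listener(
        LoadBalancerName=lb_name,
        LoadBalancerPort=listener_port,
        PolicyNames=[policy_name],
    )
```

Because the second call replaces the listener's whole policy list, an HTTPS listener that already has other policies (such as an SSL negotiation policy) would need those names included in `PolicyNames` as well.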

Health Checks

Elastic Load Balancing will perform health checks on back-end instances, using the configuration that you supply. When an instance is registered with Elastic Load Balancing, it will not be considered healthy until the number of successful health checks that define a healthy state are completed. The instance will also be removed from the pool (but still registered with Elastic Load Balancing) when the limit on unsuccessful health checks is reached. As long as an instance is registered with Elastic Load Balancing, the load balancer will continue to perform health checks on an instance. If you set a long interval for health checks and/or a high healthy threshold, it will take more time for instances to start receiving traffic from Elastic Load Balancing. This is especially important when you use automated processes and systems (such as Auto Scaling) to make sure capacity is added to your application pool.
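The timing described here is simple multiplication. The Python sketch below encodes the article's estimates (interval × healthy threshold before an instance receives traffic, interval × failure threshold before it is removed) and builds the `HealthCheck` dictionary accepted by boto3's `elb` `configure_health_check` call. The class name and the example target `HTTP:80/ping` are assumptions.

```python
from dataclasses import dataclass


@dataclass
class HealthCheckSettings:
    interval_seconds: int     # time between health checks
    timeout_seconds: int      # time to wait for each health check response
    healthy_threshold: int    # successes needed before an instance is in service
    unhealthy_threshold: int  # failures needed before it is taken out of service

    def seconds_until_in_service(self) -> int:
        # The article's lower-bound estimate: interval x healthy threshold.
        return self.interval_seconds * self.healthy_threshold

    def seconds_until_out_of_service(self) -> int:
        # The same estimate for removal: interval x failure threshold.
        return self.interval_seconds * self.unhealthy_threshold

    def as_elb_health_check(self, target: str) -> dict:
        """Build the HealthCheck argument for the boto3 elb configure_health_check call."""
        if self.timeout_seconds >= self.interval_seconds:
            raise ValueError("the timeout must be shorter than the interval")
        return {
            "Target": target,
            "Interval": self.interval_seconds,
            "Timeout": self.timeout_seconds,
            "UnhealthyThreshold": self.unhealthy_threshold,
            "HealthyThreshold": self.healthy_threshold,
        }
```

With the earlier example values (20-second interval, healthy threshold 10, failure threshold 4), the estimates are 200 and 80 seconds; a 10-second interval with both thresholds at 2 cuts the wait before a new instance receives traffic to about 20 seconds. The dictionary could be passed as `configure_health_check(LoadBalancerName="my-test-lb", HealthCheck=...)`, with a placeholder load balancer name.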

As you plan your architecture and your load tests, it is important to decide how health checks will be managed. Consider whether you need a lightweight page that is checked frequently to make sure the web server is responding, or a heavyweight page that is checked less frequently but verifies that all the parts of your application are functioning properly. Health check requests that Elastic Load Balancing makes to your instances are excluded from the metrics reported to Amazon CloudWatch, but they will appear in your application's logs (if logging is enabled). In addition to the health check that you configure, Elastic Load Balancing performs more frequent checks that only open a connection to your instances; this check is not used to determine the health state of an instance but instead ensures that the service is aware when a registered instance is terminated.
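To make the lightweight-versus-heavyweight choice concrete, here is a minimal Python standard-library handler that serves both kinds of page. The paths `/ping` and `/health` and the `dependency_checks` list are hypothetical; in practice each check might query a database or a downstream service.

```python
from http.server import BaseHTTPRequestHandler
from typing import Callable, List


class HealthCheckHandler(BaseHTTPRequestHandler):
    """Serve a cheap /ping page and a deeper /health page (hypothetical paths)."""

    # Hypothetical dependency probes for the heavyweight check,
    # for example a function that runs a trivial query against your database.
    dependency_checks: List[Callable[[], bool]] = []

    def do_GET(self) -> None:
        if self.path == "/ping":
            # Lightweight: proves only that the web server is answering.
            self._reply(200, b"OK")
        elif self.path == "/health":
            # Heavyweight: returns 503 if any dependency probe reports a problem.
            healthy = all(check() for check in self.dependency_checks)
            self._reply(200 if healthy else 503, b"OK" if healthy else b"DEGRADED")
        else:
            self._reply(404, b"Not Found")

    def _reply(self, status: int, body: bytes) -> None:
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

You would then point the load balancer's health check target at whichever path matches your choice, for example `HTTP:80/ping` for a frequent, inexpensive check. Note that these requests still show up in the server's request log, as the paragraph above describes.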

Back-end Authentication

If SSL is used to encrypt communication with back-end instances, you can optionally enable authentication of back-end instances. When authentication is configured, the elastic load balancer communicates with a back-end instance only if the public key of the instance's certificate is in the configured whitelist of public keys. This feature provides an additional level of protection and security, but it also slightly lowers request throughput. Depending on your expectations for response time, this feature may or may not be appropriate for your application.
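Back-end authentication is configured with two load balancer policies and one back-end port assignment. The sketch below assumes boto3's `elb` client and the policy types documented for these load balancers (`PublicKeyPolicyType` and `BackendServerAuthenticationPolicyType`). The function name and policy names are placeholders, and `public_key` must be supplied in the format the Elastic Load Balancing documentation specifies.

```python
from typing import Any


def enable_backend_authentication(elb_client: Any, lb_name: str, instance_port: int,
                                  public_key: str,
                                  key_policy: str = "my-public-key-policy",
                                  auth_policy: str = "my-backend-auth-policy") -> None:
    """Whitelist one back-end public key for an encrypted instance port."""
    # 1. A PublicKeyPolicyType policy holds the trusted public key.
    elb_client.create_load_balancer_policy(
        LoadBalancerName=lb_name,
        PolicyName=key_policy,
        PolicyTypeName="PublicKeyPolicyType",
        PolicyAttributes=[{"AttributeName": "PublicKey", "AttributeValue": public_key}],
    )
    # 2. A BackendServerAuthenticationPolicyType policy references the key policy.
    elb_client.create_load_balancer_policy(
        LoadBalancerName=lb_name,
        PolicyName=auth_policy,
        PolicyTypeName="BackendServerAuthenticationPolicyType",
        PolicyAttributes=[{"AttributeName": "PublicKeyPolicyName",
                           "AttributeValue": key_policy}],
    )
    # 3. Apply the authentication policy to the back-end instance port.
    elb_client.set_load_balancer_policies_for_backend_server(
        LoadBalancerName=lb_name,
        InstancePort=instance_port,
        PolicyNames=[auth_policy],
    )
```

Running a short load test with and without this policy applied is one way to measure how much the throughput reduction matters for your application.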

Selecting a Testing Framework

Regardless of the details of the test setup, you will need some software or a framework for executing load tests. Your load testing setup must be able to increase the number of requests over time, and it may need to spread the requests across multiple clients or agents. Many load testing tools support the creation of separate load generating clients, while other load testing tools will require you to make adjustments to your testing implementation. The three common scenarios used for testing are described in the following sections.

Single Client Tests

In single client tests, a load generator will issue requests to the server using the same client. In most cases, the client does not re-resolve the DNS after the test is initiated. A good example of this type of test is Apache Bench (ab). If you are using this type of testing tool, there are two options:

- Write discrete tests at a given sample size and concurrency, and invoke each set of tests separately so that the client re-resolves DNS for each run (you may even need to force a re-resolution, depending on the operating system and other factors). Save detailed request logs so that you can aggregate them into a single view of the request details.

- Launch multiple client instances and initiate the tests on the different instances at approximately the same time. AWS CloudFormation can help you do this: use a template to launch multiple clients with your load test script installed, and then issue remote SSH commands to start the tests. You may want to bring up more load generating clients based on Auto Scaling rules (for example, when the average CPU of the existing load clients reaches a certain level, add more load generators).
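For the first option, a small script can generate one Apache Bench invocation per batch, so that each batch starts a new `ab` process (and therefore a new DNS lookup, subject to operating system caching). This sketch only builds the command lines. It uses the `ab` options `-n` (number of requests), `-c` (concurrency), and `-g` (write per-request measurements to a TSV file); the function name and file naming scheme are assumptions.

```python
from typing import List, Tuple


def ab_batches(url: str, batches: List[Tuple[int, int]],
               log_prefix: str = "ab-run") -> List[List[str]]:
    """Build one Apache Bench command per (total_requests, concurrency) batch.

    Running each command as a separate ab process means each batch starts
    with its own DNS lookup; -g saves per-request timings for aggregation.
    """
    commands = []
    for index, (total_requests, concurrency) in enumerate(batches):
        commands.append([
            "ab",
            "-n", str(total_requests),          # requests to perform in this batch
            "-c", str(concurrency),             # requests to run at the same time
            "-g", f"{log_prefix}-{index}.tsv",  # per-request measurements (TSV)
            url,
        ])
    return commands
```

You could run the commands in order with `subprocess.run(cmd, check=True)`, pausing between batches, and then merge the TSV files. Note that `ab` expects a URL that includes a path, such as `http://my-test-lb-1234567890.us-east-1.elb.amazonaws.com/` (a placeholder name).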

However, depending on the load you are trying to generate, your test may be constrained by the client's ability to issue requests. In this case, you need to consider distributed tests.

Built-in Multi-Client Tests

Some testing frameworks will generate multiple clients for executing the tests. One example is the curl-loader open source software performance testing tool. Because each client runs in its own isolated process, it will re-resolve DNS on its own. Like the single client tests, you may need to consider distributed tests if the load to test is greater than the load that the client can generate.

Distributed Tests

To test Elastic Load Balancing with many clients, you can use service providers that perform automated website load testing. Depending on your application, this may be the easiest and most efficient way to load test your application. If this type of service won't work for you, then you could consider tools that help you distribute tests, such as the open source Fabric framework combined with an interesting approach called Bees with Machine Guns, which uses the Amazon EC2 environment to launch test clients that execute tests and report the results back to a test controller. There are many ways to approach the need for distributed testing, but in most cases, especially at very high loads, a distributed test framework can be the most effective approach for simulating real-world traffic.

Recommended Testing Approach

There are some specific approaches and considerations that you should account for in your Elastic Load Balancing test plan. It is critical that you test not only the saturation and breaking points but also the "normal" traffic profile you expect. If your site receives a consistent flow of traffic during normal business hours, then you should simulate the steady states of low and high traffic, as well as the morning and evening periods in which the traffic profile transitions from low to high or vice versa. If you expect significant changes in traffic over short periods of time, then you should test for them. If your application is an internal intranet site, it is unlikely that traffic will go from 1,000 users to 100,000 users in five minutes, but if you are building an application that is likely to have flash traffic, then you should test in this environment so that you understand how not only Elastic Load Balancing but also your application and each of its components will behave.

Ramping Up Testing

Once you have a testing tool in place, you will need to define the growth in the load. We recommend that you increase the load at a rate of no more than 50 percent every five minutes. Both step patterns and linear patterns for load generation should work well with Elastic Load Balancing. If you are going to use a random load generator, then it is important that you set a ceiling for the spikes so that they do not exceed the load that Elastic Load Balancing can handle until it scales (see Pre-Warming the Load Balancer).
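The 50-percent-per-five-minutes guideline translates directly into a stair-step plan. This Python sketch computes such a plan; the function name and the choice to express load as requests per second are assumptions, and you would feed the steps into whatever load generator you use.

```python
from typing import List, Tuple


def ramp_schedule(start_rps: float, target_rps: float, step_minutes: int = 5,
                  max_growth: float = 0.5) -> List[Tuple[int, float]]:
    """Return (minute, requests_per_second) steps growing at most max_growth per step."""
    if start_rps <= 0 or target_rps < start_rps:
        raise ValueError("expected 0 < start_rps <= target_rps")
    plan = [(0, start_rps)]
    minute, rate = 0, start_rps
    while rate < target_rps:
        # Grow by at most 50 percent per five-minute step, never overshooting the target.
        rate = min(rate * (1 + max_growth), target_rps)
        minute += step_minutes
        plan.append((minute, rate))
    return plan
```

For example, `ramp_schedule(100, 500)` yields 100, 150, 225, and 337.5 requests per second at five-minute intervals and reaches 500 at minute 20.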

If your application architecture involves using session affinity with your load balancer, then some additional configuration steps are required, as described below.

Load Testing with Session Affinity

If your configuration leverages session affinity, then it is important for the load generator to use multiple clients, so that Elastic Load Balancing can behave as it would in the real world. If you do not make these adjustments, then Elastic Load Balancing will always send requests to the same back-end servers, potentially overwhelming the back-end servers well before Elastic Load Balancing has to scale to meet the load. To test in this scenario, you will need to use a load testing solution that uses multiple clients to generate the load.

Monitoring the Environment

One of the benefits of Elastic Load Balancing is that it provides a number of metrics through Amazon CloudWatch. While you are performing load tests, there are three areas that are important to monitor: your load balancer, your load generating clients, and your application instances registered with Elastic Load Balancing (as well as EC2 instances that your application depends on).

Monitoring Elastic Load Balancing

Elastic Load Balancing provides the following metrics through Amazon CloudWatch (the documentation at https://aws.amazon.com/documentation/cloudwatch/ provides detailed information on these metrics):

- Latency

- Request count

- Healthy hosts

- Unhealthy hosts

- Backend 2xx-5xx response count

- Elastic Load Balancing 4xx and 5xx response count

As you test your application, all of these metrics are important to watch. The particular items of interest are likely to be the Elastic Load Balancing 5xx response count, the backend 5xx response count, and latency.
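These metrics can also be pulled programmatically while a test runs. The sketch below assumes boto3's CloudWatch client and the `AWS/ELB` metric names used for these load balancers (for example `HTTPCode_ELB_5XX` and `UnHealthyHostCount`). The statistic chosen for each metric, the 60-second period, and the function name are our own assumptions.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List

# Metric name -> statistic that is usually most informative during a load test (assumed).
ELB_METRICS = {
    "Latency": "Average",
    "RequestCount": "Sum",
    "HealthyHostCount": "Average",
    "UnHealthyHostCount": "Average",
    "HTTPCode_Backend_5XX": "Sum",
    "HTTPCode_ELB_5XX": "Sum",
}


def fetch_elb_metrics(cloudwatch: Any, lb_name: str, minutes: int = 30) -> Dict[str, List[dict]]:
    """Return each metric's one-minute datapoints for the last `minutes`, oldest first."""
    end = datetime.now(timezone.utc)
    start = end - timedelta(minutes=minutes)
    results: Dict[str, List[dict]] = {}
    for metric, statistic in ELB_METRICS.items():
        response = cloudwatch.get_metric_statistics(
            Namespace="AWS/ELB",
            MetricName=metric,
            Dimensions=[{"Name": "LoadBalancerName", "Value": lb_name}],
            StartTime=start,
            EndTime=end,
            Period=60,
            Statistics=[statistic],
        )
        # CloudWatch does not guarantee datapoint order, so sort by timestamp.
        results[metric] = sorted(response["Datapoints"], key=lambda p: p["Timestamp"])
    return results
```

A call such as `fetch_elb_metrics(boto3.client("cloudwatch"), "my-test-lb")` (placeholder name) could run every few minutes during the test, so that rising ELB 5xx counts or latency are visible alongside the load you are applying.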

Monitoring the Load Generating Clients

It is also important to monitor the clients that generate load, to make sure they can send requests and receive responses at the load you are testing. If you use a single test client running in Amazon EC2, make sure its instance is large enough to generate that load. At higher loads, you will need multiple clients, because a single client can become network-bound and skew the test results.

Monitoring Your Application Instances

We recommend that you monitor your application instances just as you would with any other tests. The typical monitoring tools apply, such as top for Linux or Performance Analysis of Logs (PAL) for Windows, but you can also use Amazon CloudWatch metrics for your instances (see the CloudWatch documentation at https://aws.amazon.com/documentation/cloudwatch/). You may want to consider enabling detailed, one-minute metrics for your application instances to ensure that you have good visibility into their behavior while under test.
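Detailed (one-minute) monitoring can be enabled through the EC2 API. This short sketch assumes boto3's `ec2` client and its `monitor_instances` call; the function name and instance IDs are placeholders.

```python
from typing import Any, Dict, Iterable


def enable_detailed_monitoring(ec2_client: Any, instance_ids: Iterable[str]) -> Dict[str, str]:
    """Turn on one-minute CloudWatch metrics; return each instance's monitoring state."""
    response = ec2_client.monitor_instances(InstanceIds=list(instance_ids))
    # Right after the call the state is typically "pending" before becoming "enabled".
    return {item["InstanceId"]: item["Monitoring"]["State"]
            for item in response["InstanceMonitorings"]}
```

For example, `enable_detailed_monitoring(boto3.client("ec2"), ["i-0123456789abcdef0"])` would be run once before the test starts.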

Common Pitfalls When Testing Elastic Load Balancing

Elastic Load Balancing Capacity Limits Reached

Elastic Load Balancing will likely never reach true capacity limits, but until it scales based on the metrics, there can be periods in which your load balancer will return an HTTP 503 error when it cannot handle any more requests. The load balancers do not try to queue all requests, so if they are at capacity, additional requests will fail. If traffic grows over time, then this behavior works well, but in the case of significant spikes in traffic or in certain load testing scenarios, the traffic may be sent to your load balancer at a rate that increases faster than Elastic Load Balancing can scale to meet it. There are two options for addressing this situation, described below.

Pre-Warming the Load Balancer

Elastic Load Balancing can handle the vast majority of use cases for our customers without requiring "pre-warming" (configuring the load balancer to have the appropriate level of capacity based on expected traffic). In certain scenarios, such as when flash traffic is expected, or when a load test cannot be configured to increase traffic gradually, we recommend that you contact us to have your load balancer "pre-warmed". We will then configure the load balancer to have the appropriate level of capacity based on the traffic that you expect. We will need to know the start and end dates of your tests or expected flash traffic, the expected request rate per second, and the total size of the typical request/response that you will be testing.

Controlled Load Testing Parameters

We recommend configuring the load test so that the traffic increases at no more than 50 percent over a five-minute interval. The test can be set up to use either a stair-step approach to traffic or a gradual curve. When this is configured to increase load even to large volumes over a period of time, Elastic Load Balancing will scale to meet the demand.

DNS Resolution

If clients do not re-resolve the DNS at least once per minute, then they will not use the new resources that Elastic Load Balancing adds to DNS. As a result, clients can continue to overwhelm a small portion of the allocated Elastic Load Balancing resources while Elastic Load Balancing as a whole is not heavily utilized. This is unlikely to be a problem in real-world scenarios, where many independent clients resolve DNS on their own, but it is a likely problem for load testing tools that do not offer the control needed to ensure that clients re-resolve DNS frequently.

Sticky Sessions and Unique Clients

If sticky sessions are enabled, it is critical that the load test framework not reuse connections and clients across requests, but instead create a unique client for each request. Otherwise, the clients will constantly send traffic to the same Elastic Load Balancing resources and the same back-end instances, overwhelming what is potentially a very small portion of the available capacity.
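One way to make every request look like it comes from a new client is to open a fresh connection per request and never send a cookie back. This Python standard-library sketch does that with `http.client`; the function name is hypothetical, and whether each new connection also triggers a fresh DNS lookup depends on your operating system's resolver cache.

```python
import http.client
from typing import List


def fetch_as_new_clients(host: str, port: int, path: str, count: int) -> List[int]:
    """Issue `count` requests, each from a brand-new simulated client.

    Every request opens its own connection and sends no Cookie header, so a
    stickiness cookie returned by an earlier response is never replayed.
    """
    statuses = []
    for _ in range(count):
        conn = http.client.HTTPConnection(host, port, timeout=10)  # fresh connection
        try:
            conn.request("GET", path, headers={"Connection": "close"})  # no Cookie header
            response = conn.getresponse()
            response.read()  # drain the body before closing the connection
            statuses.append(response.status)
        finally:
            conn.close()
    return statuses
```

Real load testing tools usually expose equivalent switches (disabling keep-alive and cookie handling), which are preferable to hand-written clients at high volume.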

Initial Capacity

Elastic Load Balancing has a starting point for its initial capacity, and it will scale up or down based on traffic. In some cases, the traffic that immediately starts coming in to the load balancer will be greater than the amount that the initial capacity configuration supports. Alternatively, if the load balancer is created and not used for some period of time (generally a few hours, but potentially as little as an hour), the load balancer may scale down before the traffic begins to reach it. This means that the load balancer has to scale up just to reach its initial capacity level.

Connection Timeouts

When configuring instances that will be registered with Elastic Load Balancing, it is important to ensure that the idle timeout on these instances is set to at least 60 seconds. Elastic Load Balancing closes idle connections after 60 seconds, and it expects back-end instances to have timeouts of at least 60 seconds. If you fail to adjust your instances, Elastic Load Balancing might incorrectly identify an instance as an unhealthy host and stop sending traffic to it.
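As an illustration of this requirement, the following Python standard-library back end keeps HTTP/1.1 connections open and times out a connection only after 75 seconds without data, longer than the 60-second idle timeout stated above. The 75-second value and the class name are assumptions; on a real web server you would change the equivalent setting instead, such as Apache's `KeepAliveTimeout` or nginx's `keepalive_timeout`.

```python
from http.server import BaseHTTPRequestHandler

ELB_IDLE_TIMEOUT_SECONDS = 60  # idle timeout stated in this article


class KeepAliveBackendHandler(BaseHTTPRequestHandler):
    """Hypothetical back-end handler whose idle timeout exceeds the load balancer's."""

    protocol_version = "HTTP/1.1"  # keep connections open between requests
    # socketserver applies this as the socket timeout, so a connection that
    # sends nothing for 75 seconds is closed; 75 is an assumed value above 60.
    timeout = 75

    def do_GET(self) -> None:
        body = b"hello"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))  # needed for keep-alive
        self.end_headers()
        self.wfile.write(body)
```

Because the back end waits longer than the load balancer before closing an idle connection, it is the load balancer that closes idle connections, rather than the instance dropping a connection the load balancer still expects to use.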

Additional Reading

Elastic Load Balancing Pages

- https://aws.amazon.com/elasticloadbalancing

- https://aws.amazon.com/documentation/elasticloadbalancing

CloudWatch Pages

- https://aws.amazon.com/documentation/cloudwatch/

Auto Scaling Pages

Distributed Testing Solutions

There are many potential solutions for distributed load testing. See below for a partial list of the partners that may be able to meet your testing needs. Please visit the solution providers section of the AWS site for more solutions: https://aws.amazon.com/solutions/solution-providers.