AWS AI: Innovation that drives results faster

AI agents and tools built for precision and performance, ready to run on day one.

Build AI your way

The future of business is being shaped by AI and intelligent agents. We envision billions of AI agents working alongside humans, fundamentally changing how organizations operate and innovate. Whether you're just starting with AI or ready to scale, AWS provides the flexibility to choose your path while maintaining control of your data and costs. AWS is helping companies and builders move beyond prompts and proofs of concept (POCs) to reimagine how work gets done with agentic AI: turning ideas into agents, code into capability, and effort into measurable impact. Choose AWS to get the freedom to invent your way, maximize the value of every AI investment, and build confidently with AI you can trust.

What's new in AWS AI

Discover breakthrough innovations powering the future of business

Five AI use cases that deliver value

Discover how leading businesses turn AI into action. From automating workflows and building intelligent applications to creating content, making data-driven decisions, and engaging customers—explore five proven use cases that make AI achievable for your business. See what's possible when you focus on outcomes that matter.

AI services and tools to create a business advantage

Agentic AI

Agentic AI represents the next frontier in computing—where intelligent agents reason, plan, and act autonomously to complete complex tasks with limited human involvement. From ready-to-deploy agents to comprehensive development tools to leading models, AWS provides everything organizations need to build and scale agentic AI.

Generative AI

Accelerate generative AI-powered innovation with enterprise-grade security and privacy, a choice of leading foundation models (FMs), a data-first approach, and infrastructure that pushes the envelope to deliver the highest performance while lowering costs. Organizations of every size in nearly every industry trust AWS to turn their prototypes, demos, and betas into real-world innovation and productivity gains.

Machine Learning

Get deeper insights from your data while lowering costs with AI and machine learning. Amazon SageMaker AI is a fully managed service that brings together the most comprehensive set of AI tools and capabilities to enable high-performance, low-cost AI model development for any use case. With SageMaker AI, you can build, train, customize, and deploy AI models at scale using complete development environments, purpose-built training infrastructure, AI agent-guided workflows (in preview), and optimized inference capabilities—all with enterprise-grade governance and security controls.

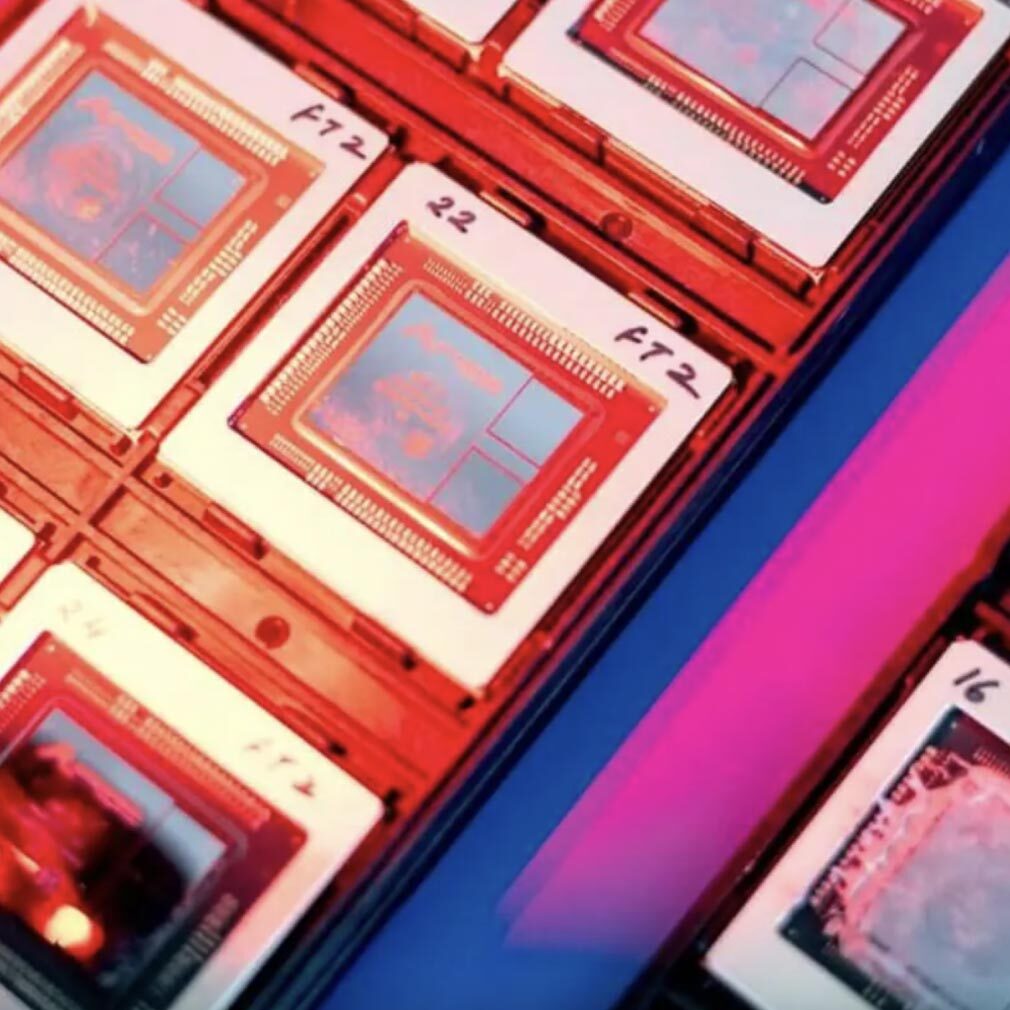

AI infrastructure

As AI adoption grows, so do the usage, management overhead, and cost of infrastructure resources. To maximize performance, lower costs, and avoid complexity when training foundation models and deploying them to production, AWS provides specialized infrastructure that's optimized for your AI use cases.

Data foundation for AI

AWS provides the most comprehensive set of data capabilities for an end-to-end data foundation that supports any workload or use case, including generative AI. Quickly and easily connect to and act on all your data with end-to-end data governance that helps your teams move faster with confidence. And with AI built into our data services, AWS simplifies data management, so you spend less time managing data and more time getting value out of it.

Building AI responsibly

The rapid growth of AI and intelligent agents brings promising innovation and new challenges. At AWS, we make responsible AI practical and scalable, freeing you to accelerate trusted AI innovation. Our science-based best practices and tools, including the AWS Well-Architected Responsible AI Lens, Amazon Bedrock Guardrails, and Amazon Bedrock AgentCore, help you build and operate AI responsibly across your use cases. Build with responsible AI practices from day one to move faster with confidence and earn customer trust.