AWS for Media & Entertainment

Deliver breakthrough experiences with cloud-based solutions for M&E

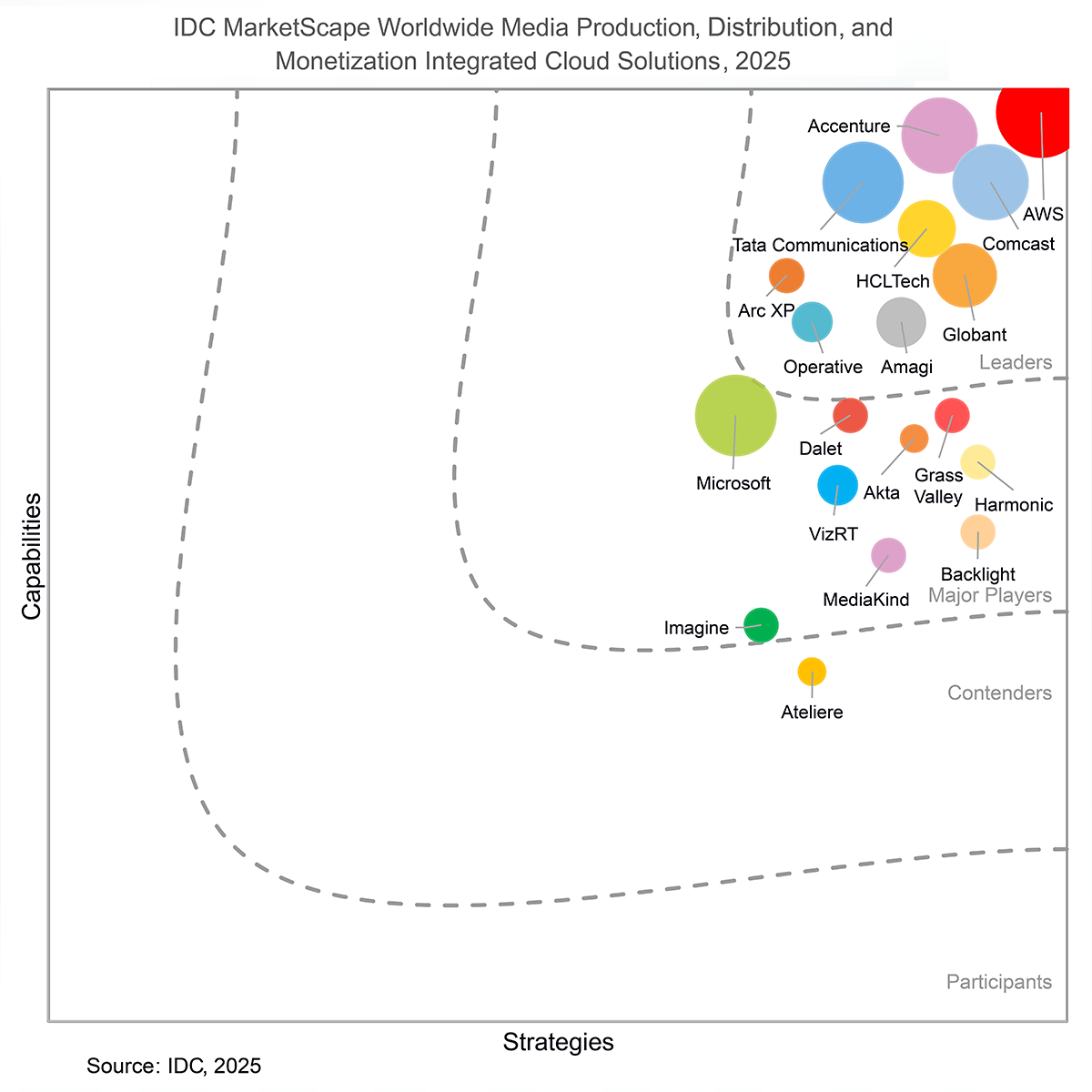

IDC MarketScape

IDC names Amazon Web Services (AWS) as a Leader in the 2025 MarketScape for Worldwide Media Production, Distribution, and Monetization Integrated Cloud Solutions. Download the report today.

IBC 2025 Demo Showcase

IBC2025 brings together the global media, entertainment, and technology community to shape the future of our industry. Explore the AWS for Media & Entertainment demos.

Create content. Connect workflows. Captivate audiences.

AWS for M&E helps customers reimagine their business models with the cloud. With a comprehensive set of services, solutions, and partners, M&E customers can keep pace with changes in viewing behavior, innovations in content creation, data-driven monetization, generative AI, and customer engagement.

Reimagine Media & Entertainment with AWS

Purpose-built services for M&E

Produce high-impact creative projects and outstanding viewing experiences with Media Services on AWS.

Tools for building generative AI applications

Generative AI-powered applications

Partners

Discover solutions from an extensive network of industry-leading AWS Partners who have demonstrated technical expertise and customer success in building M&E solutions on AWS.

AWS Marketplace

Media and entertainment solutions from AWS Partners are also available on AWS Marketplace, a digital catalog of third-party software, services, and data that makes it easy to find, buy, deploy, and manage software on AWS.

Amagi CLOUDPORTXpress

A cloud-based channel playout platform to launch and manage broadcast-grade linear channels.

Arc XP

An integrated ecosystem of cloud-based tools that create and distribute content, drive digital commerce, and deliver powerful experiences.

Aspera on Cloud

A hosted service to quickly and reliably send and share your files and data sets of any size and type across a hybrid cloud environment.