Why Amazon SageMaker?

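Amazon SageMaker is a fully managed service that brings together a broad set of tools to enable high-performance, low-cost machine learning (ML) for any use case. With SageMaker, you can build, train, and deploy ML models at scale using tools such as notebooks, debuggers, profilers, pipelines, and MLOps, all in one integrated development environment (IDE). SageMaker supports governance requirements with simplified access control and transparency over your ML projects. In addition, you can build your own foundation models (FMs), which are large models trained on massive datasets, using purpose-built tools to fine-tune, experiment with, retrain, and deploy them. SageMaker also offers access to hundreds of pretrained models, including publicly available FMs, that you can deploy with just a few clicks.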

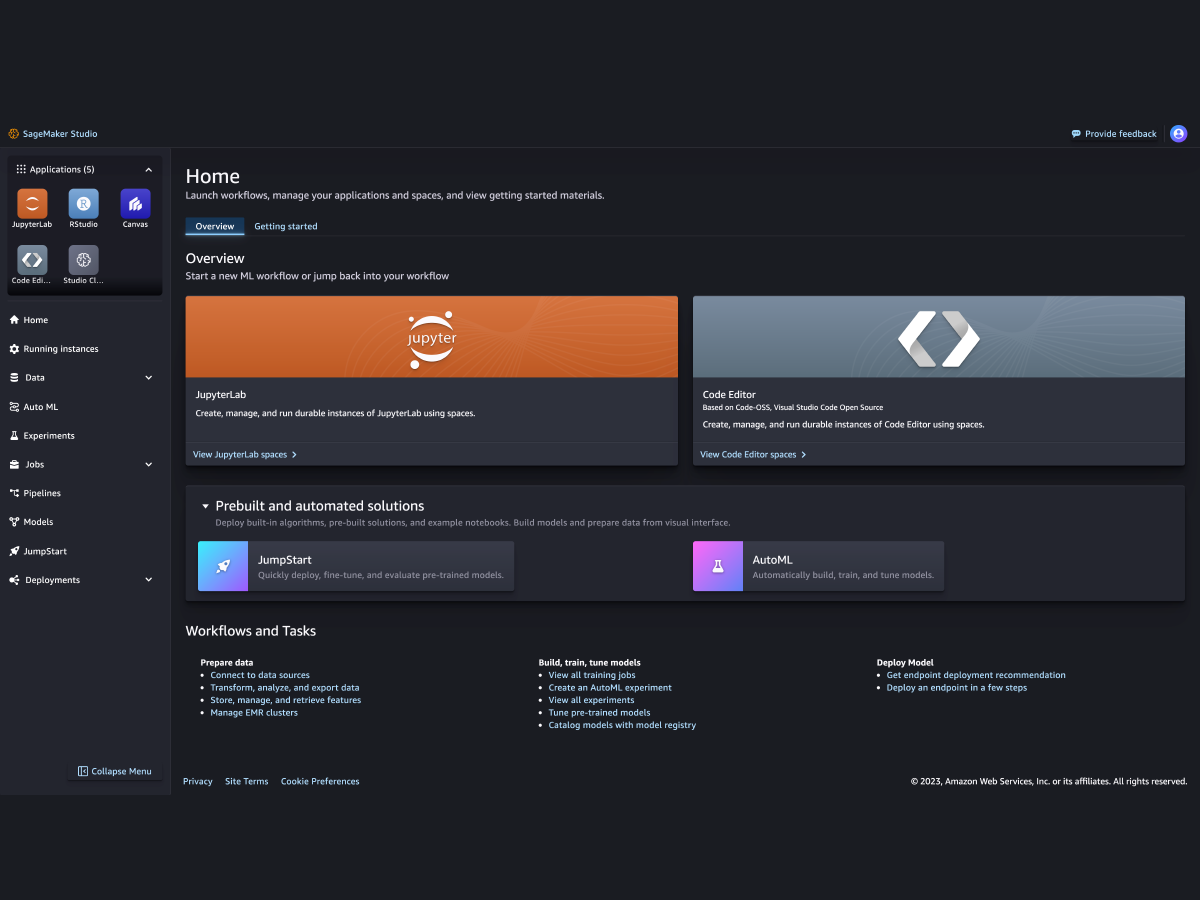

Amazon SageMaker overview

Amazon SageMaker model training overview

Benefits of SageMaker

Enable more people to innovate with ML

- Business analysts
- Data scientists
- ML engineers

Support for leading ML frameworks, toolkits, and programming languages