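AWS IoT Greengrass makes it easy to run machine learning inference locally on devices, using models that are built, trained, and optimized in the cloud. You can use machine learning models trained in Amazon SageMaker, or bring your own pre-trained models stored in Amazon S3.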

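Machine learning uses statistical algorithms that learn from existing data, a process called training, in order to make decisions about new data, a process called inference. During training, patterns and relationships in the data are identified to build a model. The model then lets a system make informed decisions about data it has not encountered before. Optimizing a model compresses its size so that it runs quickly. Training and optimizing ML models requires large amounts of compute, which makes the cloud a natural fit. Inference, by contrast, generally needs far less compute and is often performed in real time as new data becomes available. Getting inference results with very low latency is important so that IoT applications can respond quickly to local events.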

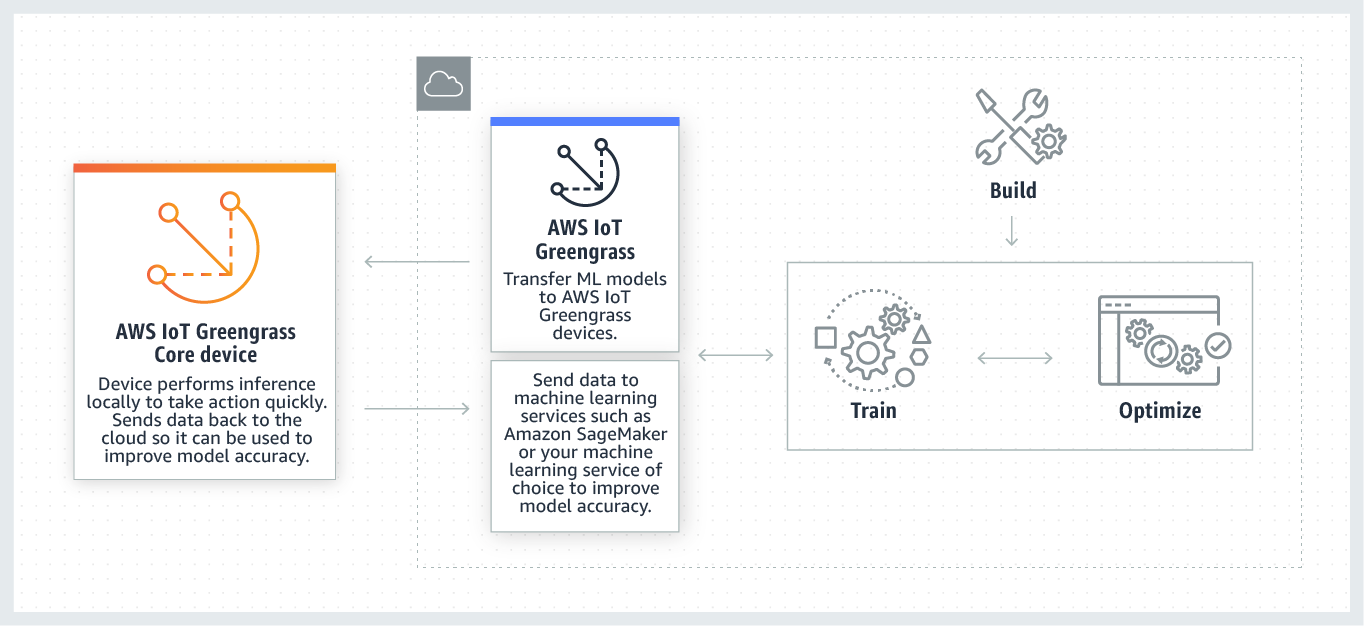

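AWS IoT Greengrass gives you the best of both worlds. You use machine learning models that are built, trained, and optimized in the cloud, and then run inference locally on devices. For example, you can build a predictive model in SageMaker for scene detection analysis, optimize it to run on any camera, and then deploy it to predict suspicious activity and send an alert. Data gathered from inference running on AWS IoT Greengrass can be sent back to SageMaker, labeled, and used to continuously improve the quality of the machine learning models.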
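The loop described above (infer locally, raise an alert, keep the data for later labeling) can be sketched roughly as follows. This is an illustrative sketch only and does not use the AWS IoT Greengrass or SageMaker APIs: `EdgeInferenceLoop`, `toy_model`, `alert_threshold`, and `drain_uploads` are hypothetical names, and the placeholder scoring function stands in for a model that would really be trained in the cloud and deployed to the device.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

Sample = Tuple[float, ...]


@dataclass
class EdgeInferenceLoop:
    """Hypothetical on-device loop: score locally, alert, queue data for labeling."""

    # Stand-in for a deployed model; assumed to return a score in [0, 1].
    predict: Callable[[Sequence[float]], float]
    # Assumed cutoff above which a sample counts as suspicious.
    alert_threshold: float = 0.8
    alerts: List[Sample] = field(default_factory=list)
    upload_queue: List[Tuple[Sample, float]] = field(default_factory=list)

    def handle(self, sample: Sequence[float]) -> bool:
        """Run inference on one sample; return True if an alert was raised."""
        score = self.predict(sample)  # local inference, no cloud round trip
        # Keep every sample and its score so it can be labeled later.
        self.upload_queue.append((tuple(sample), score))
        if score >= self.alert_threshold:
            self.alerts.append(tuple(sample))
            return True
        return False

    def drain_uploads(self) -> List[Tuple[Sample, float]]:
        """Return the queued samples and clear the queue (e.g. when connected)."""
        batch, self.upload_queue = self.upload_queue, []
        return batch


def toy_model(sample: Sequence[float]) -> float:
    """Placeholder model: mean of the features, clamped to [0, 1]."""
    mean = sum(sample) / len(sample)
    return max(0.0, min(1.0, mean))


if __name__ == "__main__":
    loop = EdgeInferenceLoop(predict=toy_model)
    for frame in ([0.1, 0.2], [0.9, 0.95]):
        print(frame, "alert" if loop.handle(frame) else "ok")
    print("samples queued for labeling:", len(loop.drain_uploads()))
```

Queuing samples rather than sending each one immediately reflects the assumption that an edge device may be offline for periods of time. The alert decision never depends on the cloud.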

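Benefits

Flexible

Deploy models to connected devices in a few clicks

Accelerate inference performance

Run inference on more devices

Run inference on connected devices with ease

Build more accurate models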

How it works

Use cases

Predictive industrial maintenance

Precision agriculture

Security

Retail and hospitality

Video processing

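Featured customers

AWS IoT Greengrass helps Yanmar make its greenhouse operations more intelligent by automatically detecting and recognizing the main growth stages of vegetables, so that it can grow more crops.

Electronic Caregiver uses AWS IoT Greengrass ML Inference to ensure high-quality care, and can push machine learning models directly to edge devices to keep patients safer.

With AWS IoT Greengrass, Vantage Power can push machine learning models to individual vehicles and detect battery failures one month in advance.

Featured partners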