
Guidance for DevOps on Amazon Redshift

Overview

Important: This Guidance requires the use of AWS CodeCommit, which is no longer available to new customers. Existing customers of AWS CodeCommit can continue using and deploying this Guidance as normal.

This Guidance demonstrates best practices for running development and operations (DevOps) on Amazon Redshift using both open source software and AWS services. DevOps is a set of practices that combine software development and IT operations to provide continuous integration and continuous delivery (CI/CD). It can help customers establish an agile software development process, shorten the development lifecycle, and deliver high-quality software.

How it works

AWS Services

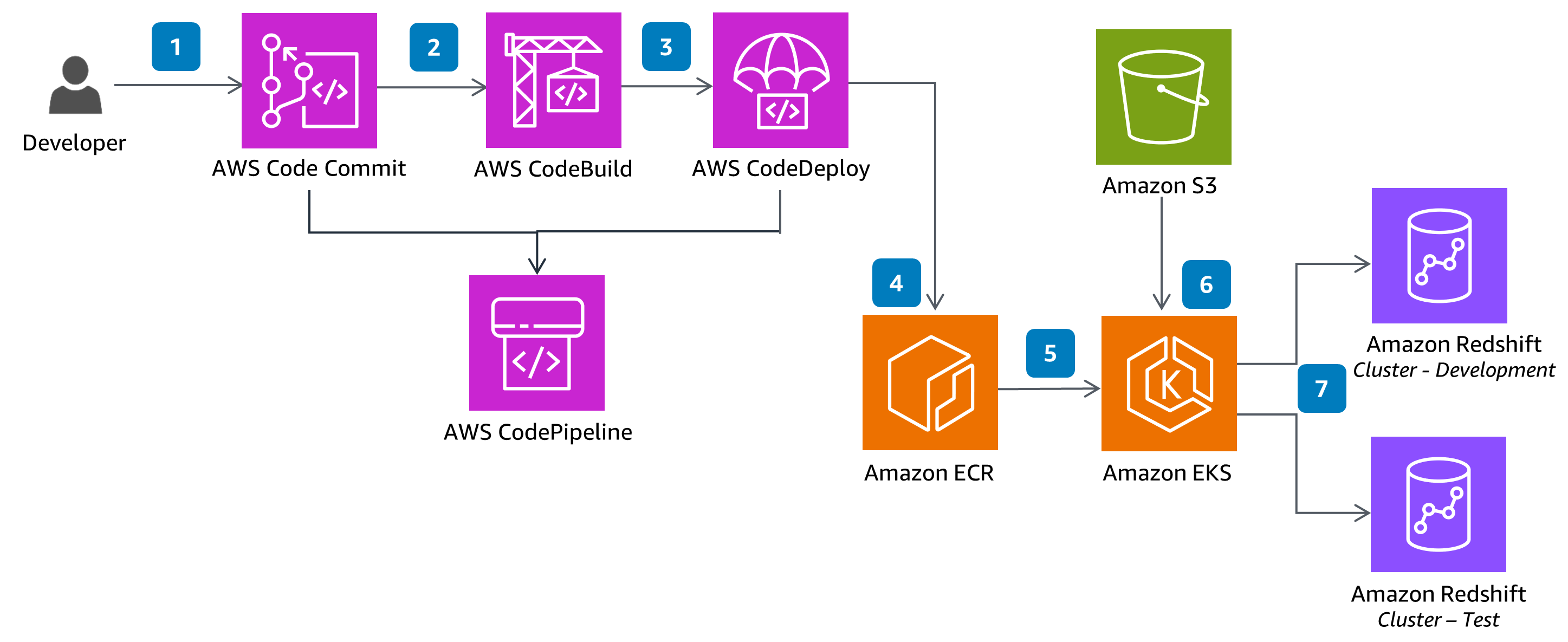

This architecture diagram shows best practices for running development and operations (DevOps) on Amazon Redshift with AWS services.

Open source software

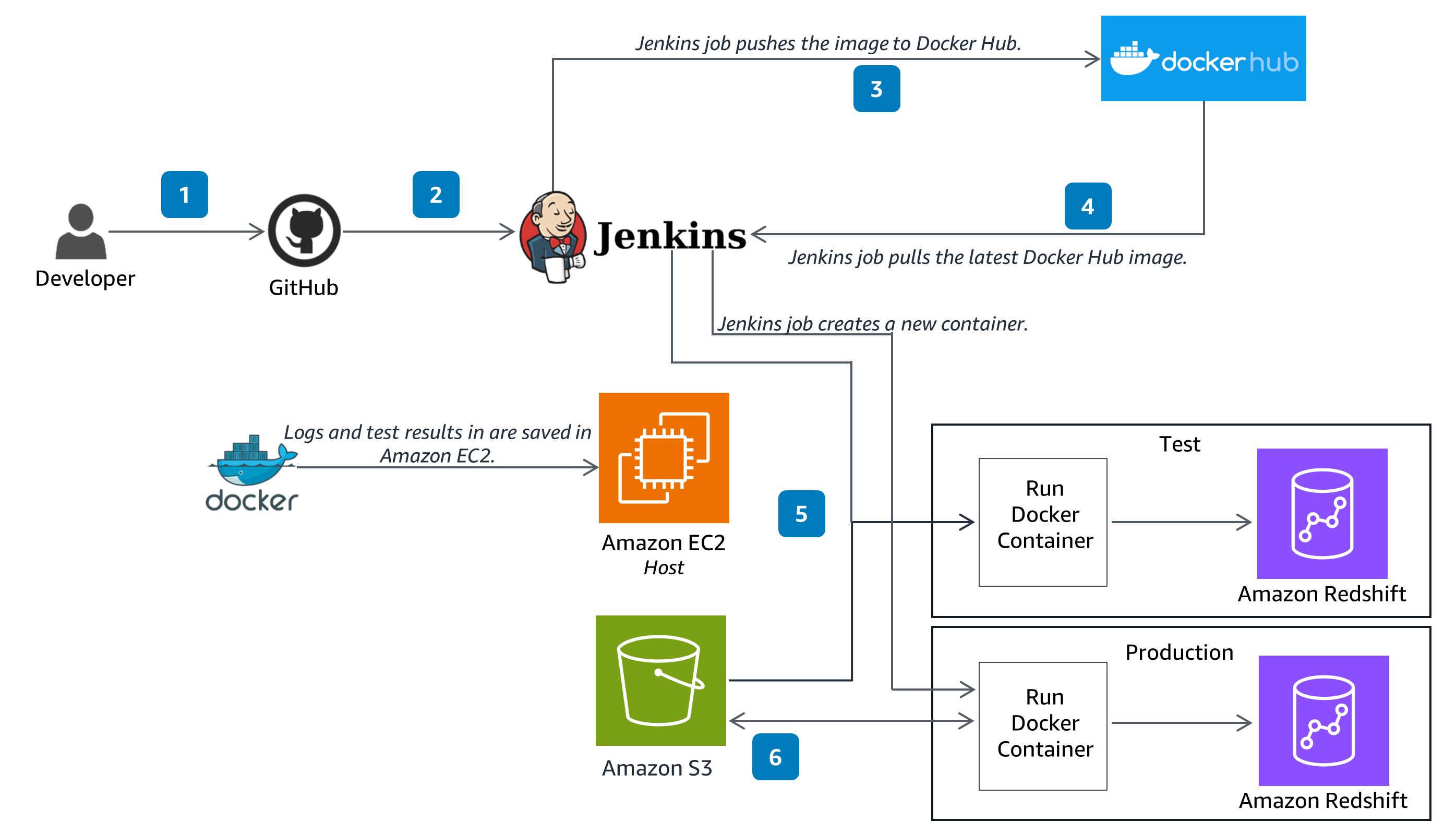

This architecture diagram shows DevOps best practices for building and running open source software on Amazon Redshift.

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

This Guidance helps you evaluate your workloads against Well-Architected best practices. One way it does this is through configuration as code, which lets teams manage configuration files from a central location. Another is through feedback loops: feedback submitted through GitHub is prioritized and incorporated as new versions of this Guidance are released.

For secure authentication and authorization, access to Amazon EC2 is protected with private keys. AWS Identity and Access Management (IAM) grants AWS services least-privilege access, and non-root accounts run scripts and workloads on the Amazon EC2 instances and in containers.
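As an illustration of least-privilege access, the Python sketch below builds an IAM policy document that lets a principal obtain temporary database credentials for one Amazon Redshift database user and one database, and nothing else. The Region, account, cluster, user, and database values are hypothetical, and the Guidance's own IAM policies may grant different actions; `redshift:GetClusterCredentials` and the `dbuser`/`dbname` resource ARN formats are standard IAM elements for Amazon Redshift.

```python
import json


def redshift_least_privilege_policy(region: str, account_id: str,
                                    cluster: str, db_user: str,
                                    db_name: str) -> dict:
    """Build an IAM policy that only allows temporary credentials for
    one database user on one database of one cluster."""
    arn_prefix = f"arn:aws:redshift:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allow fetching temporary database credentials, scoped to a
                # single database user and a single database.
                "Effect": "Allow",
                "Action": "redshift:GetClusterCredentials",
                "Resource": [
                    f"{arn_prefix}:dbuser:{cluster}/{db_user}",
                    f"{arn_prefix}:dbname:{cluster}/{db_name}",
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    policy = redshift_least_privilege_policy(
        "us-east-1", "123456789012", "devops-cluster", "ci_runner", "dev")
    print(json.dumps(policy, indent=2))
```

Keeping the policy to a single action and two resource ARNs means a leaked credential for this principal cannot be used against other clusters or database users.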

To provide a reliable application-level architecture, this Guidance stores the Jenkins container configuration in a directory on the Amazon EC2 instance. Keeping the configuration outside the container makes Jenkins stateless, so it can be restarted whenever needed. The compute used in this Guidance is also stateless, so after a failure the Guidance can be restarted without configuration changes.
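A minimal sketch of the stateless-container idea, assuming Jenkins runs from the official `jenkins/jenkins:lts` Docker image: the function builds a `docker run` command that bind-mounts a host directory over the image's `JENKINS_HOME` (`/var/jenkins_home`). The host path and container name are hypothetical, and the Guidance's actual launch command may differ.

```python
import shlex


def jenkins_run_command(host_config_dir: str,
                        image: str = "jenkins/jenkins:lts",
                        name: str = "jenkins") -> list[str]:
    """Return a `docker run` argument list that keeps Jenkins state
    (JENKINS_HOME) in a directory on the EC2 host, not in the container."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--restart", "unless-stopped",
        # Web UI port and inbound agent port used by the official image.
        "--publish", "8080:8080",
        "--publish", "50000:50000",
        # Bind-mount the host directory over JENKINS_HOME so the container
        # can be removed and recreated without losing configuration.
        "--volume", f"{host_config_dir}:/var/jenkins_home",
        image,
    ]


if __name__ == "__main__":
    # /home/ec2-user/jenkins_home is a hypothetical path.
    print(shlex.join(jenkins_run_command("/home/ec2-user/jenkins_home")))
```

Because all Jenkins state lives in the host directory, the container can be deleted and recreated with the same command. The host directory must be writable by the user the container runs as.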

Logs and metrics are captured directly on the Amazon EC2 instance, and Amazon CloudWatch sends notifications when thresholds are crossed or significant events occur. This Guidance also supports recovery from disaster events by rerunning the DDL and DML scripts stored as saved configurations (YAML-formatted templates that define an environment's version, tier, configuration option settings, and tags).
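The recovery step can be pictured as replaying versioned DDL and DML scripts in order. The sketch below assumes a hypothetical `V<number>__<description>.sql` naming convention and takes an `execute` callback so it runs without a database; in practice that callback would send each script to the Amazon Redshift cluster. It is an illustration, not the Guidance's own recovery code.

```python
import re
import tempfile
from pathlib import Path
from typing import Callable

# Hypothetical naming convention: V<number>__<description>.sql
SCRIPT_PATTERN = re.compile(r"^V(\d+)__.+\.sql$")


def ordered_scripts(script_dir: Path) -> list[Path]:
    """Return versioned SQL scripts sorted numerically by version."""
    versioned = []
    for path in script_dir.iterdir():
        match = SCRIPT_PATTERN.match(path.name)
        if match:
            versioned.append((int(match.group(1)), path))
    # Sort numerically so V10 runs after V9, not after V1.
    return [path for _, path in sorted(versioned)]


def replay(script_dir: Path, execute: Callable[[str], None]) -> list[str]:
    """Run each script's SQL through `execute` in version order and
    return the names of the scripts that were applied."""
    applied = []
    for path in ordered_scripts(script_dir):
        execute(path.read_text(encoding="utf-8"))
        applied.append(path.name)
    return applied


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "V10__load_sales.sql").write_text("INSERT INTO sales VALUES (1);")
        (root / "V2__create_sales.sql").write_text("CREATE TABLE sales (id INT);")
        (root / "notes.txt").write_text("ignored")
        # Stand-in executor; a real one would run the SQL on Redshift.
        print(replay(root, execute=lambda sql: None))
        # prints ['V2__create_sales.sql', 'V10__load_sales.sql']
```

Sorting on the parsed integer rather than the file name avoids the classic lexical-ordering bug where `V10` would run before `V2`.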

When configuration or code changes are needed, this Guidance uses GitHub (for the open source architecture) and CodeCommit (for the AWS services architecture) to manage and deploy the changes.

The services in this Guidance provide the functional capabilities needed to implement its best practices. To experiment with this Guidance and optimize it for your data, you can deploy it with an AWS CloudFormation template and experiment with:

- Creating different Amazon Redshift clusters and running SQL scripts against them, for example by changing the INI files.
- Customizing the Docker build (for example, to add libraries and dependencies) with a Dockerfile, a text document that contains all the commands a user could call on the command line to assemble an image.
- Adding configuration for the Jenkins job build with a Jenkinsfile, a text file that contains the definition of a Jenkins Pipeline and is checked into source control.
- Changing build process details with buildspec.yml, a collection of build commands and related settings in YAML format that AWS CodeBuild uses.
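The INI files mentioned above hold cluster connection settings. The Guidance's actual file layout is not shown here, so the sketch below assumes a hypothetical layout with one section per Amazon Redshift cluster and uses Python's standard `configparser` to load it. Switching the target cluster then becomes a configuration change rather than a code change.

```python
import configparser

# Hypothetical INI layout: one section per Amazon Redshift cluster.
EXAMPLE_INI = """
[dev]
host = dev-cluster.example.us-east-1.redshift.amazonaws.com
port = 5439
database = dev
user = ci_runner

[test]
host = test-cluster.example.us-east-1.redshift.amazonaws.com
database = analytics
user = ci_runner
"""


def load_cluster_settings(ini_text: str, cluster: str) -> dict:
    """Return connection settings for one cluster section."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    section = parser[cluster]  # raises KeyError for an unknown cluster
    return {
        "host": section["host"],
        # 5439 is the default Amazon Redshift port; used if port is omitted.
        "port": section.getint("port", fallback=5439),
        "database": section["database"],
        "user": section["user"],
    }


if __name__ == "__main__":
    print(load_cluster_settings(EXAMPLE_INI, "test"))
```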

We recommend deploying the Amazon EC2 instance in the same AWS Region as the Amazon Redshift cluster to reduce connection latency when running SQL statements.
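A small pre-flight check for this recommendation, sketched under the assumption that the Amazon Redshift endpoint follows the standard `<name>.<id>.<region>.redshift.amazonaws.com` form (or uses the `redshift-serverless` label for Amazon Redshift Serverless). How the EC2 instance learns its own Region (for example, from instance metadata or an environment variable) is left to the caller.

```python
# Service labels that appear immediately after the Region in the hostname.
SERVICE_LABELS = {"redshift", "redshift-serverless"}


def region_from_endpoint(endpoint: str) -> str:
    """Extract the AWS Region from an Amazon Redshift endpoint hostname."""
    host = endpoint.split(":", 1)[0]  # drop an optional ":port" suffix
    labels = host.lower().split(".")
    # Start at 1 so a cluster literally named "redshift" is not mistaken
    # for the service label.
    for i in range(1, len(labels)):
        if labels[i] in SERVICE_LABELS:
            return labels[i - 1]
    raise ValueError(f"not a recognizable Redshift endpoint: {endpoint}")


def same_region(ec2_region: str, redshift_endpoint: str) -> bool:
    """True if the Redshift endpoint is in the given EC2 Region."""
    return region_from_endpoint(redshift_endpoint) == ec2_region


if __name__ == "__main__":
    endpoint = "examplecluster.abc123xyz789.us-east-1.redshift.amazonaws.com:5439"
    print(same_region("us-east-1", endpoint))
```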

Except for the Amazon EC2 instances, all services in this Guidance are serverless. If you deploy with CloudFormation, the Guidance is created in its own virtual private cloud (VPC), which makes the costs associated with the Guidance easy to identify. Because this Guidance is deployed in a self-contained VPC, there are no data transfer charges. To reduce costs, we recommend stopping any instances when they are not in use.
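To act on the recommendation to stop idle instances, the sketch below stops running EC2 instances that carry a given tag. It expects a client with the boto3 EC2 client's `describe_instances` and `stop_instances` methods, so it can be exercised with a stub. The tag key and value are hypothetical, and result pagination is ignored for brevity.

```python
def stop_idle_guidance_instances(ec2_client, tag_key: str,
                                 tag_value: str) -> list[str]:
    """Stop running EC2 instances that carry the given tag and return
    their instance IDs. `ec2_client` should behave like
    boto3.client("ec2"); pagination is not handled."""
    response = ec2_client.describe_instances(
        Filters=[
            {"Name": "instance-state-name", "Values": ["running"]},
            {"Name": f"tag:{tag_key}", "Values": [tag_value]},
        ]
    )
    instance_ids = [
        instance["InstanceId"]
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
    ]
    if instance_ids:
        # Stopping (unlike terminating) keeps EBS-backed instances and
        # their volumes, so they can be started again later.
        ec2_client.stop_instances(InstanceIds=instance_ids)
    return instance_ids
```

With real credentials this would be called as `stop_idle_guidance_instances(boto3.client("ec2"), "Guidance", "redshift-devops")`, for example from a scheduled job outside working hours.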

Except for Amazon EC2, the components in this Guidance are serverless and help reduce your carbon footprint by scaling continually to match your workload, so that only the minimum required resources are used.
