Combine AWS Batch & Step Functions to create a video processing workflow

Cloud-based video hosting services regularly perform video transcoding and feature extraction on videos that are uploaded to the cloud. Video processing pipelines often require an elaborate workflow that manages multiple parallel compute jobs and handles exceptions.

In this tutorial, you will create an AWS Step Functions workflow that simulates processing videos that have been uploaded to the cloud. The workflow processes the videos by submitting jobs to AWS Batch job queues that have different priorities.

You will use the Step Functions workflow to define the dependencies between jobs and the order in which they are submitted to AWS Batch. Within AWS Batch, you define the compute environment, the job queues, and the queue priorities. The Step Functions workflow also specifies how exceptions are handled.

Note: The workflow does not perform any actual video processing. This tutorial is designed to stay within the AWS Free Tier while you experiment with AWS Step Functions and AWS Batch. Follow the tutorial's recommendations when configuring the compute environment, and delete all resources when you finish to avoid incurring charges.

| About this Tutorial | |
|---|---|
| Time | 10 minutes |
| Cost | Free |
| Use Case | Serverless |
| Products | AWS Batch, AWS Step Functions |
| Level | 100 |
| Last Updated | October 10, 2019 |

Step 1: Setup

1.1 — Open a browser and navigate to the AWS Batch console. If you already have an AWS account, log in to the console. Otherwise, create a new AWS account to get started.


Step 2: Set up an AWS Batch compute environment

Your next step is to set up an AWS Batch compute environment. The compute environment specifies the minimum, desired, and maximum number of vCPUs available to run your batch jobs.

2.1 — Click compute environments in the left-hand navigation pane. You will leave most settings at their defaults and change only a few items.

2.4 — For your compute resources, set the minimum vCPUs to 0, the desired vCPUs to 2 and the maximum vCPUs to 4.

Limiting the vCPU resources keeps costs down for the tutorial. Setting the minimum vCPUs to 0 ensures that instances are not left running when no jobs are active in the queue. The trade-off is a cold start: when jobs are submitted and no vCPUs are active, there is a delay while instances are launched and booted.
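If you prefer to script this step, the vCPU settings correspond to the computeResources block of the AWS Batch CreateComputeEnvironment API. The sketch below is a minimal example that assumes an On-Demand EC2 managed compute environment named StepsBatchTutorial_Compute (the name used in the clean-up steps). It builds only the vCPU portion of the request and checks that the three bounds are ordered; a real request also needs subnets, security groups, an instance role, and instance types, which the console fills in for you.

```python
import json


def compute_resources(min_vcpus: int = 0, desired_vcpus: int = 2, max_vcpus: int = 4) -> dict:
    """Build the vCPU part of a CreateComputeEnvironment computeResources block.

    AWS Batch requires minvCpus <= desiredvCpus <= maxvCpus. With minvCpus = 0,
    Batch can scale the environment down to zero instances when no jobs are queued.
    """
    if not 0 <= min_vcpus <= desired_vcpus <= max_vcpus:
        raise ValueError("expected 0 <= minvCpus <= desiredvCpus <= maxvCpus")
    return {
        "type": "EC2",  # assumption: On-Demand EC2 instances
        "minvCpus": min_vcpus,
        "desiredvCpus": desired_vcpus,
        "maxvCpus": max_vcpus,
        # Omitted here: subnets, securityGroupIds, instanceRole, instanceTypes.
    }


if __name__ == "__main__":
    request = {
        "computeEnvironmentName": "StepsBatchTutorial_Compute",
        "type": "MANAGED",
        "computeResources": compute_resources(),
    }
    print(json.dumps(request, indent=2))
```

The ordering check mirrors the constraint the console enforces, so a typo such as a desired value above the maximum is caught before anything is sent to AWS.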

Step 3: Set up AWS Batch job queues

You will now create two AWS Batch job queues with different priorities.
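Each queue corresponds to one CreateJobQueue request. The sketch below uses the queue names that the state machine in Step 5 references. The priority values 10 and 1 are illustrative assumptions: AWS Batch only compares priorities between queues that share a compute environment, and the queue with the higher integer is scheduled first.

```python
import json

# Compute environment created in Step 2 (name or ARN is accepted by the CLI).
COMPUTE_ENV_NAME = "StepsBatchTutorial_Compute"

# Assumed priority values; only their relative order matters.
QUEUES = {
    "StepsBatchTutorial_HighPriorityQueue": 10,
    "StepsBatchTutorial_LowPriorityQueue": 1,
}


def job_queue_request(name: str, priority: int) -> dict:
    """Build a CreateJobQueue request body that attaches the queue to one compute environment."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": COMPUTE_ENV_NAME},
        ],
    }


def scheduling_order(queues: dict) -> list:
    """Return queue names from highest to lowest priority (higher integer wins)."""
    return sorted(queues, key=queues.get, reverse=True)


if __name__ == "__main__":
    for queue_name, queue_priority in QUEUES.items():
        print(json.dumps(job_queue_request(queue_name, queue_priority), indent=2))
    print(scheduling_order(QUEUES))
```

Both queues point at the same compute environment, so when vCPUs are scarce, jobs waiting in the high-priority queue are placed before jobs in the low-priority queue.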

Step 4: Set up job definitions

You will now create a series of placeholder job definitions that the workflow will submit as AWS Batch jobs.

4.2 — Click create.

Note: For the purpose of this tutorial, we will use an Amazon Linux container to illustrate the workflow steps. The container does not perform any real work. When you create a batch environment for your own use case, you will need to provide a container that does the processing you require. For more details, refer to creating a job definition in the AWS Batch User Guide.

4.3 — Enter the job definition name as StepsBatchTutorial_TranscodeVideo.

Click create job definition.


4.6 — Repeat the preceding steps to create two more job definitions.

For the second job definition, set the name to StepsBatchTutorial_ExtractFeatures (the name the state machine in Step 5 references) and set the command to echo performing video feature extraction.

For the third job definition, set the name to StepsBatchTutorial_ExtractMetadata and set the command to echo extracting metadata from video.
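For reference, each job definition above maps to a RegisterJobDefinition request. In this minimal sketch the names match the job-definition ARNs used by the state machine in Step 5. Using the public amazonlinux image is an assumption standing in for "an Amazon Linux container". The TranscodeVideo command is a hypothetical placeholder because this step does not specify one, and the vCPU and memory values are illustrative.

```python
import json

# Assumption: the public "amazonlinux" image stands in for "an Amazon Linux container".
IMAGE = "amazonlinux"

# Names match the job-definition ARNs in the Step 5 state machine.
# The ExtractFeatures and ExtractMetadata commands come from this step;
# the TranscodeVideo command is a hypothetical placeholder.
JOB_COMMANDS = {
    "StepsBatchTutorial_TranscodeVideo": ["echo", "transcoding video"],
    "StepsBatchTutorial_ExtractFeatures": ["echo", "performing video feature extraction"],
    "StepsBatchTutorial_ExtractMetadata": ["echo", "extracting metadata from video"],
}


def job_definition_request(name: str, command: list, vcpus: int = 1, memory_mib: int = 512) -> dict:
    """Build a RegisterJobDefinition request for a single-container job."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": IMAGE,
            "command": command,
            # Illustrative sizes; an echo command needs almost nothing.
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
        },
    }


if __name__ == "__main__":
    for job_name, job_command in JOB_COMMANDS.items():
        print(json.dumps(job_definition_request(job_name, job_command), indent=2))
```

The first time a job definition name is registered, AWS Batch assigns it revision 1, which is why the ARNs in the state machine end in :1.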

Step 5: Create a workflow with a state machine

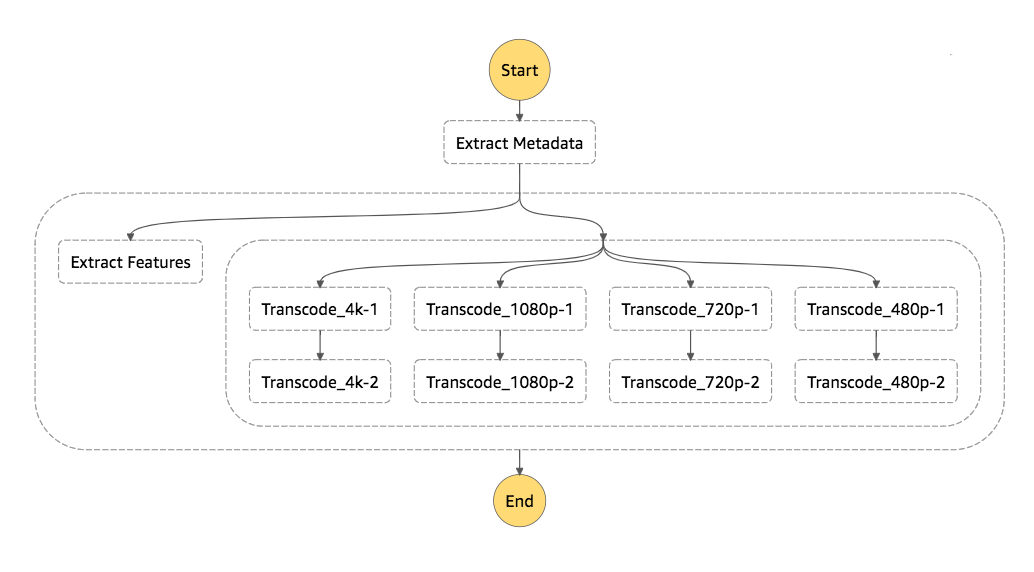

You will now create a state machine that controls your workflow and calls AWS Batch. A workflow describes a process as a series of discrete tasks that can be run repeatedly. You will design your workflow in AWS Step Functions, which uses state machines to define workflows. State machines are written in Amazon States Language (ASL).

5.1 — Open the AWS Step Functions console to create a state machine. Select author with code snippets. In the name text box, name your state machine StepsBatchTutorial_VideoWorkflow.

5.2 — Replace the contents of the state machine definition window with the Amazon States Language (ASL) state machine definition below. Amazon States Language is a JSON-based, structured language used to define your state machine.

This state machine represents a video processing workflow that runs on AWS Batch. Most of the steps are Task states that run AWS Batch jobs. Task states can also call other AWS services, such as AWS Lambda for serverless compute or Amazon SNS to send messages that fan out to other services.

```json
{
  "StartAt": "Extract Metadata",
  "States": {
    "Extract Metadata": {
      "Type": "Task",
      "Resource": "arn:aws:states:::batch:submitJob.sync",
      "Parameters": {
        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_ExtractMetadata:1",
        "JobName": "SplitVideo",
        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_HighPriorityQueue"
      },
      "Next": "Process Video"
    },
    "Process Video": {
      "Type": "Parallel",
      "End": true,
      "Branches": [
        {
          "StartAt": "Extract Features",
          "States": {
            "Extract Features": {
              "Type": "Task",
              "Resource": "arn:aws:states:::batch:submitJob.sync",
              "Parameters": {
                "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_ExtractFeatures:1",
                "JobName": "ExtractFeatures",
                "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_LowPriorityQueue"
              },
              "End": true
            }
          }
        },
        {
          "StartAt": "Transcode Video",
          "States": {
            "Transcode Video": {
              "Type": "Parallel",
              "End": true,
              "Branches": [
                {
                  "StartAt": "Transcode_4k-1",
                  "States": {
                    "Transcode_4k-1": {
                      "Type": "Task",
                      "Resource": "arn:aws:states:::batch:submitJob.sync",
                      "Parameters": {
                        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo:1",
                        "JobName": "Transcode_4k-1",
                        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_HighPriorityQueue"
                      },
                      "Next": "Transcode_4k-2"
                    },
                    "Transcode_4k-2": {
                      "Type": "Task",
                      "Resource": "arn:aws:states:::batch:submitJob.sync",
                      "Parameters": {
                        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo:1",
                        "JobName": "Transcode_4k-2",
                        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_LowPriorityQueue"
                      },
                      "End": true
                    }
                  }
                },
                {
                  "StartAt": "Transcode_1080p-1",
                  "States": {
                    "Transcode_1080p-1": {
                      "Type": "Task",
                      "Resource": "arn:aws:states:::batch:submitJob.sync",
                      "Parameters": {
                        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo:1",
                        "JobName": "Transcode_1080p-1",
                        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_HighPriorityQueue"
                      },
                      "Next": "Transcode_1080p-2"
                    },
                    "Transcode_1080p-2": {
                      "Type": "Task",
                      "Resource": "arn:aws:states:::batch:submitJob.sync",
                      "Parameters": {
                        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo:1",
                        "JobName": "Transcode_1080p-2",
                        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_LowPriorityQueue"
                      },
                      "End": true
                    }
                  }
                },
                {
                  "StartAt": "Transcode_720p-1",
                  "States": {
                    "Transcode_720p-1": {
                      "Type": "Task",
                      "Resource": "arn:aws:states:::batch:submitJob.sync",
                      "Parameters": {
                        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo:1",
                        "JobName": "Transcode_720p-1",
                        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_HighPriorityQueue"
                      },
                      "Next": "Transcode_720p-2"
                    },
                    "Transcode_720p-2": {
                      "Type": "Task",
                      "Resource": "arn:aws:states:::batch:submitJob.sync",
                      "Parameters": {
                        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo:1",
                        "JobName": "Transcode_720p-2",
                        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_LowPriorityQueue"
                      },
                      "End": true
                    }
                  }
                },
                {
                  "StartAt": "Transcode_480p-1",
                  "States": {
                    "Transcode_480p-1": {
                      "Type": "Task",
                      "Resource": "arn:aws:states:::batch:submitJob.sync",
                      "Parameters": {
                        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo:1",
                        "JobName": "Transcode_480p-1",
                        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_HighPriorityQueue"
                      },
                      "Next": "Transcode_480p-2"
                    },
                    "Transcode_480p-2": {
                      "Type": "Task",
                      "Resource": "arn:aws:states:::batch:submitJob.sync",
                      "Parameters": {
                        "JobDefinition": "arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo:1",
                        "JobName": "Transcode_480p-2",
                        "JobQueue": "arn:aws:batch:REGION:112233445566:job-queue/StepsBatchTutorial_LowPriorityQueue"
                      },
                      "End": true
                    }
                  }
                }
              ]
            }
          }
        }
      ]
    }
  }
}
```

5.3 — Click the refresh button to have Step Functions render the ASL state machine definition as a visual workflow. Review the visual workflow to verify that the process is described correctly.

This example uses the Parallel and Task states. ASL defines several other state types, including Pass, Choice, Wait, Succeed, Fail, and Map.
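The definition above is repetitive: every Batch step is the same Task shape with a different job definition, job name, and queue. As a sketch (not part of the original tutorial), the same structure can be generated from two small helper functions, which makes the nesting explicit: a top-level Parallel state with two branches, one of which is itself a Parallel state with four two-step branches. The helper names are hypothetical, and the REGION and account placeholders are the same dummies used above.

```python
import json

REGION = "REGION"          # placeholder, as in the definition above
ACCOUNT = "112233445566"   # placeholder account ID
HIGH = "StepsBatchTutorial_HighPriorityQueue"
LOW = "StepsBatchTutorial_LowPriorityQueue"
TRANSCODE = "StepsBatchTutorial_TranscodeVideo"


def batch_task(job_def: str, job_name: str, queue: str, next_state: str = "") -> dict:
    """A Task state that submits an AWS Batch job and waits for it (.sync)."""
    state = {
        "Type": "Task",
        "Resource": "arn:aws:states:::batch:submitJob.sync",
        "Parameters": {
            "JobDefinition": f"arn:aws:batch:{REGION}:{ACCOUNT}:job-definition/{job_def}:1",
            "JobName": job_name,
            "JobQueue": f"arn:aws:batch:{REGION}:{ACCOUNT}:job-queue/{queue}",
        },
    }
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


def transcode_branch(res: str) -> dict:
    """Two sequential transcode jobs: high-priority queue first, then low-priority."""
    first, second = f"Transcode_{res}-1", f"Transcode_{res}-2"
    return {
        "StartAt": first,
        "States": {
            first: batch_task(TRANSCODE, first, HIGH, next_state=second),
            second: batch_task(TRANSCODE, second, LOW),
        },
    }


def build_definition() -> dict:
    transcode = {"Type": "Parallel", "End": True,
                 "Branches": [transcode_branch(r) for r in ("4k", "1080p", "720p", "480p")]}
    features = batch_task("StepsBatchTutorial_ExtractFeatures", "ExtractFeatures", LOW)
    return {
        "StartAt": "Extract Metadata",
        "States": {
            "Extract Metadata": batch_task("StepsBatchTutorial_ExtractMetadata", "SplitVideo",
                                           HIGH, next_state="Process Video"),
            "Process Video": {
                "Type": "Parallel",
                "End": True,
                "Branches": [
                    {"StartAt": "Extract Features", "States": {"Extract Features": features}},
                    {"StartAt": "Transcode Video", "States": {"Transcode Video": transcode}},
                ],
            },
        },
    }


def count_task_states(states: dict) -> int:
    """Count Task states, descending recursively into Parallel branches."""
    total = 0
    for state in states.values():
        if state["Type"] == "Task":
            total += 1
        elif state["Type"] == "Parallel":
            total += sum(count_task_states(b["States"]) for b in state["Branches"])
    return total


if __name__ == "__main__":
    definition = build_definition()
    print(json.dumps(definition, indent=2))
    print("Task states:", count_task_states(definition["States"]))
```

Counting the states this way also confirms the arithmetic behind the next step: 10 Task states, each with two Batch ARNs, gives 20 account references to replace.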

5.4 — Update the ARNs in each Batch Task state to match your region and account. Each Batch Task state has three parameters: JobDefinition, the job definition to run; JobName, the name assigned to the submitted job; and JobQueue, the queue the job is submitted to.

For each Batch Task, replace:

The dummy REGION with the region you are working in, such as us-east-1.

The dummy account number 112233445566 with your AWS account number.

It is faster to do a search and replace in a text editor or IDE such as VS Code. Make sure that all 20 account references in the ARNs are replaced.
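If you would rather script the replacement, the sketch below rewrites only the "arn:aws:batch:REGION:112233445566:" prefix, so the Step Functions resource ARN (which has no region or account) is left untouched. It also rejects upper-case region codes and account IDs that are not 12 digits. The script name localize.py is hypothetical.

```python
import re
import sys
from typing import Tuple

PLACEHOLDER_PREFIX = "arn:aws:batch:REGION:112233445566:"


def localize_definition(definition: str, region: str, account_id: str) -> Tuple[str, int]:
    """Replace the dummy region and account ID in every AWS Batch ARN.

    Returns the updated definition and the number of ARNs that were rewritten.
    """
    # Region codes are lowercase, e.g. us-east-1 (not US-EAST-1).
    if not re.fullmatch(r"[a-z]{2}(-[a-z]+)+-\d+", region):
        raise ValueError(f"unexpected region code: {region!r}")
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("an AWS account ID is 12 digits")
    count = definition.count(PLACEHOLDER_PREFIX)
    localized = definition.replace(PLACEHOLDER_PREFIX, f"arn:aws:batch:{region}:{account_id}:")
    return localized, count


def main() -> None:
    # Usage: python localize.py us-east-1 123456789012 < definition.json > localized.json
    region, account_id = sys.argv[1], sys.argv[2]
    localized, count = localize_definition(sys.stdin.read(), region, account_id)
    sys.stdout.write(localized)
    print(f"rewrote {count} ARNs", file=sys.stderr)


if __name__ == "__main__" and len(sys.argv) == 3:
    main()
```

For the definition in step 5.2 the reported count should be 20; a smaller number means part of the definition was not pasted or was already edited.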

5.6 — Next, you will add an IAM role to your workflow. Select create an IAM role for me and name it StepsBatchTutorial_Role. Step Functions will analyze your workflow and generate an IAM policy that includes resources used by your workflow.

Click create state machine. You should see a green banner indicating your state machine was successfully created.

Step 6: Exercise workflow

Your next step is to exercise the Step Functions workflow that you have built. You will start the execution manually and can optionally pass a JSON document as input. Executions can also be started by a Lambda function or an Amazon CloudWatch Events rule.
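Outside the console, an execution is started with the StartExecution API. One detail that often trips people up is that the input is passed as a JSON-encoded string, not as a nested object. The sketch below builds such a request; the input payload is hypothetical (this tutorial's state machine does not read its input), and the execution name is generated from a random UUID because names must be unique per state machine.

```python
import json
import uuid
from typing import Optional


def start_execution_request(region: str, account_id: str, payload: dict,
                            name: Optional[str] = None) -> dict:
    """Build a StartExecution request for the tutorial's state machine."""
    return {
        "stateMachineArn": f"arn:aws:states:{region}:{account_id}:stateMachine:StepsBatchTutorial_VideoWorkflow",
        # A random UUID keeps execution names unique across repeated runs.
        "name": name or f"run-{uuid.uuid4()}",
        # StartExecution takes the input as a JSON-encoded string.
        "input": json.dumps(payload),
    }


if __name__ == "__main__":
    # Hypothetical input; replace the region and account ID with your own.
    request = start_execution_request("us-east-1", "123456789012",
                                      {"video": "s3://example-bucket/uploads/sample.mp4"})
    print(json.dumps(request, indent=2))
```

Saved to a file such as start.json, the output can be passed to the AWS CLI with aws stepfunctions start-execution --cli-input-json file://start.json.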

6.6 — You can view details on the execution of your Batch jobs. Navigate to the Batch service and select dashboard in the navigation pane. You will see the status of your jobs under Job Queues. Click the refresh button to follow the progression of jobs through your queues.

From the Step Functions console, run your state machine several more times and watch how the jobs move through the queues, switching between the Step Functions and Batch consoles.

To view details of completed batch jobs, click one of the job counts in the succeeded column for a job queue.

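6.11 (Optional) — To see how the workflow behaves when a job fails, edit the state machine and replace the JobDefinition of the Transcode_4k-1 state with the ARN of a job definition that does not exist, such as arn:aws:batch:REGION:112233445566:job-definition/StepsBatchTutorial_TranscodeVideo_DOES_NOT_EXIST:1 (using your own region and account number).

Re-execute the state machine. When one branch of a Parallel state fails, the whole Parallel state fails and Step Functions stops the other branches, so the batch jobs they submitted are cancelled and removed from the queues.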

Next, add failure handling to the state machine. Use the Retry field of a Task state to retry a failed job, and use a Catch field to handle errors that persist, for example by transitioning to a fallback state. For more information, refer to Amazon States Language error handling.
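The sketch below shows one way to add these fields, assuming you attach them to the Process Video Parallel state (Parallel states accept Retry and Catch as well as Task states). The fallback state name Handle Failure and its Fail state contents are hypothetical. A Catch can only transition to a state in the same States map, so if you attach it to a Task inside a branch, the fallback must live in that branch. Retries will not fix a job definition that does not exist; in that case the Catch is what routes the execution to the fallback.

```python
import copy
import json
from typing import List


def with_error_handling(state: dict, fallback: str, max_attempts: int = 2,
                        interval_seconds: int = 30, backoff_rate: float = 2.0) -> dict:
    """Return a copy of a Task or Parallel state with Retry and Catch fields added."""
    handled = copy.deepcopy(state)
    handled["Retry"] = [{
        "ErrorEquals": ["States.TaskFailed"],
        "IntervalSeconds": interval_seconds,
        "MaxAttempts": max_attempts,
        "BackoffRate": backoff_rate,
    }]
    handled["Catch"] = [{
        "ErrorEquals": ["States.ALL"],  # anything the retries did not fix
        "ResultPath": "$.error",        # keep the original input, add the error details
        "Next": fallback,
    }]
    return handled


def retry_delays(max_attempts: int, interval_seconds: int, backoff_rate: float) -> List[float]:
    """Seconds waited before each retry: IntervalSeconds * BackoffRate ** n."""
    return [interval_seconds * backoff_rate ** n for n in range(max_attempts)]


# Hypothetical fallback state; a real workflow might notify someone instead.
FALLBACK = {"Type": "Fail", "Error": "VideoProcessingFailed",
            "Cause": "A Batch job failed after retries"}

if __name__ == "__main__":
    # Stand-in for the Process Video state from step 5.2 (branches omitted here).
    process_video = {"Type": "Parallel", "End": True, "Branches": []}
    states = {
        "Process Video": with_error_handling(process_video, "Handle Failure"),
        "Handle Failure": FALLBACK,
    }
    print(json.dumps(states, indent=2))
    print(retry_delays(2, 30, 2.0))
```

With the defaults above, a failing state is retried after 30 and then 60 seconds before the Catch takes over.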

Step 7: Terminate your resources

In this step you will terminate your AWS Step Functions, AWS Batch, and IAM resources. Important: Terminating resources that are not actively being used reduces costs and is a best practice. Not terminating your resources can result in charges.

7.1 — First, delete your state machine. Click services in the AWS Management Console menu, then select Step Functions.

In the state machines window, select the state machine StepsBatchTutorial_VideoWorkflow and click delete. Confirm by selecting delete state machine in the dialog box. The state machine is deleted within a minute or two, once Step Functions has confirmed that any in-progress executions have completed.

7.2 — Next, you’ll deregister your job definitions. Click services in the AWS Management Console menu, then select Batch. Select job definitions in the navigation pane.

For each job definition, click the job definition name, select the radio button next to Revision 1, choose actions, and then choose deregister. Repeat for each of your job definitions.

7.3 — Next, you’ll delete your job queues. Click job queues in the navigation pane.

Select the radio button next to the StepsBatchTutorial_HighPriorityQueue queue. Select disable. Once the queue is disabled, select the queue again and select delete.

Repeat for the StepsBatchTutorial_LowPriorityQueue queue. It may take a minute for the queues to finish deleting.

7.4 — Next, you’ll delete your compute environment. Click compute environments in the navigation pane.

Select the radio button next to the StepsBatchTutorial_Compute compute environment. Select disable. Once the compute environment is disabled, select it again and click delete. You cannot delete the compute environment until the job queues have finished deleting.

7.5 — Lastly, you’ll delete your IAM roles. Click services in the AWS Management Console menu, then select IAM.

Select roles in the navigation pane. Enter StepsBatchTutorial in the search box, select the IAM roles that you created for this tutorial, and then click delete role. Confirm by clicking yes, delete in the dialog box.
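The same clean-up can be expressed as AWS CLI commands in the order used above. The sketch below only prints the commands; it does not run them or wait between them. Queue deletion is asynchronous, so before deleting the compute environment, check that the queues are gone, for example with aws batch describe-job-queues. IAM roles are left out because aws iam delete-role fails while policies are still attached, and the policy names depend on what the console generated. The region and account ID in the usage line are placeholders.

```python
import shlex
from typing import List

STATE_MACHINE = "StepsBatchTutorial_VideoWorkflow"
JOB_DEFINITIONS = [
    "StepsBatchTutorial_TranscodeVideo:1",
    "StepsBatchTutorial_ExtractFeatures:1",
    "StepsBatchTutorial_ExtractMetadata:1",
]
JOB_QUEUES = ["StepsBatchTutorial_HighPriorityQueue", "StepsBatchTutorial_LowPriorityQueue"]
COMPUTE_ENV = "StepsBatchTutorial_Compute"


def cleanup_commands(region: str, account_id: str) -> List[str]:
    """Return AWS CLI commands that delete the tutorial resources in dependency order."""
    sm_arn = f"arn:aws:states:{region}:{account_id}:stateMachine:{STATE_MACHINE}"
    cmds = [["aws", "stepfunctions", "delete-state-machine", "--state-machine-arn", sm_arn]]
    cmds += [["aws", "batch", "deregister-job-definition", "--job-definition", jd]
             for jd in JOB_DEFINITIONS]
    # A job queue must be DISABLED before it can be deleted.
    cmds += [["aws", "batch", "update-job-queue", "--job-queue", q, "--state", "DISABLED"]
             for q in JOB_QUEUES]
    cmds += [["aws", "batch", "delete-job-queue", "--job-queue", q] for q in JOB_QUEUES]
    # The compute environment must be disabled and no longer used by any queue.
    cmds.append(["aws", "batch", "update-compute-environment",
                 "--compute-environment", COMPUTE_ENV, "--state", "DISABLED"])
    cmds.append(["aws", "batch", "delete-compute-environment", "--compute-environment", COMPUTE_ENV])
    return [shlex.join(cmd + ["--region", region]) for cmd in cmds]


if __name__ == "__main__":
    for line in cleanup_commands("us-east-1", "123456789012"):
        print(line)
```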

Congratulations

You have now orchestrated a trial video transcoding workflow using AWS Step Functions and AWS Batch. Step Functions is a great fit when you need to orchestrate complex batch jobs and handle errors and job failures.

Recommended next steps

Learn about error handling

Now that you have learned to create a state machine integrated with Batch, you can progress to the next tutorial where you will learn how to handle errors in serverless applications. Step Functions makes it easy to handle workflow runtime errors.

Read the documentation

Learn about functionality and capabilities of AWS Step Functions by reading the AWS Step Functions developer guide.

Explore AWS Step Functions

If you want to learn more about AWS Step Functions, visit the AWS Step Functions product page to explore documentation, videos, blogs, and more.