Why use Amazon SageMaker with MLflow?

Amazon SageMaker offers a managed MLflow capability for machine learning (ML) and generative AI experimentation. This capability makes it easier for data scientists to use MLflow on SageMaker to train, register, and deploy models. Administrators can quickly set up secure, scalable MLflow environments on AWS. Data scientists and ML developers can then efficiently track their ML experiments and find the right model for a business problem.

Benefits of Amazon SageMaker with MLflow

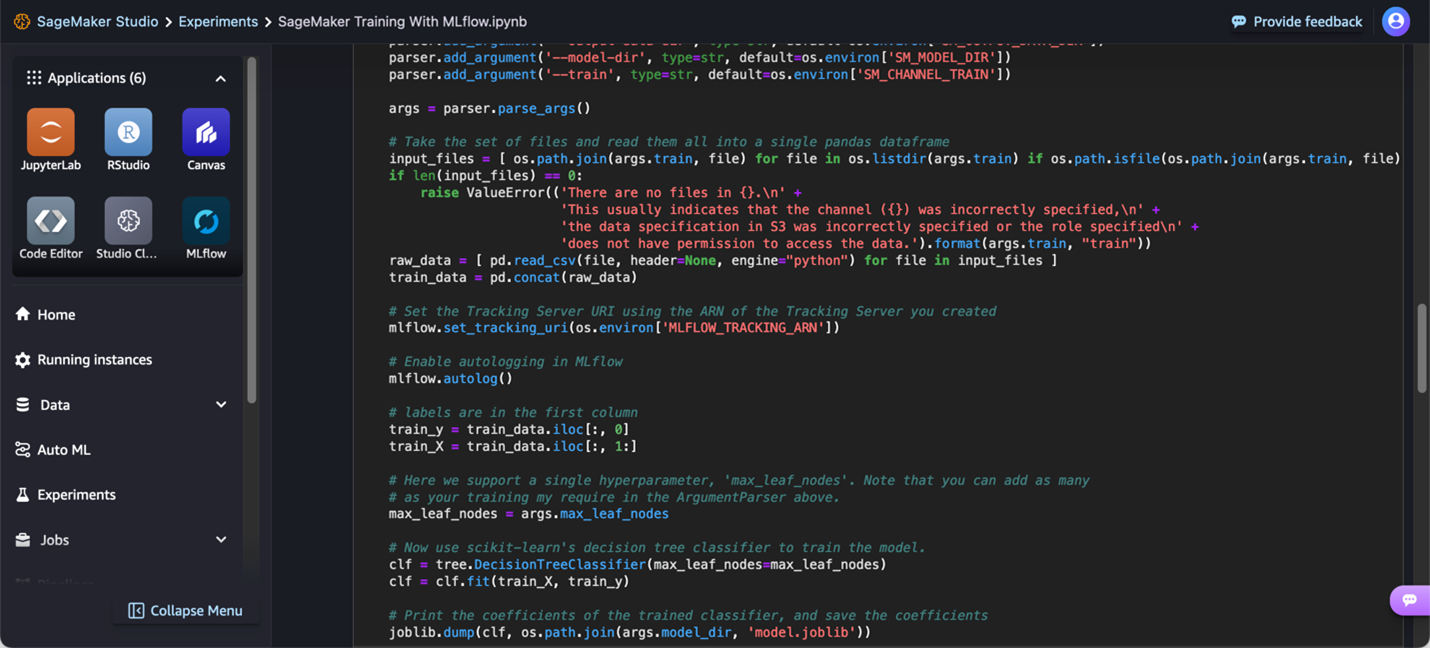

Track experiments from anywhere

ML experiments run in many environments, including local notebooks, IDEs, cloud-based training code, and managed IDEs in Amazon SageMaker Studio. With SageMaker and MLflow, you can do the following:

- Train models in your preferred environment.
- Track your experiments in MLflow.
- Open the MLflow UI for analysis, either directly or through SageMaker Studio.

Collaborate on model experimentation

Effective teamwork is essential to the success of data science projects. In SageMaker Studio you can manage and access MLflow tracking servers and experiments. Team members can share findings and keep experiment results consistent, which makes collaboration easier.

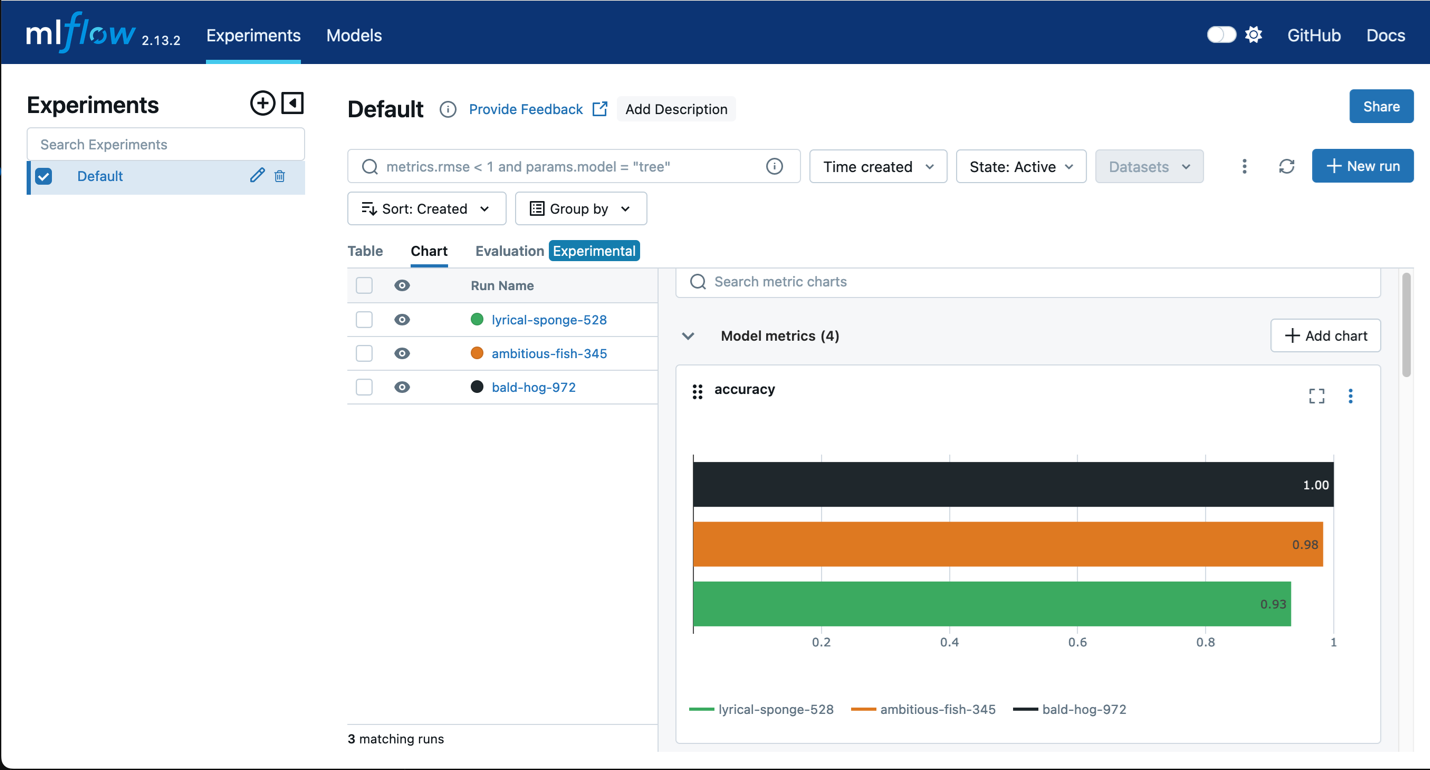

Evaluate experiments

Identifying the best model across multiple iterations requires analyzing and comparing model performance. MLflow provides scatter plots, bar charts, and histograms for comparing training iterations. MLflow also supports model evaluation, including assessing models for bias and fairness.

Centrally manage MLflow models

Multiple teams often use MLflow to manage their experiments, and only a few models become candidates for production. Organizations need an easy way to track all candidate models so they can make informed decisions about which ones move to production. MLflow integrates with SageMaker Model Registry: models registered in MLflow automatically appear in SageMaker Model Registry, together with a SageMaker model card for governance. This lets data scientists and ML engineers use different tools for their respective tasks:

- MLflow for experimentation.
- SageMaker Model Registry for managing the production lifecycle across a wide range of models.

Deploy MLflow models to SageMaker endpoints

Deploying MLflow models to SageMaker endpoints is straightforward and removes the need to build custom containers for model storage. Customers can use SageMaker's optimized inference containers while still logging models as easily as MLflow allows.