Container Monitoring

Container monitoring is the practice of continuously collecting metrics and tracking the health of containerized applications and microservices environments so that they keep operating smoothly and perform well. Containers have become one of the most popular ways to deploy applications because they make it easier for organizations to improve application portability and operational resiliency. In the Cloud Native Computing Foundation (CNCF) 2018 survey, 73% of respondents indicated they were using containers in production to boost agility and speed up the rate of innovation. Container monitoring is a subset of observability, a term often used alongside monitoring that also covers log aggregation and analytics, tracing, notifications, and visualizations. Compared to traditional monitoring solutions, modern monitoring solutions provide robust capabilities to track potential failures, as well as granular insights into container behavior. This page covers why, when, and how you should monitor your containers, and what challenges and solutions to look out for.

Why monitor your containers?

Having insight into metrics, logs, and traces greatly benefits operators of containerized platforms. Understanding what is happening not just at the cluster or host level, but also within the container runtime and application, helps organizations make better informed decisions, such as when to scale instances, tasks, or pods in or out, when to change instance types, and which purchasing options (on-demand, reserved, or spot) to use. DevOps or systems engineers can also improve efficiency and speed up resolution by adding automation, such as using alerts to dynamically trigger scaling operations. For example, by actively monitoring memory utilization, you can identify a threshold and notify operators as resource consumption approaches resource limits, or you can automatically add nodes before available CPU and memory capacity is exhausted. If you set a rule to alert at, say, 70% utilization, you can dynamically add instances to the cluster when the threshold is reached.
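
The 70% rule above can be sketched in a few lines of Python. This is a minimal illustration, not the behavior of any particular autoscaler: the `ClusterState` fields, the `target` utilization of 60%, and the assumption that every node has the same memory capacity are all hypothetical choices made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class ClusterState:
    node_count: int
    memory_used_gib: float
    memory_capacity_gib: float  # total across all nodes


def utilization(state: ClusterState) -> float:
    """Fraction of total cluster memory currently in use."""
    return state.memory_used_gib / state.memory_capacity_gib


def nodes_to_add(state: ClusterState,
                 alert_threshold: float = 0.70,
                 target: float = 0.60) -> int:
    """Return how many equally sized nodes to add.

    Nothing is added while utilization is below alert_threshold.
    Once the threshold is reached, enough nodes are added to bring
    utilization down to `target` or lower (assumed policy).
    """
    if utilization(state) < alert_threshold:
        return 0
    per_node_gib = state.memory_capacity_gib / state.node_count
    required_capacity = state.memory_used_gib / target
    extra_capacity = required_capacity - state.memory_capacity_gib
    return max(1, math.ceil(extra_capacity / per_node_gib))
```

Scaling to a target below the alert threshold, rather than just under it, leaves some headroom so that the alert does not fire again immediately after the new nodes join.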

Challenges with container monitoring

Despite the popularity of containers for modern application deployment, the CNCF 2018 survey found that 34% of respondents cited monitoring as one of the top challenges for adopting containers. Adopting a container monitoring system comes with unique challenges compared to a traditional monitoring solution for virtualized infrastructure. These challenges include:

Containers are ephemeral

They can be quickly provisioned, and just as quickly destroyed. This behavior is one of the primary advantages of using them, but it makes changes hard to track, especially in complex systems with high churn.

Containers share resources

Resources like memory and CPU are shared among containers across one or more hosts. As a result, resource consumption measured on the physical host is a poor indicator of individual container performance or application health.
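
To illustrate why host-level numbers can mislead, the sketch below reports each container's memory use both as a share of its own limit and as a share of the host. The `ContainerSample` type and its field names are hypothetical, and the figures in the usage note are made up for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ContainerSample:
    name: str
    memory_used_mib: float
    memory_limit_mib: float


def report(samples: List[ContainerSample],
           host_memory_mib: float) -> List[Dict[str, object]]:
    """Compare each container's usage to its own limit and to the host."""
    rows = []
    for s in samples:
        rows.append({
            "name": s.name,
            "pct_of_limit": round(100 * s.memory_used_mib / s.memory_limit_mib, 1),
            "pct_of_host": round(100 * s.memory_used_mib / host_memory_mib, 1),
        })
    return rows
```

For a hypothetical container using 460 MiB of a 512 MiB limit on a 16 GiB host, the container is close to its limit while barely registering at the host level, which is the kind of condition a host-only view would miss.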

Insufficient tooling

Traditional monitoring platforms, even those well suited for virtualized environments, may not provide sufficient insight into metrics, logs, and traces needed to monitor and troubleshoot container health and performance.

What to look for in a container monitoring system

A good monitoring system needs to provide an overview of your entire application as well as relevant information on each component. Here’s what to consider when selecting a container monitoring solution:

- Can you see how the entire application is performing, with respect to both the business as well as the technical platform?

- Can you correlate events and logs, in order to spot abnormalities and proactively, or reactively, respond to events and minimize damage?

- Can you drill down to each component and layer to isolate and identify the source of failure?

- How easy is it to add instrumentation to your code?

- How easy is it to configure alarms, alerts, and automation?

- Can you display, analyze, and alarm on any set of acquired metrics and logs from different data sources?

Options for monitoring solutions

To speed up development cycles and build governance into their continuous integration and continuous delivery (CI/CD) pipelines, teams can build reactive tooling and scripts into their standard DevOps orchestration by making use of metrics from their monitoring solution, or they can leverage community projects like Autopilot from Portworx. Autopilot is a monitor-and-react engine. It watches the metrics from the applications that it is monitoring and, when certain conditions are met in those metrics, it reacts and alters the application's runtime environment. Built to monitor stateful applications deployed on Kubernetes, it is a good example of a project designed to make it easier for businesses to make metric-based decisions and strengthen their operational resiliency.
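
The monitor-and-react pattern itself is small enough to sketch. The example below is a generic illustration of the idea and does not reflect Autopilot's actual rule format or API; the `Rule` type, the metric keys, the thresholds, and the action strings are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Metrics = Dict[str, float]


@dataclass
class Rule:
    name: str
    condition: Callable[[Metrics], bool]  # when should the engine react?
    action: Callable[[Metrics], str]      # what should it do?


def evaluate(rules: List[Rule], metrics: Metrics) -> List[str]:
    """Run the action of every rule whose condition holds for these metrics."""
    return [rule.action(metrics) for rule in rules if rule.condition(metrics)]


# Hypothetical rules; a real action would call an orchestrator API
# instead of returning a description of what it would do.
rules = [
    Rule(
        name="expand-volume",
        condition=lambda m: m["volume_used_pct"] > 80.0,
        action=lambda m: "expand volume by 50%",
    ),
    Rule(
        name="scale-out",
        condition=lambda m: m["cpu_pct"] > 70.0,
        action=lambda m: "add one replica",
    ),
]
```

Keeping conditions and actions as separate callables means the same engine loop can be reused as new metrics and reactions are added.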

Businesses that have invested in Chaos Engineering will need to isolate and profile failure domains in order to supplement their risk resilience tooling. Chaos Monkey, originally created by Netflix, randomly terminates virtual machine instances and containers that run inside your production environment. This exposes engineers to failures more frequently and encourages them to build resilient services. If you inject failures into a system without a good set of metrics to track them, however, you are left with nothing but chaos.

The open source time series visualization suite Grafana is a good choice in many scenarios because of its long list of supported data sources, including Prometheus, Graphite, and Amazon CloudWatch, among others. While Grafana’s core competency used to be the visualization and alerting of metrics, the recently added support for log analytics with data sources for InfluxDB and Elasticsearch allows users to correlate metric data with log events, which improves root cause analysis. Besides Grafana, many paid container monitoring solutions exist, but they usually require the use of specific agents or data collection protocols.

For containerized applications running on AWS resources, a similar cross-data-source monitoring experience can be realized with Amazon CloudWatch Container Insights. By automatically collecting and storing metrics and logs from solutions like Fluentd and Docker stats, CloudWatch Container Insights can give you insight into your container clusters and running applications.

How does container monitoring work?

Tying together disparate metrics sources requires a robust infrastructure monitoring platform that can provide a single pane of glass to view data from various sources. It also requires a lot of thought and planning from your application development team to ensure that data can be correlated to allow easy end-to-end debugging.

Conceptually, containerized applications are monitored in a similar way to traditional applications. Monitoring requires data from different layers of the stack for various purposes. You measure and collect metrics both at the container itself and at the infrastructure layer for better resource management, such as scaling activities. You also need application-specific data, application performance management, and tracing information for application troubleshooting.

Robust observability and easy debugging are key to building a microservices architecture. As your system grows, so does the complexity of monitoring the entire application. Traditionally, you would build an independent service that serves a single purpose, and it would communicate with other services to perform a larger task. Using an SDK approach, you can build services that include networking functions, such as service-to-service communication, traffic control, and retry logic, in addition to business logic. However, these tasks become very complex when you have hundreds of services that use different programming languages across different teams. This is where service mesh comes into the picture.

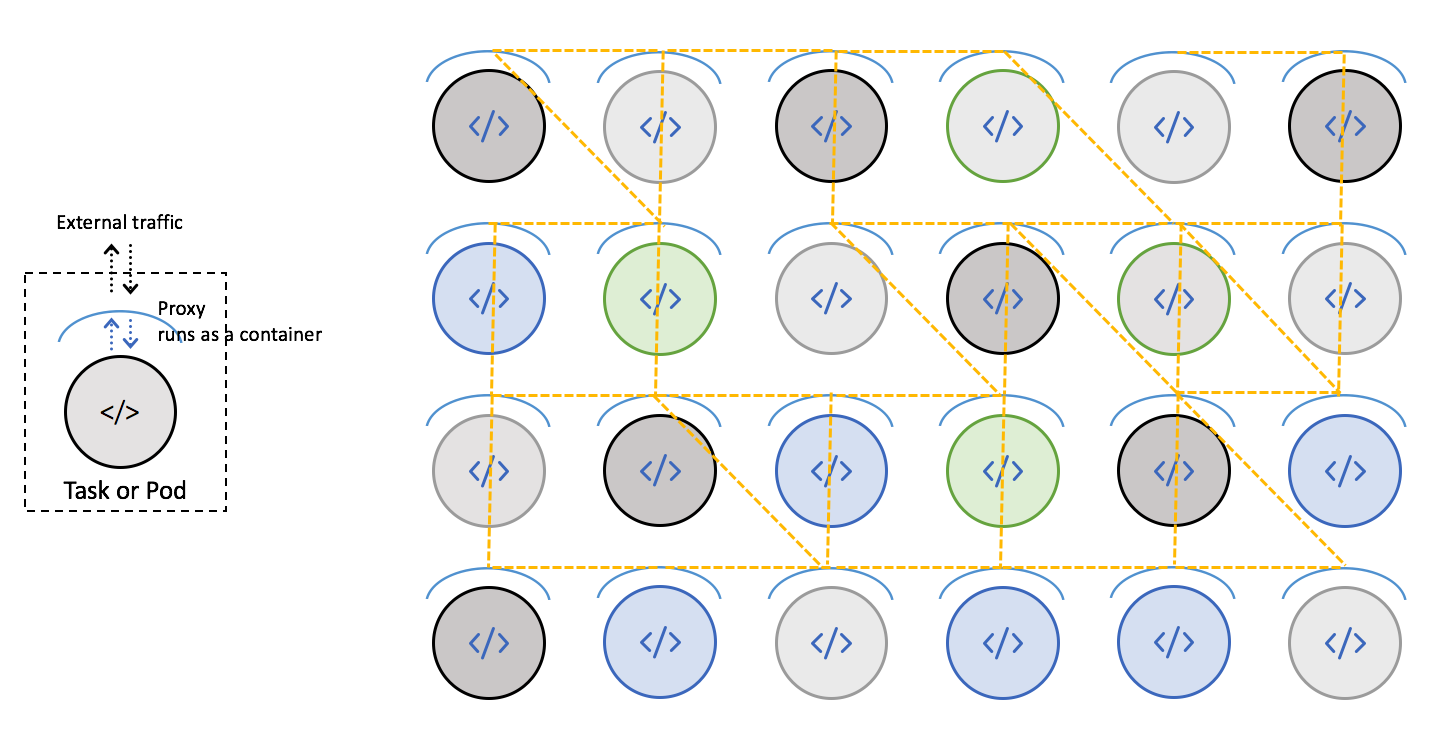

A service mesh manages the communication layer of your microservices system by implementing a software component as a sidecar proxy. This software component performs networking functions, traffic control, tracing and logging. Each service has its own proxy service and all proxy services form the mesh. Each service does not directly communicate with other services but only with its own proxy. The service-to-service communication takes place between proxies. Here’s what the architecture looks like:

With a service mesh, you can build a network of microservices and separate most of the network layer out of the services themselves to streamline management and observability. Ultimately, if problems occur, it enables you to identify the source of the problem quickly. Here are some of the benefits of using a service mesh:

- Ensure consistent communication among services.

- Provide complete visibility of end-to-end communication.

- Control traffic throughout the application such as load balancing, scaling, and traffic routing during deployment.

- Provide insight into metrics, logging, and tracing throughout the stack.

- Move networking and distributed architecture concerns, such as retries, rate limiting, and circuit breaking, out of business logic.

- Stay independent of the service platform, so you can build services in any programming language you wish.
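
The sidecar arrangement described above can be modeled in-process to show where the observability comes from. This is a toy sketch rather than a real proxy: the `SidecarProxy` class, the shared `registry` dictionary that stands in for the network, and the counters it keeps are all hypothetical.

```python
import time
from collections import defaultdict
from typing import Any, Callable, Dict, Optional


class SidecarProxy:
    """Toy proxy: services talk only to their own proxy, proxies talk to each other."""

    def __init__(self, service_name: str, registry: Dict[str, "SidecarProxy"]):
        self.service_name = service_name
        self.registry = registry  # stands in for service discovery and the network
        self.handler: Optional[Callable[[Any], Any]] = None
        self.request_count: Dict[str, int] = defaultdict(int)
        self.error_count: Dict[str, int] = defaultdict(int)
        self.total_latency_s: Dict[str, float] = defaultdict(float)
        registry[service_name] = self

    def attach(self, handler: Callable[[Any], Any]) -> None:
        """Register the local service's business logic."""
        self.handler = handler

    def call(self, target: str, payload: Any) -> Any:
        """Outbound path: local service -> own proxy -> target proxy -> target service."""
        self.request_count[target] += 1
        start = time.perf_counter()
        try:
            return self.registry[target].receive(self.service_name, payload)
        except Exception:
            self.error_count[target] += 1
            raise
        finally:
            self.total_latency_s[target] += time.perf_counter() - start

    def receive(self, source: str, payload: Any) -> Any:
        """Inbound path: hand the request to the local service."""
        if self.handler is None:
            raise RuntimeError(f"no service attached to {self.service_name}")
        return self.handler(payload)
```

Because every call passes through `call`, request counts, error counts, and latency are collected without the business logic in the attached handler knowing anything about them.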

One of the most popular implementations of the sidecar proxy for a service mesh is Envoy. Envoy is a high-performance C++ distributed proxy originally built at Lyft. Envoy runs alongside every service and provides common networking features in a platform-agnostic manner. It is self-contained and has a very small footprint (8 MB). It supports advanced load balancing features including automatic retries, circuit breaking, rate limiting, and so on. As service traffic flows through the mesh, Envoy provides deep observability of L7 (Application Layer) traffic, native support for distributed tracing, and wire-level observability of the system.
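
As an illustration of one of the features named above, here is a generic circuit breaker based on consecutive failures. It shows the general pattern only and is not Envoy's implementation or configuration; the class name, the `failure_threshold` and `reset_timeout_s` parameters, and the injectable `clock` are assumptions made for this sketch.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit open; request rejected")
            # Timeout elapsed: fall through and allow one trial call (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many consecutive failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Rejecting calls while the circuit is open gives a failing upstream service time to recover instead of piling more requests onto it, and the rejections themselves are a useful signal to monitor.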

One last tip

The purpose of monitoring your application is to collect monitoring and operational data in the form of logs, metrics, events, and traces, in order to identify and respond to issues quickly and minimize disruptions. If your monitoring system is working well, you should be able to easily create alarms and perform automated actions when thresholds are reached. In most cases static alarms are sufficient, but applications that exhibit organic growth or cyclical or seasonal behavior (such as requests that peak during the day and taper off at night) require more thought and knowledge to define an effective static threshold. The common challenge is finding the right threshold: either it is too broad and risks letting problems slip through, or it is too tight and produces too many false alerts.

One way to solve this puzzle is with machine learning. Many monitoring tools now incorporate this capability to learn what the normal baseline is and recognize anomalous behavior in the data when it arises. The tool can adapt to metric trends and seasonality to continuously monitor the dynamic nature of system and application behavior, and auto-adjust to situations such as time-of-day utilization peaks. With machine learning, you can identify runtime issues sooner, reducing system and application downtime.
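
The idea of a learned, time-aware baseline can be illustrated with a much simpler statistical stand-in. The sketch below is not the algorithm used by any monitoring product: it keeps a per-hour mean and standard deviation and flags values that fall outside a band around them. The function names and the `band_width` and `min_stdev` parameters are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, Iterable, Tuple

Baseline = Dict[int, Tuple[float, float]]


def build_baseline(history: Iterable[Tuple[int, float]]) -> Baseline:
    """Learn a (mean, standard deviation) pair for each hour of the day.

    `history` is a sequence of (hour_of_day, metric_value) observations.
    """
    by_hour = defaultdict(list)
    for hour, value in history:
        by_hour[hour].append(value)
    return {hour: (mean(values), pstdev(values)) for hour, values in by_hour.items()}


def is_anomalous(baseline: Baseline, hour: int, value: float,
                 band_width: float = 3.0, min_stdev: float = 1.0) -> bool:
    """Flag a value that is more than band_width deviations from that hour's mean.

    min_stdev keeps the band from collapsing to zero when history is flat.
    """
    avg, sd = baseline[hour]
    return abs(value - avg) > band_width * max(sd, min_stdev)
```

With a baseline like this, the same request rate can be normal at the afternoon peak and anomalous at 3 a.m., a distinction that a single static threshold cannot express.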
