What is DynamoDB

Amazon DynamoDB is a fully managed NoSQL database provided by AWS. Developers of high-scale applications choose DynamoDB due to its consistent performance at any scale, and serverless developers like DynamoDB because of its ease of provisioning, compatibility with serverless compute, and pay-per-use billing.

Software is all about trade-offs, and this is particularly true about databases. One database cannot serve all access patterns equally well. Databases make different choices about storage format, indexing options, and scaling strategies based on the use cases they aim to serve.

With the importance of understanding trade-offs in mind, there are three key aspects of DynamoDB to know:

- Aims for consistent performance through horizontal scaling. DynamoDB's key promise is to provide consistent performance regardless of the scale or number of concurrent queries against your database. To do this, it splits data across multiple machines into partitions of 10GB or less. This allows DynamoDB to horizontally scale table infrastructure as data or requests increase.

- Provides a focused API with primary key-based access. To enable consistent performance via horizontal scaling, DynamoDB uses a partition key on every item. This partition key is used to determine the physical partition to which each item belongs. Nearly all API requests require this partition key, allowing DynamoDB to route each request to the proper partition for execution.

- A fully managed database. All DynamoDB tables within an AWS Region use shared infrastructure, including a massive pool of storage instances and a Region-wide request router. This enables DynamoDB to scale up and down on demand to handle spikes in traffic, and it reduces the number of operations to handle on the DynamoDB table.
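The partition-key routing described in the list above can be illustrated with a minimal sketch. It is not DynamoDB's implementation: the real hash function and partition count are internal to the service, so the MD5 hash, the `NUM_PARTITIONS` value, and the function names here are assumptions chosen only to show the idea that the same partition key always lands on the same partition.

```python
import hashlib

# Hypothetical value: DynamoDB manages the real partition count itself.
NUM_PARTITIONS = 4


def partition_for(partition_key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a partition key to a partition number using a stable hash.

    MD5 is a stand-in; the hash DynamoDB uses is not public.
    """
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def route(items: list[dict], key_name: str) -> dict[int, list[dict]]:
    """Group items by the partition that their partition key hashes to."""
    partitions: dict[int, list[dict]] = {}
    for item in items:
        partitions.setdefault(partition_for(item[key_name]), []).append(item)
    return partitions
```

Because the partition is derived from the key alone, a request that carries the partition key can be sent straight to one partition without consulting the others.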

How do DynamoDB data models differ from other databases

DynamoDB has some significant structural differences from traditional relational databases. Because of these differences, DynamoDB data models must be designed differently than relational databases.

DynamoDB data modeling tips

1. Access patterns: The DynamoDB API is targeted and will require primary keys to access data. Accordingly, the design must first account for how data is accessed, and then primary keys are designed to assist those access patterns.

For example, an application might have "User" and "Order" entities. First, a list of ways to access the data is created, such as "Fetch User by Username" or "Fetch Order by OrderId," and then primary keys for these entities are configured to match those access patterns.

Note that "access patterns first, then data design" is the inverse of traditional relational database modeling. With relational databases, design often begins with tables using a normalized model. Then, queries are constructed to handle access patterns.

2. Data organization for Query operation: The Query operation is able to retrieve a contiguous set of items that share the same partition key. A set of items that have the same partition key is called an "item collection," and item collections can handle a variety of use cases.

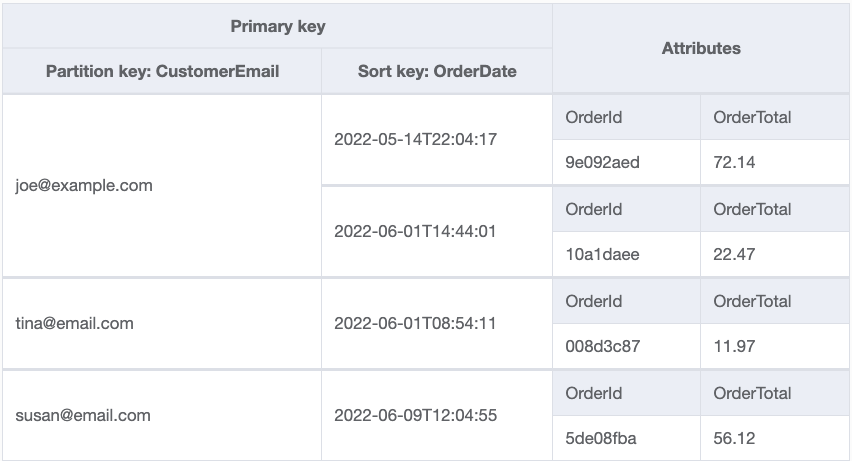

The sample table below shows some Order items from an e-commerce store.

Notice that the customer email address is used as the partition key. This allows a customer's Orders to be grouped into an item collection that can be retrieved in a single request with the Query operation. For example, the first two Orders both belong to "joe@example.com."

Items with the same partition key are ordered according to the sort key. By using the order date as the sort key, Orders for a Customer can be retrieved in the order they were placed or within a particular time range.
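A rough in-memory model can make the item collection idea concrete. The sketch below is not the DynamoDB API; the `OrdersTable` class and the `CustomerEmail` and `OrderDate` attribute names are assumptions based on the example above. It keeps each customer's Orders sorted by order date and answers a range request from one contiguous slice.

```python
from bisect import bisect_left, bisect_right
from collections import defaultdict


class OrdersTable:
    """In-memory stand-in for a table with a partition key and a sort key."""

    def __init__(self) -> None:
        # partition key -> list of (sort key, item), kept sorted by sort key
        self._collections: dict[str, list[tuple[str, dict]]] = defaultdict(list)

    def put(self, item: dict) -> None:
        collection = self._collections[item["CustomerEmail"]]
        # A primary key is unique, so a matching sort key overwrites the old item.
        collection[:] = [pair for pair in collection if pair[0] != item["OrderDate"]]
        collection.append((item["OrderDate"], item))
        collection.sort(key=lambda pair: pair[0])

    def query(self, email: str, start: str = "", end: str = "\uffff") -> list[dict]:
        """Return one customer's Orders with start <= OrderDate <= end, oldest first."""
        collection = self._collections.get(email, [])
        dates = [date for date, _ in collection]
        lo = bisect_left(dates, start)
        hi = bisect_right(dates, end)
        return [item for _, item in collection[lo:hi]]
```

Calling `query("joe@example.com")` returns every Order for that customer in date order, while passing `start` and `end` narrows the result to a time range without touching other customers' items.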

3. Secondary indexes: As noted, DynamoDB makes it easy to use the primary key to locate data. However, it is common to have multiple patterns that access data in different ways.

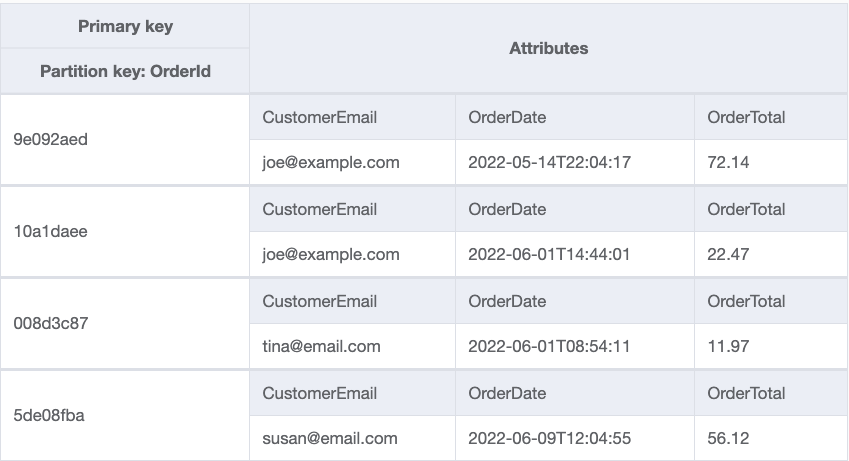

For example, it may be desirable to retrieve all e-commerce Orders for a Customer by the Customer's email address while also being able to retrieve a specific Order by the OrderId. For this situation, secondary indexes are useful. Secondary indexes permit data to be re-indexed in a different way, with a different primary key, that allows for additional access patterns on the data.

From the e-commerce Orders table above, a secondary index is set up using the OrderId as the primary key. The structure of that secondary index looks as follows:

Notice that this is the exact same data from the first table, but it has a different primary key. When items are written to the table, DynamoDB will work to replicate that item into the secondary index using the new primary key. That secondary index can then be queried directly, which enables the additional access patterns.
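The replication behavior described above can be sketched as follows. This is a simplified in-memory model, not the DynamoDB API: the `TableWithIndex` class and its attribute names are hypothetical, and the copy into the index happens immediately here, whereas DynamoDB performs the replication itself in the background.

```python
from typing import Optional


class TableWithIndex:
    """In-memory sketch: a base table plus one secondary index keyed by OrderId."""

    def __init__(self) -> None:
        # (CustomerEmail, OrderDate) -> item
        self.base: dict[tuple[str, str], dict] = {}
        # OrderId -> copy of the same item
        self.order_id_index: dict[str, dict] = {}

    def put(self, item: dict) -> None:
        key = (item["CustomerEmail"], item["OrderDate"])
        previous = self.base.get(key)
        if previous is not None:
            # Drop the stale index entry when an item is overwritten.
            self.order_id_index.pop(previous["OrderId"], None)
        self.base[key] = item
        # Replicate the item into the index under its alternative key.
        self.order_id_index[item["OrderId"]] = dict(item)

    def get_order_by_id(self, order_id: str) -> Optional[dict]:
        """Serve the 'fetch Order by OrderId' pattern from the index."""
        return self.order_id_index.get(order_id)
```

The application writes only to the base table; the second access pattern is served entirely from the index.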

DynamoDB single-table design concepts

One of the common data modeling patterns in DynamoDB is called single-table design. As its name implies, all entity types in the database are combined into a single table. This is in contrast with relational databases in which each entity receives its own dedicated table.

Additional information can be found in the blog post "The What, Why, and When of Single-Table Design with DynamoDB."

One of the core reasons for using single-table design is to allow the retrieval of items of different types in a single request. DynamoDB does not have a SQL JOIN operation, but the Query operation allows the retrieval of a set of items with the same partition key. If an access pattern must retrieve a parent item plus related child items, they can be "pre-joined" into the same item collection by giving them the same partition key.

Additionally, using single-table design in DynamoDB can be a good forcing function to understand how DynamoDB data modeling works. Focusing on single-table design avoids re-implementing a relational model in a NoSQL system, a route that leads to frustration and inefficient applications.

Most of the general tips for data model design in DynamoDB work well for single-table design as well: model for access patterns, use the Query operation, and add in secondary indexes for additional read-based patterns. However, there are a few tips specific to single-table design.

First, use generic names for primary key elements. Because a single table will hold multiple different entities, each entity type would otherwise use a different attribute for its primary key. Names like "CustomerEmail" or "OrderId" for the primary key elements will not work.

A common pattern is to use "PK" and "SK" for the partition key and sort key elements, respectively. Such generic names can work for all entities in the application. Further, it helps to reinforce the importance of thinking of data in terms of access patterns.

Second, remember that DynamoDB is a schemaless database. Outside of the primary key elements, DynamoDB won't enforce a schema. This is a benefit when using single-table design because it is not necessary to declare optional columns in advance. However, you need to be careful to maintain some semblance of a schema in your application code to avoid data chaos.
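Both tips can be combined in a small sketch. The key formats (`CUSTOMER#...`, `ORDER#...`), the `Type` attribute values, the required-attribute sets, and the function names below are all assumptions made for illustration. The point is that the generic "PK" and "SK" values are built in one place, and the application, not DynamoDB, checks that each item has the attributes its type requires.

```python
# Hypothetical application-level schema: required attributes per entity type.
REQUIRED_ATTRIBUTES = {
    "Customer": {"PK", "SK", "Type", "Email", "Name"},
    "Order": {"PK", "SK", "Type", "OrderId", "OrderDate"},
}


def customer_item(email: str, name: str) -> dict:
    """Build a Customer item using the generic PK and SK attributes."""
    key = f"CUSTOMER#{email}"
    return {"PK": key, "SK": key, "Type": "Customer", "Email": email, "Name": name}


def order_item(email: str, order_id: str, order_date: str) -> dict:
    """Build an Order item that shares its partition key with the Customer."""
    return {
        "PK": f"CUSTOMER#{email}",
        "SK": f"ORDER#{order_date}",
        "Type": "Order",
        "OrderId": order_id,
        "OrderDate": order_date,
    }


def validate(item: dict) -> dict:
    """Enforce the application-level schema before an item is written."""
    item_type = item.get("Type")
    if item_type not in REQUIRED_ATTRIBUTES:
        raise ValueError(f"unknown item type: {item_type!r}")
    missing = REQUIRED_ATTRIBUTES[item_type] - set(item)
    if missing:
        raise ValueError(f"{item_type} item is missing attributes: {sorted(missing)}")
    return item
```

Running every write through a function like `validate` is one way to keep some semblance of a schema without giving up the flexibility of optional attributes.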

How to use DynamoDB single-table vs multi-table design with GraphQL

There are two approaches to integrating DynamoDB with GraphQL: multi-table and single-table. To compare these approaches, consider the example of building a blog hosting web service. Users can come to the service to create their own blog site, and they will write posts that will be published on their blog. Other users can post comments on their posts. Thus, this example contains three key entities: Sites, Posts, and Comments. This example is necessarily simplified and won't include all access patterns for this sample application. However, it can help us understand the key trade-offs to consider in single-table vs. multi-table design.

Difference between GraphQL APIs and other APIs

GraphQL APIs are different from REST or other web APIs in a number of ways.

First, GraphQL APIs are designed for flexibility. Most GraphQL API implementations allow for arbitrary queries from clients. These queries may fetch a single object or may fetch a graph of data with multiple layers of nested relationships. This is in contrast with REST APIs in which a client can request a specific resource, and the backend implementation can optimize the work required to fetch that resource.

Second, GraphQL resolvers are intended to be simple. A GraphQL type is backed by a resolver that helps to populate the requested object. In many cases, a single resolver may be used in multiple locations in a schema to match the various ways that data is connected. Many GraphQL implementations choose to make their resolvers simple and isolated, so they can be reused without understanding the larger context of a request.

Note that both of these properties are common attributes of GraphQL APIs but may not be true for every implementation. For example, some GraphQL implementations have a narrow set of predefined queries that can be used rather than allowing for flexible queries from clients.

Additionally, some resolvers may be more complex and aware of the context in which they are being invoked. Rather than simply retrieving the object that triggered it, the resolver may check first to determine whether related objects have been requested as well. If so, the resolver may retrieve both the requested object and the related objects to optimize overall request latency and reduce database load.

This is the core trade-off between single-table and multi-table design in a GraphQL API. A traditional GraphQL approach with focused, isolated resolvers will have simpler resolver code at the expense of slightly slower GraphQL queries. Single-table principles and lookaheads in the resolvers will add complexity to the resolvers but provide for faster response times.

To learn more about GraphQL, see What is GraphQL, the Decision Guide to GraphQL Implementation, and How to Build GraphQL Resolvers for AWS Data Sources.

Multi-table DynamoDB design in GraphQL

The first and simplest approach to integrating DynamoDB with GraphQL is to use a multi-table approach. In this approach, a separate DynamoDB table for each core object type is created in the GraphQL schema.

For a video discussion of the key points, refer to the multi-table video walkthrough. For additional information, visit the GitHub repository for the multi-table sample project.

In the previously described blog example, the application has three DynamoDB tables: one each for Sites, Posts, and Comments. In this multi-table approach, each resolver is focused on retrieving and hydrating only the object requested. The resolver ignores the larger context of the GraphQL query.

For example, a resolver to fetch the parent Site object based on the Site domain might look as follows:

```
{
  "version": "2018-05-29",
  "operation": "GetItem",
  "key": {
    "domain": $util.dynamodb.toDynamoDBJson($ctx.args.domain)
  }
}
```

Notice that it is a straightforward DynamoDB GetItem operation that uses the given domain argument in the query to retrieve the requested site.

A common query to the backend might ask for the site and the ten most recent posts on the site. In doing so, it uses a GraphQL query as follows:

```graphql
query getSite {
  getSite(domain: "aws.amazon.com") {
    id
    name
    domain
    posts(num: 10) {
      cursor
      posts {
        id
        title
      }
    }
  }
}
```

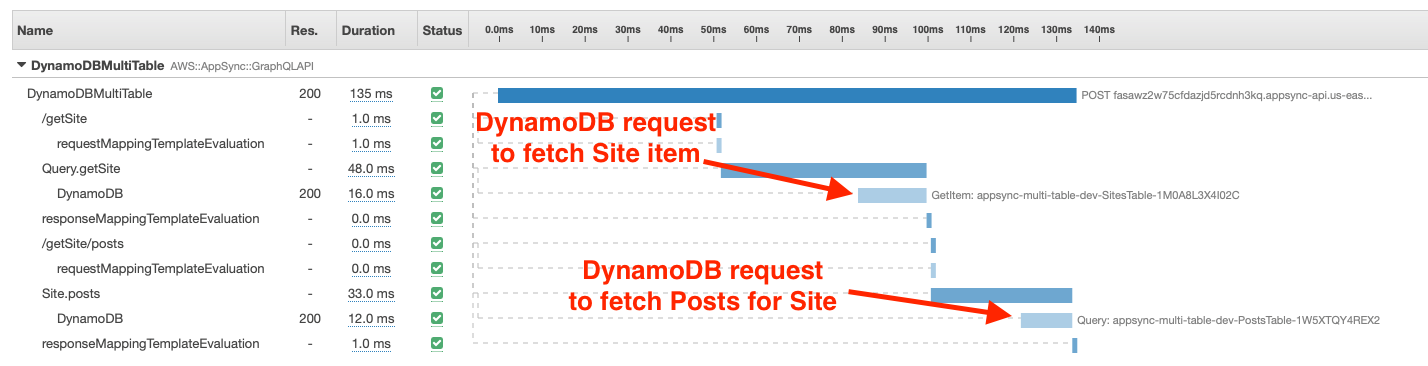

Because the GraphQL resolvers are focused and isolated, there are two separate resolvers triggered here. First, the getSite resolver is triggered to fetch the parent site. Then, the Posts resolver is triggered to retrieve the most recent posts for the site.

Notice that these resolvers are called sequentially. The GraphQL server will first resolve a parent object, then move on to nested objects on the parent, and continue for as many levels of nesting as is necessary.

This will necessarily result in a "waterfall" of requests by the GraphQL server. The following AWS X-Ray trace displays two separate, sequential requests to DynamoDB to satisfy the GraphQL query.

While this sequential approach does add some latency to the overall query, it is the default approach for most GraphQL implementations, regardless of the database used.
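The waterfall can be reproduced with a minimal sketch of two isolated resolvers. Everything here is a stand-in: the in-memory `SITES` and `POSTS` dictionaries replace the two DynamoDB tables, the sample values are invented, and the `calls` list records each simulated database request so the order of requests is visible.

```python
from typing import Optional

calls: list[str] = []  # records each simulated database request, in order

# Hypothetical sample data standing in for the Sites and Posts tables.
SITES = {"aws.amazon.com": {"id": "site-1", "name": "AWS", "domain": "aws.amazon.com"}}
POSTS = {
    "aws.amazon.com": [
        {"id": "post-2", "title": "Second post"},
        {"id": "post-1", "title": "First post"},
    ]
}


def resolve_site(domain: str) -> Optional[dict]:
    """Isolated resolver: fetches only the Site, unaware of nested fields."""
    calls.append("Sites.GetItem")
    return SITES.get(domain)


def resolve_posts(site: dict, num: int) -> list[dict]:
    """Isolated resolver: needs the parent Site before it can run."""
    calls.append("Posts.Query")
    return POSTS.get(site["domain"], [])[:num]


def execute_get_site(domain: str, num_posts: int) -> Optional[dict]:
    """Resolve the parent first, then the nested field, as a GraphQL server would."""
    site = resolve_site(domain)
    if site is None:
        return None
    return {**site, "posts": resolve_posts(site, num_posts)}
```

After `execute_get_site("aws.amazon.com", 10)` runs, `calls` holds two entries, one per table, and the second request could not start until the first had returned.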

Note that with this approach, the backend GraphQL server will not respond as quickly as it could. Note also that the GraphQL spec does not enforce consistency within a single request, so the consistency trade-offs of making multiple, sequential requests to the database must be considered.

Depending on the depth of the GraphQL schema and the application needs, this may be an acceptable result. The benefits of GraphQL's flexibility plus resolver simplicity may make sense for a particular case. Patterns like caching and dataloader can be used to reduce overall request latencies.
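As a rough illustration of the caching and dataloader idea, the sketch below deduplicates the requested keys, fetches the missing ones with a single batched call, and remembers the results. It is a simplified, synchronous take on the pattern rather than any particular dataloader library; the `BatchLoader` class and the `batch_fetch` callback are hypothetical names.

```python
from typing import Callable, Optional


class BatchLoader:
    """Deduplicates keys, fetches misses in one batch, and caches the results."""

    def __init__(self, batch_fetch: Callable[[list[str]], dict[str, dict]]) -> None:
        # batch_fetch takes a list of keys and returns {key: item} for those found.
        self._batch_fetch = batch_fetch
        self._cache: dict[str, dict] = {}

    def load_many(self, keys: list[str]) -> list[Optional[dict]]:
        """Return one result per requested key, issuing at most one batched fetch."""
        # dict.fromkeys removes duplicates while preserving the request order.
        missing = [key for key in dict.fromkeys(keys) if key not in self._cache]
        if missing:
            self._cache.update(self._batch_fetch(missing))
        return [self._cache.get(key) for key in keys]
```

In a resolver, `batch_fetch` would wrap a batched read against the data source, so that many nested lookups collapse into one request and repeated lookups are served from the cache.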

Further, moving to a standard REST API setup might not be any better, particularly if the frontend client needs to make its own waterfall of requests to retrieve the same graph of data. Having this waterfall on the backend, closer to the data sources, will likely be faster than the same waterfall on the frontend.

When using the multi-table approach with GraphQL, keep the following tips in mind:

- Consider how objects will be retrieved. DynamoDB relies on primary keys for almost all access patterns. Items cannot be retrieved by any column value like they can in a relational database. Accordingly, primary key patterns that reflect how your items will be accessed should be set up.

- Use composite primary keys and the Query operation for related data. DynamoDB has a Query operation that permits the retrieval of multiple items with the same partition key. Give the same partition key to related items to allow for fast, efficient fetching of related items.

- Use sort keys for filtering and sorting related data. Items with the same partition key will be sorted according to their sort key. Use this sort key to provide filtering and sorting on related data. For example, using the publish date as the sort key for the posts items allows posts to be fetched within a particular time range or to fetch the most recent posts, ordered by time.

- Consider using IDs to maintain relationships between records to provide mutability. Relational databases often use auto-incrementing integers or random IDs as primary keys for records. These IDs are used to maintain relationships between items and provide easier mechanics around updates through normalization. With DynamoDB, meaningful ID values are preferred in order to avoid sequential requests to fetch a parent item, then retrieve its related items. Because those sequential requests are a part of multi-table design with DynamoDB, a more normalized pattern can be successful.

Single-table DynamoDB design in GraphQL

The second approach to integrating DynamoDB with GraphQL is to use a single-table design.

For a video discussion of the key points, refer to the single-table video walkthrough. For additional information, refer to the GitHub repository for the single-table sample project.

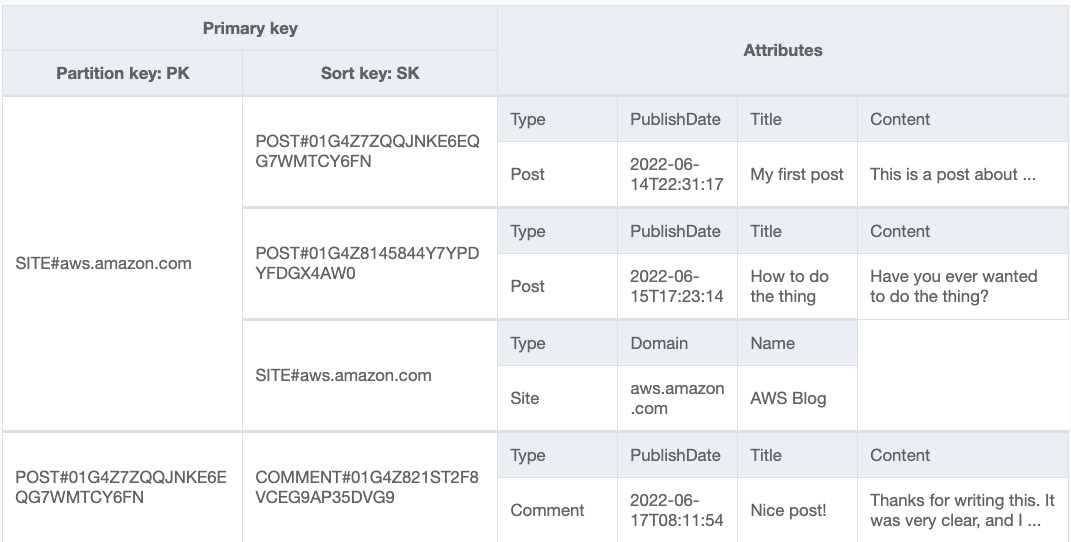

In a single-table approach, all three entities from the example are in the same DynamoDB table. That table might look as follows:

Notice how all three entities are mixed within the table (the "Type" attribute on each item can determine the type of entity). Further, notice that the table has the generic "PK" and "SK" names for the partition key and sort key in the table.

Finally, notice that the table has created item collections of heterogeneous entity types. The "SITE#aws.amazon.com" partition key includes both a Site entity and two Post entities that were posted on that site. The Query operation on this item collection can fetch both the Site item and the most recent Posts for that Site.

To use this Query operation to retrieve both the Site and the Posts in a single request, the logic in our Site resolver becomes more complicated. It might look as follows:

```
#if($ctx.info.selectionSetList.contains("posts"))
  #set($limit = 11)
#else
  #set($limit = 1)
#end
{
  "version": "2018-05-29",
  "operation": "Query",
  "query": {
    "expression": "PK = :pk",
    "expressionValues": {
      ":pk": $util.dynamodb.toDynamoDBJson("SITE#$ctx.args.domain")
    }
  },
  "limit": $limit,
  "scanIndexForward": false
}
```

Notice that at the beginning of the resolver, the $ctx.info.selectionSetList object is used to determine whether the GraphQL query is also asking for the "posts" property on the requested Site. If it is, the Query is used to get both the Site and the Post items. If not, it retrieves just the Site.
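The same lookahead logic can be sketched outside of a mapping template. The two functions below are hypothetical helpers, not part of AppSync: one builds the parameters for a DynamoDB Query request based on the selection set, and the other separates the returned items by their "Type" attribute. The sketch assumes that the Site item's sort key sorts after the Post sort keys, so that a descending read returns the Site first.

```python
from typing import Optional


def build_site_query(domain: str, selection_set: list[str], num_posts: int = 10) -> dict:
    """Build Query parameters, widening the limit only if posts were requested."""
    wants_posts = "posts" in selection_set  # the lookahead
    return {
        "KeyConditionExpression": "PK = :pk",
        "ExpressionAttributeValues": {":pk": {"S": f"SITE#{domain}"}},
        # One Site item, plus the requested number of Posts when needed.
        "Limit": 1 + num_posts if wants_posts else 1,
        # Read the item collection in descending sort-key order.
        "ScanIndexForward": False,
    }


def split_result(items: list[dict]) -> tuple[Optional[dict], list[dict]]:
    """Separate a heterogeneous item collection into the Site and its Posts."""
    site = next((item for item in items if item["Type"] == "Site"), None)
    posts = [item for item in items if item["Type"] == "Post"]
    return site, posts
```

The extra branch in `build_site_query` and the need for `split_result` are the added complexity that the single-table approach trades for one fewer round trip.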

This pattern is known as a "lookahead," after the practice of looking ahead at nested elements in the GraphQL query. This practice is controversial in the GraphQL community. Some like it as a way to optimize requests and reduce database load, while others discourage it because it makes resolvers more complex and harder to maintain.

It is clear that the use of lookaheads to reduce the sequential queries from the server will improve response times to GraphQL queries. The trade-off is whether this increased complexity is manageable.

When using the single-table approach, keep the following tips in mind:

- Don't be afraid of a partial approach. A single-table approach does not have to solve every GraphQL query with a single request. GraphQL queries can be complex and traverse a large amount of connected data. Trying to solve for all use cases will lead to frustration, and removing even a single sequential query can be helpful in reducing latency. Don't make the perfect the enemy of the good.

- Optimize for commonly used patterns first. Remember that the biggest benefit of single-table design comes from removing sequential queries when traversing relationships. Target the most frequently accessed relationships in the schema, and implement the lookahead pattern to reduce them to a single query and resolver.

- Avoid complex pagination options in nested fields. In the example given, a "lookahead" is used to see which fields are requested on a particular item. However, it is harder to reliably extract variables for related data, such as the number of posts to fetch or the token to start at. For these single-table requests, use default parameters for the nested posts, and let GraphQL clients paginate through additional post items by using the getPostsForSite query directly.

- Consider immutability for primary key values. In order to fetch both the Site and the Posts in a single request with the Query operation, they must be identifiable by a single attribute. In the example use case, the "domain" property is used to group the Site and the Post items together. While this eases the operation, it does make it harder to allow users to alter the "domain" for the Site and Posts later on. To make attributes in primary keys immutable, consider adding an application constraint.

Combining single-table and multi-table designs

This article presented the single-table and multi-table approaches as if they are distinct from each other. However, it is possible to combine elements from both approaches to get some of the benefits of each.

First, some GraphQL users use a single DynamoDB table for their API, even if they are mostly using isolated resolvers that retrieve items of a single type. These users are not getting the reduced latency benefits from removing sequential requests by fetching related items together, but they do get some operational benefits from having fewer DynamoDB tables. Further, using a single table to hold all entities can help teams to focus on using DynamoDB as intended – with focused, targeted usage rather than trying to emulate a relational database.

Second, some GraphQL users can borrow concepts from single-table design even if using multiple tables. For an application with a large number of entities, putting them all in a single table may not be ideal. However, clustering a few related entities in the same table can provide the benefit of reduced latencies for certain patterns.

The key takeaway is that this decision is not all-or-nothing. Understanding the needs of the application is necessary in order to make the best choice. There are real trade-offs around simplicity, flexibility, and latency, and there is no single choice that works for all situations.

Looking for a fully managed GraphQL service?

Explore AWS AppSync

AWS AppSync is an enterprise level, fully managed serverless GraphQL service with real-time data synchronization and offline programming features. AppSync makes it easy to build data driven mobile and web applications by securely handling all the application data management tasks such as real-time and offline data access, data synchronization, and data manipulation across multiple data sources.