Perform sentiment analysis

Amazon Aurora is a MySQL and PostgreSQL-compatible relational database built for the cloud, that combines the performance and availability of traditional enterprise databases with the simplicity and cost-effectiveness of open source databases.

Amazon Aurora machine learning enables you to add ML-based predictions to applications via the familiar SQL programming language, so you don't need to learn separate tools or have prior machine learning experience. It provides simple, optimized, and secure integration between Aurora and AWS ML services without having to build custom integrations or move data around. This allows developers working with the Postgres or MySQL engines to add capabilities to their application using familiar SQL techniques, syntax and interfaces.

When you run an ML query, Aurora calls Amazon SageMaker for a wide variety of ML algorithms or Amazon Comprehend for sentiment analysis, so your application doesn't need to call these services directly. This makes Aurora machine learning suitable for low-latency, real-time use cases such as fraud detection, ad targeting, and product recommendations.

In this tutorial, you learn how to create an Aurora PostgresSQL database, enable integration with Amazon Comprehend, and use Comprehend to perform sentiment analysis based on records in the database table.

In this tutorial, you complete the following steps:

- Create the IAM roles required to allow Amazon Aurora access to Amazon Comprehend and Amazon S3.

- Create the Amazon Aurora PostgreSQL database instance

- Download and install a PostgreSQL client

- Connect to the Aurora DB instance with the PostgreSQL client

- Install AWS ML and S3 extensions so you can query the database and analyze the sentiment of a sample table and customer reviews dataset

- Clean up resources used in this tutorial

The tutorial uses two data tables: one table is manually populated with sample data in this tutorial, and one table is loaded with sample data from a sample customer reviews dataset.

| About this Tutorial | |

|---|---|

| Time | 1 hour |

| Cost | Less than $1 |

| Use Case | Databases, Machine Learning |

| Products | Amazon Aurora, Amazon Comprehend |

| Audience | Database administrators, Developers, Data scientists |

| Level | Intermediate |

| Last Updated | November 20, 2020 |

Step 1: Create IAM roles

In this step, you create the AWS Identity and Access Management (IAM) roles that allow Amazon Aurora access to Amazon Comprehend and Amazon S3.

1.1 — Open the AWS Management Console and sign in with your AWS account credentials. If you do not have an AWS account, create a new one to get started.

Already have an account? Log in to your account

1.2 — Navigate to IAM dashboard. In the navigation pane of the IAM dashboard choose Roles, then Create Role.

1.6 — Skip the tagging section and choose Next: Review.

Step 2: Create Aurora database instance

For this step, you create an Amazon Aurora PostgreSQL database instance and attach the roles you created in Step 1.

2.1 — Open the Amazon RDS console, and In the left navigation pane, choose Databases, then choose Create database.

2.10 — When the instance status shows Available, choose the aurora-ml-db instance to view the details. Choose the Connectivity & security tab, and in the Manage IAM roles section, for Add IAM roles to this cluster, choose TutorialAuroraComprehendRole. For Feature, choose Comprehend and choose Add role.

Step 3: Download and install a PostgreSQL Client

For this step, you download and install a PostgreSQL client that you use to connect to your Aurora PostgreSQL database instance.

You can use any preferred PostgreSQL database client to connect to your Aurora PostgreSQL database instance. This tutorial uses the pgAdmin client, but you can also use SQL Workbench, or any other preferred client.

- For pgAdmin, download and install the pgAdmin 4 client from the pgAdmin website.

- For SQL Workbench, refer to the directions in Create and Connect to a PostgreSQL Database.

Note: You must run the PostgreSQL client on the same device and same network where you created the Aurora PostgreSQL DB instance. In this tutorial, the database security group is configured to allow connection only from the device you used when you created the DB instance. Your database can be configured to be accessed from any IP address, but for this tutorial, we kept the configuration simple.

After you have downloaded and installed your preferred client, continue to Step 4.

Step 4: Connect to the Aurora DB instance

In this step, you connect to the Aurora PostgreSQL DB instance by using pgAdmin 4 PostgreSQL Client. For other connection options, see Connect to a DB Instance Running the PostgreSQL Database Engine.

4.3 – Choose the Connection tab and enter the following information:

- For Host name/address, paste the database cluster endpoint you copied in Step 2.13.

- For Password, enter the password you specified when creating your database instance.

Choose Save. In the left pane navigation tree, you should see your new server TutorialServer.

Step 5: Query database with Amazon Comprehend

In this step, you install extensions for machine learning and Amazon S3 access. Then, you set up and query a sample table. Finally, you load sample data from a customer review dataset and run queries on the customer reviews for sentiment analysis and confidence.

5.1 — In the query editor, run the following statement to install the Amazon ML services extension for model inference.

CREATE EXTENSION IF NOT EXISTS aws_ml CASCADE;

Now, you can create a table, add some data, and try out Comprehend. Your sample table holds comments from an article announcing the ML integration feature, with an ID, and comment text column.

5.2 — Run the following statement to create your sample table named comments.

CREATE TABLE IF NOT EXISTS comments (

comment_id serial PRIMARY KEY,

comment_text VARCHAR(255) NOT NULL

);5.3 — Add data to your comments table using the following statement.

INSERT INTO comments (comment_text)

VALUES ('This is very useful, thank you for writing it!');

INSERT INTO comments (comment_text)

VALUES ('Awesome, I was waiting for this feature.');

INSERT INTO comments (comment_text)

VALUES ('An interesting write up, please add more details.');

INSERT INTO comments (comment_text)

VALUES ('I do not like how this was implemented.');5.4. – Run the following statement to call the aws_comprehend.detect sentiment function.

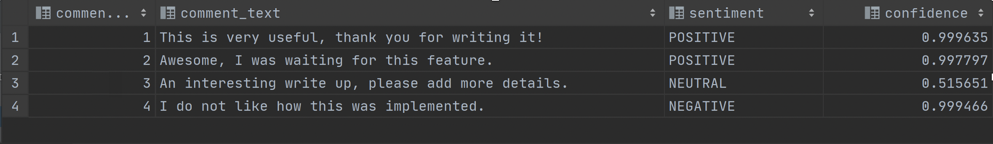

SELECT * FROM comments, aws_comprehend.detect_sentiment(comments.comment_text, 'en') as sThis statement passes Comprehend two parameters – the column you need it to evaluate, and the language code for the text in that column – in this example, ‘en’ for English. This statement returns two additional columns:

- sentiment, which scores sentiment as POSITIVE, NEGATIVE, NEUTRAL, or MIXED

- confidence, which provides the confidence on a scale of 0 to 1 of for the Comprehend model sentiment analysis

Great! You ran Comprehend sentiment analysis on a sample table. In the following steps, you use a sample of customer reviews data accessible from a publicly readable S3 bucket.

5.5 — Run the following statement to install the Amazon S3 service extension. This extension allows you to load data from Amazon S3 into the Aurora DB instance from SQL.

CREATE EXTENSION IF NOT EXISTS aws_s3 CASCADE;

Next, you can create a table that holds the data from the dataset on S3. The data is in a tab-separated-value (TSV) format in a publicly readable S3 bucket.

5.6 — Copy and paste the following code to create your table named review_simple.

create table review_simple

(

marketplace char(2),

customer_id varchar(20),

review_id varchar(20) primary key,

product_id varchar(20),

product_parent varchar(20),

product_title text,

product_category varchar(20),

star_rating int,

helpful_votes int,

total_votes int,

vine char,

verified_purchase char,

review_headline varchar(255),

review_body text,

review_date date,

scored_sentiment varchar(20),

scored_confidence float4

)When you create the table, you’re including columns matching the schema of the input data from the customer reviews data set and adding two incremental columns related to sentiment: scored_sentiment and scored_confidence. You use these columns in Step 5.8 to store the result of calling Comprehend.

5.7 — Run the following statement to load the data directly from Aurora PostgreSQL.

Note: In production, you may choose to use AWS Glue or another ETL process to load the data.

select aws_s3.table_import_from_s3(

'review_simple', 'marketplace, customer_id,review_id,product_id,

product_parent, product_title, product_category, star_rating,

helpful_votes, total_votes, vine, verified_purchase,review_headline,

review_body, review_date',

'(FORMAT CSV, HEADER true, DELIMITER E''\t'', QUOTE ''|'')',

'amazon-reviews-pds',

'tsv/sample_us.tsv',

'us-east-1'

)This statement specified the set of columns you want to load, described the format of the file, and supplied the bucket name, key, and AWS Region. This sample file has 49 rows plus a header.

5.8 — When you loaded the data, the scored_sentiment and scored_confidence columns in the table were ignored; the data set loaded from S3 didn’t contain those columns. Now, you'll use Comprehend to evaluate the sentiment, and use the result to update those columns in the table. Run the following statement to call Comprehend and update the table.

update review_simple

set scored_sentiment = s.sentiment, scored_confidence = s.confidence

from review_simple as src,

aws_comprehend.detect_sentiment( src.review_body, 'en') as s

where src.review_id = review_simple.review_id

and src.scored_sentiment is nullThis statement calls comprehend for each of the rows where the sentiment is not yet known, and updates the scored_sentiment and scored_confidence columns.

This approach can help optimize the cost and performance of later reports or queries that want to consider the sentiment values. If the review text hasn’t changed, there’s no reason to call Comprehend each time you want to use the sentiment; you can simply store the data and retrieve it from the database on demand. You can use statements such as this to set the sentiment when you load new rows to the table without reprocessing the inferences for existing rows.

Since Comprehend invocations incur charges, and each batch submitted to Comprehend takes time to process, it’s preferable to incur the overhead repeatedly. Storing the sentiment in columns within the database also allows you to create indices over the sentiment values if you need to optimize the queries to support larger data sets.

Next, try running some queries on the data.

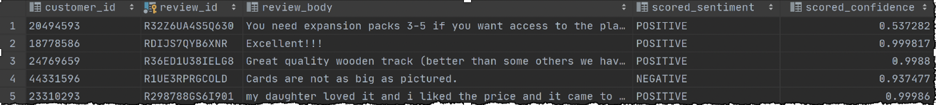

5.9 — Run the following statement to see the returned data.

select customer_id, review_id, review_body, scored_sentiment, scored_confidence from review_simple

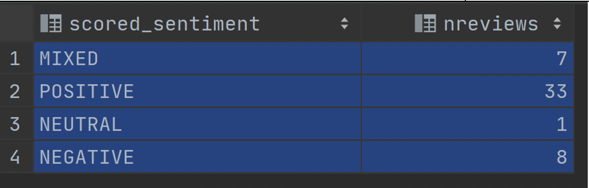

5.10 — Run the following statement to see the data summarized based on the sentiment returned by Comprehend.

select scored_sentiment,count(*) as nReviews from review_simple group by scored_sentiment

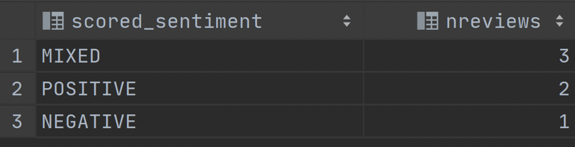

5.11 — Run the following statement to query the data based on a confidence threshold of > .9.

select scored_sentiment,count(*) as nReviews from review_simple where scored_confidence > .9

group by scored_sentiment

In this query, you're leveraging the score sentiment and confidence values you saved in the table. This is an example of the flexibility and performance gained by saving the sentiment directly in the database. The ability to do this directly in the database can give individuals more comfortable with SQL easier access to the data.

Step 6: Clean up

In the following steps, you clean up the resources you created in this tutorial.

It is a best practice to delete instances and resources that you are no longer using so that you are not continually charged for them.

Delete Aurora database

6.1 — Navigate to the RDS console, and in the left pane, choose Databases.

6.2 — Choose the tutorial aurora-ml-db instance. Note: In the Connectivity & security section, under Networking, copy the value for VPC. You can remove this resource in the next step.

6.3 — Choose Actions, then choose Delete.

6.4 — Clear the check box for Create final snapshot and select the I acknowledge check box. Type delete me and choose Delete.

Delete VPC

6.5 — Navigate to the VPC console.

6.6 — In the left navigation pane, choose Your VPCs and select the VPC that matches the ID you noted in Step 6.2.

6.7 — Choose Actions, then choose Delete VPC.

6.8 — In the confirmation box, type delete and choose Delete.

Delete IAM roles

6.9 — Navigate to the IAM console, and in the navigation pane, choose Roles.

6.10 — Search for Tutorial and then select the check boxes next to the roles you created for this tutorial: TutorialAuroraComprehendRole and TutorialAuroraS3Role.

6.11 — At the top of the page, choose Delete role.

6.12 — In the confirmation dialog box, choose Yes, Delete.

Delete other resources

Optionally, delete the PostgreSQL database client you downloaded for this tutorial.

Congratulations

You have created an Aurora PostgreSQL database, enabled integration with Amazon Comprehend, and used Comprehend to perform sentiment analysis based on records in the database.

Recommended next steps

Learn more about Amazon Aurora ML features

Find out more about the features of Amazon Aurora Machine Learning.

Read about more Amazon Aurora ML applications

Learn about more Amazon Aurora ML applications on the AWS Machine Learning Blog.

Learn more about Amazon Aurora

Visit Amazon Aurora Resources for getting started tips and more.