Overview

Cerebras Inference Cloud on AWS Marketplace brings lightning-fast, on-demand performance to the latest open-source LLMs, including Llama, Qwen, OpenAI GPT-OSS, and more.

Built for real-time interactivity, multi-step reasoning, and complex agentic workflows, Cerebras delivers the speed, scale, and simplicity needed to go from API key to production in under 30 seconds.

Powered by a fast AI accelerator, the Wafer-Scale Engine (WSE), and the CS-3 system, the Cerebras Inference Cloud offers ultra-low latency and high-throughput inferencing via a drop-in, OpenAI-compatible API.
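
Because the API is described as OpenAI-compatible, a request should follow the familiar chat completions shape. The sketch below only illustrates that shape using the Python standard library. The base URL, the model name, and the helper names `build_chat_request` and `send` are assumptions for illustration, not values taken from this listing; check the documentation at docs.cerebras.ai/cloud for the actual endpoint and supported models.

```python
import json
import os
import urllib.request

# Assumption: the base URL and model name are placeholders, not confirmed by
# this listing. Override them with the values given in the official docs.
BASE_URL = os.environ.get("CEREBRAS_BASE_URL", "https://api.cerebras.ai/v1")
MODEL = os.environ.get("CEREBRAS_MODEL", "example-model-name")


def build_chat_request(prompt, api_key, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With an API key in hand, `send(build_chat_request("Say hello.", api_key))` would return the model's reply, assuming the endpoint and model name are set correctly. An existing OpenAI client library pointed at the same base URL should work the same way, which is what "drop-in" implies.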

Start today with our self-service offering. Pricing can be found here: https://www.cerebras.ai/pricing

For custom pricing or other questions, please contact partners@cerebras.net.

Highlights

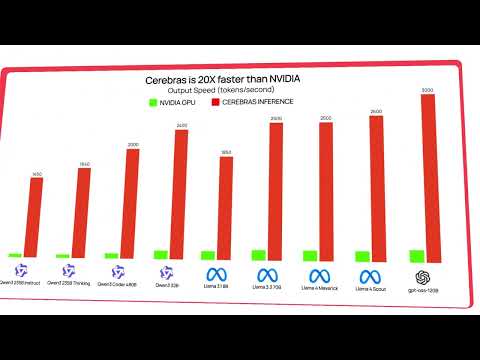

- Up to 70X faster than GPUs: With throughput exceeding 2,500 tokens per second, Cerebras eliminates lag, delivering near-instant responses, even from large models.

- Full reasoning in under 1 second: No more multi-step delays. Cerebras executes full reasoning chains and delivers final answers in real time.

- Instant API access to top open-source models: Skip GPU setup and launch models like Llama, Qwen, and OpenAI GPT-OSS in seconds. Just bring your prompt.

Details

Pricing

| Dimension | Description | Cost/unit |
|---|---|---|
| Cerebras Consumption Units | Pricing is based on actual usage | $0.0000001 |
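
As a rough illustration of the per-unit price above, the following sketch multiplies a unit count by the listed cost. The function name `estimate_cost_usd` is hypothetical, and the listing does not say how consumption units map to tokens or requests, so treat this as arithmetic only.

```python
from decimal import Decimal

# Listed price per Cerebras Consumption Unit, in USD (from the table above).
UNIT_PRICE_USD = Decimal("0.0000001")


def estimate_cost_usd(units: int) -> Decimal:
    """Return the estimated charge in USD for a number of consumption units."""
    if units < 0:
        raise ValueError("units must be non-negative")
    # Decimal avoids the rounding noise that binary floats add at this scale.
    return UNIT_PRICE_USD * units
```

At this rate, 10,000,000 units come to $1.00.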

Vendor refund policy

Payment obligations are non-cancelable once incurred, and Fees paid are non-refundable.

Delivery details

Software as a Service (SaaS)

SaaS delivers cloud-based software applications directly to customers over the internet. You can access these applications through a subscription model. You will pay recurring monthly usage fees through your AWS bill, while AWS handles deployment and infrastructure management, ensuring scalability, reliability, and seamless integration with other AWS services.

Support

Vendor support

Learn more about our supported models, rate limits, pricing, and more in our documentation (docs.cerebras.ai/cloud). For 24x7 technical support, contact support@cerebras.net or +1 (650) 933-4980.

AWS infrastructure support

AWS Support is a one-on-one, fast-response support channel that is staffed 24x7x365 with experienced and technical support engineers. The service helps customers of all sizes and technical abilities to successfully utilize the products and features provided by Amazon Web Services.

Customer reviews

Fast inference has enabled ultra-low-latency coding agents and continues to improve

What is our primary use case?

I use the product for the fastest LLM inference for Llama 3.1 70B and GLM 4.6.

How has it helped my organization?

We use it to speed up our coding agent on specific tasks. For anything that is latency-sensitive, having a fast model helps.

What is most valuable?

The valuable features of the product are its inference speed and latency.

What needs improvement?

There is room for improvement in supporting more models and the ability to provide our own models on the chips as well.

For how long have I used the solution?

I have used the solution for one year.

Which solution did I use previously and why did I switch?

I previously used Groq and SambaNova, but I switched because they were serving a speculative-decoding ("spec dec") model that had worse intelligence than the listed model.

What's my experience with pricing, setup cost, and licensing?

They are more expensive, but if you need speed, then it is the only option right now.

Which other solutions did I evaluate?

I evaluated Groq and SambaNova.

What other advice do I have?

Their support has been helpful, and I've had a few outages with them in the past, but they were resolved quickly. I recommend using it for speed and having a good fallback plan in case there are issues, but that's easy to do.

High-speed parallel inference has transformed quantitative finance decisions and expands model diversity

What is our primary use case?

Our primary use case is high TPS-burst inference, executed in parallel across many large parameter language models.

How has it helped my organization?

The throughput increase has extended decision-making time by over 50 times compared to previous pipelines when accounting for burst parallelism. This has improved both end-to-end performance and opened new use cases within our domain, specifically in the field of quantitative finance.

What is most valuable?

The most valuable features for us are the speed (TPS) and the diversity of models.

What needs improvement?

There is room for improvement in the integration within AWS Bedrock.

For how long have I used the solution?

We have been using the solution since its launch on AWS.

Which solution did I use previously and why did I switch?

We previously used a combination of Bedrock and local LLM compute.

Which other solutions did I evaluate?

We considered alternative solutions such as Groq, Bedrock, local inference, and lambda.ai.

What other advice do I have?

I recommend giving it a try!

Has enabled faster token inference to improve customer response times

What is our primary use case?

I use it for fast LLM token inference.

How has it helped my organization?

Cerebras' token speed rates are unmatched. This can enable us to provide much faster customer experiences.

What is most valuable?

One of the most valuable features is the very fast token inference.

For how long have I used the solution?

I have used the solution for one week.

Which solution did I use previously and why did I switch?

I am currently leveraging most top models from Google, OpenAI, Anthropic, and Meta.

What's my experience with pricing, setup cost, and licensing?

I have no advice to give regarding setup cost.

Which other solutions did I evaluate?

I also considered Sonnet, GPT, Gemini, and Scout.

What other advice do I have?

Cerebras has a great collection of team members who genuinely want to help you get up and going.