Break a Monolithic Application into Microservices with AWS Copilot, Amazon ECS, Docker, and AWS Fargate

TUTORIAL

Introduction

Overview

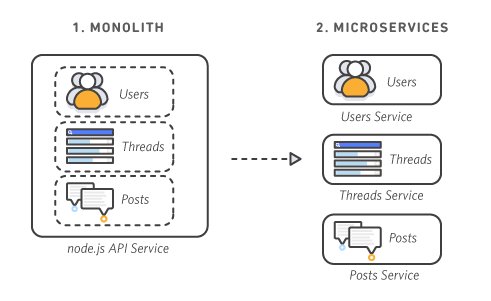

In this tutorial, you will deploy a monolithic Node.js application to a Docker container, then decouple the application into microservices without any downtime. The Node.js application hosts a simple message board with threads and messages between users.

Why this matters

Traditional monolithic architectures are hard to scale. As an application's code base grows, it becomes harder to update and maintain. Introducing new features, languages, frameworks, and technologies becomes increasingly difficult, which limits innovation.

Within a microservices architecture, each application component runs as its own service and communicates with other services through a well-defined API. Microservices are built around business capabilities, and each service performs a single function. Microservices can be written using different frameworks and programming languages, and you can deploy them independently, as a single service, or as a group of services.

What you will accomplish

In this tutorial, you will run a simple monolithic application in a Docker container, deploy the same application as microservices, and then switch traffic to the microservices without any downtime. After you are done, you can use this tutorial and its code as a template to build and deploy your own containerized microservices on AWS.

Monolithic architecture

The entire Node.js application runs in a container as a single service, and every container has the same features as every other container. If one application feature experiences a spike in demand, the entire architecture must be scaled.

Microservices architecture

Each feature of the Node.js application runs as a separate service within its own container. The services can scale and be updated independently of the others.
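
To make the contrast between the two architectures concrete, here is a minimal sketch in plain Node.js. The route paths, the in-memory data, and the `handle` helper are assumptions made for illustration; they are not taken from the tutorial's actual code. The monolith owns every route in one service, while each microservice owns only the route for its own feature.

```javascript
// Hypothetical in-memory data for the message board (illustrative only).
const db = {
  users: [{ id: 1, name: 'Ann' }],
  threads: [{ id: 1, title: 'Hello' }],
  posts: [{ thread: 1, user: 1, message: 'First post' }],
};

// Monolith: a single service owns every route, so every container
// carries every feature and must be scaled as a whole.
const monolith = {
  '/api/users': () => db.users,
  '/api/threads': () => db.threads,
  '/api/posts': () => db.posts,
};

// Microservices: each feature is its own service with its own route table,
// so each one can be scaled and updated independently.
const usersService = { '/api/users': () => db.users };
const threadsService = { '/api/threads': () => db.threads };
const postsService = { '/api/posts': () => db.posts };

// Minimal dispatcher: find the handler for a path in one service's route table.
function handle(service, path) {
  const known = Object.prototype.hasOwnProperty.call(service, path);
  return known
    ? { status: 200, body: service[path]() }
    : { status: 404, body: null };
}

console.log(handle(monolith, '/api/threads').status);     // 200
console.log(handle(usersService, '/api/users').status);   // 200
console.log(handle(usersService, '/api/threads').status); // 404
```

In this sketch, a spike in requests for threads would require more copies of `monolith` in the first design, but only more copies of `threadsService` in the second.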

Prerequisites

- An AWS account: If you don't already have an account, follow the Setting Up Your AWS Environment tutorial for a quick overview.

- Install and configure the AWS CLI.

- Install and configure AWS Copilot.

- Install and configure Docker.

- A text editor. For this tutorial, we will use VS Code, but you can use your preferred IDE.

AWS experience

Intermediate

Minimum time to complete

110 minutes

Cost to complete

Free Tier eligible

Modules

This tutorial is divided into the following modules. You must complete each module before moving to the next one.

- Setup (20 minutes): In this module, you will install and configure the AWS CLI, install AWS Copilot, and install Docker.

- Containerize and deploy the monolith (30 minutes): In this module, you will containerize the application, use AWS Copilot to instantiate a managed cluster of EC2 compute instances, and deploy your image as a container running on the cluster.

- Break the monolith (20 minutes): In this module, you will break the Node.js application into several interconnected services and push each service's image to an Amazon Elastic Container Registry (Amazon ECR) repository.

- Deploy microservices (30 minutes): In this module, you will deploy your Node.js application as a set of interconnected services behind an Application Load Balancer (ALB). Then, you will use the ALB to seamlessly shift traffic from the monolith to the microservices.

- Clean up (10 minutes): In this module, you will terminate the resources you created during the tutorial. You will stop the services running on Amazon ECS, delete the ALB, and delete the AWS CloudFormation stack to terminate the Amazon ECS cluster, including all underlying EC2 instances.
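
The traffic shift described in the Deploy microservices module can be pictured as a change to the load balancer's routing rules. The sketch below is a simplified model, not the ALB API: the rule objects, the `route` function, and the target names are hypothetical, and plain prefix matching stands in for the ALB's path patterns. It relies only on the fact that listener rules are evaluated in priority order, with a default action when nothing matches.

```javascript
// Simplified model of path-based routing (hypothetical names, not the ALB API).
// Rules are checked in priority order, lowest number first; the first rule
// whose prefix matches the request path decides the target.
function route(rules, path, defaultTarget) {
  const sorted = [...rules].sort((a, b) => a.priority - b.priority);
  const match = sorted.find((rule) => path.startsWith(rule.prefix));
  return match ? match.target : defaultTarget;
}

// Before the switch: every API path is forwarded to the monolith.
const before = [{ priority: 1, prefix: '/api', target: 'monolith' }];

// After the switch: each feature's path is forwarded to its own service.
const after = [
  { priority: 1, prefix: '/api/users', target: 'users' },
  { priority: 2, prefix: '/api/threads', target: 'threads' },
  { priority: 3, prefix: '/api/posts', target: 'posts' },
];

console.log(route(before, '/api/users/1', 'monolith')); // monolith
console.log(route(after, '/api/users/1', 'monolith'));  // users
console.log(route(after, '/health', 'monolith'));       // monolith
```

Because clients keep calling the same paths and only the rule set changes, the switch does not require any client-side change, which is what makes a shift without downtime possible in this model.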