Overview

Architecture

Product video

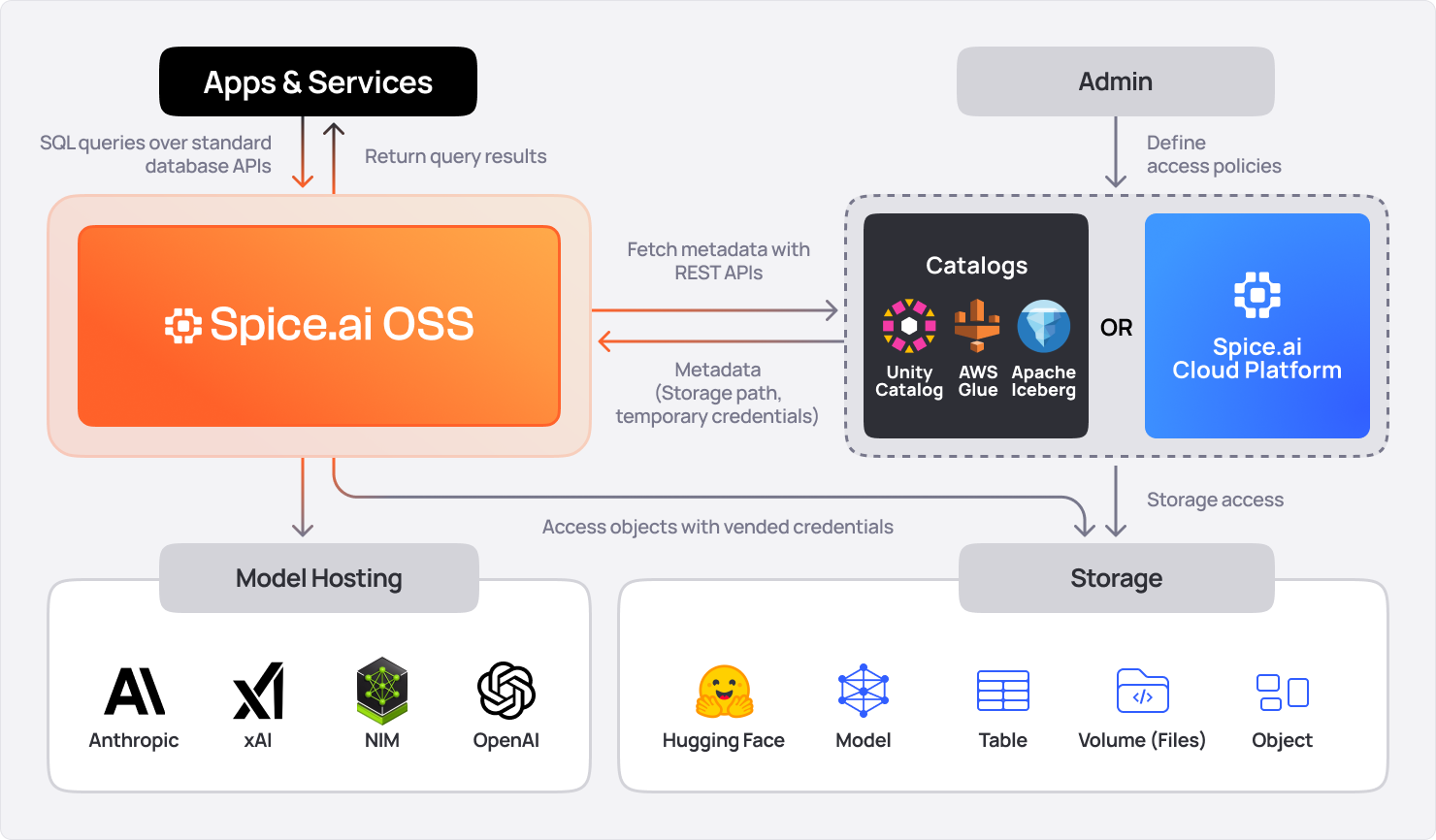

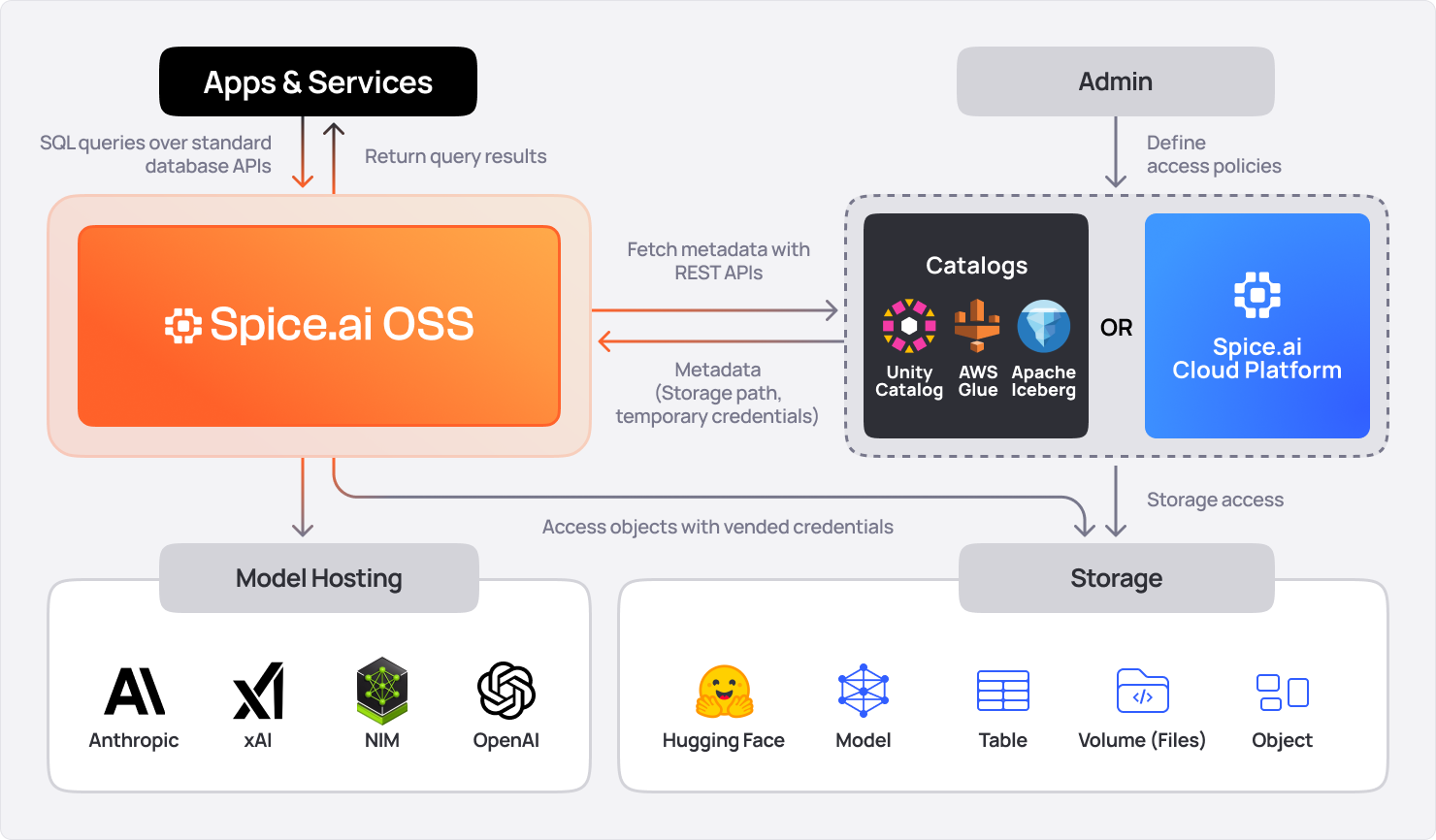

Spice.ai Enterprise is a portable (<150MB) compute engine built in Rust for data-intensive and intelligent applications. It accelerates SQL queries across databases, data warehouses, and data lakes using Apache Arrow, DataFusion, DuckDB, or SQLite. Integrated and co-deployed with data-intensive applications, Spice materializes and accelerates data from object storage, ensuring sub-second query performance and resilient AI applications. Deployable as a container on AWS ECS, EKS, or hybrid cloud & edge, it includes enterprise licensing, support, and SLAs.

Note: Spice.ai Enterprise requires an existing commercial license. For details, please contact sales@spice.ai.

Highlights

- Unified data query and AI engine accelerating SQL queries across databases, data warehouses, and data lakes. Delivers sub-second query performance while grounding mission-critical AI applications with real-time context to minimize errors and hallucinations.

- Advanced AI and retrieval tools, including vector and hybrid search, text-to-SQL, and LLM memory, enable data-grounded AI applications, while more than 25 data connectors support federated queries and real-time applications.

- Deployable as a container on AWS ECS, EKS, or on-premises, with dedicated support and SLAs for scalable, secure integration into any architecture.

Details

Pricing

Vendor refund policy

Refunds for Spice.ai Enterprise container subscriptions are not available after activation, as usage begins immediately upon deployment. Confirm compatibility with your AWS ECS, EKS, or on-premises environment before purchasing. For billing inquiries, contact AWS Marketplace support or Spice AI directly at support@spice.ai.

Legal

Vendor terms and conditions

Content disclaimer

Delivery details

Container Deployment

- Amazon ECS

- Amazon EKS

- Amazon ECS Anywhere

- Amazon EKS Anywhere

Container image

Containers are lightweight, portable execution environments that wrap server application software in a filesystem that includes everything it needs to run. Container applications run on supported container runtimes and orchestration services, such as Amazon Elastic Container Service (Amazon ECS) or Amazon Elastic Kubernetes Service (Amazon EKS). Both eliminate the need for you to install and operate your own container orchestration software by managing and scheduling containers on a scalable cluster of virtual machines.

Version release notes

Spice v1.11.1-enterprise (Feb 9, 2026)

v1.11.1-enterprise is a patch release improving Spice Cayenne accelerator reliability and performance, enhancing DynamoDB Streams and HTTP data connectors, and fixing issues in Federated Task History and FlightSQL.

What's New in v1.11.1-enterprise

Spice Cayenne Accelerator Improvements

This release includes stability and performance fixes for the Spice Cayenne accelerator:

- Row-based Deletion Logic: Refactored row-based delete operations to use per-file deletion vectors with RoaringBitmap. Deletion scans now use Vortex-native streaming with filter pushdown and project only row indices, achieving zero data I/O for delete operations.

- Constraints & On Conflict: constraints and on_conflict configurations are now automatically inferred from federated table metadata, enabling datasets like DynamoDB to work without explicitly defining primary_key in the Spicepod.

- Partitioned Table Deletion: Fixed an issue where DELETE operations on partitioned Cayenne tables failed.

- Data Integrity: Fixed two issues with acceleration snapshot handling: protected snapshots are now included in conflict detection keyset scans (preventing duplicate key creation during append refresh), and snapshot cleanup no longer deletes protected snapshots.
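The per-file deletion vectors described above can be pictured as one set of deleted row positions per data file, so a DELETE records indices instead of rewriting data. The sketch below is a simplified illustration, not Spice Cayenne's implementation: a plain Python `set` stands in for a RoaringBitmap, files are in-memory lists of rows, the predicate reads each row (whereas the release notes describe pushing the filter into a Vortex scan that projects only row indices), and the names `DeletionVectors`, `delete_where`, and `live_rows` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

Row = Dict[str, object]


@dataclass
class DeletionVectors:
    """Hypothetical per-file deletion vectors (illustration only)."""
    # Maps a data file name to the row indices deleted from it.
    # A plain set stands in for a compressed RoaringBitmap.
    by_file: Dict[str, Set[int]] = field(default_factory=dict)

    def delete_where(self, files: Dict[str, List[Row]],
                     predicate: Callable[[Row], bool]) -> int:
        """Mark matching rows as deleted; the data files are never rewritten."""
        deleted = 0
        for name, rows in files.items():
            vector = self.by_file.setdefault(name, set())
            for idx, row in enumerate(rows):
                # Only the row index is recorded, not the row contents.
                if idx not in vector and predicate(row):
                    vector.add(idx)
                    deleted += 1
        return deleted

    def live_rows(self, files: Dict[str, List[Row]]) -> List[Row]:
        """Scan every file, skipping indices present in its deletion vector."""
        live: List[Row] = []
        for name, rows in files.items():
            vector = self.by_file.get(name, set())
            live.extend(row for idx, row in enumerate(rows) if idx not in vector)
        return live


files = {"file-0": [{"id": 1}, {"id": 2}], "file-1": [{"id": 3}]}
dv = DeletionVectors()
print(dv.delete_where(files, lambda r: r["id"] % 2 == 1))  # 2
print(dv.live_rows(files))  # [{'id': 2}]
```

Keeping deletions as per-file index sets means a later compaction step can drop deleted rows file by file, and a scan only needs the small vector for each file it reads.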

Data Connector Improvements

- DynamoDB Streams: Added automatic re-bootstrapping when the stream lag exceeds DynamoDB shard retention (24h). Configurable via the new lag_exceeds_shard_retention_behavior parameter with values error (default), ready_before_load, or ready_after_load.

- HTTP Connector: HTTP responses now include a response_status column (UInt16). 4xx responses (e.g., 404 Not Found) are treated as valid, queryable data and cached normally. 5xx responses are retried with backoff and, if the error persists, returned to the user but excluded from the cache, so transient server errors do not pollute cached results.
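The HTTP connector's caching rules can be summarized as: cache 2xx and 4xx, retry 5xx, and never cache a 5xx that survives the retries. The following is a minimal sketch of that policy under stated assumptions: the cache is keyed by URL, the retry count (3) and exponential backoff starting at 0.1 s are illustrative values rather than the connector's actual settings, the `response_status` column itself is not modeled, and `fetch_with_cache` and `is_server_error` are hypothetical names.

```python
import time
from typing import Callable, Dict, Tuple

Response = Tuple[int, str]  # (HTTP status code, body)


def is_server_error(status: int) -> bool:
    return 500 <= status <= 599


def fetch_with_cache(url: str,
                     fetch: Callable[[str], Response],
                     cache: Dict[str, Response],
                     max_retries: int = 3,
                     base_delay: float = 0.1,
                     sleep: Callable[[float], None] = time.sleep) -> Response:
    """Fetch `url`, caching 2xx/4xx and never caching a persistent 5xx."""
    if url in cache:
        return cache[url]
    status, body = fetch(url)
    # Retry only 5xx responses, doubling the delay on each attempt.
    for attempt in range(max_retries):
        if not is_server_error(status):
            break
        sleep(base_delay * 2 ** attempt)
        status, body = fetch(url)
    if not is_server_error(status):
        # 2xx and 4xx (e.g. 404 Not Found) are valid, queryable data: cache them.
        cache[url] = (status, body)
    # A persistent 5xx is still returned to the caller, but never cached.
    return status, body
```

Passing `fetch` and `sleep` as parameters keeps the policy testable without a network; a real client would plug in an HTTP library call for `fetch`.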

Other Improvements

- Reliability: Added retries for SnapshotManager operations and general snapshot reliability improvements.

- Reliability: Fixed handling of timestamp precision mismatches in query result caching.

- Reliability: Fixed a double projection issue in federated task history queries that caused Schema error: project index out of bounds errors in cluster mode.

- Developer Experience: Added cookie middleware support to the FlightSQL data connector.

Contributors

Breaking Changes

No breaking changes.

Cookbook Updates

No major cookbook updates. The Spice Cookbook includes 86 recipes to help you get started with Spice quickly and easily.

Upgrading

To upgrade to v1.11.1-enterprise, use one of the following methods:

CLI:

spice upgrade

Homebrew:

brew upgrade spiceai/spiceai/spice

Docker:

Pull the spiceai/spiceai:1.11.1 image:

docker pull spiceai/spiceai:1.11.1

For available tags, see DockerHub.

Helm:

helm repo update
helm upgrade spiceai spiceai/spiceai --version 1.11.1

Additional details

Usage instructions

Prerequisites

Ensure the following tools and resources are ready before starting:

- Docker: Install from https://docs.docker.com/get-docker/ .

- AWS CLI: Install from https://docs.aws.amazon.com/cli/latest/userguide/getting-started-install.html .

- AWS ECR Access: Authenticate to the AWS Marketplace registry: aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin 709825985650.dkr.ecr.us-east-1.amazonaws.com

- Spicepod Configuration: Prepare a spicepod.yaml file in your working directory. A Spicepod is a YAML manifest that configures which components (for example, datasets) are loaded. Refer to https://spiceai.org/docs/getting-started/spicepods for details.

- AWS ECS Prerequisites (for ECS deployment):
  - An ECS cluster (Fargate or EC2) configured in your AWS account.
  - An IAM role for ECS task execution (e.g., ecsTaskExecutionRole) with permissions for ECR, CloudWatch, and any other required services.
  - A VPC with subnets and a security group that allows inbound traffic on ports 8090 (HTTP) and 50051 (Flight).

Running the Container

- Ensure the spicepod.yaml is in the current directory (e.g., ./spicepod.yaml).

- Launch the container, mounting the current directory to /app and exposing HTTP and Flight endpoints externally:

docker run --name spiceai-enterprise \
  -v "$(pwd)":/app \
  -p 50051:50051 \
  -p 8090:8090 \
  709825985650.dkr.ecr.us-east-1.amazonaws.com/spice-ai/spiceai-enterprise-byol:1.11.1-enterprise-models \
  --http 0.0.0.0:8090 \
  --flight 0.0.0.0:50051

- The -v $(pwd):/app mounts the current directory to /app, where spicepod.yaml is expected.

- The --http and --flight flags set endpoints to listen on 0.0.0.0, allowing external access (default is 127.0.0.1).

- Ports 8090 (HTTP) and 50051 (Flight) are mapped for external access.
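When the container is started from a script or test harness rather than by hand, the same `docker run` invocation can be built as an argument list. This is a sketch that assumes Python 3.8+ (for `shlex.join`) and Docker on the `PATH`; the helper names `docker_run_args` and `launch` are hypothetical. Defining the arguments as a list avoids shell-quoting problems with paths that contain spaces.

```python
import os
import shlex
import subprocess
from typing import List

# Image reference from the usage instructions on this page.
IMAGE = ("709825985650.dkr.ecr.us-east-1.amazonaws.com/"
         "spice-ai/spiceai-enterprise-byol:1.11.1-enterprise-models")


def docker_run_args(workdir: str,
                    http_port: int = 8090,
                    flight_port: int = 50051,
                    name: str = "spiceai-enterprise") -> List[str]:
    """Build the `docker run` argv; arguments after the image go to the Spice runtime."""
    return [
        "docker", "run", "--name", name,
        "-v", f"{workdir}:/app",          # spicepod.yaml is expected in /app
        "-p", f"{flight_port}:50051",     # host port -> container Flight port
        "-p", f"{http_port}:8090",        # host port -> container HTTP port
        IMAGE,
        "--http", "0.0.0.0:8090",         # listen on all interfaces in the container
        "--flight", "0.0.0.0:50051",
    ]


def launch(workdir: str) -> None:
    """Start the container in the foreground; raises CalledProcessError on failure."""
    subprocess.run(docker_run_args(workdir), check=True)


# Print the equivalent shell command for the current directory.
print(shlex.join(docker_run_args(os.getcwd())))
```

Only the host side of each `-p` mapping is parameterized; the runtime inside the container keeps listening on 8090 and 50051 as in the command above.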

Verify and Monitor the Container

- Confirm the container is running:

docker ps

Look for spiceai-enterprise with a STATUS of Up.

- Inspect logs for troubleshooting:

docker logs spiceai-enterprise
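Beyond `docker ps`, a script can wait until the runtime answers on its HTTP port before sending queries. The sketch below assumes the runtime serves a `/health` endpoint on port 8090 that returns HTTP 200 when ready; confirm the exact path in the Spice HTTP API reference. The helper names `http_ok` and `wait_until_healthy`, and the 30-attempt, 1-second polling schedule, are illustrative choices.

```python
import time
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET to `url` answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError (e.g. 503), refused connections and timeouts
        # are all OSError subclasses: treat them as "not healthy yet".
        return False


def wait_until_healthy(url: str = "http://localhost:8090/health",  # assumed endpoint
                       attempts: int = 30,
                       interval: float = 1.0,
                       probe: Callable[[str], bool] = http_ok,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll `url` until `probe` succeeds, giving up after `attempts` tries."""
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(interval)
    return False
```

Calling `wait_until_healthy()` right after starting the container returns `False` if the endpoint never comes up, at which point `docker logs spiceai-enterprise` is the next place to look.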

Deploying to AWS ECS

Create an ECS task definition and use this value for the image: 709825985650.dkr.ecr.us-east-1.amazonaws.com/spice-ai/spiceai-enterprise-byol:1.11.1-enterprise-models. Configure port mappings for the HTTP and Flight ports (8090 and 50051).

Override the command to expose the HTTP and Flight endpoints publicly and to point the runtime at the Spicepod configuration hosted on S3:

"command": [ "--http", "0.0.0.0:8090", "--flight", "0.0.0.0:50051", "s3://your_bucket/path/to/spicepod.yaml" ]
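Rather than hand-editing JSON, the task definition can be generated and its output redirected to the `spiceai-task-definition.json` file passed to `aws ecs register-task-definition`. This is a sketch under several assumptions: the Fargate launch type, 1 vCPU / 4 GiB sizing, the container name `spiceai`, and the example role ARN (with a placeholder account ID) are all illustrative. The fields themselves are standard ECS task definition parameters. Reading the Spicepod from S3 at runtime will likely also need a task role (`taskRoleArn`) with read access to that object, and a `logConfiguration` for CloudWatch; both are omitted here.

```python
import json

# Image reference from the ECS instructions on this page.
IMAGE = ("709825985650.dkr.ecr.us-east-1.amazonaws.com/"
         "spice-ai/spiceai-enterprise-byol:1.11.1-enterprise-models")


def build_task_definition(execution_role_arn: str,
                          spicepod_uri: str,
                          family: str = "spiceai-enterprise",
                          cpu: str = "1024",
                          memory: str = "4096") -> dict:
    """Return an ECS task definition dict for a Fargate deployment (sketch)."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",            # required by Fargate
        "cpu": cpu,                         # 1024 CPU units = 1 vCPU
        "memory": memory,                   # in MiB, so 4096 = 4 GiB
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [
            {
                "name": "spiceai",
                "image": IMAGE,
                "essential": True,
                "portMappings": [
                    {"containerPort": 8090, "protocol": "tcp"},   # HTTP
                    {"containerPort": 50051, "protocol": "tcp"},  # Arrow Flight
                ],
                # The command override shown above, with the Spicepod on S3.
                "command": ["--http", "0.0.0.0:8090",
                            "--flight", "0.0.0.0:50051",
                            spicepod_uri],
            }
        ],
    }


print(json.dumps(build_task_definition(
    "arn:aws:iam::123456789012:role/ecsTaskExecutionRole",  # hypothetical account ID
    "s3://your_bucket/path/to/spicepod.yaml",
), indent=2))
```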

Register the task definition in your AWS account, for example:

aws ecs register-task-definition --cli-input-json file://spiceai-task-definition.json --region us-east-1

Then run the task as you normally would in ECS.

Resources

Vendor resources

Support

Vendor support

Spice.ai Enterprise includes 24/7 support through a dedicated Slack/Teams channel, priority email, and ticketing, so that critical issues are addressed according to the Enterprise SLA.

Detailed enterprise support information is available in the Support Policy & SLA document provided at onboarding.

For general support, please email support@spice.ai .

AWS infrastructure support

AWS Support is a one-on-one, fast-response support channel that is staffed 24x7x365 with experienced and technical support engineers. The service helps customers of all sizes and technical abilities to successfully utilize the products and features provided by Amazon Web Services.
