Overview

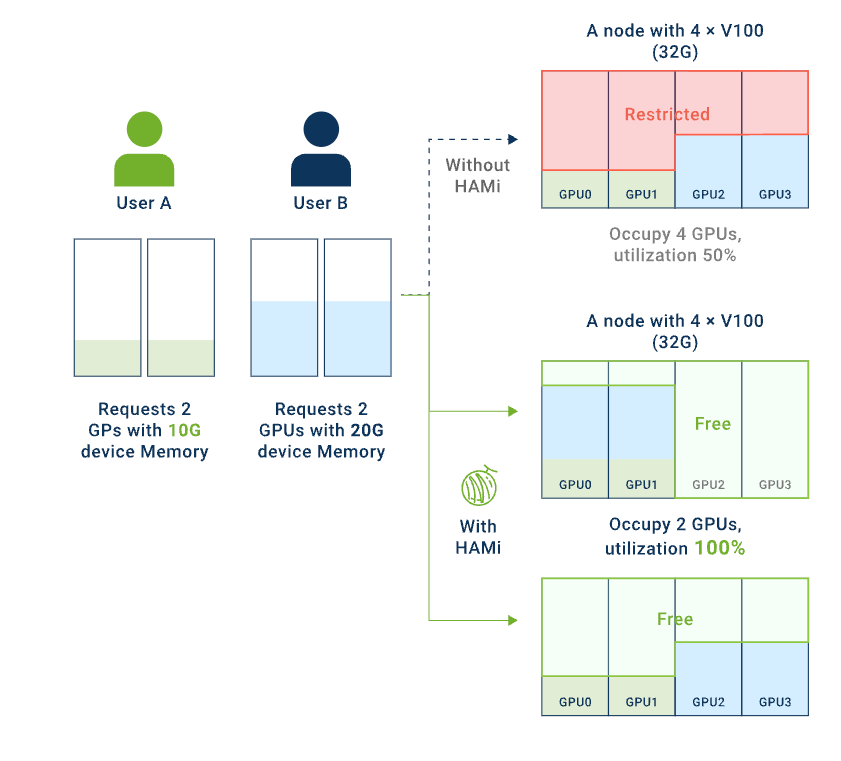

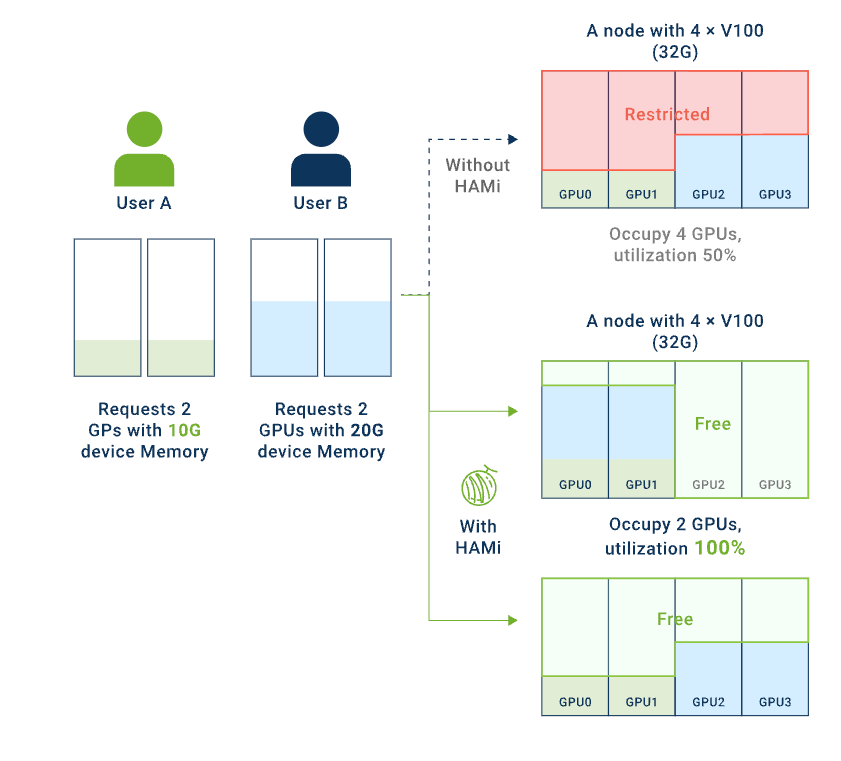

GPU share

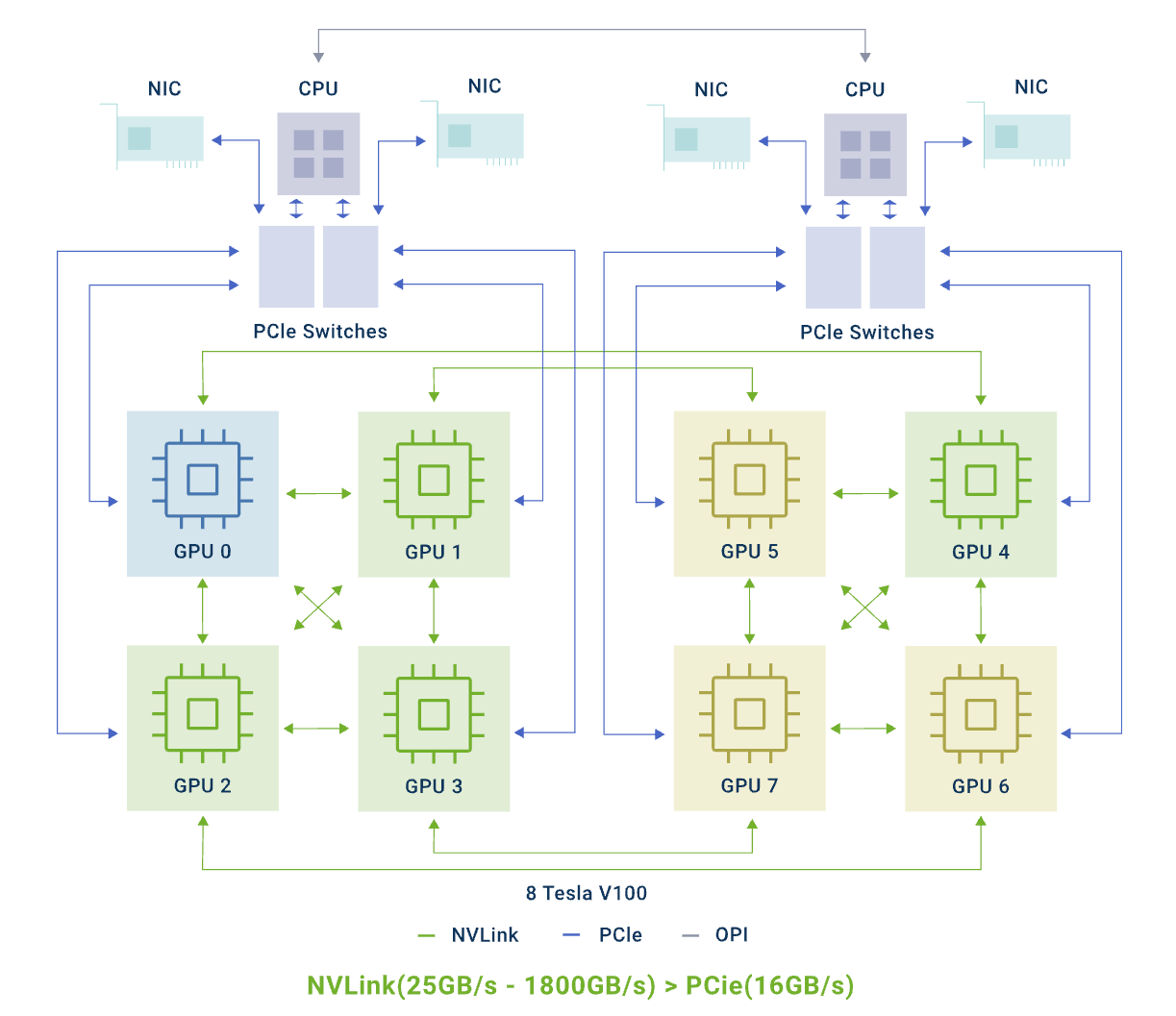

Topology-aware scheduling

Dynamia AI Platform brings enterprise-grade GPU virtualization and AI-aware scheduling to Amazon EKS, and includes an integrated web console for multi-cluster dashboards, inventory, and policy/governance.

GPU & scheduling capabilities

- Fractional sharing with hard limits: per-pod SM/compute throttling and VRAM caps (MB or %), preventing noisy neighbors.

- VRAM overcommit with guardrails: increase cluster-level utilization while honoring per-pod safety limits.

- Live VRAM vertical scaling: adjust a running pod's GPU memory without restarts for many inference workloads.

- AI-purpose scheduling: binpack/spread, target by GPU model/UUID, NUMA/NVLink awareness, namespace/tenant GPU quotas; optional gang scheduling & preemption via integrations (e.g., Volcano/Koordinator).
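
To make the fractional-sharing bullets concrete, here is a minimal sketch of a pod manifest that requests a slice of one GPU with a VRAM cap and a compute cap. The resource names (`nvidia.com/gpu`, `nvidia.com/gpumem`, `nvidia.com/gpucores`) are taken from the open-source HAMi project and are an assumption here; this listing does not state which names the Dynamia AI Platform expects. The helper `fractional_gpu_pod`, the pod name, and the image are hypothetical.

```python
import json


def fractional_gpu_pod(name, image, gpu_mem_mb, gpu_cores_pct, gpus=1):
    """Build a Pod manifest (as a dict) that asks for a fraction of a GPU.

    ASSUMPTION: resource names follow the open-source HAMi convention;
    check the vendor documentation for the names your installation uses.
    """
    if gpu_mem_mb <= 0:
        raise ValueError("gpu_mem_mb must be positive")
    if not 0 < gpu_cores_pct <= 100:
        raise ValueError("gpu_cores_pct must be in the range (0, 100]")
    if gpus < 1:
        raise ValueError("gpus must be at least 1")

    limits = {
        "nvidia.com/gpu": str(gpus),               # number of (virtual) GPUs
        "nvidia.com/gpumem": str(gpu_mem_mb),      # VRAM cap in MB (assumed name)
        "nvidia.com/gpucores": str(gpu_cores_pct), # compute cap in % (assumed name)
    }
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {"limits": limits},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical workload: 4 GB of VRAM and 30% of the compute of one GPU.
    pod = fractional_gpu_pod("inference-demo", "example.com/inference:latest", 4096, 30)
    print(json.dumps(pod, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts JSON manifests as well as YAML.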

Web console (single control plane)

- Overview dashboard: multi-cluster posture, utilization, allocation, hot spots, and SLA risk hints.

- Cluster dashboards: per-cluster health, GPU usage, allocation vs. requests, saturation trends.

- Inventories: nodes list & detail, GPUs list & detail (model/UUID/topology/health), and workloads list & detail with GPU limits/actuals.

- Governance & ops: quota management (tenant/project/namespace), storage management (volumes, classes, usage), policy enforcement and basic audit trail.

- Observability: built-in DCGM metrics and prebuilt Grafana dashboards; alerting hooks.

Integrations & compatibility

- Kubernetes-native (Helm/EKS add-on); no app changes required.

- Works with vLLM Production Stack, SGLang, TensorRT-LLM, JupyterHub, Volcano/Koordinator.

- Supports NVIDIA GPUs on Amazon EKS; optional MIG awareness; RBAC and LTS release channel.

Outcomes

- Higher GPU utilization with fewer VRAM-related failures, clearer multi-tenant controls, and faster, safer rollout of AI training and inference services.

Highlights

- Enterprise GPU virtualization for EKS -- fractional sharing with strict SM/compute & VRAM limits, VRAM overcommit, and live per-pod VRAM scaling to maximize utilization without refactoring apps.

- AI-purpose scheduling & quotas -- binpack/spread placement, model/UUID targeting, NUMA/NVLink awareness, and tenant/namespace GPU quotas; optional gang scheduling & preemption via integrations.

- Full web console & governance -- global & cluster dashboards, node/GPU/workload inventories, storage and quota management.

Details

Pricing

| Dimension | Description | Cost/month |
|---|---|---|
| NVIDIA T4 | One NVIDIA T4 GPU, for g4 and g5 instances. | $133.00 |
| NVIDIA L4 | One NVIDIA L4 GPU, for g6 instances. | $133.00 |
| NVIDIA A10G | One NVIDIA A10G GPU, for g5 instances. | $133.00 |
| NVIDIA A100-SXM4-40GB | One NVIDIA A100-SXM4-40GB, for p4d instances. | $216.00 |
| NVIDIA A100-SXM4-80GB | One NVIDIA A100-SXM4-80GB, for p4de instances. | $216.00 |
| NVIDIA L40S | One NVIDIA L40S, for g6e instances. | $133.00 |
| NVIDIA H100 | One NVIDIA H100, for p5 instances. | $216.00 |
| NVIDIA H200 | One NVIDIA H200, for p5e instances. | $216.00 |
| NVIDIA B200 | One NVIDIA B200, for p6 instances. | $216.00 |
| AWS Neuron | One AWS Neuron chip, for trn1 instances. | $133.00 |
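
As a rough illustration of how the per-dimension prices add up, the sketch below estimates a monthly software charge for a mixed fleet. It assumes one billed unit per accelerator per full month, with no proration, and it excludes the underlying EC2 and EKS charges; the listing does not spell out the metering rules, so treat the result as an estimate. The function name `monthly_cost` is hypothetical.

```python
from decimal import Decimal

# Prices copied from the pricing table (USD per accelerator per month).
MONTHLY_PRICE_USD = {
    "NVIDIA T4": Decimal("133.00"),
    "NVIDIA L4": Decimal("133.00"),
    "NVIDIA A10G": Decimal("133.00"),
    "NVIDIA A100-SXM4-40GB": Decimal("216.00"),
    "NVIDIA A100-SXM4-80GB": Decimal("216.00"),
    "NVIDIA L40S": Decimal("133.00"),
    "NVIDIA H100": Decimal("216.00"),
    "NVIDIA H200": Decimal("216.00"),
    "NVIDIA B200": Decimal("216.00"),
    "AWS Neuron": Decimal("133.00"),
}


def monthly_cost(accelerator_counts):
    """Sum the listed monthly price over a {dimension: count} mapping.

    ASSUMPTION: flat per-accelerator monthly billing, no partial months.
    """
    total = Decimal("0")
    for dimension, count in accelerator_counts.items():
        if dimension not in MONTHLY_PRICE_USD:
            raise KeyError(f"unknown pricing dimension: {dimension}")
        if count < 0:
            raise ValueError("accelerator counts must be non-negative")
        total += MONTHLY_PRICE_USD[dimension] * count
    return total


if __name__ == "__main__":
    # Hypothetical fleet: 4 x A10G and 8 x H100.
    fleet = {"NVIDIA A10G": 4, "NVIDIA H100": 8}
    print(f"Estimated software cost: ${monthly_cost(fleet)} per month")
```

For the hypothetical fleet above, the arithmetic is 4 x $133.00 + 8 x $216.00 = $2,260.00 per month.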

Vendor refund policy

Refunds are handled according to AWS Marketplace policies. Buyers may request a refund within 30 days of purchase if the subscription was not activated or deployment failed due to a product issue. No pro-rata refunds are offered after activation, except where required by law. Please contact info@dynamia.ai with your AWS account ID and order ID for assistance.

Delivery details

dynamia ai v1

- Amazon EKS

Helm chart

Helm charts are Kubernetes YAML manifests combined into a single package that can be installed on Kubernetes clusters. The containerized application is deployed on a cluster by running a single Helm install command to install the seller-provided Helm chart.

Version release notes

Fixed an issue where HAMi extended resource quotas did not take effect.

Additional details

Usage instructions

Follow these instructions to deploy the Dynamia AI Platform in your cluster:

Support

Vendor support

Email: info@dynamia.ai

Docs & tickets: https://dynamia.ai

SLA: P1 24x7 (1-hour response); P2 8x5 (next business day).

Enterprise services include onboarding, architecture reviews, and LTS updates via Helm/EKS add-on.

AWS infrastructure support

AWS Support is a one-on-one, fast-response support channel that is staffed 24x7x365 with experienced and technical support engineers. The service helps customers of all sizes and technical abilities to successfully utilize the products and features provided by Amazon Web Services.
