
Simulation World Assets

We have created additional environments you can use with your robots. You can use them to test facial recognition, navigation, obstacle avoidance, and machine learning, and you can modify them for your own scenarios.

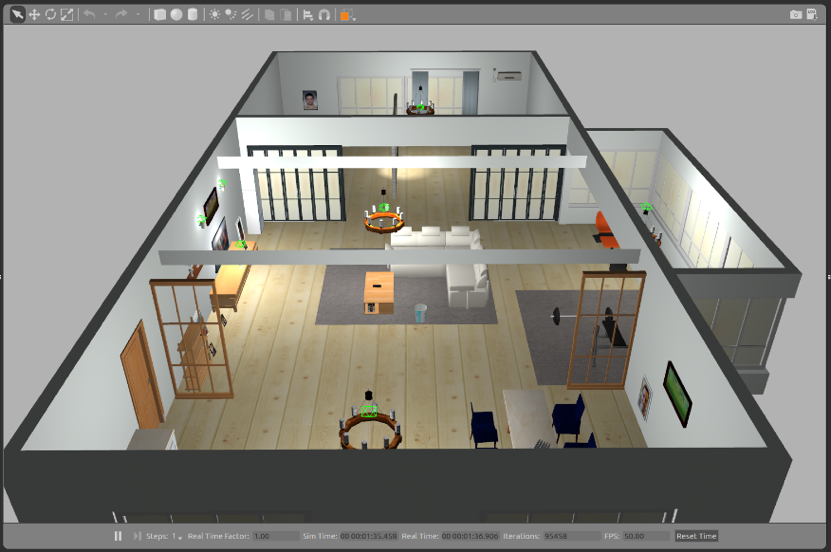

WorldForge House Worlds

AWS RoboMaker WorldForge supports the automatic generation of a multitude of indoor home environments, complete with configurable floor plans and furnishings.

Small House World

Additionally, this simple home world is available for your use. It provides a small house with a kitchen, living room, home gym, and pictures you can customize to test image recognition. There are plenty of obstacles for your robot to navigate.

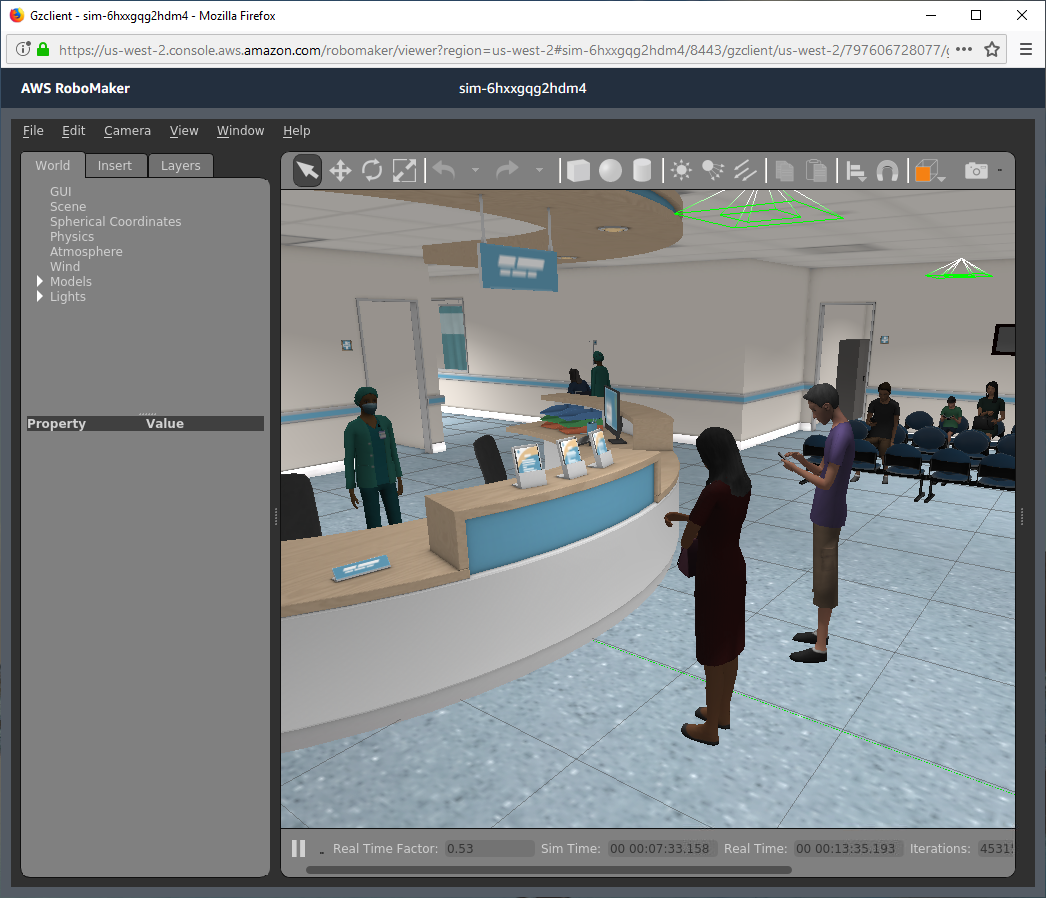

Hospital World

A large hospital world with a front desk and waiting room, exam rooms, patient rooms, storage, and a staff break room.

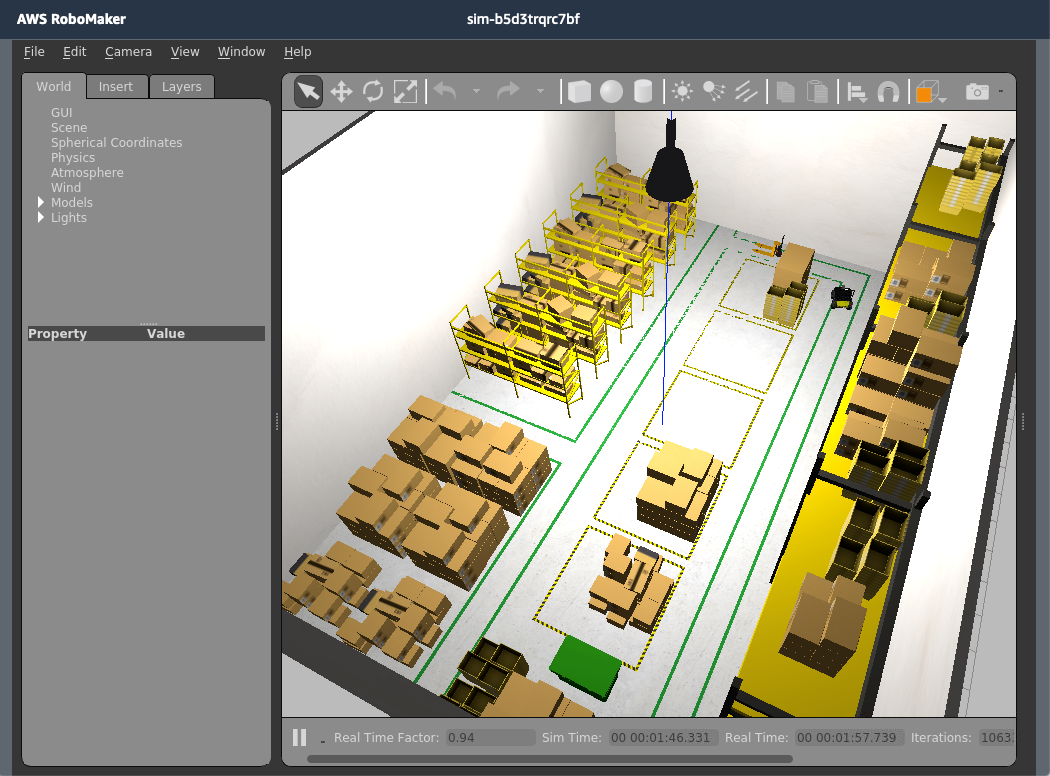

Small Warehouse World

A small warehouse world for testing your robotics applications for warehouse and logistics use cases.

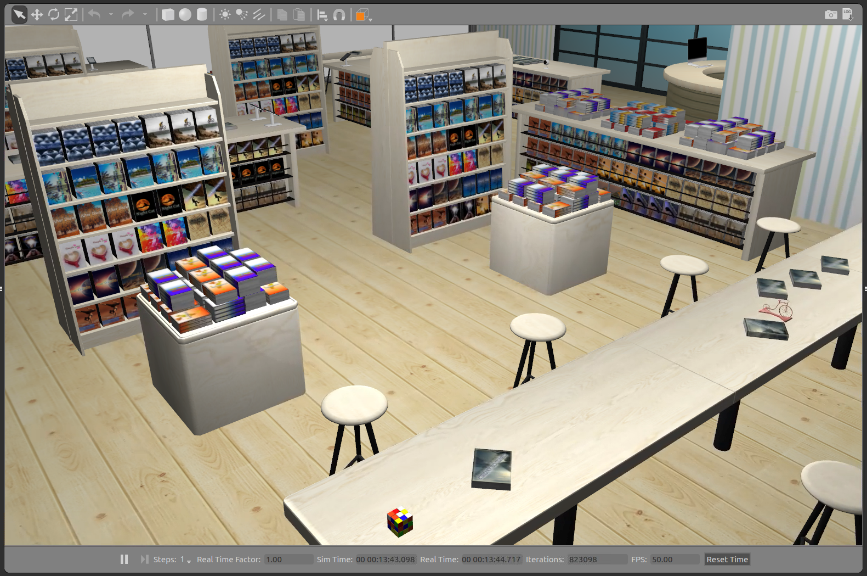

Bookstore

Navigate among shelves of books in this simulated bookstore. It includes obstacles such as chairs and tables for your robot to navigate around.

Sample Applications

AWS RoboMaker includes sample robotics applications to help you get started quickly. These provide the starting point for the voice command, recognition, monitoring, and fleet management capabilities that are typically required for intelligent robotics applications. Sample applications come with robotics application code (instructions for the functionality of your robot) and simulation application code (defining the environment in which your simulations will run). You can get started with the samples here.

Launch in RoboMaker

Hello world

Learn the basics of how to structure your robot applications and simulation applications, edit code, build, launch new simulations, and deploy applications to robots. Start from a basic project template including a robot in an empty simulation world.

Navigation

Navigate a robot to designated locations in a simulated bookstore world. Learn how to procedurally generate an occupancy map for any Gazebo world using a map generation plugin.
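Once an occupancy map exists, sending the robot to a designated location is a path-planning problem on that grid. The following is a minimal sketch, assuming the map is already loaded as a 2-D list that follows the `nav_msgs/OccupancyGrid` cell convention (0 free, 100 occupied, -1 unknown). It uses plain breadth-first search as a stand-in for the planner in the sample; `plan_path` and the toy map are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col) index into the grid

FREE = 0  # nav_msgs/OccupancyGrid convention: 0 = free, 100 = occupied, -1 = unknown


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path over free cells via breadth-first search.

    Occupied (100) and unknown (-1) cells are both treated as blocked.
    Returns None when the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Follow parent links back to the start, then reverse.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == FREE and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


# Hypothetical 4x5 map: a row of shelves (100) with one gap, plus an unknown cell (-1).
grid = [
    [0, 0, 0, 0, 0],
    [100, 100, 100, 100, 0],
    [0, 0, 0, 0, 0],
    [0, 0, -1, 0, 0],
]
path = plan_path(grid, (0, 0), (2, 0))
print(path)
```

Breadth-first search finds the route with the fewest cells but ignores clearance from obstacles. This is why ROS navigation stacks plan over an inflated costmap instead of the raw grid.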

Launch in GitHub

Simulation launcher

Launch batch simulations in AWS RoboMaker with AWS CodePipeline and AWS Step Functions. Learn more in the code repository.

Robot navigation

Create a map and navigate the robot to a designated location in the RoboMaker simulator. Learn more in the code repository.

Reinforcement learning

Escape from a maze world by training a reinforcement learning model on AWS RoboMaker. Learn more in the code repository.
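The core idea of learning to escape a maze can be shown at toy scale without a simulator. The following is a minimal sketch using tabular Q-learning on a hypothetical 3x3 grid maze in plain Python. It is a stand-in for illustration, not the algorithm, framework, or reward function used by the sample. `MAZE`, `step`, `train`, `greedy_path`, and all hyperparameters are assumptions.

```python
import random
from typing import Dict, List, Tuple

State = Tuple[int, int]
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right

# Hypothetical maze: S = start, G = goal (the exit), # = wall, . = free.
MAZE = [
    "S.#",
    ".##",
    "..G",
]


def find(ch: str) -> State:
    for r, row in enumerate(MAZE):
        c = row.find(ch)
        if c != -1:
            return (r, c)
    raise ValueError(ch)


START, GOAL = find("S"), find("G")


def step(state: State, a: int) -> Tuple[State, float, bool]:
    """Apply action a; bumping into a wall or the border leaves the robot in place."""
    dr, dc = ACTIONS[a]
    r, c = state[0] + dr, state[1] + dc
    if not (0 <= r < len(MAZE) and 0 <= c < len(MAZE[0])) or MAZE[r][c] == "#":
        r, c = state
    nxt = (r, c)
    return nxt, -1.0, nxt == GOAL  # -1 per move, so shorter escapes score higher


def train(episodes=500, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0) -> Dict[State, List[float]]:
    rng = random.Random(seed)
    q: Dict[State, List[float]] = {}
    for _ in range(episodes):
        s = START
        for _ in range(100):  # cap episode length
            qs = q.setdefault(s, [0.0] * len(ACTIONS))
            # Epsilon-greedy: explore with probability epsilon, else exploit.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=qs.__getitem__)
            s2, reward, done = step(s, a)
            # The goal is terminal, so it contributes no future value.
            future = 0.0 if done else max(q.setdefault(s2, [0.0] * len(ACTIONS)))
            qs[a] += alpha * (reward + gamma * future - qs[a])
            s = s2
            if done:
                break
    return q


def greedy_path(q: Dict[State, List[float]], max_steps: int = 20) -> List[State]:
    """Follow the highest-valued action from START until the exit (or a step cap)."""
    s, path = START, [START]
    while s != GOAL and len(path) <= max_steps:
        a = max(range(len(ACTIONS)), key=q[s].__getitem__)
        s, _, _ = step(s, a)
        path.append(s)
    return path


q = train()
print(greedy_path(q))
```

Initializing every Q-value to 0 while each move costs -1 makes untried actions look attractive. As a result, the greedy policy explores systematically even before epsilon-driven random moves kick in.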

End-to-end robotics application

This sample application demonstrates an end-to-end robotics system with the Open Source Rover from NASA JPL. It includes a URDF file modeled after the popular open source project. Learn more in the code repository.

Multi robot fleet simulation

Learn how to spin up a fleet of robots in a Gazebo simulation to develop and test applications such as path planners and fleet management tools.

Learn more in the code repository.

Robot monitoring

Monitor health and operational metrics for a robot in a simulated bookstore using Amazon CloudWatch Metrics and Amazon CloudWatch Logs. Learn more in the code repository.
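Health metrics usually start from raw sensor data. The following is a minimal sketch showing how a laser scan could be reduced to a single "nearest obstacle" distance and shaped as a CloudWatch `MetricDatum` dictionary (the structure that `PutMetricData` accepts). It assumes the scan arrives as a list of ranges with `sensor_msgs/LaserScan`-style validity limits. The metric name, the `RobotName` dimension, and both helper functions are hypothetical. The sample may compute and publish its metrics differently, for example through the ROS CloudWatch extension rather than direct API calls.

```python
import math
from typing import Dict, Optional, Sequence


def nearest_obstacle(ranges: Sequence[float], range_min: float, range_max: float) -> float:
    """Smallest valid reading in a scan.

    Following LaserScan semantics, readings outside [range_min, range_max]
    are invalid; NaN and inf fail the comparison and are dropped too.
    Returns math.inf when nothing valid is in view.
    """
    valid = [r for r in ranges if range_min <= r <= range_max]
    return min(valid, default=math.inf)


def to_metric_datum(robot: str, name: str, value: float) -> Optional[Dict]:
    """Shape a reading like a CloudWatch MetricDatum, or None if it cannot be sent."""
    if not math.isfinite(value):
        return None  # CloudWatch does not accept NaN or +/-Infinity values
    return {
        "MetricName": name,
        "Dimensions": [{"Name": "RobotName", "Value": robot}],
        "Value": value,
        "Unit": "None",  # CloudWatch has no length unit, so distance is unitless
    }


scan = [float("inf"), 2.5, 0.01, float("nan"), 1.2]
distance = nearest_obstacle(scan, range_min=0.12, range_max=3.5)
datum = to_metric_datum("bookstore-robot", "nearest_obstacle_distance", distance)
print(datum)
```

Dropping non-finite values before publishing keeps a robot that sees nothing in range from producing rejected metric requests.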

ROS Extensions

RoboMaker cloud extensions for ROS include services such as Amazon Kinesis Video Streams for video streaming, Amazon Rekognition for image and video analysis, Amazon Lex for speech recognition, Amazon Polly for speech generation, and Amazon CloudWatch for logging and monitoring. RoboMaker provides each of these cloud services as open source ROS packages, so you can extend the functions on your robot by taking advantage of cloud APIs, all in a familiar software framework.

Learn more about each of the cloud service extensions in the code repository.

ROS1 Cloud Extensions

Hardware developer kits

Building robots and adding advanced functionality requires developers to make many choices. To remove uncertainty and speed development, AWS partners have created a number of robotics development kits that include complete hardware solutions, pre-installed software, and extensive documentation and tutorials.

Intel – UP Squared RoboMaker Developer Kit

The UP Squared RoboMaker Developer Kit is the easiest way to get started with your robotics project powered by AWS RoboMaker. It's a starter package designed to be a fast and easy way for developers to add artificial intelligence (AI) and vision to their robots. This kit provides a clear tutorial on how to build hardware from the module level and how to use cloud services to shorten development time. Developers have been able to add machine vision to their robots within a single day and build working robotics demos in just a few days. With expertise from Intel, AWS, and AAEON, this kit aims to provide developers a path from prototype to field deployment.

This kit features an UP Squared board with an Intel® Atom™ x7-E3950 processor, an Intel® RealSense™ D435i camera, and an Intel® Movidius™ Myriad™ X VPU. It is fully compatible with AWS RoboMaker cloud services and extends the open-source robotics software framework, Robot Operating System (ROS).

Learn about the UP Squared RoboMaker kit and order today

Learn more about the partnerships with Intel and AAEON


NVIDIA – JetBot AI Kit Featuring ROS & AWS RoboMaker

NVIDIA accelerates robotics development from cloud to edge with AWS RoboMaker. Robotics simulation and development can now be done in the cloud and deployed across millions of robots and other autonomous machines powered by Jetson. This includes NVIDIA's open source reference platform, JetBot, powered by the Jetson Nano. JetBot is easy to set up and use, is compatible with many accessories, and includes interactive tutorials showing you how to harness the power of AI to follow objects, avoid collisions, and more. The JetBot AI Kit powered by NVIDIA and featuring ROS and AWS RoboMaker includes the board, a complete robot chassis, wheels, and controllers, along with a battery and an 8MP camera. Extensive documentation accompanies the kit.

Learn about the JetBot kit and order now

Learn more about the partnership with NVIDIA.

Qualcomm – Robotics RB3 Platform with integrated support for AWS RoboMaker

Qualcomm Technologies' support of Amazon Web Services' AWS RoboMaker is helping to transform innovation in robotics. The Qualcomm Robotics RB3 platform offers high-performance heterogeneous computing, on-device machine learning and computer vision, high-fidelity sensor processing for perception, odometry for localization, mapping, and navigation, and 4G LTE and Wi-Fi connectivity. Together, these give developers the tools to build robots that can accelerate innovation, revolutionize logistics, and enhance our daily lives. The Qualcomm Robotics RB3 development kit's integrated support for AWS RoboMaker helps developers build, test, and deploy intelligent robotics applications at scale, and provides an edge-to-cloud solution that makes building such applications more accessible.

Learn more about the Qualcomm Robotics RB3 kit and buy now

Learn about Qualcomm's commitment to robotics innovation

Developer documentation and an extensive step-by-step guide are available here: https://developer.qualcomm.com/project/aws-robomaker-rb3