If you've recently chatted on a messaging app with an online business, chances are your conversation was powered by Intercom.

Intercom offers a suite of messaging-first products that companies can integrate seamlessly with their websites and mobile apps to help them acquire, engage, and support customers. About 30,000 enterprises—including Sotheby's, New Relic, and Shopify—use Intercom products for more than 500 million monthly conversations among a billion individuals worldwide. The company raised $125 million in its 2018 Series D financing round.

Intercom credits Amazon Web Services (AWS) as a key enabler of this success. "We've been all in on AWS since our founding to reduce our undifferentiated heavy lifting and keep focused on what we do best," says Rob Clancy, engineering manager for Intercom.

To free up more resources for product development, innovation, and support, Intercom started exploring AWS serverless computing. The first stage involved augmenting traditional cloud architectures with AWS Lambda, a serverless computing service that runs code in response to events.

Next, Intercom deployed two mission-critical solutions built entirely on AWS serverless architectures, one to manage billing and the other to prevent outages resulting from errors in customers’ integration code. Intercom saw discrete benefits from each solution, but it was the larger lessons learned that drove home how important serverless computing will be for the company’s long-term strategy.

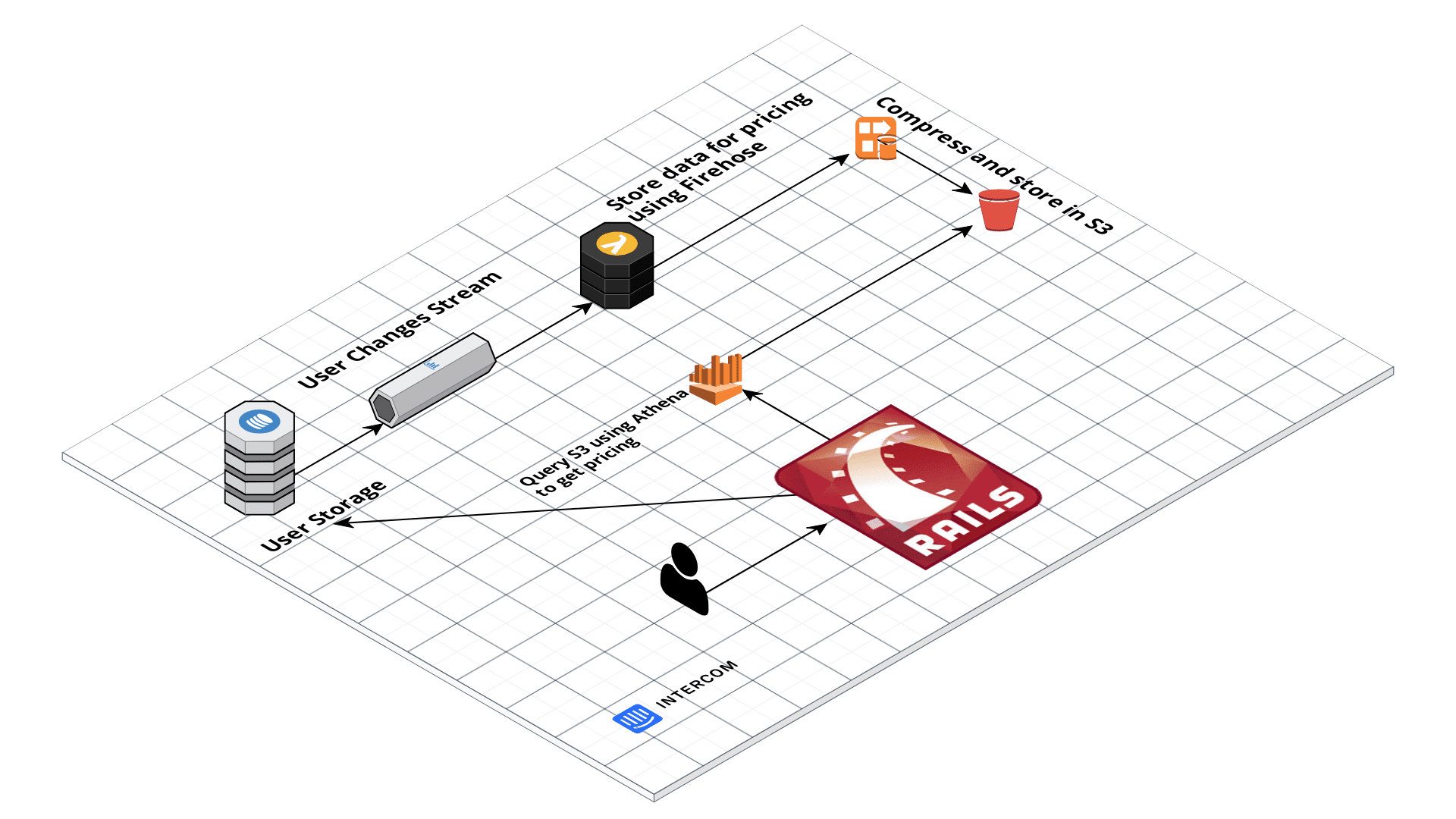

What Clancy calls Intercom's "first big play" with serverless architectures came when engineers needed to update business logic in the company's billing system, which was then based on self-hosted Elasticsearch. The team eventually chose a serverless solution based on Amazon Athena and Amazon Kinesis Data Firehose that reduced costs by about 90 percent, improved scalability, and saved about 800 maintenance hours a year.

This project gave the Intercom engineering team confidence in using serverless solutions even for critical systems with high security requirements. "If you're running your billing system on a serverless architecture, you must really trust it," says Pratik Bothra, a senior engineer for Intercom.

Intercom billing system architecture

Intercom engineers next turned to serverless computing to solve the problem of what Clancy describes as "misbehaving integrations." Intercom had moved its user account data storage from self-hosted infrastructure to Amazon DynamoDB. This shift improved scalability, reduced costs, and simplified maintenance. It also created a new need: detecting and shutting down customer integrations whose code loops generate excessive update requests, which can trigger Amazon DynamoDB to temporarily close access to the affected partition.

Clancy's team built a serverless throttling solution that uses AWS Lambda and Amazon Managed Service for Apache Flink. In response to user update requests detected in the DynamoDB event stream, an AWS Lambda function estimates consumed write capacity. This estimate is compared to records in Amazon Managed Service for Apache Flink showing aggregated capacity per DynamoDB partition key during the current two-minute window. When excessive usage is detected, AWS Lambda sends data via an Amazon Simple Queue Service (Amazon SQS) queue to Amazon ElastiCache, which suspends the user. Because AWS Lambda and Amazon Managed Service for Apache Flink run on demand, scale up and down automatically, and require no provisioning, Intercom pays only for the resources it actually consumes.
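The throttling flow described above can be illustrated with a minimal, in-memory sketch. This is not Intercom's code: the class name `WindowedThrottle`, the threshold value, and the use of a plain dictionary in place of the managed AWS services are all assumptions made for illustration. The only details taken from the text are the two-minute window, the per-partition-key aggregation, and the suspension of over-limit users. The capacity estimate uses the standard DynamoDB rule of one write unit per 1 KB of item size, rounded up.

```python
import math
from collections import defaultdict

WINDOW_SECONDS = 120          # two-minute window, as described in the text
WCU_LIMIT_PER_WINDOW = 1000   # hypothetical threshold, not from the source


def estimate_write_units(item_size_bytes: int) -> int:
    """Estimate write capacity for one standard write: 1 unit per 1 KB, rounded up."""
    return max(1, math.ceil(item_size_bytes / 1024))


class WindowedThrottle:
    """Hypothetical stand-in for the aggregate-and-suspend logic (in memory only)."""

    def __init__(self, limit: int = WCU_LIMIT_PER_WINDOW, window: int = WINDOW_SECONDS):
        self.limit = limit
        self.window = window
        # (window index, partition key) -> estimated write units consumed
        self.usage = defaultdict(int)
        # Partition keys flagged for suspension
        self.suspended = set()

    def record_update(self, partition_key: str, item_size_bytes: int, timestamp: float) -> bool:
        """Record one update; return True if the key exceeded its limit in this window."""
        window_index = int(timestamp // self.window)
        bucket = (window_index, partition_key)
        self.usage[bucket] += estimate_write_units(item_size_bytes)
        if self.usage[bucket] > self.limit:
            self.suspended.add(partition_key)
            return True
        return False
```

In this sketch, each call to `record_update` plays the role of the function reacting to one update event, and the `suspended` set stands in for the store that records which users are blocked. Old window buckets are never evicted here, which a real deployment would need to handle.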

Each hour, the serverless Intercom throttling solution detects about 50 misbehaving user connections and prevents nearly 50,000 requests that pose availability risks for the system. Excellent results—even before you consider that the team deployed it in less than two weeks after originating the idea. "To be able to prototype a system in a day, iterate, and ship a week later—any developers who work with traditional architectures would have their minds blown by how fast AWS serverless computing enabled us to build this," says Clancy. “The system, which is scaled to handle more than 1 billion updates a day, has required no maintenance time since launch, which is a testament to how seamlessly AWS serverless technologies like AWS Lambda and Amazon Kinesis scale with growth.”

Serverless throttling solution

The developer enablement that Intercom offered its teams on these two projects—in which developers were set free to prototype, test, iterate, and rework their apps and workflows at the speed of ideas—is just part of the powerful case Clancy thinks can be made for AWS serverless computing. "Serverless computing isn't the solution for everything, but it's a good fit for many more use cases than people realize," he says.

The time savings available from serverless computing don't stop with deployment. "For example," Clancy says, "because our serverless billing solution is based on Amazon Athena, we can adapt to even dramatic requirement changes simply by updating some SQL definitions."
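To make the idea of adapting by "updating some SQL definitions" concrete, here is a small sketch under stated assumptions. Amazon Athena is queried with SQL, but this example uses Python's built-in `sqlite3` module as a local stand-in so it can run anywhere. The table `usage_events`, its columns, and both billing rules are hypothetical and do not come from Intercom's system.

```python
import sqlite3

# Hypothetical original rule: bill for every distinct user seen per app.
BILLABLE_USERS_V1 = """
    SELECT app_id, COUNT(DISTINCT user_id) AS billable_users
    FROM usage_events
    GROUP BY app_id
    ORDER BY app_id
"""

# Hypothetical changed requirement: count only users who sent a message.
# Only the SQL text changes; the surrounding code stays the same.
BILLABLE_USERS_V2 = """
    SELECT app_id, COUNT(DISTINCT user_id) AS billable_users
    FROM usage_events
    WHERE event_type = 'message'
    GROUP BY app_id
    ORDER BY app_id
"""


def run_billing_query(conn: sqlite3.Connection, sql: str) -> list:
    """Run a billing definition and return (app_id, billable_users) rows."""
    return conn.execute(sql).fetchall()


def make_demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory table of made-up usage events."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE usage_events (app_id TEXT, user_id TEXT, event_type TEXT)"
    )
    conn.executemany(
        "INSERT INTO usage_events VALUES (?, ?, ?)",
        [
            ("app-a", "u1", "message"),
            ("app-a", "u2", "login"),
            ("app-b", "u3", "message"),
        ],
    )
    return conn
```

Swapping `BILLABLE_USERS_V1` for `BILLABLE_USERS_V2` in a call to `run_billing_query` changes the billing rule without touching any other code, which is the kind of flexibility the quote describes.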

Intercom can also use serverless computing to free up maintenance resources. "With serverless architectures on AWS, we don't have to worry about replacing retired instance types, addressing limits, or performing version and security updates," says Bothra. "It's all under the hood, ready when we are."

Serverless computing makes it even simpler to take advantage of the easy scalability AWS is known for. "What I would tell other startups is that AWS is prepared for much larger scale than just about any company is actually going to need," says Clancy. "Not having to think about scale is like a dream for us."

High availability is also easier to achieve on AWS serverless architectures. "Using AWS serverless computing enables us to provide higher availability at lower costs," says Clancy. "We don't have to overprovision against traffic spikes, low-quality customer integrations, or any of the other problems you have to plan for with traditional architectures."

Clancy sums up: “We’re deliberate about where we use AWS serverless computing and where we don’t. But we’re also very enthusiastic about it and we are actively looking for more opportunities to use it.”
