Guidance for Implementing Google Privacy Sandbox Key/Value Service on AWS

Allows demand-side and sell-side platforms to query real-time data to inform bidding or auction logic through the Chrome Protected Audience API (PAAPI).

How it works

Overview

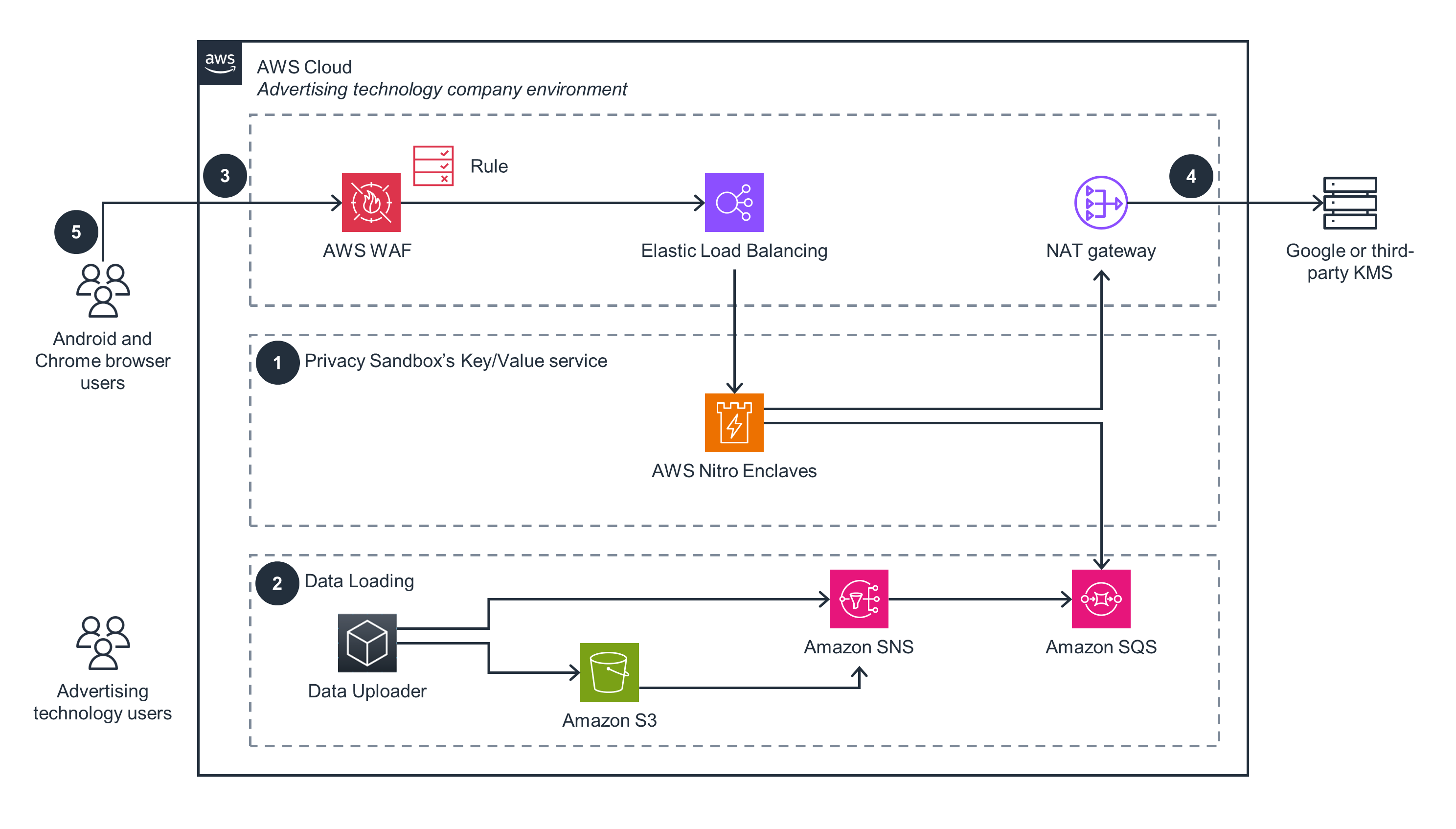

This architecture diagram gives an overview of how to deploy the Privacy Sandbox Protected Audience API Key/Value service. The data loading component is shown separately in the Data loading diagram.

Data loading

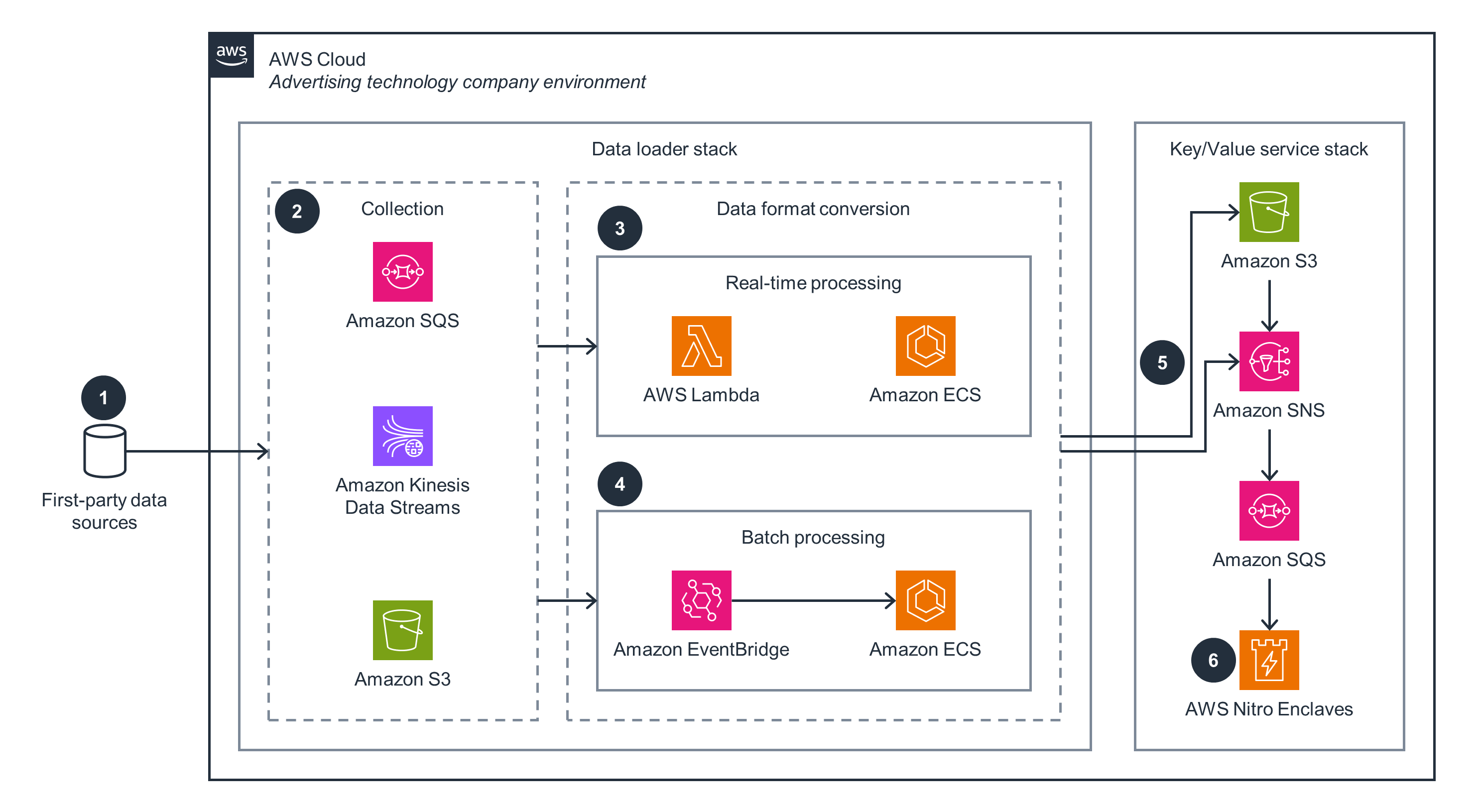

This architecture diagram shows the data loading component and demonstrates patterns for ingesting the first-party data needed for real-time auctions and bidding. For the overall deployment, see the Overview diagram.

Well-Architected Pillars

The architecture diagram above is an example of a Solution created with Well-Architected best practices in mind. To be fully Well-Architected, you should follow as many Well-Architected best practices as possible.

Operational Excellence

Amazon CloudWatch provides logging for the Key/Value service running in AWS Nitro Enclaves and Amazon Elastic Container Service (Amazon ECS), with tracing through AWS X-Ray, so you can understand the service's performance characteristics. Additionally, Amazon Elastic Container Registry (Amazon ECR) stores and versions the container images that are deployed to Amazon ECS, and Amazon EC2 Auto Scaling manages the scaling and compute capacity of the Key/Value service running in Nitro Enclaves.

Security

AWS Identity and Access Management (IAM) policies let you scope services and resources to the minimum privileges required to operate. Amazon Simple Storage Service (Amazon S3) encrypts data at rest using server-side encryption with Amazon S3 managed keys (SSE-S3). AWS WAF helps protect the public endpoint against malicious traffic, including application-layer distributed denial of service (DDoS) attacks, and lets you create security rules to control bot traffic. Additionally, this Guidance uses Amazon VPC endpoints so that the services running in Nitro Enclaves and Amazon ECS connect privately to supported AWS services.
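As a rough illustration of the least-privilege idea, the sketch below builds an IAM identity policy that allows a role to list and read only one prefix of one bucket. The bucket name `example-kv-data-bucket` and the prefix `deltas` are hypothetical placeholders, not names taken from this Guidance, and the actual policies in the sample code may differ.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy document limited to listing and reading one S3 prefix.

    `bucket` and `prefix` are illustrative inputs; substitute your own values.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, restricted here by prefix.
                "Sid": "ListOnePrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                # Reading is an object-level action, restricted by object ARN.
                "Sid": "ReadObjectsUnderPrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


if __name__ == "__main__":
    policy = read_only_prefix_policy("example-kv-data-bucket", "deltas")
    print(json.dumps(policy, indent=2))
```

The policy grants no write or delete actions, so a compromised reader cannot alter the data that feeds the Key/Value service.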

Reliability

This Guidance stores data in Amazon S3 before loading it into the Key/Value service, so you can use S3 Versioning to preserve, replicate, retrieve, and restore every version of an object. This lets you recover from unintended user actions and application failures. Additionally, this Guidance uses Auto Scaling groups to spread compute across Availability Zones, and Elastic Load Balancing distributes traffic to healthy Amazon EC2 instances. Finally, Amazon Simple Notification Service (Amazon SNS) and Amazon Simple Queue Service (Amazon SQS) serve as the endpoint for low-latency data updates: Amazon SNS pushes each update, and Amazon SQS persists it until a downstream consumer pulls and processes it.
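A minimal sketch of the S3 Versioning recovery pattern, assuming a boto3-style S3 client is passed in (boto3 itself is an assumption here; the Guidance does not prescribe a client library). The helper names are hypothetical. The sketch reads only the first page of results, which is enough for illustration but not for keys with many versions.

```python
from typing import Any, Optional


def enable_versioning(s3_client: Any, bucket: str) -> None:
    """Turn on S3 Versioning so overwritten or deleted objects stay recoverable."""
    s3_client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )


def previous_version_id(s3_client: Any, bucket: str, key: str) -> Optional[str]:
    """Return the most recent non-current version ID of `key`, or None.

    Only the first page of results is inspected (no pagination handling).
    """
    response = s3_client.list_object_versions(Bucket=bucket, Prefix=key)
    older = [
        version
        for version in response.get("Versions", [])
        # Prefix matching can return other keys, so compare the key exactly.
        if version["Key"] == key and not version["IsLatest"]
    ]
    if not older:
        return None
    # Pick the newest of the non-current versions explicitly by timestamp.
    return max(older, key=lambda version: version["LastModified"])["VersionId"]
```

With a real client, for example `boto3.client("s3")`, the returned version ID can be passed as `VersionId` to `get_object` or `copy_object` to restore the earlier data.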

Performance Efficiency

Amazon ECS reduces the complexity of scaling containerized workloads on AWS. Running Amazon ECS containers on AWS Graviton processors can deliver higher throughput and lower latency for requests. Additionally, Amazon SNS can publish messages within milliseconds, so upstream applications can push time-critical updates to the Key/Value service.
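The push mechanism can be sketched as a single publish call, assuming a boto3-style SNS client is passed in. The JSON payload shape and the function name are hypothetical placeholders for illustration only; the Key/Value service defines its own update format, which this sketch does not attempt to reproduce.

```python
import json
from typing import Any


def publish_update(sns_client: Any, topic_arn: str, key: str, value: str) -> str:
    """Publish one update to an SNS topic and return the SNS message ID."""
    # Hypothetical payload shape; replace with the format your consumer expects.
    message = json.dumps({"key": key, "value": value})
    response = sns_client.publish(TopicArn=topic_arn, Message=message)
    return response["MessageId"]
```

With a real client, for example `boto3.client("sns")`, the upstream application calls `publish_update` as soon as a value changes rather than waiting for the next batch upload.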

Cost Optimization

As a serverless service, Amazon S3 scales your data storage automatically, so you pay only for the storage you use and avoid the costs of provisioning and managing physical infrastructure. Amazon S3 also offers storage classes that help you reduce costs further, and you can set up lifecycle rules to delete data after a set period. Amazon ECS helps optimize costs by scaling compute resources up and down with demand, so you pay only for the resources you use. Finally, Amazon SQS long polling reduces the number of empty receives (polls that return no messages), which makes processing inventory updates more efficient and further optimizes costs.
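A lifecycle rule that deletes data after a set period might look like the sketch below, assuming a boto3-style S3 client is passed in. The prefix, the retention period, and the helper names are hypothetical; choose values that match how long your data must remain available.

```python
from typing import Any, Dict, List


def expiration_rule(prefix: str, days: int) -> Dict[str, Any]:
    """Build one lifecycle rule that expires objects under `prefix` after `days` days."""
    if days < 1:
        raise ValueError("days must be a positive integer")
    label = prefix.strip("/").replace("/", "-")
    return {
        "ID": f"expire-{label}-after-{days}d",
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},
        "Expiration": {"Days": days},
    }


def apply_lifecycle(s3_client: Any, bucket: str, rules: List[Dict[str, Any]]) -> None:
    """Apply the rules to the bucket, replacing any existing lifecycle configuration."""
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={"Rules": rules},
    )
```

Because the call replaces the bucket's whole lifecycle configuration, pass every rule you want to keep. On a bucket with S3 Versioning enabled, `Expiration` only adds a delete marker; removing older versions requires a separate `NoncurrentVersionExpiration` setting.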

Sustainability

Instances powered by AWS Graviton processors let you serve more requests at lower latency while using up to 60 percent less energy than comparable Amazon EC2 instances, helping you lower the carbon footprint of your workloads. Additionally, Amazon SQS long polling reduces the number of API requests, which cuts network traffic and improves resource utilization.
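Long polling comes down to one parameter on the receive call. The sketch below assumes a boto3-style SQS client is passed in; the function name and the `handler` callback are hypothetical. Setting `WaitTimeSeconds` to 20 (the maximum SQS allows) makes each request wait for messages instead of returning empty immediately.

```python
from typing import Any, Callable


def poll_once(sqs_client: Any, queue_url: str, handler: Callable[[str], None]) -> int:
    """Long-poll the queue once, process each message, and return how many were handled."""
    response = sqs_client.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # largest batch a single receive call can return
        WaitTimeSeconds=20,      # long polling: wait up to 20 seconds for messages
    )
    # The "Messages" key is absent when the wait ends with nothing to deliver.
    messages = response.get("Messages", [])
    for message in messages:
        handler(message["Body"])
        # Delete only after the handler succeeds so failed messages are retried.
        sqs_client.delete_message(
            QueueUrl=queue_url,
            ReceiptHandle=message["ReceiptHandle"],
        )
    return len(messages)
```

A consumer would call `poll_once` in a loop; with an idle queue this issues about three requests per minute instead of a continuous stream of empty receives.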

Deploy with confidence

Ready to deploy? Review the sample code on GitHub for detailed deployment instructions. You can deploy it as-is or customize it to fit your needs.
