Amazon Lex features

Overview

Amazon Lex is a fully managed artificial intelligence (AI) service with advanced natural language models for designing, building, testing, and deploying voice and text conversational interfaces in applications. Lex integrates with AWS Lambda, which you can use to trigger functions that run your back-end business logic for data retrieval and updates. Once built, your bot can be deployed directly to contact centers, chat and text platforms, and IoT devices. Lex provides rich insights and pre-built dashboards to track metrics for your bot.
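As an illustration of the Lambda integration, the following is a minimal sketch of a fulfillment function, assuming a Lex V2 bot and the Lex V2 Lambda event and response format. The slot name `OrderId` and the reply text are hypothetical placeholders, and the back-end lookup is stubbed out.

```python
def lambda_handler(event, context):
    """Sketch of a fulfillment handler for a Lex V2 bot (illustrative only)."""
    intent = event["sessionState"]["intent"]
    slots = intent.get("slots") or {}

    # "OrderId" is a hypothetical slot name; Lex V2 passes filled slots
    # as objects with a nested value.interpretedValue field.
    order_slot = slots.get("OrderId")
    order_id = order_slot["value"]["interpretedValue"] if order_slot else None

    if order_id:
        # Placeholder for real back-end logic (database query, API call, ...).
        text = f"Order {order_id} is on its way."
        state = "Fulfilled"
    else:
        text = "I could not find an order number."
        state = "Failed"

    # Close the conversation for this intent and return a plain-text message.
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {"name": intent["name"], "state": state, "slots": slots},
        },
        "messages": [{"contentType": "PlainText", "content": text}],
    }
```

In this sketch the function reads the intent and slots that Lex passes in, runs the (stubbed) business logic, and returns a `Close` dialog action with a message for Lex to relay to the user.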

Amazon Lex + Generative AI

Amazon Lex uses Generative AI and Large Language Models (LLMs) to enhance the builder and customer experience. As the demand for conversational AI continues to grow, developers are seeking ways to give their chatbots more human-like interactions. Large language models can help by providing automated responses to frequently asked questions, analyzing customer sentiment and intents to route calls appropriately, generating summaries of conversations to help agents, and automatically generating emails or chat responses to common customer inquiries. This new generation of AI-powered assistants provides seamless self-service experiences that delight customers.

Amazon Lex is committed to infusing Generative AI into all parts of the builder and end-user experiences to help increase containment while resolving increasingly complex use cases with confidence. Amazon Lex has launched the following features to empower developers and users alike:

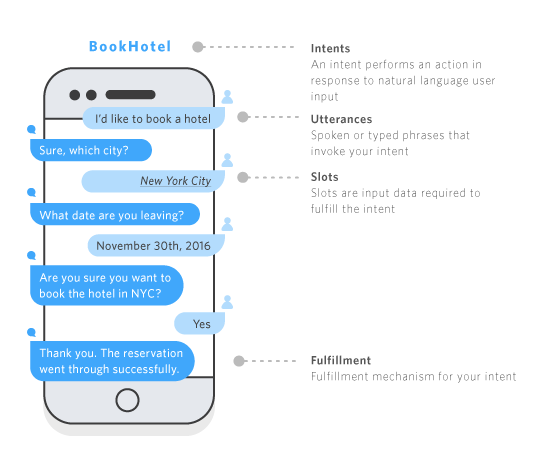

How Lex works