Modernizing legacy processes

Monolithic architecture, once the industry standard for building applications, bundled all code into a single package running synchronously on a machine. However, the shift to distributed systems has popularized new patterns, such as event-driven asynchronous workflows and single-page applications. Business processes have evolved as well, now distributing work across people, automation tools, and third-party services, all communicating through events.

This distributed model brings new challenges: increased reliance on external services, decoupled system components, and long-running processes spanning multiple services. Modern processes are best designed by orchestrating workflows so that reliability is built in from the start.

Designing optimal system architecture requires a delicate balance in achieving reliability without impacting development timelines. In this article, you will learn how to use Temporal Cloud (Pay-as-you-go) to build robust, production-ready workflows with radical simplicity.

Imagine you are a staff engineer who recently joined a new credit card approvals group at a large financial organization. The nature of the work done by this group includes application reviews, credit checks, background verifications, and other forms of fact-checking.

Except for the application submission process, which happens from the company website, not a lot has changed since the workflow was initially built 10 years ago. However, an evolving landscape of threats, a growing diversity of available third-party APIs, and business growth over time have made the process slow and fragile.

The team has reported a long list of issues, from process failure due to network conditions and intermittent outages in a third-party API, to timeouts caused by hardware issues and scaling activities. There is also a long list of feature requests to optimize the process, most importantly improving visibility and clarity into the status of any ongoing requests.

Your top priority is to modernize the credit card approval workflow and make it more reliable and transparent. You have a five-week timeframe to explore and propose an approach, and you decide to start with the simplest workflow first: the classic credit card application validation workflow.

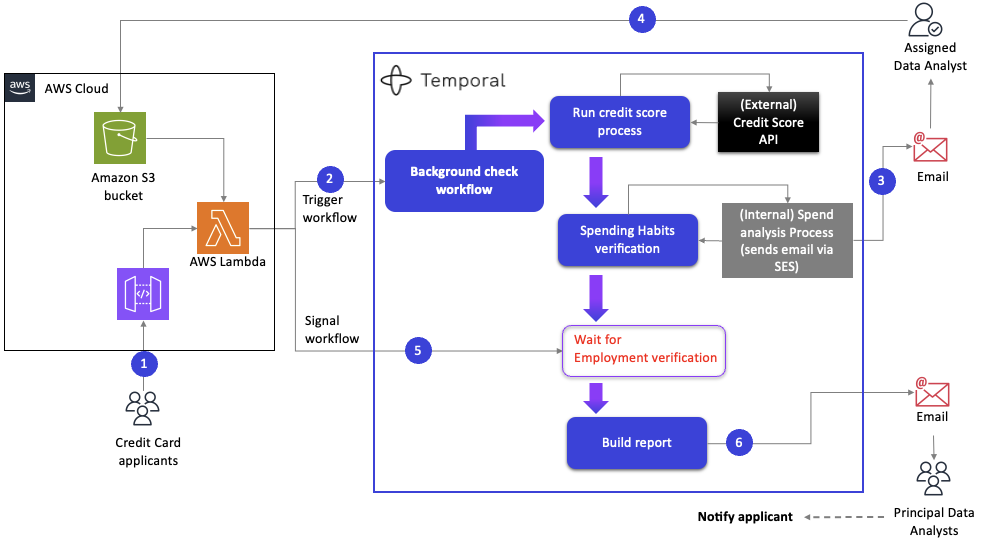

Example scenario business workflow

Here is the classic credit card eligibility workflow you inherited.

Once the credit card application has landed in an S3 bucket, an analyst is assigned on a round-robin basis. The assigned data analyst calls an external credit score analysis API, which accepts application documents and returns a credit score. If the credit score fits the criteria, the analyst calls an internal API maintained by the research and development line of business, which identifies red flags in spending habits. Once the data analyst is satisfied with the outcome from the previous two APIs, the analyst manually reviews pay slips and tax returns and confirms whether the employment information is accurate.

Based on the output, the data analyst builds a report, along with their recommendation about whether the credit card should be granted to the applicant. The report is then sent to the Principal Data Analyst, who makes the final decision.

Some of the core issues that have been reported are:

Slow and unpredictable application processing throughput: Relying on a single individual to perform the bulk of the analysis process introduces many potential delays, from “human sourced” delays such as vacation and sick days to inefficient manual retries and rework when APIs fail due to outages or rate limiting.

Subjective manual processes: The amount of human effort associated with the process introduces bias and lends itself to errors that have a direct impact on the quality of service provided and the risks associated with granting credit to consumers.

Lack of visibility: Other than asking the analyst or adding yet more manual status update processes to the workflow, it is impossible for other stakeholders to identify where in the process each application stands, or to identify bottlenecks that could be optimized.

Why you need an enterprise-grade orchestrator

Evidently, automation, visibility, and reliability are indispensable as this business process is revisited, taking advantage of a whole suite of modern tools and capabilities that were simply unavailable when the original mechanism was first designed. What are some of the new patterns that can be considered today?

Use of an orchestrator - The workflow should be driven by a system rather than a human; this is where the concept of “orchestrator” comes into play. When data analysts log into their computers each day, automated activities should already be complete so that they are only expected to perform any manual steps that require human intervention or interpretation.

The state of each application should be transparent – All stakeholders must be able to understand the exact status of every credit card eligibility validation workflow; this includes having clear visibility to overall workflow status, as well as the output and outcome of each of its steps.

Leverage automation for decision making - The workflow orchestrator should allow you to program dynamic logic, such as acting when the minimum acceptance threshold is not met.

Reliable error and failure handling - This must happen across the workflow and in each activity so that even if a service is down, the system can pick up where it left off.

The last point in the list is very important and, even though it sounds simple, requires some complex logic and robust mechanisms in place to avoid transaction duplication, data inconsistencies, and many other challenges related to “dumb” retry mechanisms.

Ensuring reliability is a big task: you must implement and test a large combination of scenarios around failures, crashes, server outages, timeouts, retry-when-timeout, and retry-when-external-system-is-down, while ensuring each possible failure mode is handled in the safest and most efficient way.

Considerable effort is usually spent writing complex retry and timeout logic and then testing it. This inflates timelines, yet skipping that work leads to fragile processes.
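
To make that effort concrete, here is a minimal sketch of the kind of retry helper teams often hand-roll. The function name `call_with_retries` and its parameters are illustrative assumptions, not part of any library. Note what it leaves unsolved: the attempt count lives only in memory, so a process crash loses it, and nothing prevents a retried call from duplicating a transaction that actually succeeded on the remote side.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 5,
    initial_interval: float = 1.0,
    backoff_coefficient: float = 2.0,
    maximum_interval: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation, retrying on any exception with capped exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    interval = initial_interval
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            sleep(min(interval, maximum_interval))
            interval *= backoff_coefficient
    raise AssertionError("unreachable")
```

The `sleep` parameter exists only so the helper can be exercised without real waiting, for example by passing `waits.append` and inspecting the recorded delays.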

A key value of using an orchestrator is that all these logic and state management problems have been solved for you. What you need is a modern orchestrator that enables you to model dynamic, asynchronous logic via code without introducing tight coupling. It should abstract away boilerplate logic around error handling, retries, and managing states while providing built-in reentrancy for workflows.

Reentrancy ensures that workflows are:

Resumable (resume from suspended wait state)

Recoverable (resume from suspended failure state)

Reactive (react to external states)

Since you are unclear about the exact long-term orchestration needs, you need an orchestrator priced on a pay-as-you-go (PAYG) basis. Most importantly, the third-party workflow orchestration product must be SOC2 and GDPR compliant, as it’s a mandatory requirement at your financial organization.

Introducing Temporal Cloud

If you’re an AWS user, you might choose AWS Marketplace as your starting point when looking for a new workflow orchestration tool: it’s easy to search, has trusted reviews, and lets you start free and pay as you go through your AWS account. A quick search pulls up Temporal Cloud (Pay-as-you-go), which is SOC2 compliant. What stands out is its ability to remove the complexities of modern architecture and software failure, such as retries, rollbacks, queues, state machines, and timers, so engineers can focus on the business logic instead of the boilerplate code. Furthermore, it scales with your workflows.

With the ability to write workflows as code, Temporal allows you to use familiar toolchains, giving you the freedom to use your IDE of choice and existing version control systems. You also get access to the huge range of libraries and packages available in any of the languages for which Temporal provides an SDK. As your code evolves, testing and versioning become critical concerns for production-ready development at scale, and working with code supports both.

As you look into the resiliency and performance of your system, Temporal provides a durable execution platform, which can be easily understood as a “crash-proof” execution environment. Durable execution enables native, built-in capabilities for automated recovery and healing by transparently handling most failures, including network outages or server crashes. What makes durable execution unique is its capability to handle any type of crash, including software (e.g., a bug in your code), hardware (think machines rebooting), and third-party failures (which can be caused by network conditions or third-party downtime), by isolating the Workflow code and ensuring that the process will continue from where it left off, eliminating the risks of traditional retry logic.

The Temporal Platform handles these types of problems and lets users focus on business logic instead of code to detect and recover from failures. Temporal records every step of a workflow execution, enabling automatic recovery from failures and better visibility.
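
The idea of recording each step so a process can pick up where it left off can be illustrated with a toy journal. This is only a conceptual sketch using the standard library, with hypothetical names (`Journal`, `run_step`); it is not how Temporal is implemented, and it ignores concurrency, timers, and signals.

```python
import json
from pathlib import Path
from typing import Any, Callable, Dict


class Journal:
    """Record of completed steps, persisted as JSON so it outlives the process."""

    def __init__(self, path: Path) -> None:
        self.path = path
        # Load results recorded by an earlier run, if any.
        self.results: Dict[str, Any] = (
            json.loads(path.read_text()) if path.exists() else {}
        )

    def run_step(self, name: str, step: Callable[[], Any]) -> Any:
        # A step that was already recorded is not executed again;
        # its stored result is returned instead.
        if name in self.results:
            return self.results[name]
        result = step()  # may raise; nothing is recorded in that case
        self.results[name] = result
        self.path.write_text(json.dumps(self.results))
        return result
```

On a second run against the same journal file, steps that already completed return their recorded result instead of calling the external service again, which is the property that makes resuming after a crash safe.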

As you further explore Temporal, you note some key concepts of the software:

A Temporal Workflow defines the overall flow of the application or business process. It is defined in a workflow definition file using languages such as Python, Java, Go, PHP, or .NET. A Temporal Workflow is a reentrant process capable of crash-proof execution as it orchestrates activities.

A Temporal Activity executes a well-defined action, which can be short- or long-running. Activities are defined in the activity definition. You use the Temporal SDK to author code for Workflows as well as Activities. Each Activity represents a single operation that, thanks to durable execution, can be retried and resumed from any point of execution.

The Temporal Worker process polls a task queue, executes the code associated with the task, and sends the results to the Temporal Service. You define the Temporal Worker process using the Temporal SDK and run it.

The Temporal Service manages state persistence and orchestration of tasks. When failures occur, the Temporal Service automatically retries failed operations according to customizable policies, ensuring business logic continues without manual intervention. Temporal Cloud, from AWS Marketplace, is a managed and hosted solution for the Temporal Service.

Temporal Cloud (Pay-as-you-go) comes with free credits when subscribed from AWS Marketplace.

Getting set up for local development

Temporal provides an efficient command line interface (CLI) that allows you to manage local and remote Temporal instances, as well as quickly bootstrap a local Temporal development environment.

The official documentation provides clear instructions for installing the Temporal CLI and using it to spin up a local development server.

A great sample you can use to understand some of the core concepts in Temporal including Durable Execution can be found in a related blog post that demonstrates a sample money transfer application.

Trying a proof of concept for your use case with Temporal

Now that you understand how Temporal works, you decide to build the following proof of concept (POC).

The workflow starts with credit card applicants uploading documents into an Amazon S3 bucket, which triggers a Lambda function. The Lambda function starts the Temporal Workflow, which contains three Activities. The first Activity calls the external credit score API. The second Activity calls the internal spending habit verification API, after which the Workflow waits until a data analyst verifies income and employment information. Once the analyst uploads the relevant documents into an S3 bucket, a Lambda function signals the Workflow so the third Activity, build report, can run. This last Activity builds the report and sends it to the principal data analyst using Amazon Simple Email Service (Amazon SES).
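
A Lambda function triggered by S3 needs some way to decide which Workflow an upload belongs to. A minimal sketch, assuming the standard S3 event notification shape and a purely hypothetical naming scheme for the Workflow ID, might derive the ID from the uploaded object:

```python
from urllib.parse import unquote_plus


def workflow_id_from_s3_event(event: dict) -> str:
    """Derive a Workflow ID from the first record of an S3 notification event.

    The "card-eligibility-" prefix and the bucket/key layout of the ID are
    illustrative assumptions, not a Temporal or AWS convention.
    """
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    # Object keys arrive URL-encoded in S3 event notifications.
    key = unquote_plus(record["s3"]["object"]["key"])
    return f"card-eligibility-{bucket}-{key}"
```

A deterministic ID like this means a later invocation can address the same Workflow without a lookup table, provided it can reconstruct the same bucket and key.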

1. Define activities

Here is how you start building activities outlined in the Temporal workflow.

from dataclasses import dataclass

import requests
from temporalio import activity


@dataclass
class CreditCardApplicationDetails:
    name: str
    credit_score: int = 0
    spending_habits: str = ""
    income_and_employment_verification: str = ""


@activity.defn
async def run_credit_score_process(application: CreditCardApplicationDetails) -> CreditCardApplicationDetails:
    # Call the external credit score analysis API.
    response = requests.get("<EXTERNAL_API_URL>", stream=True)
    # Save the credit score response in application.credit_score so it can be passed to the next step.
    # <code removed>
    print(f"Assigned credit score of {application.credit_score}")
    return application


@activity.defn
async def verify_spending_habits(application: CreditCardApplicationDetails) -> CreditCardApplicationDetails:
    # Call the internal API that flags unusual spending habits.
    response = requests.get("<INTERNAL_API_CALL>", stream=True)
    # <code removed>
    print(f"Found spending habits {application.spending_habits}")
    return application


@activity.defn
async def build_report(application: CreditCardApplicationDetails) -> CreditCardApplicationDetails:
    # Builds a status report based on previous activities.
    # <code removed>
    print(f"Successfully completed background check. Compiling report for {application.name}")
    return application

run_credit_score_process - This Activity calls an external API and stores the response in the credit_score field of the dataclass instance, which is then returned to the Workflow.

verify_spending_habits - This Activity calls an internal API that uses machine learning (ML) to identify red flags and then uses Amazon SES to send an email to the assigned analyst.

build_report - This is the last Activity; it builds a report and uses Amazon SES to send an email to the principal analyst.
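
Because Activity inputs and results travel between the Worker and the Temporal Service, the dataclass has to be serializable. The Temporal Python SDK takes care of this for you; the sketch below only uses the standard library to illustrate the kind of JSON round trip involved, and the helper names `to_payload` and `from_payload` are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CreditCardApplicationDetails:
    name: str
    credit_score: int = 0
    spending_habits: str = ""
    income_and_employment_verification: str = ""


def to_payload(details: CreditCardApplicationDetails) -> str:
    """Serialize the application state to a JSON string."""
    return json.dumps(asdict(details))


def from_payload(payload: str) -> CreditCardApplicationDetails:
    """Rebuild the application state from a JSON string."""
    return CreditCardApplicationDetails(**json.loads(payload))
```

Keeping the dataclass limited to plain fields such as strings and integers is what makes this kind of round trip lossless.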

2. Define a workflow

Once you have created a blueprint of activities, you string together these activities in a Workflow definition. The Workflow definition calls the first two Activities in sequence, and then it waits for an event to occur; once the event occurs and Workflow has been signaled, it calls the final Activity in the Workflow, which builds the report.

from datetime import timedelta

from temporalio import workflow
from temporalio.common import RetryPolicy

# CreditCardApplicationDetails and the three Activities come from the code in step 1.


@workflow.defn
class classic_card_eligibility_validation_workflow:
    def __init__(self):
        # Becomes True once the analyst's verification has been signaled.
        self.verify_income_and_employment_completion = False

    @workflow.signal
    async def update_employment_verification_status(self) -> None:
        self.verify_income_and_employment_completion = True

    @workflow.run
    async def run(self, name: str) -> CreditCardApplicationDetails:
        details = await workflow.execute_activity(
            run_credit_score_process,
            CreditCardApplicationDetails(name),
            start_to_close_timeout=timedelta(seconds=30),
            heartbeat_timeout=timedelta(seconds=10),
            retry_policy=RetryPolicy(
                backoff_coefficient=2.0,
                maximum_attempts=5,
                initial_interval=timedelta(seconds=1),
                maximum_interval=timedelta(seconds=10),
            ),
        )
        if details.credit_score > 650:
            details = await workflow.execute_activity(
                verify_spending_habits, details, start_to_close_timeout=timedelta(seconds=5)
            )
            # Wait until the signal handler above flips the flag.
            await workflow.wait_condition(lambda: self.verify_income_and_employment_completion)
            return await workflow.execute_activity(build_report, details, start_to_close_timeout=timedelta(seconds=5))
        else:
            # Score at or below the threshold: stop here and return the scored application.
            return details

Notes:

Take a moment to look at the retry policy you are able to customize at an Activity level. Temporal provides comprehensive configurations such as timeouts, retry policy, and intervals for which you can specify values. If you don’t define any, default values are applied by Temporal. This helps ensure the reliable execution of the Activity.
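
To get a feel for what these values mean, the sketch below computes the wait before each retry, assuming each interval is the initial interval multiplied by the backoff coefficient once per previous retry and capped at the maximum interval. The function name `retry_intervals` is hypothetical, and the calculation ignores any time spent inside the Activity itself.

```python
from datetime import timedelta
from typing import List


def retry_intervals(
    initial_interval: timedelta,
    backoff_coefficient: float,
    maximum_interval: timedelta,
    maximum_attempts: int,
) -> List[timedelta]:
    """Return the wait before each retry; N attempts imply N - 1 waits."""
    waits = []
    for retry in range(maximum_attempts - 1):
        wait = initial_interval * (backoff_coefficient ** retry)
        waits.append(min(wait, maximum_interval))  # cap at the maximum interval
    return waits


policy_waits = retry_intervals(
    initial_interval=timedelta(seconds=1),
    backoff_coefficient=2.0,
    maximum_interval=timedelta(seconds=10),
    maximum_attempts=5,
)
```

With the values used in the Workflow above, this yields waits of 1, 2, 4, and 8 seconds, so a persistently failing call gives up after roughly 15 seconds of waiting. The 10-second cap only starts to matter from the sixth attempt onward.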

Here is the code, written in the Lambda function, that signals the Workflow as soon as the data analyst verifies the income and employment information and uploads the same to the S3 bucket.

from temporalio.client import Client


async def main():
    client = await Client.connect("<URL>:7233")
    name = "<name>"
    card_type = "classic"
    # Look up the running Workflow by the ID it was started with, then signal it.
    handle = client.get_workflow_handle("classic-card-eligibility-check")
    await handle.signal(classic_card_eligibility_validation_workflow.update_employment_verification_status)
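
If the signal-and-wait pattern is new to you, this plain asyncio sketch mimics the shape of it in a single process. The class `ApprovalGate` and the `demo` function are hypothetical, and unlike a Temporal Workflow nothing here survives a restart; it only shows how a coroutine can park until another piece of code flips a flag.

```python
import asyncio
from typing import List


class ApprovalGate:
    """In-memory stand-in for a flag that is set by a signal."""

    def __init__(self) -> None:
        self.verified = False
        self._changed = asyncio.Event()

    def signal_verified(self) -> None:
        self.verified = True
        self._changed.set()

    async def wait_until_verified(self) -> None:
        # Re-check the condition every time something changes.
        while not self.verified:
            await self._changed.wait()
            self._changed.clear()


async def demo() -> List[str]:
    log: List[str] = []
    gate = ApprovalGate()

    async def eligibility_flow() -> None:
        log.append("waiting for analyst")
        await gate.wait_until_verified()
        log.append("building report")

    task = asyncio.create_task(eligibility_flow())
    await asyncio.sleep(0)  # let the flow run until it parks on the gate
    log.append("analyst signals")
    gate.signal_verified()
    await task
    return log
```

Running `asyncio.run(demo())` returns the log of events in the order they happened, with the report step recorded only after the signal.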

3. Create a Temporal worker process

So far, you have defined the Workflow and corresponding Activities. Now, you need to create a Temporal Worker Process so it can execute Tasks associated with your Workflow as soon as they are submitted to the Temporal server by a client or an external process.

Here is how you create a Worker Process. Note how a Worker Process is associated with a Task Queue and is allowed to handle specific Workflows and Activities.

run_worker.py

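import asyncio

from temporalio.client import Client
from temporalio.worker import Worker


async def main():
    client = await Client.connect("<URL>:7233", namespace="default")
    # The Worker polls this Task Queue and runs only the registered Workflows and Activities.
    worker = Worker(
        client,
        task_queue="classic-credit-card-eligibility-validation-task-queue",
        workflows=[classic_card_eligibility_validation_workflow],
        activities=[run_credit_score_process, verify_spending_habits, build_report],
    )
    await worker.run()


if __name__ == "__main__":
    asyncio.run(main())

Next, you write the code that triggers the Workflow execution.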

run_workflow.py

import asyncio

from temporalio.client import Client


async def main():
    name = "<name>"
    card_type = "classic"
    client = await Client.connect("<URL>:7233")
    # Start the Workflow and wait for its result.
    result = await client.execute_workflow(
        classic_card_eligibility_validation_workflow.run,
        f"{name}-{card_type}",
        id="classic-card-eligibility-check",
        task_queue="classic-credit-card-eligibility-validation-task-queue",
    )
    print(f"Result: {result}")


if __name__ == "__main__":
    asyncio.run(main())

Note – Once you have developed and tested your Workflows locally, it’s easy to run them on a Temporal Cloud account. Once you have created an account, chosen the region you want to run workflows in, and created a namespace and an API key, you can modify the connection code to point at the Temporal Cloud service instead of the local service, as shown in the following code:

client = await Client.connect("<region>.aws.api.temporal.io:7233",

namespace="<namespace>.<account_id>",

rpc_metadata={"temporal-namespace": "<namespace>.<account_id>", "Authorization": f"Bearer {'<API Key>'}"},

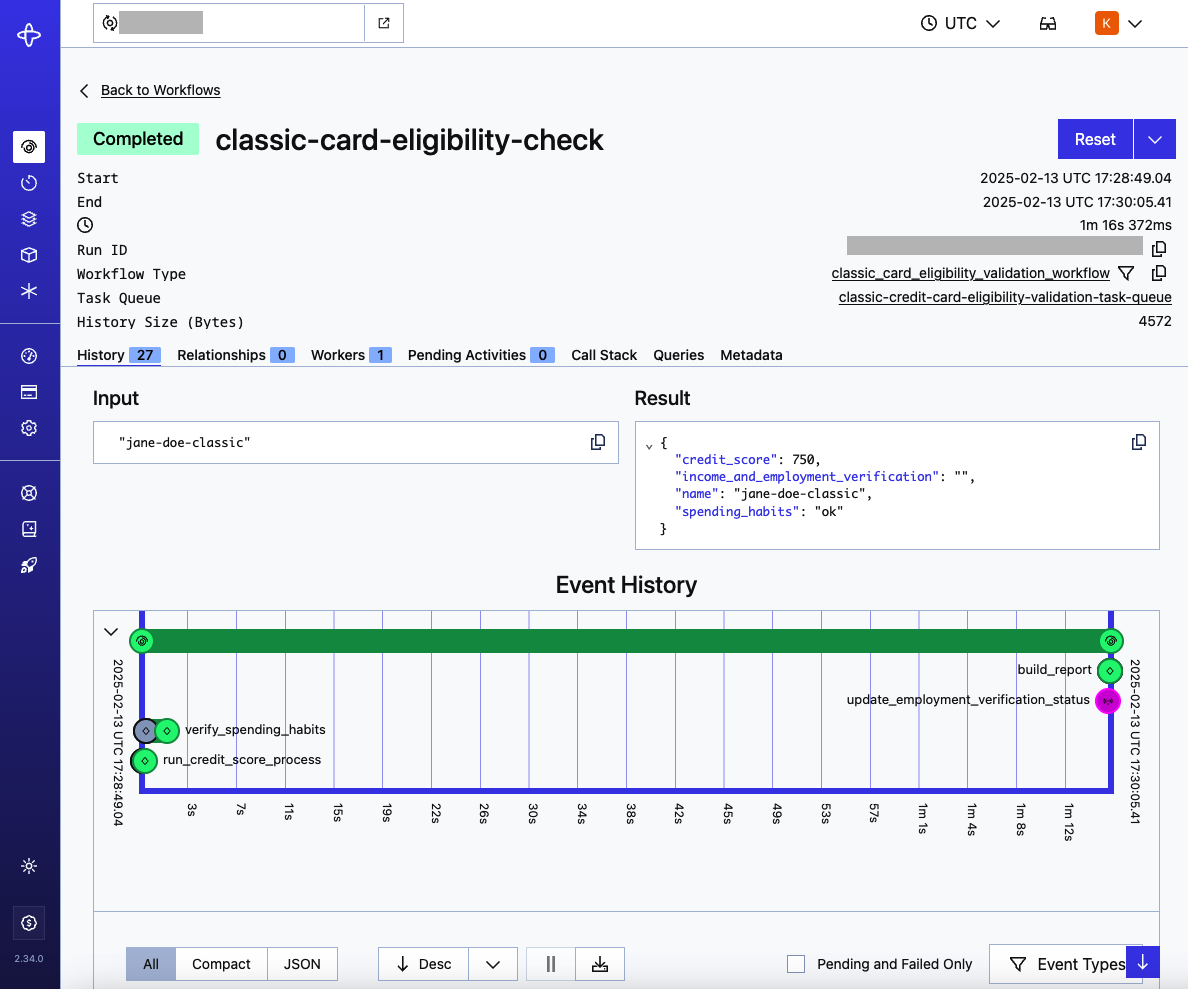

tls=True)Here is the output when I executed the Workflow on the Temporal Cloud.

Congratulations! In a matter of days, you were able to ramp up on the technology, and you feel confident about recommending an approach for modernizing your organization’s business processes.

Key takeaways

By building your next generation of workflows and business processes on top of robust orchestration platforms such as Temporal, you can be confident of building more reliable, highly observable systems in a much shorter time span. If you are starting out, here are my two cents on how to get the best results:

Start with simple processes that are relevant to your organization.

Use the free credits provided by Temporal Cloud (Pay-as-you-go) to build a small POC; this will help you ramp up on the technology while evaluating whether it works for your requirements.

Before you decide to deploy, standardize timeouts and retries across applications.

Test thoroughly and deploy to production.
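
One way to approach the point about standardizing timeouts and retries is to keep the agreed values in a single shared module and derive per-application variations from it. The sketch below is an assumption about how you might organize this; `ActivityDefaults` and its field values are illustrative, mirroring the options used earlier in this article.

```python
from dataclasses import dataclass, replace
from datetime import timedelta


@dataclass(frozen=True)
class ActivityDefaults:
    """Organization-wide timeout and retry settings (illustrative values)."""

    start_to_close_timeout: timedelta = timedelta(seconds=30)
    initial_interval: timedelta = timedelta(seconds=1)
    backoff_coefficient: float = 2.0
    maximum_interval: timedelta = timedelta(seconds=10)
    maximum_attempts: int = 5

    def retry_settings(self) -> dict:
        """Return only the retry-related fields, keyed by option name."""
        return {
            "initial_interval": self.initial_interval,
            "backoff_coefficient": self.backoff_coefficient,
            "maximum_interval": self.maximum_interval,
            "maximum_attempts": self.maximum_attempts,
        }


DEFAULTS = ActivityDefaults()
# A slower third-party API gets more attempts without redefining everything else.
EXTERNAL_API = replace(DEFAULTS, maximum_attempts=8)
```

The dictionary from `retry_settings()` uses the same key names as the retry policy options shown earlier, so it could be unpacked into a `RetryPolicy(...)` call.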
