Global Gen AI: Building for Low Latency

Generative AI (gen AI) has been the talk of the town for quite some time, and many organizations have moved beyond proofs of concept (POCs) to building applications with gen AI at their core. With a new generation of tools like Amazon Bedrock, the barrier to entry for building gen AI applications has dropped dramatically. However, as these applications are exposed to the dynamic and demanding requirements of real-world use, making them work across the globe efficiently and cost-effectively requires careful architectural consideration. The main challenge isn't just getting AI to work; it's ensuring that users get quick responses no matter where they are.

Imagine you are a data architect who recently joined a unicorn startup that uses gen AI to help leaders and visionaries optimize how they use their time. After a praise-singing social media post from an iconic CEO about your company’s product, your management team has been getting all sorts of requests from leaders worldwide. Because of the unexpected and overwhelming global demand, your management has decided to expand the application to Europe and the Asia Pacific (APAC) regions.

Your customers tend to be highly skilled global travelers with severe time constraints and expect to receive the same experience (read as “no latency”) no matter where they are. After careful consideration, you and the technical architect have decided that rearchitecting the application is the best way to move forward.

The key requirement driving the change is that you need to scale your gen AI application globally while maintaining ultra-low response times, regardless of your users' location.

Scaling across regions: Need for an architectural solution

Let’s dig a little deeper into the decision to rearchitect the application. Like most startups, the application architecture evolved organically from a proof of concept that catered to a small number of customers while the organization identified which product features fit the market (product-market fit). Initially, all customers were located in North America, so it was unnecessary to build multi-region capabilities into the application while identifying product-market fit.

Although the application is already globally accessible, there are clear reasons as to why this is not an ideal scenario:

Multi-second latency for customers outside the Americas - Since the application runs in the Americas, each round trip to the application from further locations adds delay, leading to multi-second latency. That means the application will be slow and sluggish for your new global customers.

Bottlenecks during peak load - If your Asian and European customers use the current, US-based application, there will be bottlenecks and scalability issues.

Lack of High Availability - If the current deployment in the US is not reachable, the application just won’t work for any global customers.

It’s clear that the application needs to be re-architected. However, before you look at the application architecture, take a moment to brush up on your gen AI application concepts.

Gen AI essential concepts

1. Large Language Model (LLM)

An LLM is an AI model that has been trained on massive amounts of data. Due to the way LLMs are built and the huge data sets they require for training, they have the ability to “understand” and generate human-like text.

2. Vector embedding

A vector embedding is a numerical representation of data in a high-dimensional space. It captures relationships between words and concepts. Vector embeddings are generated by AI models and are used mostly for similarity searches.

Here are some examples of vector embedding:

| S. No | Text | Vector Embedding |
| --- | --- | --- |
| 1 | Python is fun | [0.21, -0.42, 0.15, 0.65, -0.31, ...] |
| 2 | Let’s have fun | [-0.12, 0.34, 0.45, 0.22, 0.56, ...] |
| 3 | I love programming in Python | [0.23, -0.45, 0.12, 0.67, -0.34, ...] |

A simple full-text search will not recognize that Text 1 and Text 3 are related beyond the one word they share. However, a human will understand that Text 1 and Text 3 have similar meanings, and so will a vector search: it returns texts that are similar in meaning, even if they have few or no words in common. A vector database stores vector embeddings and performs efficient vector search.
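To make the comparison concrete, here is a minimal sketch that scores the example texts against each other. It assumes cosine similarity as the metric (one common choice; the article does not say which metric the application uses) and uses only the first five components shown in the table above, whereas real embeddings have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# First five components of the illustrative embeddings in the table.
python_is_fun = [0.21, -0.42, 0.15, 0.65, -0.31]   # Text 1
lets_have_fun = [-0.12, 0.34, 0.45, 0.22, 0.56]    # Text 2
love_python = [0.23, -0.45, 0.12, 0.67, -0.34]     # Text 3

sim_1_3 = cosine_similarity(python_is_fun, love_python)
sim_1_2 = cosine_similarity(python_is_fun, lets_have_fun)

print(f"Text 1 vs Text 3: {sim_1_3:.3f}")
print(f"Text 1 vs Text 2: {sim_1_2:.3f}")
```

With these illustrative numbers, Text 1 scores far closer to Text 3 than to Text 2, which is the relationship a vector search relies on.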

3. Retrieval Augmented Generation (RAG)

RAG is a popular design pattern used by gen AI applications that combines search results from a vector database and the intelligence of LLMs to provide context-aware responses to the user.

Here is how it works: First, you generate and store vector embeddings for all the data that the LLM should have available as context. The embeddings are stored in a purpose-built database that supports the query patterns required for similarity search. When a user sends a query to your RAG application, a vector search is first performed against the vector database, and the search results are then provided as context to the LLM so it can return a context-aware response to the user query.
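The flow described above can be sketched end to end in a few lines. This is a toy illustration, not the application's implementation: `toy_embed` is a hypothetical stand-in that matches on shared words rather than meaning, the in-memory `store` list replaces a vector database, and the final LLM call is left out, so the sketch stops at the prompt that would be sent.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: a sparse bag-of-words vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, store, k=2):
    """Return the k stored texts most similar to the query."""
    q = toy_embed(query)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(query, context):
    """Combine the retrieved context and the user query into one prompt."""
    lines = "\n".join(f"- {c}" for c in context)
    return f"Use only this context:\n{lines}\n\nQuestion: {query}"


# Ingest: embed every document once and keep the text next to its vector.
documents = [
    "Quarterly planning review is scheduled for the first week of January",
    "Gym session every Tuesday morning",
    "Board meeting to approve first quarter budget",
]
store = [(doc, toy_embed(doc)) for doc in documents]

# Query: search first, then hand the results to the LLM as context.
query = "What is planned for the first quarter?"
context = retrieve(query, store, k=2)
prompt = build_prompt(query, context)
print(prompt)
```

In a real application, the embedding model and the vector database replace `toy_embed` and `store`, and the prompt goes to the LLM.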

Ok, now you are ready to look at the application.

Application architecture

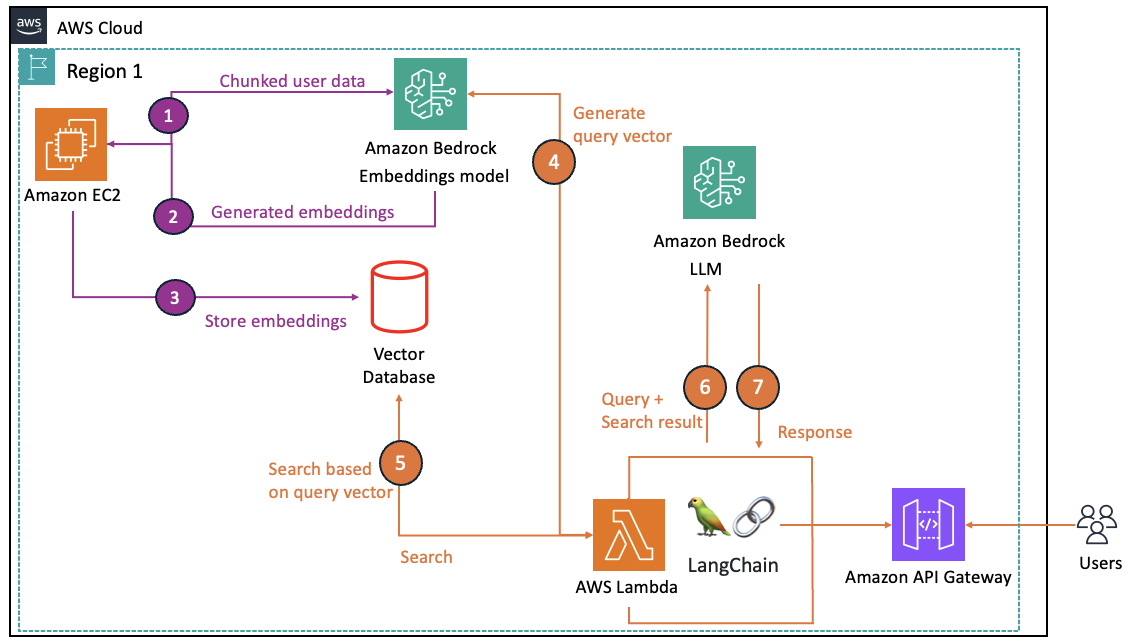

As you can see, the current application architecture implements the RAG architectural pattern.

Steps 1, 2, and 3 chunk the customers’ data (such as schedules, priorities, and to-do lists), generate embeddings, and finally persist them in the vector database.

Steps 4 and 5 perform a similarity search to identify rows relevant to the user’s query.

Steps 6 and 7 send the rows extracted along with the user query to an LLM running in Amazon Bedrock so it can return a context-aware response to the query.
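As an illustration of the chunking in step 1, here is a minimal sketch that splits text into overlapping word windows. The article does not say how the application chunks its data, so the window size, the overlap, and the `chunk_text` helper are all assumptions made for the example.

```python
def chunk_text(text, max_words=8, overlap=2):
    """Split text into windows of max_words words that share `overlap` words."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


# Hypothetical customer data, for illustration only.
schedule = (
    "Monday prepare board deck. Tuesday investor call in the morning. "
    "Wednesday review hiring plan. Thursday travel to Singapore."
)
for chunk in chunk_text(schedule):
    print(chunk)
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, at the cost of storing a few words twice.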

Now that you understand the architecture, you are ready for a discussion.

Scaling application architecture across regions

During the whiteboarding session with the technical architect, you agree on the following ideas:

To maintain the XXX-millisecond SLA, the following components must be present in each region from which customers access the application:

API gateway endpoints.

The Lambda function that uses LangChain.

Amazon Bedrock LLM.

The AI model used for generating embeddings.

You must use Route 53’s latency-based routing, together with health checks, so each user is routed to the closest healthy region and the application stays accessible even if a region goes down.

Open question: Should the whole data pre-processing pipeline and the vector database be replicated in each region? Here is why it’s important to make this decision:

The vector database is queried in the user query workflow, so a cross-region read will impact the SLA.

If you replicate the database in multiple regions, the overhead of maintaining the databases and keeping them in sync will be high.
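The routing idea from the whiteboard can be modeled in a few lines. This sketch only simulates the decision (send each user to the lowest-latency region that is still healthy); it does not call Route 53, and the latency figures and the `pick_region` helper are hypothetical.

```python
def pick_region(latencies_ms, healthy):
    """Return the healthy region with the lowest measured latency."""
    candidates = {
        region: ms for region, ms in latencies_ms.items() if healthy.get(region, False)
    }
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


# Hypothetical latencies as seen by a user in Europe.
latencies = {"us-east-2": 95.0, "eu-west-1": 18.0, "ap-south-1": 120.0}

all_up = {"us-east-2": True, "eu-west-1": True, "ap-south-1": True}
eu_down = {"us-east-2": True, "eu-west-1": False, "ap-south-1": True}

print(pick_region(latencies, all_up))   # normal operation
print(pick_region(latencies, eu_down))  # closest region is unavailable
```

The second call shows why every component in the list has to exist in each region: a user can only be redirected to a region that is able to serve the whole request.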

As a data architect, you promise to get back to the technical architect in a few days with a proposal.

Challenges scaling your current vector database

Let’s look at the nuances of the problem. The vector database that you currently use does not support multi-region implementation or read replicas. Here are a couple of options that you consider but then quickly discount:

Maintain individual and manually synced replicated databases in each region.

Idea: Spin up empty databases in Europe and Asia, do a one-time manual copy of data from the primary region, and modify the batch application pipeline so it writes embeddings to all three vector databases.

Downsides: Although the solution works, if one of the databases is down during a run, it would fall out of sync with the other two, adding tedious manual work to your plate. The pipeline would also take much longer to run.

Maintain a data loading pipeline and a corresponding database in each region.

Downsides: This option is even trickier and more expensive, as you would have to monitor multiple pipelines running in each region and synchronize them in case something goes wrong. Also, it lends itself to different data being available across regions, which would provide a poor user experience and a difficult-to-understand RAG context.
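The drift risk of writing to several independent databases is easy to reproduce in a small simulation. The `RegionalStore` class below is a hypothetical in-memory stand-in for a regional vector database, not a real client.

```python
class RegionalStore:
    """In-memory stand-in for one regional vector database."""

    def __init__(self, name):
        self.name = name
        self.available = True
        self.rows = {}

    def upsert(self, key, vector):
        if not self.available:
            raise ConnectionError(f"{self.name} is unreachable")
        self.rows[key] = vector


def fan_out_write(stores, key, vector):
    """Write one embedding to every store; return the names that failed."""
    failed = []
    for store in stores:
        try:
            store.upsert(key, vector)
        except ConnectionError:
            failed.append(store.name)
    return failed


stores = [RegionalStore("us"), RegionalStore("eu"), RegionalStore("apac")]
fan_out_write(stores, "task-1", [0.1, 0.2])

stores[1].available = False  # simulate a regional outage during the batch run
failed = fan_out_write(stores, "task-2", [0.3, 0.4])
stores[1].available = True   # the region recovers, but it never received task-2

print("failed writes:", failed)
print({store.name: sorted(store.rows) for store in stores})
```

After the outage, the recovered store is silently missing a row. Someone has to detect that and replay the write, which is exactly the manual work described above.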

Clearly, neither of the two options is great. Ideally, you need a multi-region, low-latency vector database that automatically replicates data across regions. That way, you could keep the primary pipeline in a single region, and the database replicates the data to existing and any new regions by itself. The vector database should also support the following:

Non-vector/streaming data (based on a hint from the Chief Technology Officer about an upcoming feature).

Active-active configuration, so the batch application that loads the data into the vector database can be homed in any region.

If you are like me, you pay close attention to industry trends while exploring new products. While reviewing The Forrester Wave™: Vector Databases, Q3 2024 report, you see DataStax recognized as one of the two leaders. As you explore DataStax Astra DB, a fully managed serverless database, you are impressed by features such as active-active configuration, LangChain support, and multi-region support. You also notice the promise of ultra-low latency when processing billions of vectors, and you feel comfortable exploring it further as a strong candidate. As an AWS user, you can try DataStax Astra DB in AWS Marketplace for free using your AWS account. Going through AWS Marketplace also lets you integrate it into your AWS environment, with consumption-based billing through AWS alongside the rest of your application infrastructure.

How DataStax Astra DB supports your multi-region use case

Here is how you go about your quick proof of concept (POC) with DataStax Astra DB.

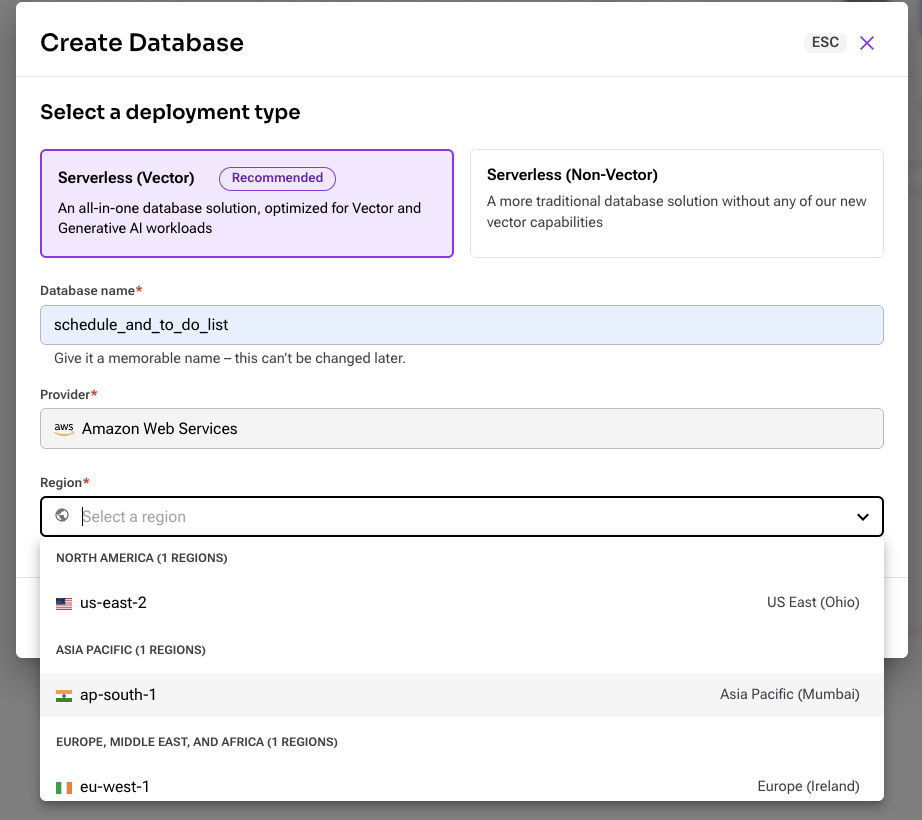

Step 1: Create multi-region database

First, you create a database. You choose the us-east-2 (Ohio) region as the primary region.

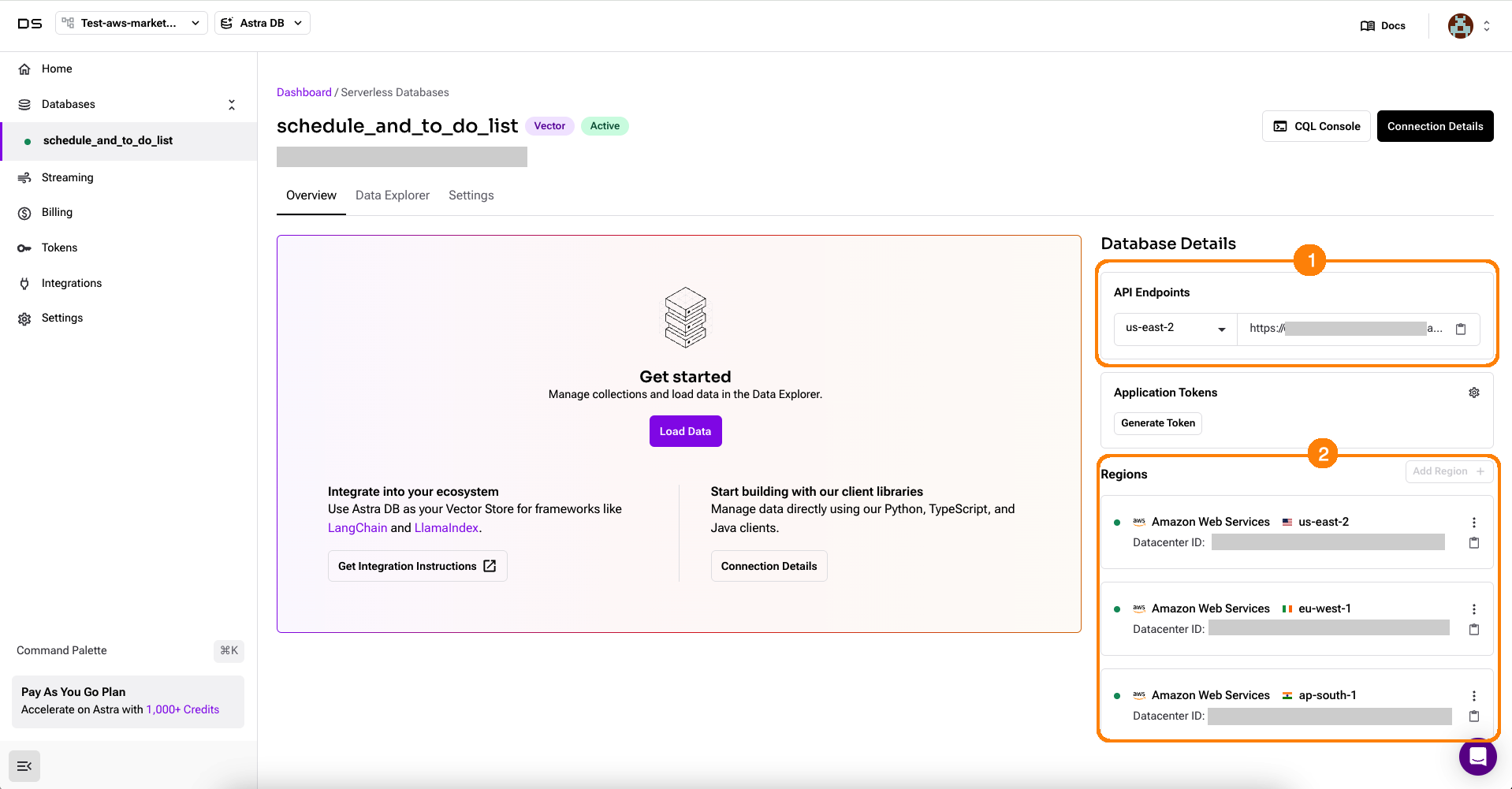

Next, you add two new regions (Europe and Asia Pacific) to the database, converting it from a single-region database to a multi-region one. Each region comes with an API endpoint you can connect to. In section 2 in the following screenshot, you can see all three regions of the database.

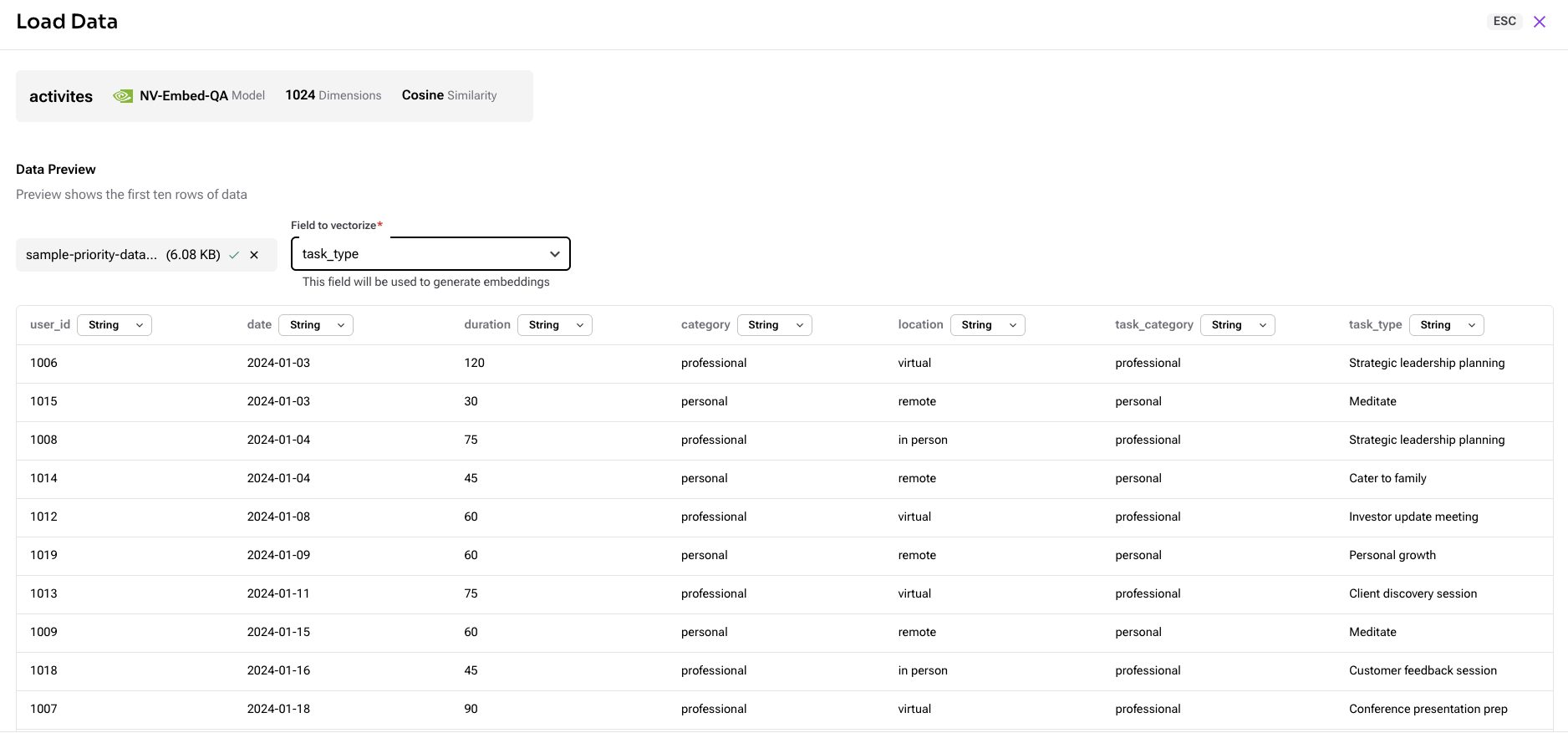

Step 2: Load data

Next, you load data into the table using the Astra DB console. The console provides an easy way to choose an NVIDIA embedding model, which you can use to generate embeddings on the fly. Since it’s a quick POC, you choose to use the NVIDIA embedding model.

Step 3: Do a similarity search

You write the following Python code to do a simple vector search.

```python
import time

from astrapy import DataAPIClient

# Connect with an application token and the API endpoint of the region under test.
client = DataAPIClient("<token>")
db = client.get_database_by_api_endpoint("<Endpoint>")

query = "suggest activities for first quarter"

start_time = time.perf_counter()
# find() returns a cursor; list() consumes it so that the timing covers
# fetching the results, not just building the request.
results = list(
    db.get_collection("activities").find(
        sort={"$vectorize": query},
        limit=20,
        projection={"$vectorize": True},
        include_similarity=True,
    )
)
end_time = time.perf_counter()

print("Time taken by vector search:", end_time - start_time, "seconds")
```

Next, you decide to run the code in AWS CloudShell. First, you install the astrapy package, and then you execute the Python code. The code returns relevant results and prints the following latencies when executed from three different AWS Regions.

| S. No | AWS Region | Latency |
| --- | --- | --- |
| 1 | us-east-2 | 71.44 milliseconds |
| 2 | eu-west-1 | 90.21 milliseconds |
| 3 | ap-south-1 | 74.39 milliseconds |

In short, if you use multi-region tables from Astra DB, you get the response back in milliseconds.
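A single timing can be skewed by connection setup or network jitter, so for anything beyond a quick POC it may be worth repeating the measurement. Here is a minimal sketch of such a harness; `measure_latency_ms` and `summarize` are hypothetical helpers, and the `time.sleep` call is a placeholder for the vector search, which should include fetching the results.

```python
import statistics
import time


def measure_latency_ms(operation, runs=10):
    """Call operation() `runs` times and return each latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def summarize(samples):
    """Return the median and the maximum of the collected samples."""
    return {"median_ms": statistics.median(samples), "max_ms": max(samples)}


# Placeholder workload; in the POC this would be the vector search call.
samples = measure_latency_ms(lambda: time.sleep(0.005), runs=5)
print(summarize(samples))
```

Running the same harness from each AWS Region gives figures that are easier to compare than one-off measurements.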

To summarize, the database takes care of replicating data and keeping the data in different regions in sync. This means that you won’t need to replicate the data loading pipeline. Each regional Lambda function will connect to the local endpoint of the vector database and retrieve relevant results (in milliseconds) before sending them to the LLM running in the local region. This is great!
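One way each regional Lambda function could find its local database endpoint is to look it up by the Region it runs in. The sketch below assumes a hard-coded mapping with placeholder endpoint strings and a fallback to the primary region; `REGIONAL_ENDPOINTS` and `endpoint_for` are hypothetical names, not part of any SDK.

```python
import os

# Hypothetical placeholders: replace each value with the API endpoint that
# the database shows for that region.
REGIONAL_ENDPOINTS = {
    "us-east-2": "<us-east-2 endpoint>",
    "eu-west-1": "<eu-west-1 endpoint>",
    "ap-south-1": "<ap-south-1 endpoint>",
}


def endpoint_for(region, endpoints=REGIONAL_ENDPOINTS, fallback_region="us-east-2"):
    """Return the endpoint for the region, or the fallback region's endpoint."""
    return endpoints.get(region, endpoints[fallback_region])


# AWS Lambda sets AWS_REGION to the Region where the function is running.
current_region = os.environ.get("AWS_REGION", "us-east-2")
print(endpoint_for(current_region))
```

In practice, the mapping would more likely live in per-region configuration or environment variables than in code, so that adding a region does not require a code change.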

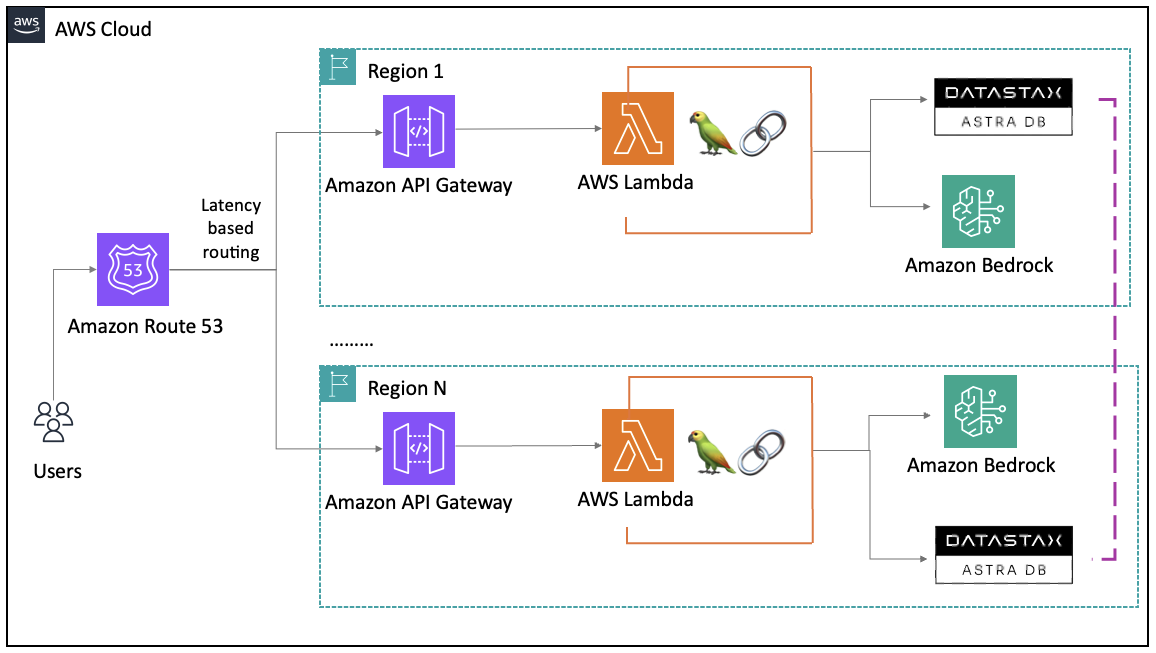

You and the technical architect decide to use DataStax Astra DB as the vector database for the application. Here is the final architecture diagram you and the technical architect decide to implement.

Key takeaways

Congratulations, you just learned how DataStax Astra DB can help scale your gen AI application globally by providing multi-region vector database capabilities! With active-active configuration and automatic data replication across geographical regions, you can maintain ultra-low latency responses for your global users.

Here are some key takeaways to help get you started on your own projects:

Try DataStax Astra DB in AWS Marketplace for free and evaluate how it fits your gen AI application needs.

Use Astra DB's built-in NVIDIA embedding models during development to accelerate your POC before implementing custom embedding generation.

Take advantage of Astra DB's LangChain integration to connect the database seamlessly with your existing implementation.

Implement proper monitoring and alerting across regions to ensure consistent performance globally.
