With large volumes of sensitive information flowing through the typical enterprise, maintaining robust security is essential for regulatory compliance and business continuity. As organizations scale their cloud infrastructure, they face a two-pronged need: implementing proper access controls and ensuring comprehensive malware protection. The right access controls expose data only to authorized users, limiting exposure to bad actors and to unintentional user mistakes. Preventive controls against malware help protect your data from breaches, system disruptions, and the financial losses that follow. Addressing both aspects makes your cloud environment more secure. It also helps you implement the controls needed for certifications such as ISO 27001 when your organization is ready to apply for one. In this article, you will learn how to strengthen the security and privacy of your data lakes using Antivirus for Amazon S3 by Cloud Storage Security.

While Amazon S3 provides robust native security features, organizations often need additional layers of protection to meet specific compliance requirements. Cloud Storage Security Antivirus for Amazon S3 in AWS Marketplace extends these capabilities with advanced antivirus scanning and malware protection, creating a comprehensive security solution that's easy to implement and manage.

Understanding data security requirements

Imagine you're a DevSecOps engineer in a data management group at an enterprise organization. Your team is responsible for managing and protecting the organization’s data lake, including live and archived data. The live data includes data flowing in from different lines of business. It also includes large, non-sensitive in-house data files (often up to two terabytes each) used to build custom, business-specific machine learning (ML) models. As the data becomes stale, Amazon S3 lifecycle policies trigger an automation that compresses datasets into three-terabyte chunks and archives them. Your organization has been preparing for ISO 27001 certification. Your leadership has asked you to implement best practices for access control and for virus and malware protection of your data.
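The archival step in this scenario ends with an S3 lifecycle rule. The sketch below expresses that rule in the shape boto3's `put_bucket_lifecycle_configuration` accepts. It makes two assumptions: the compressed chunks land under a hypothetical `archive/` prefix, and they should move to the S3 Glacier Deep Archive storage class after a hypothetical 90 days. The compression itself is a separate automation, because lifecycle rules only transition or expire objects.

```python
import json


def build_archive_lifecycle(prefix: str = "archive/", days: int = 90) -> dict:
    """Build a lifecycle configuration that archives objects under `prefix`.

    The dict matches the LifecycleConfiguration parameter of boto3's
    s3.put_bucket_lifecycle_configuration. The prefix and day count are
    illustrative assumptions, not values from the article.
    """
    return {
        "Rules": [
            {
                "ID": "archive-stale-datasets",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Transition the compressed chunks to the lowest-cost archival class.
                "Transitions": [{"Days": days, "StorageClass": "DEEP_ARCHIVE"}],
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(build_archive_lifecycle(), indent=2))
```

You would apply it with a call such as `s3.put_bucket_lifecycle_configuration(Bucket="example-data-lake", LifecycleConfiguration=build_archive_lifecycle())`, where the bucket name is a placeholder.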

Regulatory compliance requires appropriate access controls, so that data is available only to authorized users on a need-to-know basis. Stricter controls must apply to more sensitive data, such as financial data or any data that could reveal a user’s identity. Access controls protect data confidentiality and integrity while keeping data available to the right users. To implement them, you take three steps:

1. Classify your data.
2. Define roles based on job functions and responsibilities.
3. Identify what access each role needs.

Finally, you grant access using one or more of the following patterns:

- Access control lists (ACLs)
- Role-based access control (RBAC)
- For highly sensitive data, multi-factor authentication (MFA) combined with attribute-based access control (ABAC)
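Here is a sketch of an identity-based IAM policy that makes the ABAC-plus-MFA pattern concrete. It assumes that objects and principals both carry a tag with the hypothetical key `department`. The policy allows `s3:GetObject` only when two conditions hold: the object's tag value matches the caller's principal tag, and the session was authenticated with MFA. The bucket name and tag key are illustrative.

```python
import json


def abac_read_policy(bucket: str, tag_key: str = "department") -> dict:
    """Allow reading an object only when the object's tag matches the
    caller's principal tag and the request used MFA (illustrative sketch)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadObjectsWithMatchingTag",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": {
                    # ABAC: the object's tag must equal the caller's tag value,
                    # resolved at request time through an IAM policy variable.
                    "StringEquals": {
                        f"s3:ExistingObjectTag/{tag_key}": f"${{aws:PrincipalTag/{tag_key}}}"
                    },
                    # Highly sensitive data: require an MFA-authenticated session.
                    "Bool": {"aws:MultiFactorAuthPresent": "true"},
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(abac_read_policy("example-data-lake"), indent=2))
```

Because access follows tags rather than per-user grants, onboarding a new team member means tagging their role. You do not need to edit the policy.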

Implementing AWS access controls

The AWS Well-Architected Framework describes security best practices, and AWS provides features that help you implement them. Here are some best practices you can apply to move your data lake closer to compliance:

General best practices

Principle of least privilege. Under this principle, users are granted only the minimum access they need to perform specific actions on specific resources under specific conditions. Rather than granting permissions to individuals one by one, use IAM roles, groups, and granular policies to manage permissions at scale. Each user gets access to everything they need, and nothing more.
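The following sketch illustrates least privilege by building a read-only policy scoped to one prefix of one bucket. The bucket `example-data-lake` and the prefix `marketing/` are hypothetical. You would attach the resulting document to an IAM group or role rather than to individual users, for example with `iam.create_policy(PolicyName=..., PolicyDocument=json.dumps(policy))`.

```python
import json


def least_privilege_read_policy(bucket: str, prefix: str) -> dict:
    """Read-only access to a single prefix of a single bucket."""
    prefix = prefix.rstrip("/") + "/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is allowed only for keys under the team's prefix.
                "Sid": "ListTeamPrefixOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                # Objects can be read, but not written or deleted.
                "Sid": "ReadTeamPrefixOnly",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(least_privilege_read_policy("example-data-lake", "marketing"), indent=2))
```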

Multi-factor authentication. Enforce multi-factor authentication (MFA) throughout the organization. This is especially important for ensuring authorized access to sensitive data.
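One way to enforce MFA for sensitive data in S3 is a bucket policy that denies requests made without MFA. This sketch assumes sensitive objects live under a hypothetical `restricted/` prefix. Because the `Deny` applies to every principal, it would also block automation roles that cannot use MFA. Scope the prefix carefully before you apply the policy with `s3.put_bucket_policy(Bucket=..., Policy=json.dumps(policy))`.

```python
import json


def deny_without_mfa(bucket: str, sensitive_prefix: str) -> dict:
    """Bucket policy that denies all S3 actions on a sensitive prefix
    unless the request was made with MFA-authenticated credentials."""
    prefix = sensitive_prefix.rstrip("/") + "/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenySensitiveAccessWithoutMFA",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
                # BoolIfExists also denies requests where the MFA key is absent,
                # such as calls signed with long-term access keys.
                "Condition": {"BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(deny_without_mfa("example-data-lake", "restricted"), indent=2))
```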

Amazon S3 best practices

Credentials. Never use AWS account root user credentials for S3 access.

Use bucket policies and review access regularly. Use S3 Block Public Access and bucket policies to prevent public access and to define granular permissions for users. Review S3 access regularly, and revoke IAM permissions that are no longer in use.
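The sketch below covers both halves of this practice. The first part is the configuration that turns on all four S3 Block Public Access settings, passed as the `PublicAccessBlockConfiguration` parameter of boto3's `put_public_access_block`. The second part is a hypothetical access-review helper that flags grants unused for more than 90 days. The helper only takes a mapping from names to timestamps. Where those last-used timestamps come from (for example, IAM last-accessed information) is an assumption left to you.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

# Shape of the PublicAccessBlockConfiguration parameter of
# boto3's s3.put_public_access_block, with all four protections on.
PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def stale_grants(
    last_used: Dict[str, Optional[datetime]],
    now: datetime,
    max_idle_days: int = 90,
) -> List[str]:
    """Return grants never used, or unused for more than `max_idle_days`.

    `last_used` maps a role, group, or user name to its last-access time
    (None if never used). The result is sorted for stable review output.
    """
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(name for name, ts in last_used.items() if ts is None or ts < cutoff)


if __name__ == "__main__":
    print(stale_grants({"never-used-role": None}, datetime.now(timezone.utc)))
```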

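Enable S3 server access logging. Server access logs record detailed information about the requests made to your S3 buckets. Log delivery is best effort, so use AWS CloudTrail data events when you need a more reliable audit record of object-level API calls.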
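The following minimal sketch does two things. It builds the `BucketLoggingStatus` parameter for boto3's `put_bucket_logging`, and it pulls `AccessDenied` entries out of delivered log lines. The parser reads only the leading space-delimited fields of the S3 server access log format and ignores the rest. Those fields are owner, bucket, time, remote IP, requester, request ID, operation, key, request URI, HTTP status, and error code. The bucket names and the `s3-access-logs/` prefix are hypothetical.

```python
import re
from typing import Dict, Iterable, List


def access_logging_status(target_bucket: str, source_bucket: str) -> dict:
    """BucketLoggingStatus parameter for boto3's s3.put_bucket_logging."""
    return {
        "LoggingEnabled": {
            "TargetBucket": target_bucket,
            # One prefix per source bucket keeps the logs easy to separate.
            "TargetPrefix": f"s3-access-logs/{source_bucket}/",
        }
    }


# Leading fields of an S3 server access log record; trailing fields are ignored.
LOG_RECORD = re.compile(
    r'(?P<owner>\S+) (?P<bucket>\S+) \[(?P<time>[^\]]+)\] (?P<remote_ip>\S+) '
    r'(?P<requester>\S+) (?P<request_id>\S+) (?P<operation>\S+) (?P<key>\S+) '
    r'"(?P<request_uri>[^"]*)" (?P<status>\S+) (?P<error_code>\S+)'
)


def denied_requests(lines: Iterable[str]) -> List[Dict[str, str]]:
    """Return the parsed fields of every record whose error code is AccessDenied."""
    hits = []
    for line in lines:
        match = LOG_RECORD.match(line)
        if match and match.group("error_code") == "AccessDenied":
            hits.append(match.groupdict())
    return hits
```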

Establish monitoring and alarms. Use Amazon CloudWatch to set up alarms that alert you to unauthorized access attempts or other suspicious S3 activity.
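One common way to alarm on unauthorized S3 access is to pair a CloudWatch Logs metric filter over AWS CloudTrail events with a CloudWatch alarm. This sketch assumes three things are already in place:

- CloudTrail delivers to a log group. The name used here is hypothetical.
- S3 data events are enabled, so object-level calls are recorded.
- An Amazon SNS topic exists for notifications.

The function returns keyword arguments for `logs.put_metric_filter` and `cloudwatch.put_metric_alarm`. The threshold and the five-minute period are illustrative.

```python
from typing import Tuple


def s3_access_denied_alarm(log_group: str, sns_topic_arn: str, threshold: int = 5) -> Tuple[dict, dict]:
    """Build a metric filter counting S3 AccessDenied events and an alarm on it."""
    namespace = "DataLake/Security"  # hypothetical custom namespace
    metric = "S3AccessDenied"
    metric_filter = {
        "logGroupName": log_group,
        "filterName": "s3-access-denied",
        # Match CloudTrail records for S3 calls that were rejected.
        "filterPattern": '{ ($.eventSource = "s3.amazonaws.com") && ($.errorCode = "AccessDenied") }',
        "metricTransformations": [
            {"metricName": metric, "metricNamespace": namespace, "metricValue": "1"}
        ],
    }
    alarm = {
        "AlarmName": "s3-access-denied-spike",
        "Namespace": namespace,
        "MetricName": metric,
        "Statistic": "Sum",
        "Period": 300,  # five-minute buckets
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no events means no alarm
        "AlarmActions": [sns_topic_arn],
    }
    return metric_filter, alarm
```

Usage would look like `logs.put_metric_filter(**metric_filter)` followed by `cloudwatch.put_metric_alarm(**alarm)`.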

Data encryption. Encrypt data at rest with server-side encryption, using Amazon S3 managed keys (SSE-S3), AWS Key Management Service keys (SSE-KMS), or your own customer-provided keys (SSE-C).
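For example, you can set default bucket encryption with boto3's `put_bucket_encryption`. This sketch builds its `ServerSideEncryptionConfiguration` parameter. It selects SSE-KMS when you pass a KMS key ARN (a placeholder here) and SSE-S3 otherwise. SSE-C keys are supplied per request and cannot be a bucket default, so this sketch does not cover them.

```python
from typing import Optional


def default_encryption(kms_key_arn: Optional[str] = None) -> dict:
    """ServerSideEncryptionConfiguration for boto3's s3.put_bucket_encryption.

    With a KMS key ARN, objects default to SSE-KMS, with an S3 Bucket Key
    enabled to reduce KMS request volume. Otherwise they default to SSE-S3 (AES256).
    """
    if kms_key_arn:
        rule = {
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": kms_key_arn,
            },
            "BucketKeyEnabled": True,
        }
    else:
        rule = {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
    return {"Rules": [rule]}
```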

AWS Lake Formation

AWS Lake Formation provides centralized security controls and simplifies the process of setting up a secure data lake. It enables fine-grained access control at the column, row, and cell levels, while offering comprehensive audit capabilities. When combined with Cloud Storage Security's antivirus protection, you create a robust security framework that addresses both access control and threat prevention requirements.
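Here is a sketch of column-level access in Lake Formation. It builds keyword arguments for boto3's `lakeformation.grant_permissions` and grants `SELECT` on one of two scopes: an explicit column list, or all columns except excluded ones. The principal ARN, database, table, and column names are hypothetical.

```python
from typing import Iterable, Optional


def column_select_grant(
    principal_arn: str,
    database: str,
    table: str,
    *,
    include: Optional[Iterable[str]] = None,
    exclude: Optional[Iterable[str]] = None,
) -> dict:
    """Keyword arguments for lakeformation.grant_permissions (column-level SELECT)."""
    if (include is None) == (exclude is None):
        raise ValueError("pass exactly one of include or exclude")
    table_with_columns = {"DatabaseName": database, "Name": table}
    if include is not None:
        # Grant only the listed columns.
        table_with_columns["ColumnNames"] = list(include)
    else:
        # Grant every column except the sensitive ones.
        table_with_columns["ColumnWildcard"] = {"ExcludedColumnNames": list(exclude)}
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {"TableWithColumns": table_with_columns},
        "Permissions": ["SELECT"],
    }
```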

These controls help ensure the right people have the right access, which mitigates certain internal risks. You still need to protect data from external threats as well. External bad actors often try to introduce malware into an organization to gain privileged access to enterprise data or to users’ devices, which can lead to exfiltration of proprietary information. Once malware enters a system, it can cause data breaches, system disruptions, and financial losses.

Remember the division of duties under the AWS shared responsibility model. AWS is responsible for security of the cloud, including the underlying infrastructure. You are responsible for security in the cloud, including protecting the data in your S3-based data lake.

Implementing malware protection

Regulatory compliance requires organizations to implement malware protection that safeguards the confidentiality, integrity, and availability of data. Important compliance-related requirements include:

- Virus scanning on upload.
- Scheduled scans of existing data.
- Versioning to protect against accidental or malicious deletion or modification of files.
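Versioning is what makes recovery from a malicious overwrite possible. The minimal sketch below assumes you have already fetched the `Versions` list from boto3's `list_object_versions`. You enable versioning with `put_bucket_versioning`, then use the hypothetical helper `previous_version_id` to find the version that preceded the current one so you can restore it.

```python
from datetime import datetime
from typing import List, Optional

# Shape of the VersioningConfiguration parameter of boto3's s3.put_bucket_versioning.
VERSIONING_ENABLED = {"Status": "Enabled"}


def previous_version_id(versions: List[dict], key: str) -> Optional[str]:
    """Return the VersionId that preceded the newest version of `key`.

    `versions` is the "Versions" list from s3.list_object_versions. Each entry
    has at least "Key", "VersionId", and "LastModified" (a datetime).
    Returns None when the key has fewer than two versions.
    """
    history = sorted(
        (v for v in versions if v["Key"] == key),
        key=lambda v: v["LastModified"],
        reverse=True,  # newest first
    )
    return history[1]["VersionId"] if len(history) > 1 else None


if __name__ == "__main__":
    demo = [
        {"Key": "data/a.csv", "VersionId": "v1", "LastModified": datetime(2024, 1, 1)},
        {"Key": "data/a.csv", "VersionId": "v2", "LastModified": datetime(2024, 2, 1)},
    ]
    print(previous_version_id(demo, "data/a.csv"))
```

To restore that version, you could copy it over the current one with `s3.copy_object(Bucket=bucket, Key=key, CopySource={"Bucket": bucket, "Key": key, "VersionId": version_id})`.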

Amazon S3 lets you enable versioning at the bucket level. However, to protect your data from malware, you also need a tool that lets you:

Perform real-time scanning for individual files before they are uploaded.

Schedule scans so they automatically scan (and rescan) existing files.

Implement a “two bucket system” so that only clean data enters production buckets.

Use multiple scanning engines for more comprehensive coverage, because each engine is different and no single engine detects every threat.

The tool must also meet typical enterprise requirements, such as multi-account scanning and scanning of files up to five terabytes (the maximum object size Amazon S3 allows), while ensuring that data never leaves the AWS Region it resides in.

Cloud Storage Security Antivirus for Amazon S3

Antivirus for Amazon S3 is a good fit for these requirements, offering a comprehensive solution for malware protection. The solution uses three industry-leading scanning engines, including Sophos and ClamAV. It provides flexible scanning options: scheduled, event-based, and API-based (which can scan files before they are written). A key feature is its built-in two-bucket scanning configuration, which quarantines infected files and passes only clean data to the destination or production environment. The solution extends beyond Amazon S3 to protect other AWS storage services, including Amazon FSx, Amazon Elastic File System (Amazon EFS), and Amazon Elastic Block Store (Amazon EBS).

Its dynamic analysis functionality also provides detailed reporting on malware behavior. The reports show the exact IP addresses the threat attempts to connect to, which directories it targets for writing, and which commands it tries to execute.

If the product fits your needs, you can subscribe to Antivirus for Amazon S3 in AWS Marketplace. It offers a 30-day free trial and is delivered as a container.

After you subscribe, install the antivirus software in your AWS account by choosing the link that launches the AWS CloudFormation template. The CSS Public Deployment—Antivirus for Amazon S3 how-to video explains the deployment process.

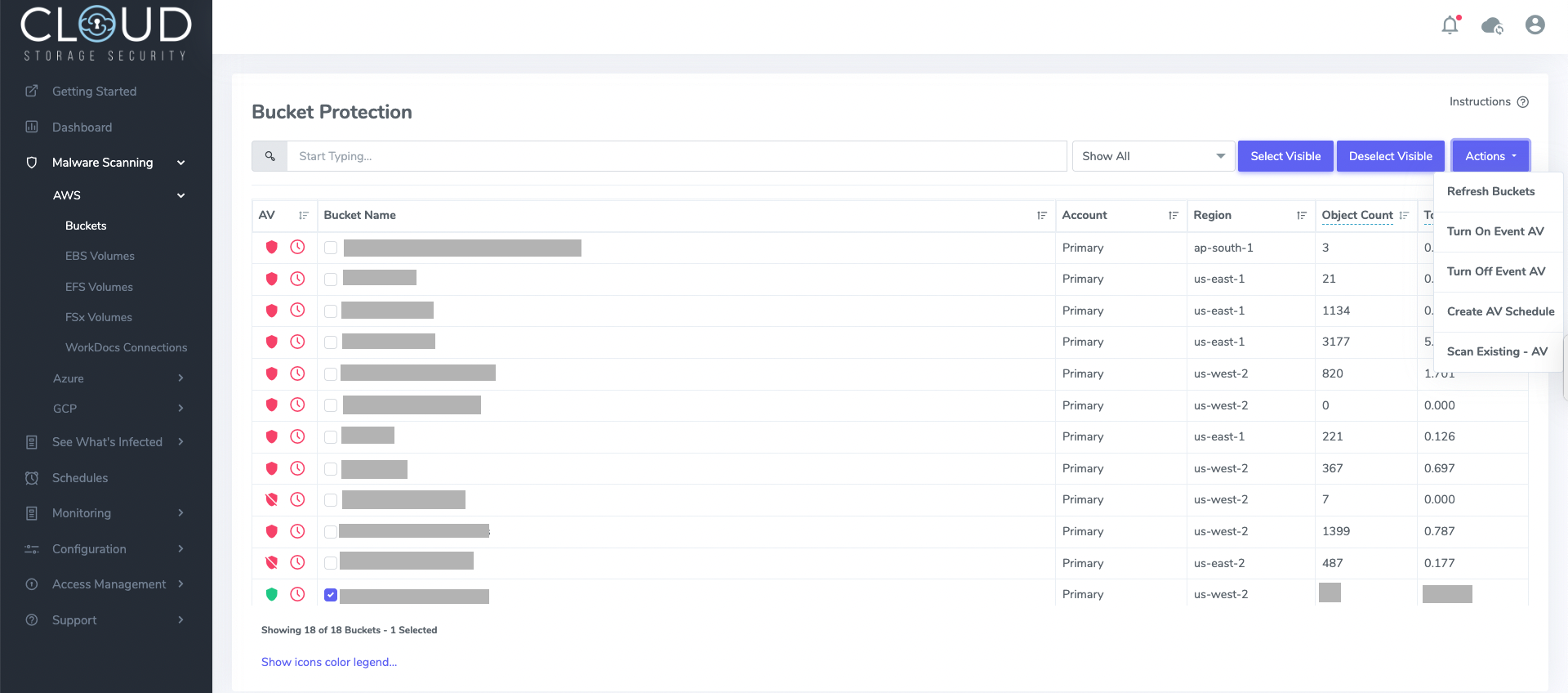

The AWS CloudFormation template performs the initial configuration for you, so when you log in, you can see the overall status of your Amazon S3 buckets. You can select one or more buckets and turn event-based scanning ON. From that point onward, files written to those buckets are scanned automatically. You can also run scans on existing data so that it is scanned as well.

You can customize the scanning process from the configuration screen. Here are some of the scan settings you can configure:

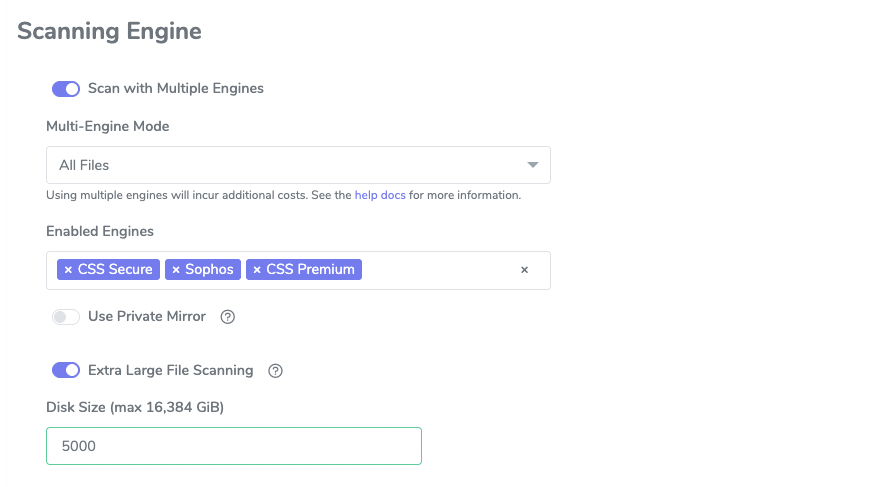

1. Multi-engine scanning

You can scan all files with multiple engines, or you can choose specific engines for small and large files. Scanning with multiple engines improves detection accuracy, and assigning engines by file size helps optimize performance. If your data lake contains large files, you can also configure Extra Large File Scanning. It scans files up to 5 TB, the largest single object size Amazon S3 allows.
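Here is a hypothetical routing function that illustrates assigning engines by file size. The engine names and the size threshold are placeholders, not the product's actual engines or defaults. The product applies its own logic internally. This sketch only shows the trade-off between broad coverage on small files and throughput on large ones, and it treats the 5 TB limit as 5 TiB.

```python
from typing import List, Sequence

# Upper bound from the text (5 TB per object), expressed here as 5 TiB.
MAX_OBJECT_BYTES = 5 * 1024**4


def engines_for(
    size_bytes: int,
    small_file_engines: Sequence[str],
    large_file_engines: Sequence[str],
    large_file_threshold: int,
) -> List[str]:
    """Pick the scanning engines for an object based on its size (illustrative)."""
    if not 0 <= size_bytes <= MAX_OBJECT_BYTES:
        raise ValueError(f"size out of range: {size_bytes}")
    if size_bytes < large_file_threshold:
        # Small files: run every configured engine for the broadest coverage.
        return list(small_file_engines)
    # Large files: use only the engines configured for big objects.
    return list(large_file_engines)
```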

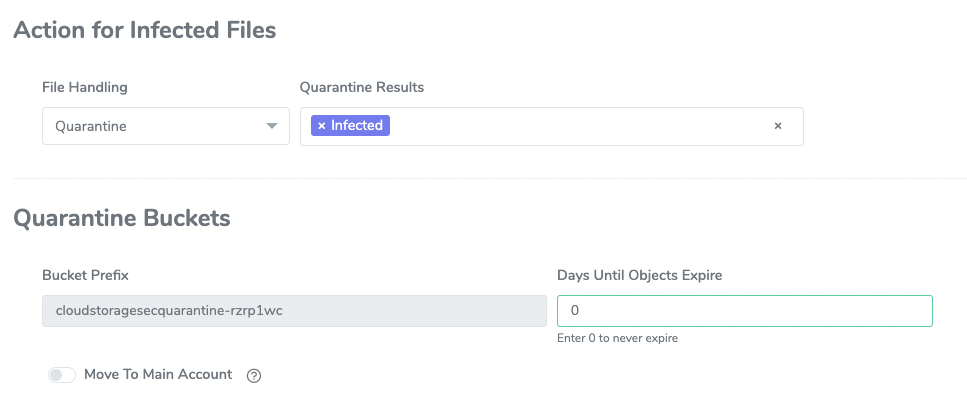

2. Infected file handling

You can handle infected files in one of three ways:

1. Delete the file.
2. Quarantine the file (move it into another bucket designated as the quarantine bucket).
3. Keep the file in place.

Regardless of the handling option you select, files are still tagged according to your tagging configuration, as described in the section below.

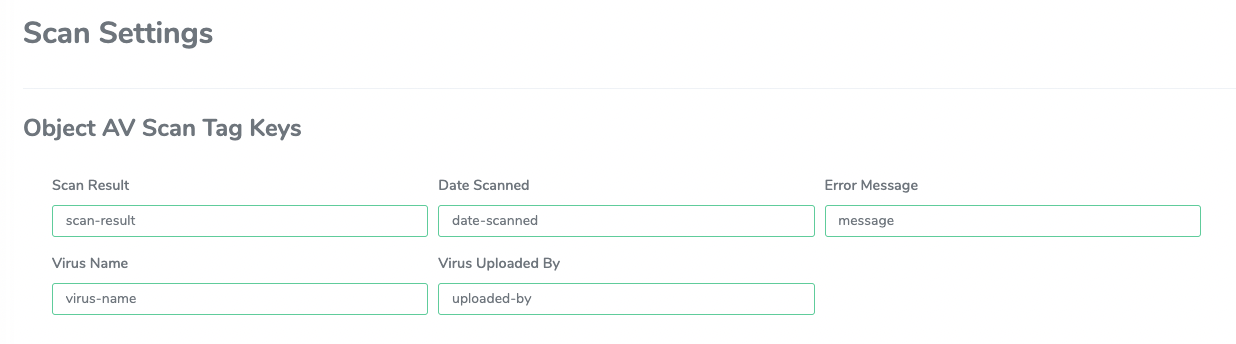

3. Associating scan output with an S3 object via tag

Your application might need to know whether a file is clean before it begins processing it. As part of the scan settings, you can configure tags that the product automatically associates with the S3 object after the scan.
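Downstream code can gate processing on that tag. The minimal sketch below reads the `TagSet` list returned by boto3's `get_object_tagging`. It assumes a hypothetical tag key `scan-result` with the value `Clean`; use whatever key and values you configure in the scan settings. Anything other than an explicit clean result is treated as not clean.

```python
from typing import Dict, List


def is_clean(tag_set: List[Dict[str, str]], result_key: str = "scan-result", clean_value: str = "Clean") -> bool:
    """Return True only if the object's scan-result tag says it is clean.

    `tag_set` is the "TagSet" list returned by s3.get_object_tagging.
    Missing or unexpected tags are treated as not clean (fail closed).
    """
    tags = {t["Key"]: t["Value"] for t in tag_set}
    return tags.get(result_key) == clean_value
```

A consumer would call `is_clean(s3.get_object_tagging(Bucket=bucket, Key=key)["TagSet"])` and skip or requeue the object when it returns `False`.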

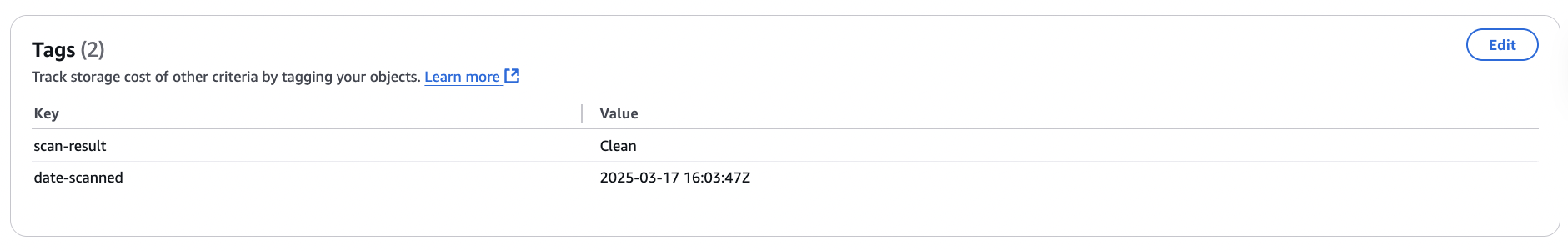

Here is the scan result for an object that Antivirus for Amazon S3 scanned as part of an event-based scan on one of the protected S3 buckets. The object was found to be clean.

Note: If you want to scan the file before you upload it into the S3 bucket, you can use API-driven scanning functionality.

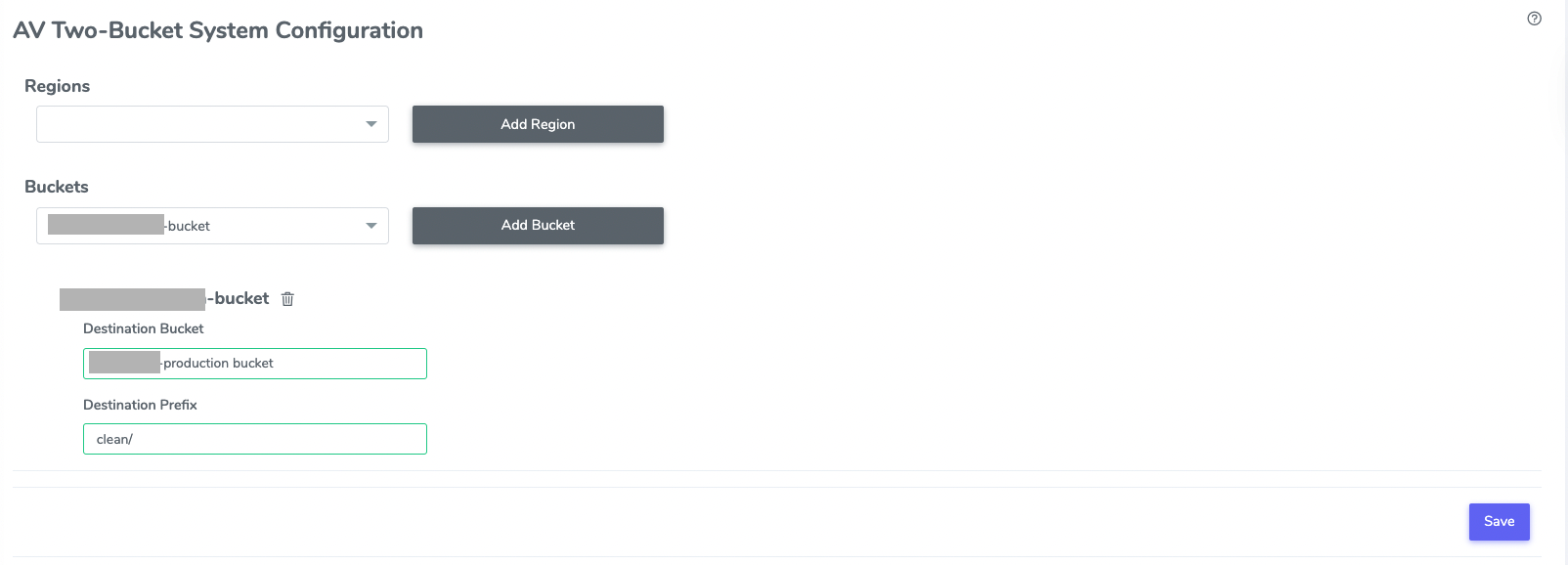

4. Two-bucket system configuration

Some environments, such as user acceptance testing (UAT) and production, must be kept clean and should never receive an infected file. The two-bucket system configuration option lets you designate one bucket as the source (dirty) bucket and another as the destination (clean) bucket. Antivirus for Amazon S3 then automatically moves only clean files from the source to the destination bucket. Here is what a bucket-level configuration that moves only clean data from a dirty bucket to a clean bucket looks like.
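The routing decision behind a two-bucket setup can be sketched as a small function. The product performs this move itself. The sketch below only illustrates fail-closed routing and assumes the hypothetical verdict strings `clean` and `infected`:

- Clean objects go to the destination bucket.
- Infected objects go to a quarantine bucket, if one is configured.
- Anything else stays in the source bucket for review.

```python
from typing import Optional


def destination_for(verdict: str, clean_bucket: str, quarantine_bucket: Optional[str]) -> Optional[str]:
    """Decide where a scanned object from the source (dirty) bucket should go.

    - "clean"    -> the destination (clean) bucket
    - "infected" -> the quarantine bucket, if one is configured
    - anything else (scan error, unscannable file) -> None: leave the object
      in the source bucket for review rather than promote it.
    """
    v = verdict.strip().lower()
    if v == "clean":
        return clean_bucket
    if v == "infected":
        return quarantine_bucket
    return None
```

Returning `None` for unknown verdicts is the key design choice. It ensures that a scan failure can never promote an unverified file into a clean environment.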

When you upload a file to a source bucket with event-based scanning enabled, the file is automatically scanned and moved into the destination bucket when found clean.

Furthermore, you can configure proactive notifications to get notified about important scan and scan result events. Antivirus for Amazon S3 also integrates with your existing AWS security implementations. For example, with a simple toggle, you can enable AWS Security Hub integration, which starts pushing your Amazon S3 antivirus scan findings into your AWS Security Hub console.

You just learned how to use Antivirus for Amazon S3 to run a retro scan on existing data and to automate event-based scanning of new data. You also learned how to set up a two-bucket system so that only clean data reaches your clean buckets.

Conclusion and key takeaways

By implementing these security controls and adding Cloud Storage Security antivirus protection from AWS Marketplace, you can create a comprehensive security framework that meets compliance requirements while protecting your data from both internal and external threats. The solution's integration with native AWS services ensures seamless deployment and management. Here are some key takeaways to help you get started on improving the security posture of your architecture using Amazon S3:

Implement access controls so that data is available only to authorized users on a need-to-know basis.

Use the Antivirus for Amazon S3 free trial to try different features and perform tests to ensure it meets your edge-case requirements as well.

Configure scan settings to ensure you use multiple engines and appropriate actions for dealing with infected files.

Set up event-based scanning so new files are scanned automatically, and then run a retro scan so your existing data is scanned as well.
