Why Amazon SageMaker?

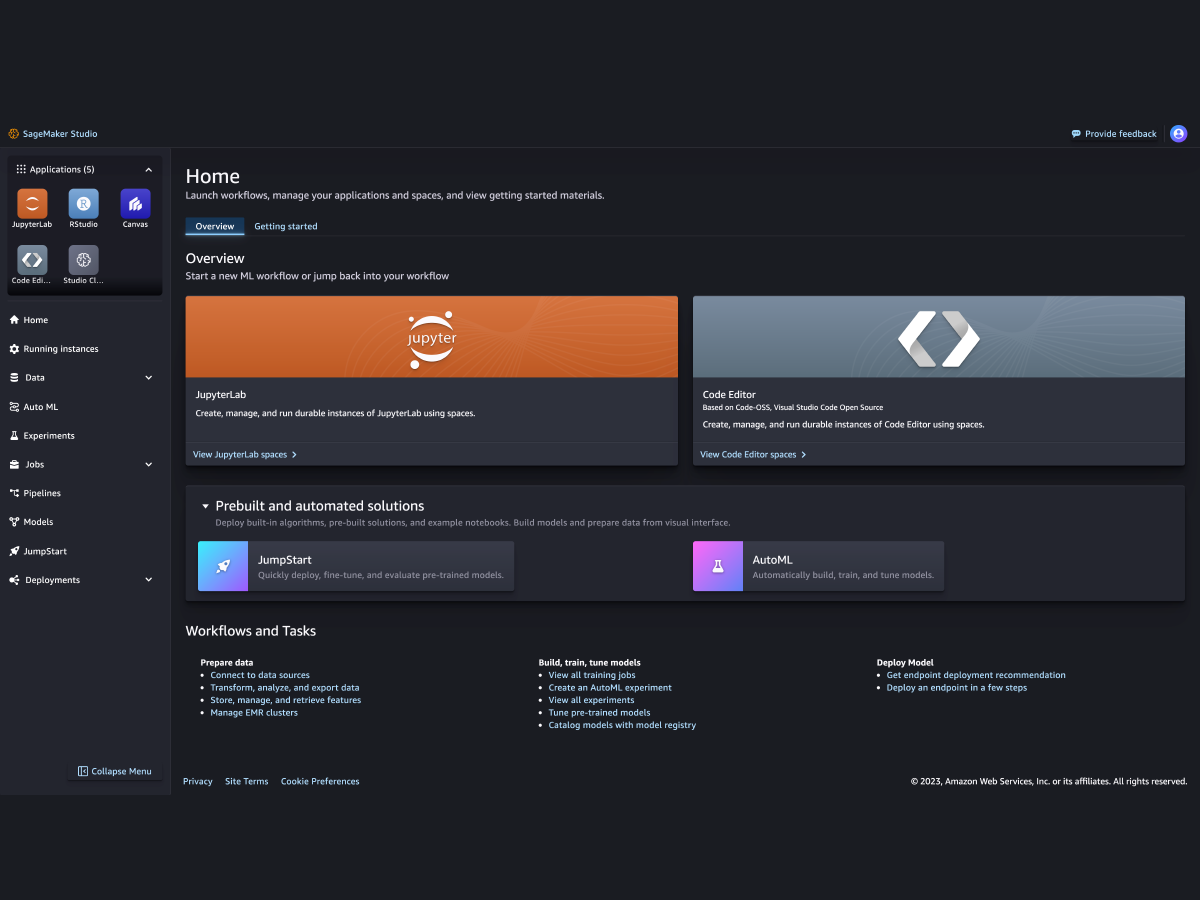

Amazon SageMaker is a fully managed service that brings together a broad set of tools to enable high-performance, low-cost machine learning (ML) for any use case. With SageMaker, you can build, train, and deploy ML models at scale using notebooks, debuggers, profilers, pipelines, MLOps, and other tools, all in one integrated development environment (IDE). SageMaker supports governance requirements with simplified access control and transparency over your ML projects. In addition, you can build your own foundation models (FMs), which are large models trained on massive datasets, using purpose-built tools to fine-tune, experiment with, retrain, and deploy them. SageMaker also offers access to hundreds of pretrained models, including publicly available FMs, that you can deploy with just a few clicks.

Benefits of SageMaker

Enable more people to innovate with ML

- Business analysts: Make ML predictions using a visual interface with SageMaker Canvas.
- Data scientists: Prepare data and build, train, and deploy models with SageMaker Studio.
- ML engineers: Deploy and manage models at scale with SageMaker MLOps.

Support for the leading ML frameworks, toolkits, and programming languages