Module 3: .NET on AWS Lambda

LEARNING MODULE

Please note, you may follow along with the examples presented here, but you do not need to.

AWS Lambda supports multiple .NET versions on both the x86_64 and Arm64 (Graviton2) architectures, and you are free to pick whichever architecture you want. Your code and deployment process do not change.

Because Lambda is a serverless service, you only pay for what you use. If your Lambda function needs to run only a few times a day, that is all you pay for. And because it scales to your needs, it can also start up a thousand instances simultaneously!

Time to Complete

60 minutes

Pricing

As mentioned above, you only pay for what you use. The amount you pay is calculated from the number of requests, how long your Lambda function runs (rounded up to the nearest millisecond), and the amount of memory you allocated to the function.

Keep in mind that the price calculation uses the amount of memory allocated, not the amount used during an invocation. It is therefore worth testing your functions to find the maximum amount of memory they use during an invocation. Keeping the allocated memory to the lowest necessary amount will help reduce the cost of using Lambda functions. See the AWS Lambda pricing page for more.
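To make the arithmetic concrete, here is a small C# sketch of a cost estimate. It is an illustration, not an official calculator. The price per GB-second and the per-request price (Lambda also charges a small fee per request) are parameters that you look up on the AWS Lambda pricing page, so no real prices are assumed here. The class and method names are hypothetical. The sketch also ignores the free tier and assumes every invocation takes the same time. For example, a 244.83 ms invocation of a function with 256 MB allocated is billed as 245 ms, which works out to 0.25 GB × 0.245 s = 0.06125 GB-seconds.

```csharp
using System;

// Hypothetical helper illustrating Lambda cost arithmetic.
// Prices are parameters, not real values: look them up on the AWS Lambda pricing page.
public static class LambdaCostEstimate
{
    // Duration is billed rounded up to the nearest millisecond.
    public static long BilledMilliseconds(double durationMs) =>
        (long)Math.Ceiling(durationMs);

    // Compute is measured in GB-seconds: *allocated* memory in GB x billed duration in seconds.
    public static double GbSeconds(double durationMs, int allocatedMemoryMb) =>
        (allocatedMemoryMb / 1024.0) * (BilledMilliseconds(durationMs) / 1000.0);

    // Total = compute charge for all invocations + per-request charge.
    // Assumes every invocation has the same duration and ignores the free tier.
    public static double EstimateCost(
        double durationMs,
        int allocatedMemoryMb,
        long invocations,
        double pricePerGbSecond,
        double pricePerMillionRequests)
    {
        double compute = GbSeconds(durationMs, allocatedMemoryMb) * invocations * pricePerGbSecond;
        double requests = invocations / 1_000_000.0 * pricePerMillionRequests;
        return compute + requests;
    }
}
```

Because GbSeconds is driven by the memory you allocate, lowering the memory setting is the cost lever described above.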

Note that if your Lambda function uses other services, such as S3 or Kinesis, you may incur charges for those services too.

Supported Versions of .NET

There are a variety of ways to run .NET binaries on the Lambda platform. The most common, and the one to consider first, is to use a managed runtime provided by AWS. It is the simplest way to get started, the most convenient, and offers the best performance. If you don't want to, or can't, use the managed runtime, you have two other choices: a custom runtime or a container image. Both options have their own advantages and disadvantages, which are discussed in more detail below.

Managed runtimes

The AWS Lambda service provides a variety of popular runtimes for you to run your code on. For .NET, AWS keeps the managed runtimes up to date and patched as necessary with the latest versions available from Microsoft. As a developer, you do not need to do anything to manage the runtime your code uses, other than specify the version you want in your familiar .csproj file.

At present, AWS only offers managed runtimes for Long Term Support (LTS) versions of .NET. As of this writing, those are .NET Core 3.1 and .NET 6. The .NET managed runtimes are available for both the x86_64 and Arm64 architectures and run on Amazon Linux 2. If you want to use a version of .NET other than the ones offered by AWS, you can build your own custom runtime or create a container image that fits your needs.

If you are interested in stepping outside the .NET world, it is good to know that the Lambda service also offers managed runtimes for other languages: Node.js, Python, Ruby, Java, and Go. For the full list of managed runtimes and supported language versions, see the Lambda documentation on available runtimes.

Custom runtimes

Custom runtimes are runtimes you build and bundle yourself. There are a few reasons you would do this. The most common is that you want to use a version of .NET that is not offered as a managed runtime by the Lambda service. Another, less common, reason is that you want precise control over the runtime's minor and patch versions.

Creating the custom runtime takes very little effort on your part. All you have to do is pass --self-contained true to the publish command.

You can pass it directly to dotnet publish, or set it in the aws-lambda-tools-defaults.json file with the following parameter:

"msbuild-parameters": "--self-contained true"

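Apart from a small amount of bootstrap code and the provided.al2 runtime setting, both of which the lambda.CustomRuntimeFunction template generates for you, that's all there is to it: a single flag when bundling the .NET Lambda function. The package to deploy will now contain your code, plus the required files from the .NET runtime you have chosen.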

It is now up to you to patch and update the runtime as you see fit. Updating the runtime requires you to redeploy the function, because the function code and the runtime are packaged together.

The deployed package is significantly larger than with the managed runtime because it contains all the necessary runtime files. This negatively affects cold start times (more on this later). To reduce the package size, consider the .NET trimming feature. To improve startup time, consider ReadyToRun compilation, which makes the package larger but reduces the JIT work done at startup. Please read the documentation for both features before using them.

You can create a custom runtime with any version of .NET that runs on Linux. A common use case is to deploy functions with the "current" or preview versions of .NET.
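When you bundle the runtime yourself, it can be useful to confirm which .NET version, operating system, and architecture your function actually ended up running on. The following C# sketch uses only the standard System.Runtime.InteropServices APIs. RuntimeReport is a hypothetical helper, not part of any AWS package. You could log its output from your handler, for example with context.Logger.LogInformation.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical helper that reports the runtime a function is actually running on.
// Handy with a self-contained (custom) runtime, where you choose the exact patch version.
public static class RuntimeReport
{
    public static string Describe() =>
        $"{RuntimeInformation.FrameworkDescription} " +   // for example ".NET 6.0.x"
        $"on {RuntimeInformation.OSDescription} " +        // the Linux distribution/kernel in Lambda
        $"({RuntimeInformation.ProcessArchitecture})";     // X64 or Arm64

    // The version of the runtime that is executing this code.
    public static Version RuntimeVersion() => Environment.Version;
}
```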

Custom runtimes are not limited to .NET. The community offers custom runtimes for a very wide variety of languages, and others have built their own to run languages like Erlang and COBOL.

Container images

Along with the managed runtime and custom runtime, the AWS Lambda service also lets you package your code in a container image and deploy this image to the Lambda service. This option suits teams that have invested time in building and deploying their code to containers, or those who need more control over the operating system and environment the code runs in. Images of up to 10 GB in size are supported.

AWS provides a variety of base images for .NET and .NET Core at https://gallery.ecr.aws/lambda/dotnet. These will get you started very quickly.

Another option is to create a custom image specifically for your function. This is a more advanced use case and requires that you edit the Dockerfile to suit your needs. This approach is not covered in this course, but if you go down that path, take a look at the Dockerfiles in this repository: https://github.com/aws/aws-lambda-dotnet/tree/master/LambdaRuntimeDockerfiles/Images.

Note that updating your Lambda function will be slowest with containers because of the size of the upload. Containers also have the worst cold starts of the three options. More on this later in the module.

Choosing the runtime for you

If you want the best startup performance, ease of deployment, and ease of getting started, and are happy to stick with LTS versions of .NET, go with the managed .NET runtimes.

Container images are a great option that allows you to use images AWS has created for a variety of .NET versions. Or you can choose your own container image, and tweak the operating system and environment the code runs in. Container images are also suitable for organizations that are already using containers extensively.

If you have very specific requirements around the versions of .NET and its runtime libraries, and you want to control these yourself, consider using a custom runtime. However, keep in mind that it is up to you to maintain and patch the runtime. If Microsoft releases a security update, you need to be aware of it, and update your custom runtime appropriately. From a performance point of view, the custom runtime is the slowest of the three to start up.

Once your Lambda function has started, the performance of the managed runtime, container image, and custom runtime will be very similar.

AWS SDK for .NET

If you have been developing .NET applications that use AWS services, you probably have used the AWS SDK for .NET. The SDK makes it easy for a .NET developer to call AWS services in a consistent and familiar way. The SDK is kept up to date as services are released, or as they are updated. The SDK is available for download from NuGet.

As with many things related to AWS, the SDK is broken into smaller packages, each dealing with a single service.

For example, if you want to access S3 buckets from your .NET application, you would use the AWSSDK.S3 NuGet package. Or if you wanted to access DynamoDB from your .NET application, you would use the AWSSDK.DynamoDBv2 NuGet package.

You only add the NuGet packages you need. Because the SDK is broken into smaller packages, your own deployment package stays smaller.

If your Lambda function handler needs to receive events from other AWS services, look for the event-specific NuGet packages. They contain the relevant types to handle the events. The packages follow the naming pattern Amazon.Lambda.[SERVICE]Events.

For example, if your Lambda function is triggered by:

incoming S3 events, use the Amazon.Lambda.S3Events package

incoming Kinesis events, use the Amazon.Lambda.KinesisEvents package

incoming SNS notifications, use the Amazon.Lambda.SNSEvents package

incoming SQS messages, use the Amazon.Lambda.SQSEvents package
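To give you a feel for what these event packages save you from writing, here is a heavily trimmed, hand-written stand-in for an SQS event model. It is a sketch only. MinimalSqsEvent, MinimalSqsMessage, and SqsEventParser are hypothetical names. The JSON field names (Records, messageId, body) follow the shape of the SQS event payload that Lambda delivers. In a real function you would use the SQSEvent type from the SQS events package, which models many more fields.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Text.Json.Serialization;

// Hypothetical, heavily trimmed model of an SQS event payload.
// Real code should use the SQSEvent type from the SQS events NuGet package instead.
public class MinimalSqsEvent
{
    [JsonPropertyName("Records")]
    public List<MinimalSqsMessage> Records { get; set; } = new();
}

public class MinimalSqsMessage
{
    [JsonPropertyName("messageId")]
    public string MessageId { get; set; } = "";

    [JsonPropertyName("body")]
    public string Body { get; set; } = "";
}

public static class SqsEventParser
{
    // Fields that are not modeled (receiptHandle, eventSource, ...) are simply ignored.
    public static MinimalSqsEvent Parse(string json) =>
        JsonSerializer.Deserialize<MinimalSqsEvent>(json) ?? new MinimalSqsEvent();
}
```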

Using the SDK to interact with AWS services is very easy. Add a reference to the NuGet package in your project and then call the service as you would with any other .NET library you might use.

Using the SDK from a Lambda function does not change how you use it.

Keep in mind that you don't necessarily need to add any AWS SDK NuGet packages to your project. For example, if your Lambda function accesses an Amazon RDS for SQL Server database, you can simply use Entity Framework to access the database. No additional AWS-specific libraries are required. But if you want to retrieve a username and password for the database from Secrets Manager, you will need to add the AWSSDK.SecretsManager NuGet package.

A note on permissions

As a general rule, you should use the lowest level of permissions needed to perform a task. Throughout this course, you will be encouraged and shown how to do this.

However, for simplicity in teaching this course, we suggest you use an AWS user with the AdministratorAccess policy attached. This policy allows you to create the roles necessary when deploying Lambda functions. When not working on the course, you should remove this policy from your AWS user.

A Hello World Style .NET Lambda function

As you saw in an earlier module, it is very easy to create, deploy, and invoke a .NET Lambda function. In this section you will do the same, but more slowly, and I will explain what is happening at each step. The generated code and configuration files will be discussed.

Creating the function

To follow along, you need the required tooling installed. See the earlier module for more detail on how to do this.

If you don't want to jump there right now, here is a quick reminder.

Install the .NET Lambda function templates:

dotnet new -i Amazon.Lambda.Templates

Install the .NET tooling for deploying and managing Lambda functions:

dotnet tool install -g Amazon.Lambda.Tools

Now that you have the templates installed, you can create a new function.

From the command line, run:

dotnet new lambda.EmptyFunction -n HelloEmptyFunction

This creates a new directory called HelloEmptyFunction. Inside it are two more directories, src and test. As the names suggest, the src directory contains the code for the function, and the test directory contains the unit tests for the function. When you navigate into these directories, you will find they each contain another directory. Inside these subdirectories are the code files for the function, and the unit test files.

```
HelloEmptyFunction
├───src
│   └───HelloEmptyFunction
│           aws-lambda-tools-defaults.json  // The default configuration file
│           Function.cs                     // The code for the function
│           HelloEmptyFunction.csproj       // Standard C# project file
│           Readme.md                       // A readme file
│
└───test
    └───HelloEmptyFunction.Tests
            FunctionTest.cs                  // The unit tests for the function
            HelloEmptyFunction.Tests.csproj  // Standard C# project file
```

Let's take a look at the Function.cs file first.

```csharp
using Amazon.Lambda.Core;

// Assembly attribute to enable the Lambda function's JSON input to be converted into a .NET class.
[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

namespace HelloEmptyFunction;

public class Function
{

    /// <summary>
    /// A simple function that takes a string and does a ToUpper
    /// </summary>
    /// <param name="input"></param>
    /// <param name="context"></param>
    /// <returns></returns>
    public string FunctionHandler(string input, ILambdaContext context)
    {
        return input.ToUpper();
    }
}
```

Some notes on the lines that need a little explanation:

The LambdaSerializer assembly attribute enables conversion of the JSON input into a .NET class.

The FunctionHandler signature declares a string parameter, so the JSON input to the function will be converted to a string.

FunctionHandler also receives an ILambdaContext object. You can use it for logging, determining the function's name, finding out how much time the invocation has left, and other information.

As you can see, the code is very simple, and should be familiar to anyone who has worked with C#.

Although the FunctionHandler method here is synchronous, Lambda functions can be asynchronous just like any other .NET method. All you need to do is change the FunctionHandler signature to:

public async Task<string> FunctionHandler(..)
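Here is a minimal sketch of that asynchronous form. The Task.Delay call stands in for real asynchronous work, such as an awaited AWS SDK call. The ILambdaContext parameter is left out so the example compiles without the Amazon.Lambda.Core package. The context parameter is optional, as described later in this module. The AsyncFunction class name is hypothetical.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical asynchronous handler. ILambdaContext is omitted so the sketch has no package dependency.
public class AsyncFunction
{
    public async Task<string> FunctionHandler(string input)
    {
        // Stand-in for real asynchronous work, such as an awaited AWS SDK call.
        await Task.Delay(10);
        return input.ToUpper();
    }
}
```

Lambda awaits the returned Task for you, so nothing else about deployment changes.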

Let's take a look at the aws-lambda-tools-defaults.json file:

```json
{
  "Information": [
    "This file provides default values for the deployment wizard inside Visual Studio and the AWS Lambda commands added to the .NET Core CLI.",
    "To learn more about the Lambda commands with the .NET Core CLI execute the following command at the command line in the project root directory.",
    "dotnet lambda help",
    "All the command line options for the Lambda command can be specified in this file."
  ],
  "profile": "",
  "region": "",
  "configuration": "Release",
  "function-runtime": "dotnet6",
  "function-memory-size": 256,
  "function-timeout": 30,
  "function-handler": "HelloEmptyFunction::HelloEmptyFunction.Function::FunctionHandler"
}
```

The "configuration" setting specifies that the function should be built in the Release configuration.

"function-runtime" tells the Lambda service which runtime to use.

"function-memory-size" specifies the amount of memory to allocate to the function, in this case 256 MB.

"function-timeout" specifies the timeout for the function, in this case 30 seconds. The maximum allowed timeout is 15 minutes.

"function-handler" specifies the function handler. This is the method the Lambda service invokes when this function is called.

The function-handler is made up of three parts:

"AssemblyName::Namespace.ClassName::MethodName"

A little later, you will build and deploy this function to the AWS Lambda service using the configuration in this file.
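To see how the three parts of the function-handler setting map onto your code, here is a small C# sketch that splits a handler string into its parts. It only illustrates the format. It is not how the Lambda runtime parses the setting internally, and HandlerString and HandlerParts are hypothetical names.

```csharp
using System;

// Hypothetical helper that splits a .NET function-handler string into its parts.
// It only illustrates the format; it is not the Lambda runtime's own parser.
public record HandlerParts(string AssemblyName, string TypeName, string MethodName);

public static class HandlerString
{
    public static HandlerParts Parse(string handler)
    {
        // Expected format: "AssemblyName::Namespace.ClassName::MethodName"
        string[] parts = handler.Split("::");
        if (parts.Length != 3 || Array.Exists(parts, string.IsNullOrWhiteSpace))
        {
            throw new FormatException(
                $"Expected AssemblyName::Namespace.ClassName::MethodName, got '{handler}'.");
        }
        return new HandlerParts(parts[0], parts[1], parts[2]);
    }
}
```

For the handler in this project, Parse returns an assembly name of HelloEmptyFunction, a type name of HelloEmptyFunction.Function, and a method name of FunctionHandler.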

But first, let's take a look at the test project and its FunctionTest.cs file:

```csharp
using Xunit;
using Amazon.Lambda.Core;
using Amazon.Lambda.TestUtilities;

namespace HelloEmptyFunction.Tests;

public class FunctionTest
{
    [Fact]
    public void TestToUpperFunction()
    {

        // Invoke the lambda function and confirm the string was upper cased.
        var function = new Function();
        var context = new TestLambdaContext();
        var upperCase = function.FunctionHandler("hello world", context);

        Assert.Equal("HELLO WORLD", upperCase);
    }
}
```

The code here is relatively straightforward and uses the xUnit testing framework. You can test your Lambda functions just like you would test any other method.

The first statement in the test creates a new instance of the Function class.

The second creates a new instance of the TestLambdaContext class, which is passed to the Lambda function on the next line.

The third invokes the FunctionHandler method on the function, passing in the string "hello world" and the context, and stores the response in the upperCase variable.

Finally, Assert.Equal checks that the upperCase variable is equal to "HELLO WORLD".

You can run this test from the command line, or inside your favorite IDE. There are other kinds of tests that can be performed on Lambda functions, if you are interested in learning more about this, please see a later module where you will learn about a variety of ways to test and debug your Lambda functions.

Deploying the function

Now that you have a Lambda function, and may have run the unit test, it's time to deploy the Lambda function to the AWS Lambda service.

From the command line, navigate to the directory containing the HelloEmptyFunction.csproj file and run the following command:

dotnet lambda deploy-function HelloEmptyFunction

You will see output that includes the following (I have trimmed it for clarity):

```
... dotnet publish --output "C:\dev\Lambda_Course_Samples\HelloEmptyFunction\src\HelloEmptyFunction\bin\Release\net6.0\publish" --configuration "Release" --framework "net6.0" /p:GenerateRuntimeConfigurationFiles=true --runtime linux-x64 --self-contained false
Zipping publish folder C:\dev\Lambda_Course_Samples\HelloEmptyFunction\src\HelloEmptyFunction\bin\Release\net6.0\publish to C:\dev\Lambda_Course_Samples\HelloEmptyFunction\src\HelloEmptyFunction\bin\Release\net6.0\HelloEmptyFunction.zip
... zipping: Amazon.Lambda.Core.dll
... zipping: Amazon.Lambda.Serialization.SystemTextJson.dll
... zipping: HelloEmptyFunction.deps.json
... zipping: HelloEmptyFunction.dll
... zipping: HelloEmptyFunction.pdb
... zipping: HelloEmptyFunction.runtimeconfig.json
Created publish archive (C:\dev\Lambda_Course_Samples\HelloEmptyFunction\src\HelloEmptyFunction\bin\Release\net6.0\HelloEmptyFunction.zip).
```

The first line compiles and publishes the project. Note that the runtime is linux-x64 and the self-contained flag is false. This means the function will use the managed .NET runtime on the Lambda service, as opposed to a custom runtime.

The second line zips the published project into a zip file.

The "... zipping:" lines show the files being added to the zip file.

The last line confirms that the zip file has been created.

Next you will be asked "Select IAM Role that to provide AWS credentials to your code:". You may be presented with a list of roles you created previously, but at the bottom of the list will be the option "*** Create new IAM Role ***". Type in the number beside that option.

You will be asked to "Enter name of the new IAM Role:". Type in "HelloEmptyFunctionRole".

You will then be asked to "Select IAM Policy to attach to the new role and grant permissions", and a list of policies will be presented. It will look something like the list below, but yours may be longer:

```
1) AWSLambdaReplicator (Grants Lambda Replicator necessary permissions to replicate functions ...)
2) AWSLambdaDynamoDBExecutionRole (Provides list and read access to DynamoDB streams and writ ...)
3) AWSLambdaExecute (Provides Put, Get access to S3 and full access to CloudWatch Logs.)
4) AWSLambdaSQSQueueExecutionRole (Provides receive message, delete message, and read attribu ...)
5) AWSLambdaKinesisExecutionRole (Provides list and read access to Kinesis streams and write ...)
6) AWSLambdaBasicExecutionRole (Provides write permissions to CloudWatch Logs.)
7) AWSLambdaInvocation-DynamoDB (Provides read access to DynamoDB Streams.)
8) AWSLambdaVPCAccessExecutionRole (Provides minimum permissions for a Lambda function to exe ...)
9) AWSLambdaRole (Default policy for AWS Lambda service role.)
10) AWSLambdaENIManagementAccess (Provides minimum permissions for a Lambda function to manage ...)
11) AWSLambdaMSKExecutionRole (Provides permissions required to access MSK Cluster within a VP ...)
12) AWSLambda_ReadOnlyAccess (Grants read-only access to AWS Lambda service, AWS Lambda consol ...)
13) AWSLambda_FullAccess (Grants full access to AWS Lambda service, AWS Lambda console feature ...)
```

Select "AWSLambdaBasicExecutionRole"; it is number 6 on my list.

After a moment, you will see:

```
Waiting for new IAM Role to propagate to AWS regions
............... Done
New Lambda function created
```

Now you can invoke the function.

Invoking the function

Command line

You can use the dotnet lambda tooling to invoke the function from the shell of your choice:

dotnet lambda invoke-function HelloEmptyFunction --payload "Invoking a Lambda function"

For simple Lambda functions like this one, there is no JSON to escape. When you want to pass in JSON that needs to be deserialized, however, how you escape the payload varies depending on the shell you are using.

You will see output that looks like the following:

```
Amazon Lambda Tools for .NET Core applications (5.4.1)
Project Home: https://github.com/aws/aws-extensions-for-dotnet-cli, https://github.com/aws/aws-lambda-dotnet
Payload:
"INVOKING A LAMBDA FUNCTION"
Log Tail:
START RequestId: 3d43c8be-8eca-48a1-9e51-96d9c84947b2 Version: $LATEST
END RequestId: 3d43c8be-8eca-48a1-9e51-96d9c84947b2
REPORT RequestId: 3d43c8be-8eca-48a1-9e51-96d9c84947b2  Duration: 244.83 ms  Billed Duration: 245 ms  Memory Size: 256 MB  Max Memory Used: 68 MB  Init Duration: 314.32 ms
```

The output "Payload:" is the response from the Lambda function.

Note how the log tail contains useful information about the Lambda function invocation, like how long it ran for and how much memory was used. For a simple function like this, 244.83 ms might seem like a lot. However, this was the first time the function was invoked, so more work needed to be performed, and subsequent invocations would be quicker. See the section on cold starts for more information.
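The REPORT line is also where billing shows up. Duration is 244.83 ms but Billed Duration is 245 ms, because duration is rounded up to the nearest millisecond. Max Memory Used (68 MB) is well below the 256 MB allocated. If you want to track these numbers across many invocations, for example to right-size the memory setting, a small parser is enough. This C# sketch assumes the field layout shown in the sample output above. ReportLine and InvocationReport are hypothetical names, not part of the Lambda tooling.

```csharp
using System;
using System.Globalization;
using System.Text.RegularExpressions;

// Hypothetical record holding the numbers from one REPORT log line.
public record InvocationReport(double DurationMs, long BilledMs, int MemorySizeMb, int MaxMemoryUsedMb);

public static class ReportLine
{
    // Assumes the "Duration ... Billed Duration ... Memory Size ... Max Memory Used" layout
    // seen in the sample output; \s+ tolerates spaces, tabs, or line breaks between fields.
    private static readonly Regex Pattern = new(
        @"Duration: (?<dur>[\d.]+) ms\s+Billed Duration: (?<billed>\d+) ms\s+" +
        @"Memory Size: (?<size>\d+) MB\s+Max Memory Used: (?<used>\d+) MB");

    // Returns null when the line is not a REPORT line in this format.
    public static InvocationReport? Parse(string line)
    {
        Match m = Pattern.Match(line);
        if (!m.Success) return null;
        return new InvocationReport(
            double.Parse(m.Groups["dur"].Value, CultureInfo.InvariantCulture),
            long.Parse(m.Groups["billed"].Value, CultureInfo.InvariantCulture),
            int.Parse(m.Groups["size"].Value, CultureInfo.InvariantCulture),
            int.Parse(m.Groups["used"].Value, CultureInfo.InvariantCulture));
    }
}
```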

Let's make a small change to the code to add some of our own log statements.

Add the following above the return statement in the FunctionHandler method:

context.Logger.LogInformation("Input: " + input);

Deploy again using:

dotnet lambda deploy-function HelloEmptyFunction

This time there will be no questions about role or permissions.

After the function is deployed, you can invoke it again.

dotnet lambda invoke-function HelloEmptyFunction --payload "Invoking a Lambda function"

This time, the output will contain an extra log statement.

```
Payload:
"INVOKING A LAMBDA FUNCTION"
Log Tail:
START RequestId: 7f77a371-c183-494f-bb44-883fe0c57471 Version: $LATEST
2022-06-03T15:36:20.238Z 7f77a371-c183-494f-bb44-883fe0c57471 info Input: Invoking a Lambda function
END RequestId: 7f77a371-c183-494f-bb44-883fe0c57471
REPORT RequestId: 7f77a371-c183-494f-bb44-883fe0c57471  Duration: 457.22 ms  Billed Duration: 458 ms  Memory Size: 256 MB  Max Memory Used: 62 MB  Init Duration: 262.12 ms
```

There, on the line ending "info Input: Invoking a Lambda function", is your log statement. Lambda function logs are also written to CloudWatch Logs (provided you gave the Lambda function permission to do so).

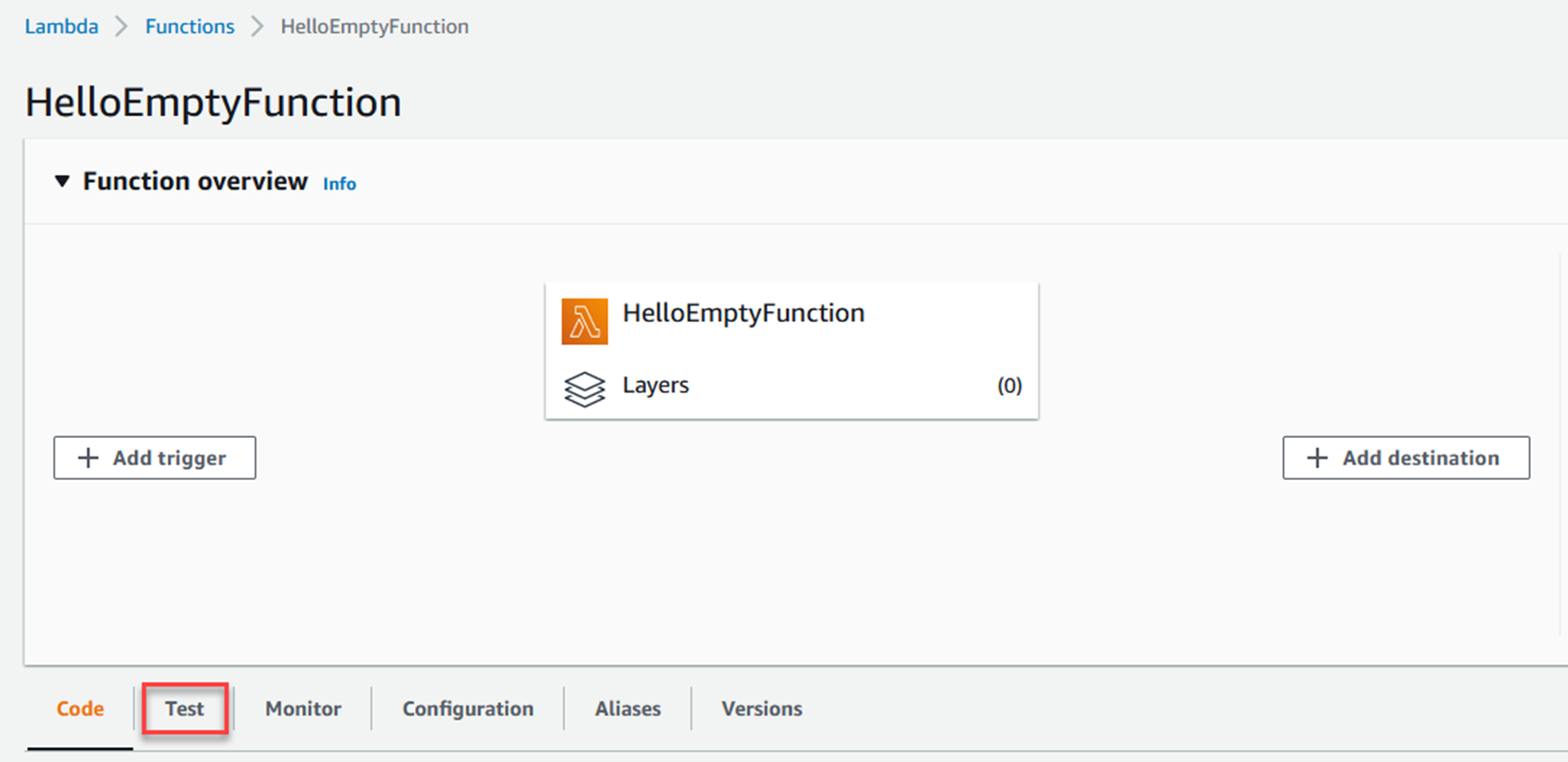

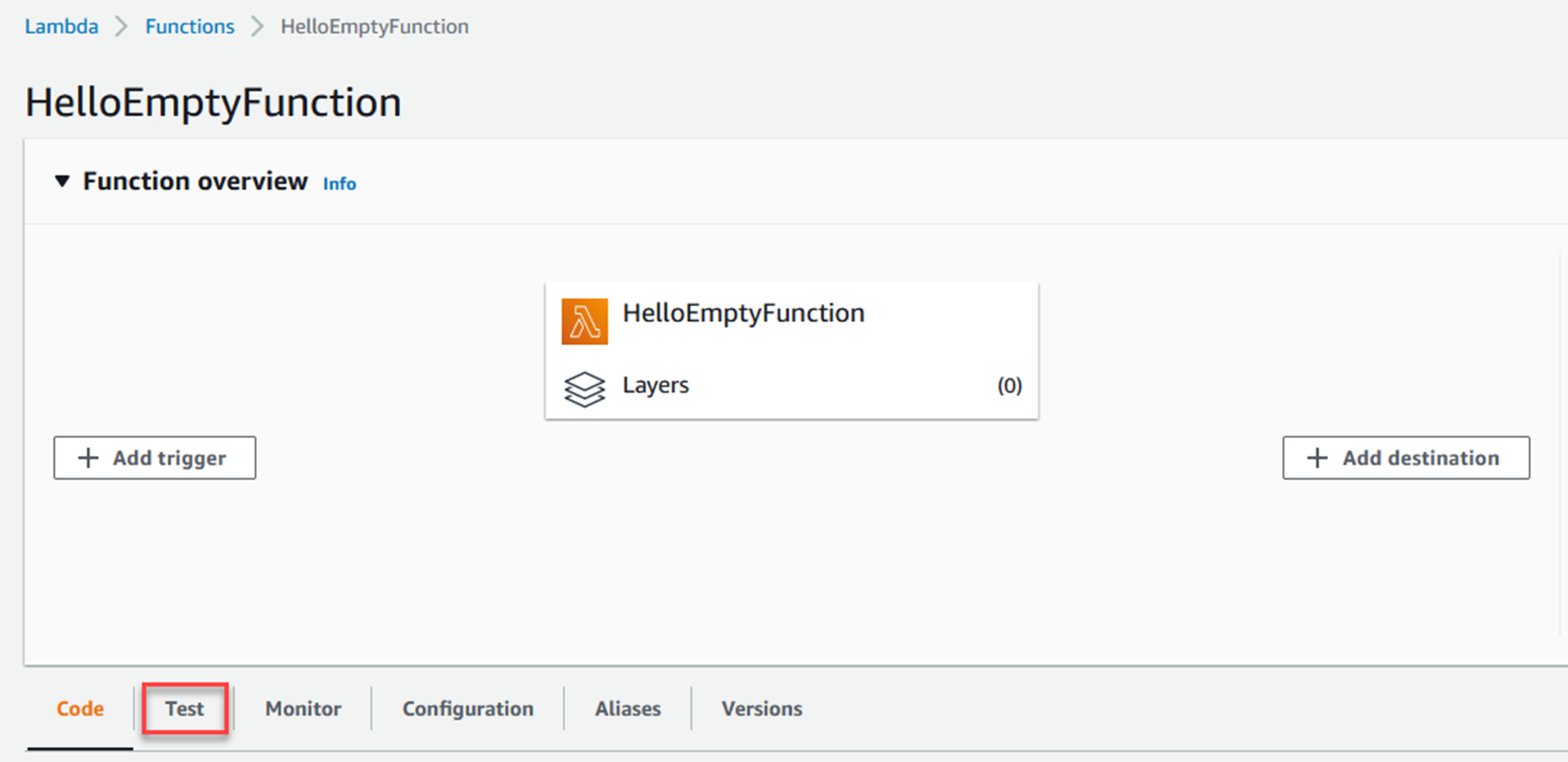

AWS Console

Another way to invoke the function is from the AWS Console.

Log in to the AWS Console and select the Lambda function you want to invoke.

Click the Test tab.

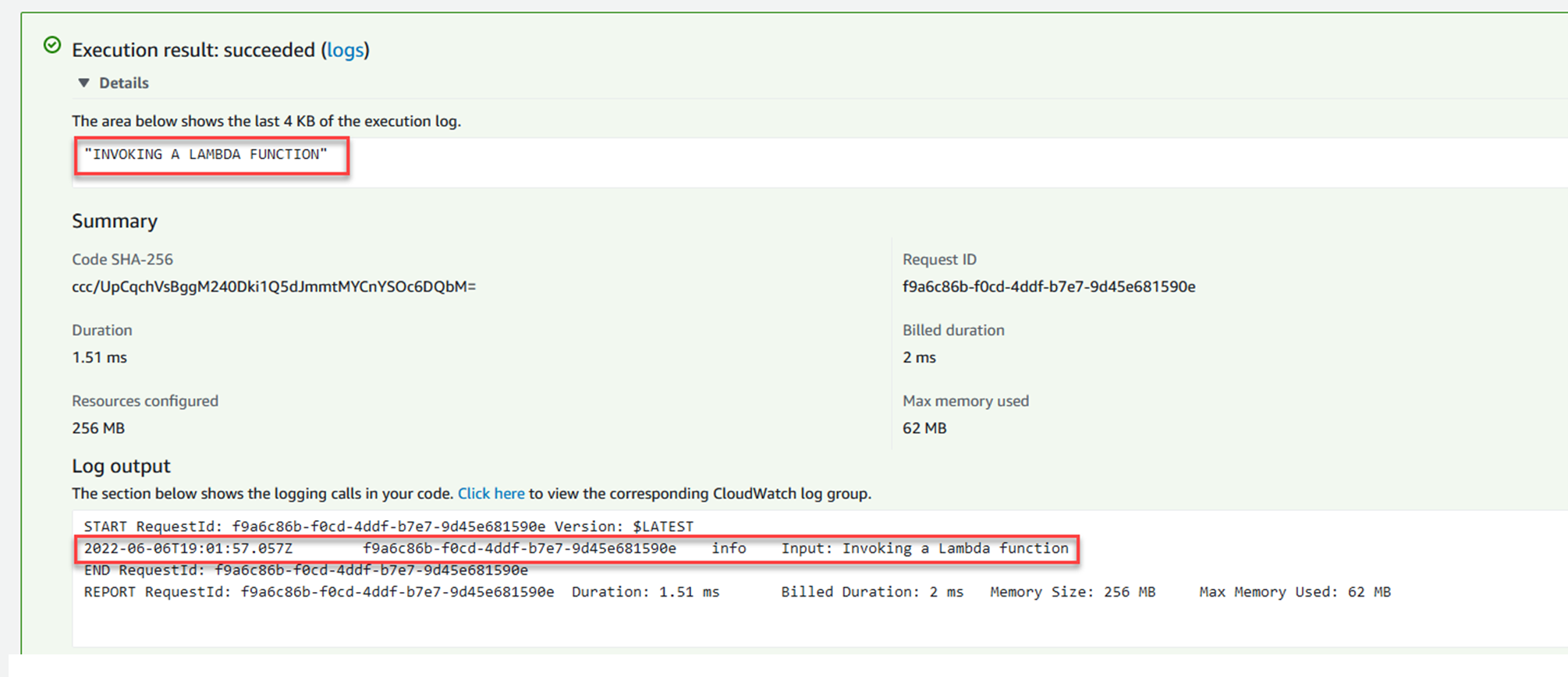

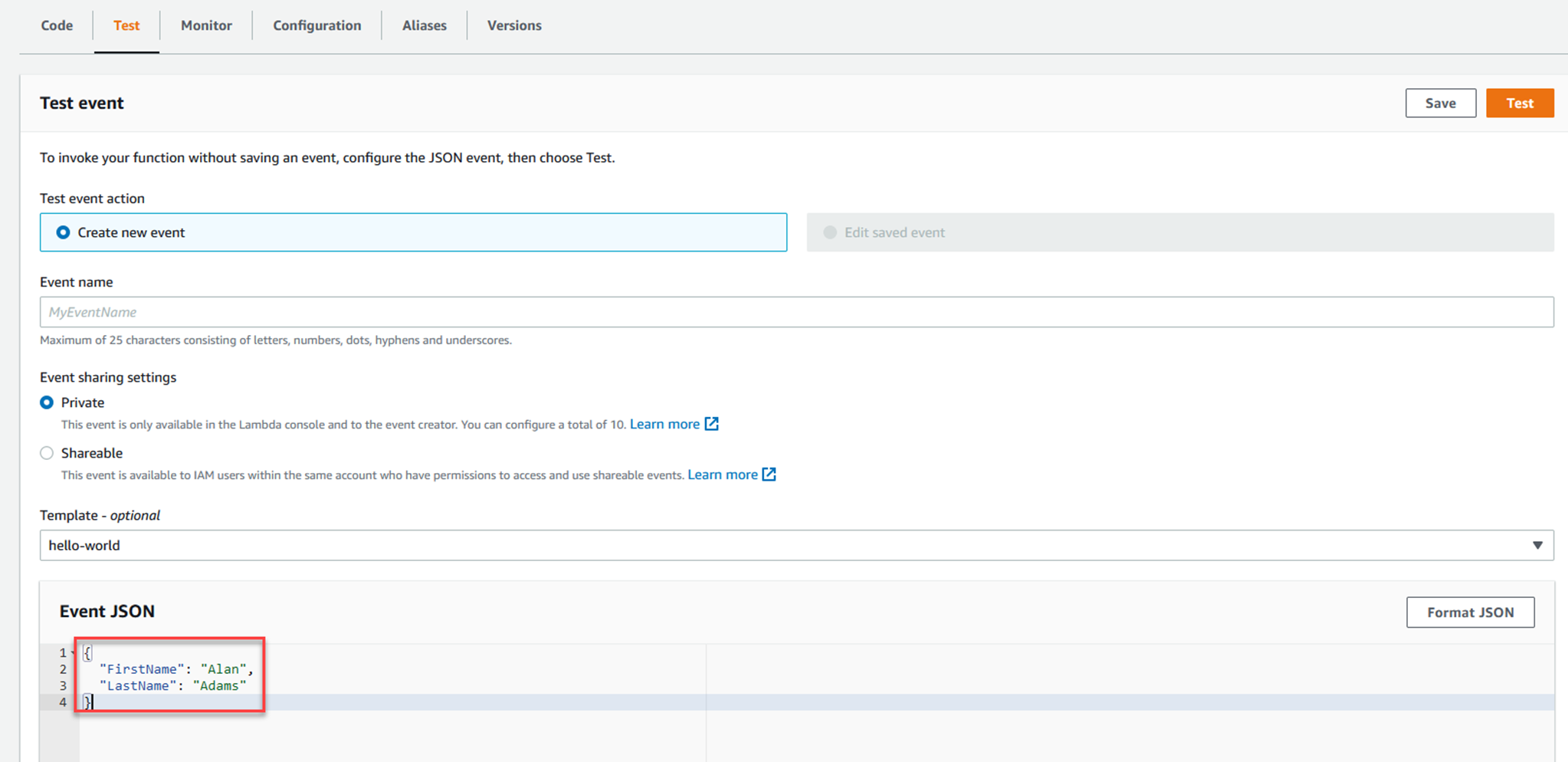

Scroll down to the Event JSON section and put in "Invoking a Lambda function", including the quotes.

Then click the Test button.

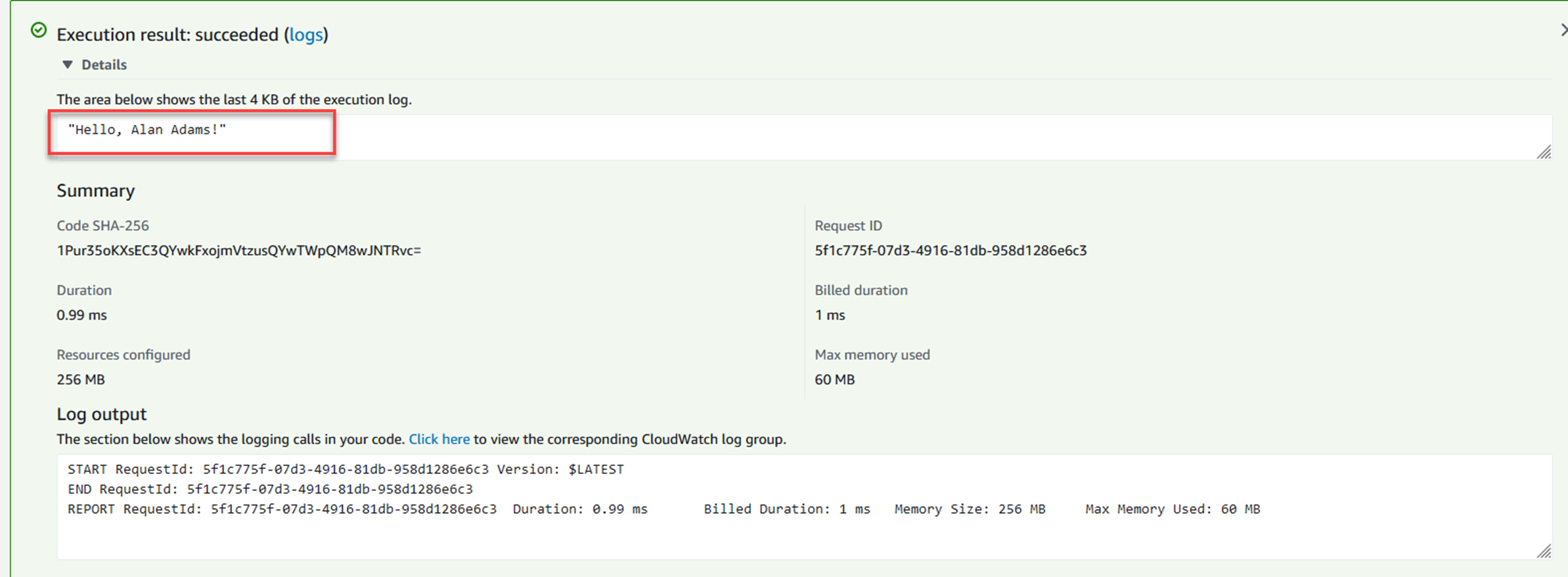

You will see output similar to the following.

Note the log output is visible too.

A .NET Lambda function that takes a JSON payload

The previous example was a simple function that took a string and returned a string. It is a good example to get started with.

But you will probably want to send JSON payloads to Lambda functions. In fact, if another AWS service invokes your Lambda function, it will send a JSON payload. Those JSON payloads are often quite complex, but models for them are available from NuGet. For example, if you are handling Kinesis events with your Lambda function, the Amazon.Lambda.KinesisEvents package has a KinesisEvent model. The same goes for S3 events, SQS events, and so on.

Rather than using one of those models right now, you are going to invoke a new Lambda function with a payload that represents a person.

```json
{
  "FirstName": "Alan",
  "LastName": "Adams"
}
```

The appropriate C# class to deserialize the JSON payload is:

```csharp
public class Person
{
    public string FirstName { get; init; }
    public string LastName { get; init; }
}
```

Create the function

As before, create a new function using the following command:

dotnet new lambda.EmptyFunction -n HelloPersonFunction

Alter the function

Change the code of the FunctionHandler method to look like this:

```csharp
public string FunctionHandler(Person input, ILambdaContext context)
{
    return $"Hello, {input.FirstName} {input.LastName}";
}
```

Then add the Person class to the same file, outside the Function class:

```csharp
public class Person
{
    public string FirstName { get; init; }
    public string LastName { get; init; }
}
```

Deploy the function

This is the same command you used a few minutes ago:

dotnet lambda deploy-function HelloPersonFunction

Invoke the function

Now your Lambda function can take a JSON payload, but how you invoke it depends on the shell you are using because of the way JSON is escaped in each shell.

If you are using PowerShell, use:

dotnet lambda invoke-function HelloPersonFunction --payload '{ \"FirstName\": \"Alan\", \"LastName\": \"Adams\" }'

If you are using bash or the Windows command prompt, use:

dotnet lambda invoke-function HelloPersonFunction --payload "{ \"FirstName\": \"Alan\", \"LastName\": \"Adams\" }"

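You can also invoke the function from the AWS Console. Log in to the AWS Console and select the Lambda function you want to invoke. Click the Test tab, enter the following in the Event JSON section, and then click the Test button:

```json
{
  "FirstName": "Alan",
  "LastName": "Adams"
}
```

You will see output similar to the following.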

In the next section, you will see how to deploy a Lambda function that responds to HTTP requests.

Creating and running a Web API application as a Lambda function

You can also invoke a Lambda function via an HTTP request, and that is a very common use case.

The AWS tooling for .NET offers a few templates that you can use to create a simple Lambda function hosting a Web API application.

The most familiar will probably be the serverless.AspNetCoreWebAPI template, this creates a simple Web API application that can be invoked via an HTTP request. The project template includes a CloudFormation configuration template that creates an API Gateway that forwards HTTP requests to the Lambda function.

When deployed to AWS Lambda, the API Gateway translates the HTTP Request to an API Gateway event and sends this JSON to the Lambda function. No Kestrel server is running in the Lambda function when it is deployed to the Lambda service.

But when you run it locally, a Kestrel web server is started. This makes it very easy to write your code and test it in the same familiar way as any Web API application. You can even do normal line-by-line debugging. You get the best of both worlds!

Create the function

dotnet new serverless.AspNetCoreWebAPI -n AspNetCoreWebAPI

```
├───src
│   └───AspNetCoreWebAPI
│       │   appsettings.Development.json
│       │   appsettings.json
│       │   AspNetCoreWebAPI.csproj
│       │   aws-lambda-tools-defaults.json  // basic Lambda function config, and points to serverless.template file for deployment
│       │   LambdaEntryPoint.cs             // Contains the function handler method, this handles the incoming JSON payload
│       │   LocalEntryPoint.cs              // Equivalent to Program.cs when running locally, starts Kestrel (only locally)
│       │   Readme.md
│       │   serverless.template             // CloudFormation template for deployment
│       │   Startup.cs                      // Familiar Startup.cs, can use dependency injection, read config, etc.
│       │
│       └───Controllers
│               ValuesController.cs         // Familiar API controller
│
└───test
    └───AspNetCoreWebAPI.Tests
        │   appsettings.json
        │   AspNetCoreWebAPI.Tests.csproj
        │   ValuesControllerTests.cs        // Unit test for ValuesController
        │
        └───SampleRequests
                ValuesController-Get.json   // JSON representing an APIGatewayProxyRequest, used by the unit test
```

Deploy the function

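Before attempting to deploy the serverless function, you need an S3 bucket. The deployment tooling uses it to store the packaged function and the CloudFormation template.

You can use an existing S3 bucket, or if you don't have one, use the instructions below.

If you are using the us-east-1 region, run:

aws s3api create-bucket --bucket your-unique-bucket-name1234

If you are using any other region, you must also specify it as the location constraint:

aws s3api create-bucket --bucket your-unique-bucket-name1234 --create-bucket-configuration LocationConstraint=REGION

For example, I created a bucket named lambda-course-2022:

aws s3api create-bucket --bucket lambda-course-2022

Then, from the directory containing the AspNetCoreWebAPI.csproj file, run:

dotnet lambda deploy-serverless

You will be asked for a stack name:

Enter CloudFormation Stack Name: (CloudFormation stack name for an AWS Serverless application)

I used AspNetCoreWebAPI, which is the stack name you will see in the output below.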

You will then be asked for the S3 bucket name. Use the name of the bucket you created earlier, or an existing bucket you want to use for this purpose.

After you enter that, the build and deployment process begins.

This will take longer than the examples that use lambda.* project templates because there is more infrastructure to create and wire up.

The output will be broken into two distinct sections.

The top section will be similar to what you saw when deploying functions earlier, a publish and zip of the project, but this time the artifact is uploaded to S3.

```
..snip
... zipping: AspNetCoreWebAPI.runtimeconfig.json
... zipping: aws-lambda-tools-defaults.json
Created publish archive (C:\Users\someuser\AppData\Local\Temp\AspNetCoreFunction-CodeUri-Or-ImageUri-637907144179228995.zip).
Lambda project successfully packaged: C:\Users\someuser\AppData\Local\Temp\AspNetCoreFunction-CodeUri-Or-ImageUri-637907144179228995.zip
Uploading to S3. (Bucket: lambda-course-2022 Key: AspNetCoreWebAPI/AspNetCoreFunction-CodeUri-Or-ImageUri-637907144179228995-637907144208759417.zip)
... Progress: 100%
Uploading to S3. (Bucket: lambda-course-2022 Key: AspNetCoreWebAPI/AspNetCoreWebAPI-serverless-637907144211067892.template)
... Progress: 100%
Found existing stack: False
CloudFormation change set created
... Waiting for change set to be reviewed
Created CloudFormation stack AspNetCoreWebAPI

Timestamp            Logical Resource Id                            Status
-------------------- ---------------------------------------------- ----------------------------------------
6/10/2022 09:53 AM   AspNetCoreWebAPI                               CREATE_IN_PROGRESS
6/10/2022 09:53 AM   AspNetCoreFunctionRole                         CREATE_IN_PROGRESS
6/10/2022 09:53 AM   AspNetCoreFunctionRole                         CREATE_IN_PROGRESS
6/10/2022 09:54 AM   AspNetCoreFunctionRole                         CREATE_COMPLETE
6/10/2022 09:54 AM   AspNetCoreFunction                             CREATE_IN_PROGRESS
6/10/2022 09:54 AM   AspNetCoreFunction                             CREATE_IN_PROGRESS
6/10/2022 09:54 AM   AspNetCoreFunction                             CREATE_COMPLETE
6/10/2022 09:54 AM   ServerlessRestApi                              CREATE_IN_PROGRESS
6/10/2022 09:54 AM   ServerlessRestApi                              CREATE_IN_PROGRESS
6/10/2022 09:54 AM   ServerlessRestApi                              CREATE_COMPLETE
6/10/2022 09:54 AM   ServerlessRestApiDeploymentcfb7a37fc3          CREATE_IN_PROGRESS
6/10/2022 09:54 AM   AspNetCoreFunctionProxyResourcePermissionProd  CREATE_IN_PROGRESS
6/10/2022 09:54 AM   AspNetCoreFunctionRootResourcePermissionProd   CREATE_IN_PROGRESS
6/10/2022 09:54 AM   AspNetCoreFunctionProxyResourcePermissionProd  CREATE_IN_PROGRESS
6/10/2022 09:54 AM   AspNetCoreFunctionRootResourcePermissionProd   CREATE_IN_PROGRESS
6/10/2022 09:54 AM   ServerlessRestApiDeploymentcfb7a37fc3          CREATE_IN_PROGRESS
6/10/2022 09:54 AM   ServerlessRestApiDeploymentcfb7a37fc3          CREATE_COMPLETE
6/10/2022 09:54 AM   ServerlessRestApiProdStage                     CREATE_IN_PROGRESS
6/10/2022 09:54 AM   ServerlessRestApiProdStage                     CREATE_IN_PROGRESS
6/10/2022 09:54 AM   ServerlessRestApiProdStage                     CREATE_COMPLETE
6/10/2022 09:54 AM   AspNetCoreFunctionProxyResourcePermissionProd  CREATE_COMPLETE
6/10/2022 09:54 AM   AspNetCoreFunctionRootResourcePermissionProd   CREATE_COMPLETE
6/10/2022 09:54 AM   AspNetCoreWebAPI                               CREATE_COMPLETE
Stack finished updating with status: CREATE_COMPLETE

Output Name                    Value
------------------------------ --------------------------------------------------
ApiURL                         https://xxxxxxxxx.execute-api.us-east-1.amazonaws.com/Prod/
```

Right at the bottom is the public URL you can use to invoke the API.

Invoking the function

Try opening https://xxxxxxxx.execute-api.us-east-1.amazonaws.com/Prod/api/values. This invokes the values controller's GET method, just as it would in a normal Web API application.

Note that when you use API Gateway, the gateway imposes its own timeout of 29 seconds. If your Lambda function runs for longer than this, you will not receive a response.

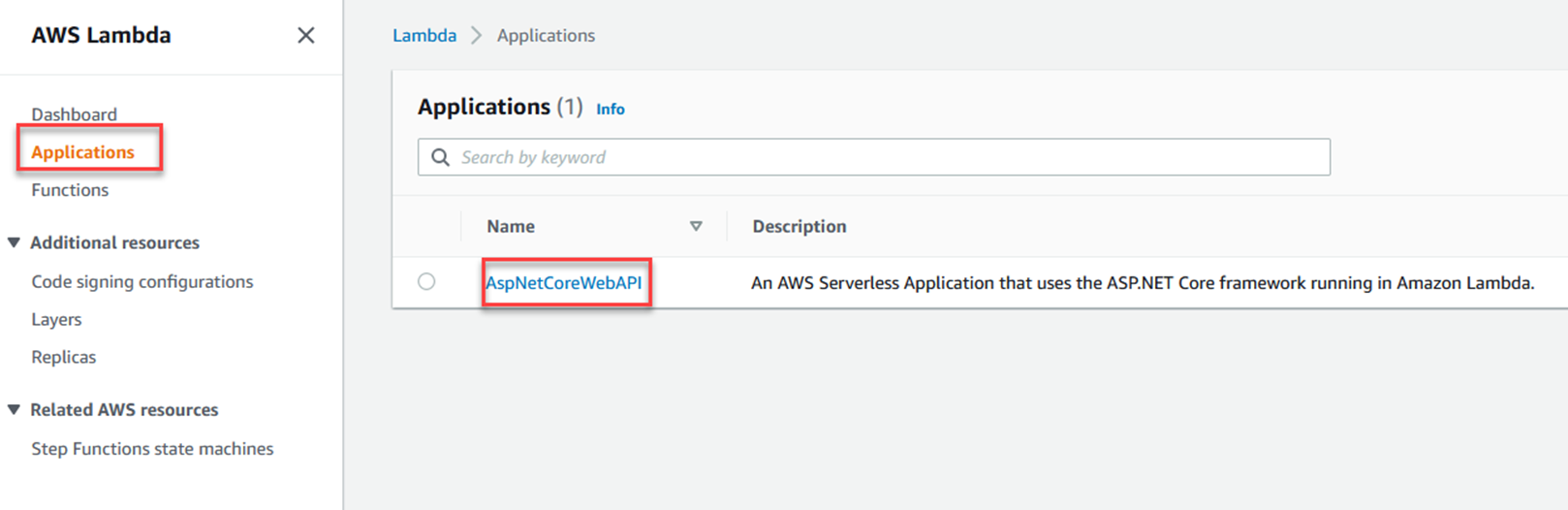

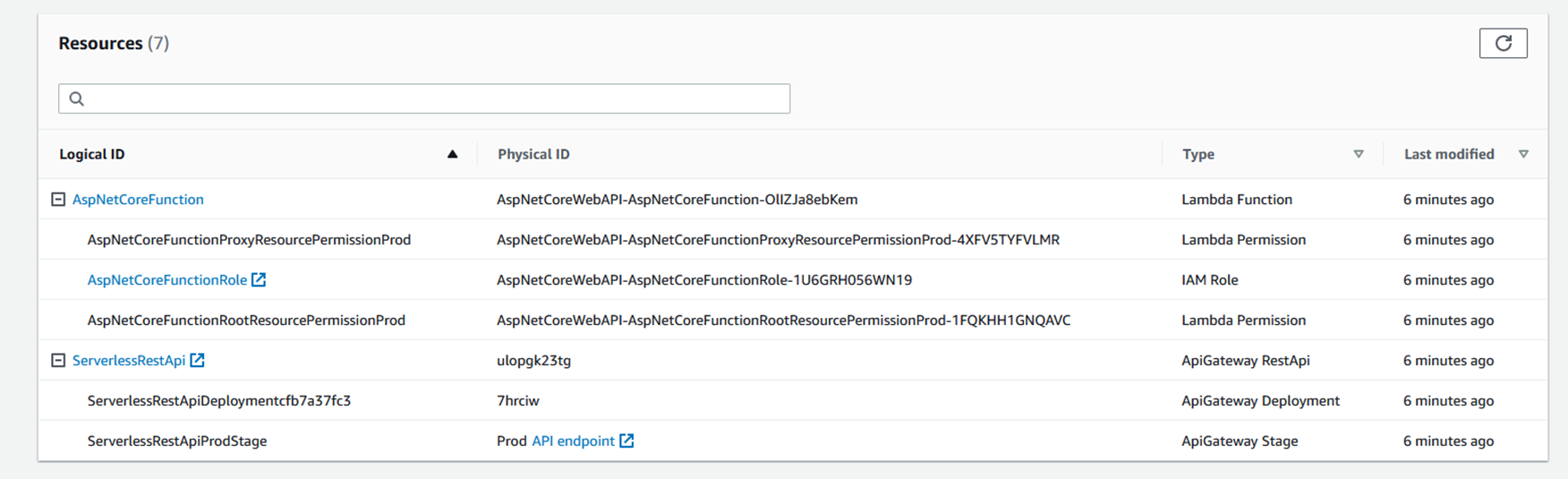

If you are interested, there are a few ways to review the resources that were created.

To review the AWS resources created, you can use:

aws cloudformation describe-stack-resources --stack-name AspNetCoreWebAPI

If you want a more succinct output, use:

aws cloudformation describe-stack-resources --stack-name AspNetCoreWebAPI --query 'StackResources[].[{ResourceType:ResourceType, LogicalResourceId:LogicalResourceId, PhysicalResourceId:PhysicalResourceId}]'

With these examples, you can create and deploy your own Lambda functions, and you might have picked up a little about how .NET Lambda functions are invoked. That is the subject of a later section in this module.

Function URLs – an alternative to API Gateway

If all you need is a Lambda function that responds to a simple HTTP request, you should consider using Lambda function URLs.

They allow you to assign an HTTPS endpoint to a Lambda function. You then invoke the Lambda function by making a request to the HTTPS endpoint. For more information, see this blog post, and these docs.
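A function URL sends your function the same event shape that an API Gateway HTTP API uses with payload format version 2.0, so a .NET handler can accept the APIGatewayHttpApiV2ProxyRequest type from the Amazon.Lambda.APIGatewayEvents package. The sketch below assumes that package, plus Amazon.Lambda.Core and Amazon.Lambda.Serialization.SystemTextJson, are referenced; the namespace HelloFunctionUrl and the `name` query string parameter are hypothetical.

```csharp
using System.Collections.Generic;
using Amazon.Lambda.APIGatewayEvents;
using Amazon.Lambda.Core;

// Registers the JSON serializer, as in the "lambda.*" templates.
[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

namespace HelloFunctionUrl // hypothetical project namespace
{
    public class Function
    {
        // A function URL delivers the HTTP request as an HTTP API payload 2.0 event.
        public APIGatewayHttpApiV2ProxyResponse FunctionHandler(
            APIGatewayHttpApiV2ProxyRequest request, ILambdaContext context)
        {
            // QueryStringParameters is null when the request has no query string.
            string name = "world";
            if (request.QueryStringParameters != null &&
                request.QueryStringParameters.TryGetValue("name", out var value))
            {
                name = value;
            }

            string method = request.RequestContext?.Http?.Method ?? "UNKNOWN";

            // The function URL turns this object into the HTTP response.
            return new APIGatewayHttpApiV2ProxyResponse
            {
                StatusCode = 200,
                Headers = new Dictionary<string, string> { ["Content-Type"] = "text/plain" },
                Body = $"Hello, {name}! ({method} {request.RawPath})"
            };
        }
    }
}
```

After deploying, you could attach a URL with the AWS CLI command aws lambda create-function-url-config, passing --function-name and --auth-type AWS_IAM (or NONE for a public endpoint, which also needs a resource-based policy that allows lambda:InvokeFunctionUrl).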

Cleaning up the resources you created

dotnet lambda delete-function HelloEmptyFunction

dotnet lambda delete-function HelloPersonFunction

Note that the above commands do not delete the role you created.

To delete the Lambda function that hosted the Web API application, and all associated resources, run:

dotnet lambda delete-serverless AspNetCoreWebAPI

How a .NET Lambda Function is invoked

As you can see from the examples above, you can invoke a .NET Lambda function with a simple string, a JSON object, or an HTTP request. Lambda functions can also be invoked by other services such as S3 (when a file changes), Kinesis (when an event arrives), DynamoDB (when a change occurs on a table), SQS (when a message arrives), Step Functions, etc.

How does a Lambda function handle all these different ways of being invoked?

Under the hood, all of these invocations work the same way: the Lambda service calls the function handler and passes it JSON input. If you look at the aws-lambda-tools-defaults.json file, you can see the "function-handler" setting. For .NET Lambda functions, the handler takes the form "AssemblyName::Namespace.ClassName::MethodName".
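To see how the three parts of the handler string map onto your code, the sketch below builds that string with reflection, using only the base class library. The namespace HelloEmptyFunction and the HandlerString helper are hypothetical, and the handler is simplified to take only a string (the ILambdaContext parameter is optional).

```csharp
namespace HelloEmptyFunction // hypothetical; matches a project named HelloEmptyFunction
{
    public class Function
    {
        // The method Lambda calls: the "MethodName" part of the handler string.
        public string FunctionHandler(string input) => input.ToUpper();

        // Hypothetical helper: builds "AssemblyName::Namespace.ClassName::MethodName" for this class.
        public static string HandlerString() =>
            $"{typeof(Function).Assembly.GetName().Name}::{typeof(Function).FullName}::{nameof(FunctionHandler)}";
    }
}
```

For a project whose assembly is named HelloEmptyFunction, HandlerString() returns HelloEmptyFunction::HelloEmptyFunction.Function::FunctionHandler, which is the shape of value you would expect under "function-handler".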

A .NET function handler can also accept its input as a raw stream instead of a deserialized object, but this is a less common scenario; see the page on handling streams for more.
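A minimal sketch of a stream-based handler, using only the base class library: the handler receives the raw payload bytes and returns raw bytes, so no deserialization into a .NET type takes place. The namespace StreamDemo is hypothetical, ILambdaContext is omitted for brevity, and you should check the page on handling streams for the serializer requirements of your own project.

```csharp
using System.IO;
using System.Text;

namespace StreamDemo // hypothetical
{
    public class Function
    {
        // Receives the request payload as raw bytes; nothing is deserialized for you.
        public Stream FunctionHandler(Stream input)
        {
            string payload;
            // leaveOpen: true so the reader does not dispose the stream it was handed.
            using (var reader = new StreamReader(input, Encoding.UTF8, true, 1024, leaveOpen: true))
            {
                payload = reader.ReadToEnd();
            }

            // Return the payload upper-cased; the bytes are sent back to the caller as-is.
            return new MemoryStream(Encoding.UTF8.GetBytes(payload.ToUpperInvariant()));
        }
    }
}
```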

Each Lambda function has a single function handler.

Along with the JSON input, the Lambda function handler can also take an optional ILambdaContext parameter. This gives you access to information about the current invocation, such as the time it has left to complete, and the function's name and version. You can also write log messages via the ILambdaContext object's Logger property.
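A sketch of a handler that reads a few ILambdaContext properties and logs through context.Logger. It assumes the Amazon.Lambda.Core and Amazon.Lambda.Serialization.SystemTextJson packages are referenced; the namespace ContextDemo and the one-second threshold are arbitrary choices for illustration.

```csharp
using System;
using Amazon.Lambda.Core;

[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

namespace ContextDemo // hypothetical
{
    public class Function
    {
        public string FunctionHandler(string input, ILambdaContext context)
        {
            // Information about the function and the current invocation.
            context.Logger.LogLine($"Function {context.FunctionName}, version {context.FunctionVersion}");
            context.Logger.LogLine($"Request ID {context.AwsRequestId}, memory limit {context.MemoryLimitInMB} MB");

            // Time remaining before this invocation reaches the function's configured timeout.
            if (context.RemainingTime < TimeSpan.FromSeconds(1))
            {
                context.Logger.LogLine("Less than one second left; skipping optional work.");
            }

            return input?.ToUpper() ?? string.Empty;
        }
    }
}
```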

All events are JSON

What makes it easy for AWS services to invoke a .NET Lambda function is that these services emit JSON and, as discussed above, a .NET Lambda function accepts JSON input. The events raised by different services produce differently shaped JSON, but the AWS Lambda event NuGet packages include the object types needed to deserialize that JSON into objects you can work with.

See https://www.nuget.org/packages?packagetype=&sortby=relevance&q=Amazon.Lambda&prerel=True for a list of the available Lambda packages; you will have to search within those results for the event type you are interested in.

For example, if you wanted to trigger a Lambda function in response to a file change on an S3 bucket, you need to create a Lambda function that accepts an object of type S3Event. You then add the Amazon.Lambda.S3Events package to your project. Then change the function handler method to:

public async Task<string> FunctionHandler(S3Event s3Event, ILambdaContext context)
{
    ...
}

That is all you need to handle the S3 event. You can programmatically examine the event to see what action was performed on the file, which bucket it was in, and so on. The Amazon.Lambda.S3Events package lets you work with the event, not with S3 itself; if you want to interact with the S3 service, you need to add the AWSSDK.S3 NuGet package to your project too. A later module covers AWS services invoking Lambda functions in more detail.
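Filling in the handler body, a sketch like the following walks the records in the event and logs each bucket and object key. It assumes the Amazon.Lambda.S3Events, Amazon.Lambda.Core and Amazon.Lambda.Serialization.SystemTextJson packages are referenced; the namespace S3EventDemo and the choice to return a comma-separated list of keys are illustrative only. It is synchronous because it awaits nothing; you would make it async Task<string> once it calls S3 through AWSSDK.S3.

```csharp
using System.Collections.Generic;
using Amazon.Lambda.Core;
using Amazon.Lambda.S3Events;

[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

namespace S3EventDemo // hypothetical
{
    public class Function
    {
        // Lambda deserializes the S3 notification JSON into an S3Event for you.
        public string FunctionHandler(S3Event s3Event, ILambdaContext context)
        {
            var keys = new List<string>();

            // One notification can carry several records; guard against a payload without any.
            foreach (var record in s3Event.Records ?? new List<S3Event.S3EventNotificationRecord>())
            {
                string bucket = record.S3.Bucket.Name;
                string key = record.S3.Object.Key;
                context.Logger.LogLine($"{record.EventName} on s3://{bucket}/{key}");
                keys.Add(key);
            }

            return string.Join(",", keys);
        }
    }
}
```

Keep in mind that object keys in S3 event notifications are URL-encoded, so decode them (for example with System.Net.WebUtility.UrlDecode) before passing them to the S3 API.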

The same pattern applies to other event types: add the NuGet package, change the parameter type of the function handler, and then work with the event object.

Here are some of the common packages you might use to handle events from other services:

https://www.nuget.org/packages/Amazon.Lambda.SNSEvents

https://www.nuget.org/packages/Amazon.Lambda.DynamoDBEvents

https://www.nuget.org/packages/Amazon.Lambda.CloudWatchEvents

https://www.nuget.org/packages/Amazon.Lambda.KinesisEvents

https://www.nuget.org/packages/Amazon.Lambda.APIGatewayEvents

You are not limited to AWS-defined event types when invoking a Lambda function. You can define your own event types; remember, a Lambda function can accept any JSON you send it.
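As an illustration, the hypothetical OrderPlaced type below is not an AWS event; it is a plain class whose properties match the JSON a caller sends. The sketch assumes Amazon.Lambda.Core and Amazon.Lambda.Serialization.SystemTextJson are referenced, and the namespace CustomEventDemo is hypothetical.

```csharp
using Amazon.Lambda.Core;

[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

namespace CustomEventDemo // hypothetical
{
    // Hypothetical event shape: any JSON object with matching property names will do.
    public class OrderPlaced
    {
        public string OrderId { get; set; } = "";
        public decimal Amount { get; set; }
    }

    public class Function
    {
        // The serializer turns the incoming JSON into an OrderPlaced instance.
        public string FunctionHandler(OrderPlaced order, ILambdaContext context)
        {
            context.Logger.LogLine($"Order {order.OrderId} for {order.Amount}");
            return $"Processed {order.OrderId}";
        }
    }
}
```

You could then invoke the function with a payload such as {"OrderId":"42","Amount":9.99}.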

How serialization happens

In the case of the "lambda.*" templates, there is an assembly attribute near the top of the Function.cs file that registers the serializer used to deserialize the incoming event into the .NET type your function handler accepts. In the .csproj file, there is a reference to the Amazon.Lambda.Serialization.SystemTextJson package, which provides that serializer.

[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]
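Conceptually, the registered serializer handles the JSON on both sides of your handler. The sketch below imitates those steps with System.Text.Json directly, so it runs without any Lambda packages; FakeRuntime, Person, and the namespace SerializationSketch are hypothetical names, and this is not how the real runtime is implemented internally.

```csharp
using System.Text.Json;

namespace SerializationSketch // hypothetical
{
    // Hypothetical input type; property names match the incoming JSON.
    public class Person
    {
        public string FirstName { get; set; } = "";
        public string LastName { get; set; } = "";
    }

    public class Function
    {
        public string FunctionHandler(Person person) => $"Hello, {person.FirstName} {person.LastName}";
    }

    // Imitates the work done around your handler for each invocation.
    public static class FakeRuntime
    {
        // One handler instance, reused across invocations.
        private static readonly Function Handler = new Function();

        public static string Invoke(string requestJson)
        {
            // 1. Deserialize the incoming JSON into the handler's parameter type.
            Person input = JsonSerializer.Deserialize<Person>(requestJson)!;

            // 2. Call the function handler.
            string result = Handler.FunctionHandler(input);

            // 3. Serialize the return value to JSON for the caller.
            return JsonSerializer.Serialize(result);
        }
    }
}
```

Calling FakeRuntime.Invoke("{\"FirstName\":\"Ada\",\"LastName\":\"Lovelace\"}") returns the JSON string "Hello, Ada Lovelace", quotes included.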

For the "serverless.*" templates, it works a little differently.

The function handler is specified in the serverless.template file. If you are deploying a serverless.AspNetCoreWebAPI application, look for the value under Resources.AspNetCoreFunction.Properties.Handler. For this type of project, the handler takes the form Assembly::Namespace.LambdaEntryPoint::FunctionHandlerAsync.

The LambdaEntryPoint class will be in your project, and it inherits from a class that has a FunctionHandlerAsync method.

The function handler can be set to handle one of four event types: an API Gateway REST API, an API Gateway HTTP API payload version 1.0, an API Gateway HTTP API payload version 2.0, or an Application Load Balancer.

By changing which class the LambdaEntryPoint inherits from, you can change the type of JSON event the function handler handles.
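A sketch of a LambdaEntryPoint along the lines of the serverless.AspNetCoreWebAPI template, assuming the Amazon.Lambda.AspNetCoreServer package and the project's existing Startup class. The namespace AspNetCoreWebAPI is assumed from the stack name used earlier; the comment lists the base class to choose for each event source.

```csharp
using Microsoft.AspNetCore.Hosting;

namespace AspNetCoreWebAPI // assumed project namespace
{
    // Choose ONE base class to match the event source in front of the function:
    //   Amazon.Lambda.AspNetCoreServer.APIGatewayProxyFunction          - REST API, or HTTP API payload 1.0
    //   Amazon.Lambda.AspNetCoreServer.APIGatewayHttpApiV2ProxyFunction - HTTP API payload 2.0
    //   Amazon.Lambda.AspNetCoreServer.ApplicationLoadBalancerFunction  - Application Load Balancer
    public class LambdaEntryPoint : Amazon.Lambda.AspNetCoreServer.APIGatewayProxyFunction
    {
        // Configures the ASP.NET Core host when the entry point is initialized.
        protected override void Init(IWebHostBuilder builder)
        {
            // Startup is the project's existing ASP.NET Core startup class.
            builder.UseStartup<Startup>();
        }
    }
}
```

FunctionHandlerAsync itself comes from the base class, which is why the handler string points at LambdaEntryPoint even though the class does not declare that method.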

Although it looks as though the Lambda function is responding directly to the HTTP request you send, that is not the case. In reality, your HTTP request is handled by a gateway or load balancer, which creates a JSON event and sends it to your function handler. This JSON event contains the data from the original HTTP request, down to the source IP address and the request headers.

Concurrency

There are two kinds of concurrency to consider when working with Lambda functions, reserved concurrency and provisioned concurrency.

By default, an AWS account has a limit on the number of concurrent Lambda executions in each Region. As of this writing, that limit is 1,000.

When you specify reserved concurrency for a function, you guarantee that the function can reach the specified number of simultaneous executions. For example, if your function has a reserved concurrency of 200, it will always be able to reach 200 simultaneous executions, and it also cannot exceed 200. Note that this leaves 800 concurrent executions for all other functions in that Region (1,000 - 200 = 800).

When you configure provisioned concurrency, Lambda initializes the specified number of execution environments ahead of time. Once they are initialized, your function can respond to requests immediately, avoiding "cold starts". However, there is an additional charge for provisioned concurrency.
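Both settings can also be applied from code. A sketch using the AWS SDK for .NET, assuming the AWSSDK.Lambda package and configured credentials; the function name HelloEmptyFunction comes from the earlier examples, while the alias "live" and the value 10 are hypothetical.

```csharp
using System.Threading.Tasks;
using Amazon.Lambda;
using Amazon.Lambda.Model;

namespace ConcurrencyDemo // hypothetical
{
    public static class ConcurrencySetup
    {
        public static async Task ConfigureAsync(IAmazonLambda lambda)
        {
            // Reserve 200 concurrent executions; this also caps the function at 200.
            await lambda.PutFunctionConcurrencyAsync(new PutFunctionConcurrencyRequest
            {
                FunctionName = "HelloEmptyFunction",
                ReservedConcurrentExecutions = 200
            });

            // Keep 10 execution environments initialized.
            // Provisioned concurrency applies to a published version or alias, not $LATEST.
            await lambda.PutProvisionedConcurrencyConfigAsync(new PutProvisionedConcurrencyConfigRequest
            {
                FunctionName = "HelloEmptyFunction",
                Qualifier = "live", // hypothetical alias; it must already exist
                ProvisionedConcurrentExecutions = 10
            });
        }
    }
}
```

You might call it with await ConcurrencySetup.ConfigureAsync(new AmazonLambdaClient()); from a small console app or deployment script.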

For more information, see managing Lambda reserved concurrency and managing Lambda provisioned concurrency.

Cold Starts and Warm Starts

Before your Lambda function can be invoked, the Lambda service must initialize an execution environment on your behalf. Your code is downloaded from an AWS-managed S3 bucket (for functions that use managed runtimes or custom runtimes) or from Amazon Elastic Container Registry (for functions that use container images).

The first time your function runs, your code must be JIT-compiled, and your initialization code (for example, your function class's constructor) runs. Both add to the cold start time.

If your function is being invoked regularly, it will stay "warm", i.e. the execution environment will be maintained. Subsequent invocations of the function will not suffer from the cold start time. "Warm starts" are significantly faster than "cold starts".
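A small sketch of why warm starts are cheaper, using only the base class library: the managed .NET runtime creates your handler class once per execution environment, so constructor work and field initialization are paid for during the cold start and reused afterwards. The namespace WarmStartDemo and the invocation counter are hypothetical, and the LambdaSerializer assembly attribute from the templates is omitted.

```csharp
namespace WarmStartDemo // hypothetical
{
    public class Function
    {
        private int _invocations;

        // Runs once per execution environment, as part of the cold start.
        public Function()
        {
            // Put expensive, reusable setup here: SDK clients, configuration, caches.
        }

        // Runs on every invocation, cold or warm.
        public string FunctionHandler(string input)
        {
            _invocations++;
            string kind = _invocations == 1 ? "cold" : "warm";
            return $"{kind} start #{_invocations}: {input}";
        }
    }
}
```

Calling FunctionHandler twice on the same instance returns "cold start #1: a" and then "warm start #2: b", mirroring a first invocation followed by a warm one in the same environment.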

If your function is not invoked for a period (the exact time is not specified by the Lambda service), the execution environment is removed. The next invocation of the function will again result in a cold start.

If you upload a new version of the function code, the next invocation of the function will result in a cold start.

The three options for running .NET on Lambda (managed runtime, custom runtime, and container image) each have a different cold start profile. Container images are the slowest, custom runtimes are next, and managed runtimes are the fastest. If possible, you should opt for the managed runtime when running .NET Lambda functions.

Cold starts have been found to happen more often in test or development environments than in production environments. In an AWS analysis, cold starts occur in less than 1% of invocations.

If you have a Lambda function in production that is used infrequently, but needs to respond quickly to a request, and you want to avoid cold starts, you can use provisioned concurrency, or use a mechanism to "ping" your function frequently to keep it warm.

If you are interested in more information on optimizing your Lambda function, you can read about cold starts, warm starts, and provisioned concurrency in the AWS Lambda Developer Guide, or check out the Operating Lambda: Performance optimization blog series by James Beswick (part 1, part 2, and part 3).

Trimming and ReadyToRun for .NET versions earlier than .NET 7

If you have chosen to use a Lambda custom runtime with a version of .NET earlier than .NET 7, there are a couple of .NET features you can use to reduce cold start times.

PublishTrimmed reduces the overall size of the package you deploy by removing code your application does not use.

PublishReadyToRun compiles your code ahead of time, thereby reducing the amount of just-in-time compilation required at startup. However, it increases the size of the package you deploy.

For optimal performance, you will have to test your function when using these options.

PublishTrimmed and PublishReadyToRun can be turned on in your .csproj file, inside a PropertyGroup element:

<PropertyGroup>
  <PublishTrimmed>true</PublishTrimmed>
  <PublishReadyToRun>true</PublishReadyToRun>
</PropertyGroup>

Conclusion

Native Ahead-of-Time Compilation for .NET 7

dotnet new -i "Amazon.Lambda.Templates::*"

dotnet tool update -g Amazon.Lambda.Tools

Knowledge Check

You’ve now completed Module 3, .NET on AWS Lambda. The following test will allow you to check what you’ve learned so far.

1. What versions of .NET managed runtimes does the Lambda service offer? (select two)

b. .NET 6

c. .NET 7

d. .NET Core 3.1

e. .NET Framework 4.8

2. What does cold start refer to? (select one)

b. A Lambda function that uses AWS S3 Glacier storage.

c. The time it takes to deploy your code to the Lambda service.

d. The time it takes to update a function.

3. How do you use the AWS .NET SDK with your .NET Lambda functions?

a. Add a reference to the SDK package in your project file.

b. You don’t need to; it’s included in the Lambda function templates

c. You don’t need to; the toolkit for the IDEs includes it

d. Add the SDK to the Lambda service via the AWS Console

4. When you create a new lambda.EmptyFunction project, what is the name of the file that specifies the configuration for the function?

b. lambda.csproj

c. aws-lambda-tools-defaults.json

5. Which of the following are ways to invoke a Lambda function?

b. HTTPS requests

c. Calls from other AWS services

d. All of the above

Answers: 1-bd, 2-a, 3-a, 4-c, 5-d