Why use Amazon SageMaker with MLflow?

Amazon SageMaker offers a managed MLflow capability for machine learning (ML) and generative AI experimentation. This capability makes it easy for data scientists to use MLflow on SageMaker for model training, registration, and deployment. Admins can quickly set up secure and scalable MLflow environments on AWS. Data scientists and ML developers can efficiently track ML experiments and find the right model for a business problem.

Benefits of Amazon SageMaker with MLflow

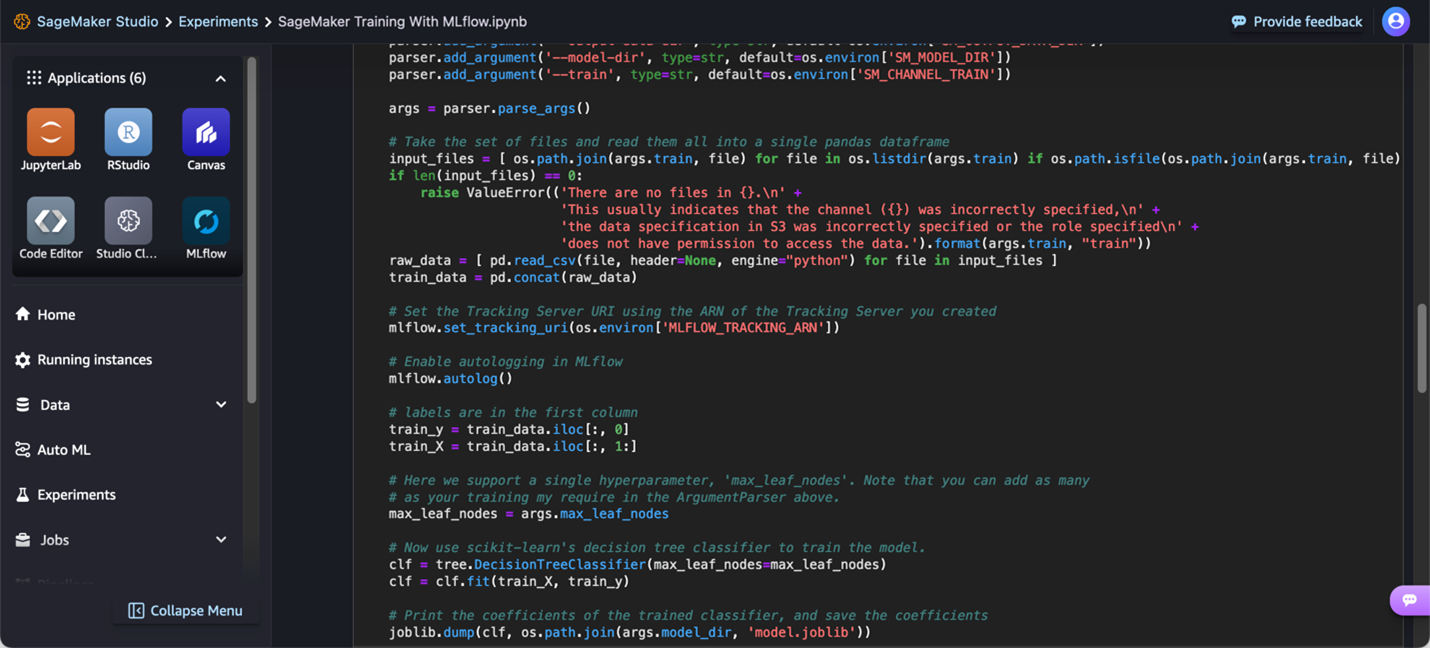

Track experiments from anywhere

ML experiments are performed in diverse environments, including local notebooks, IDEs, cloud-based training code, or managed IDEs in Amazon SageMaker Studio. With SageMaker and MLflow, you can use your preferred environment to train models, track your experiments in MLflow, and launch the MLflow UI directly or through SageMaker Studio for analysis.
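As a rough illustration of tracking from any environment, the sketch below points the MLflow client at a SageMaker-managed Tracking Server and logs one run. It assumes the `mlflow` and `sagemaker-mlflow` packages are installed and AWS credentials are configured. The function names, the experiment name, and the ARN pattern used for validation are illustrative assumptions, not part of the service's API.

```python
import re

# Assumed shape of a SageMaker MLflow Tracking Server ARN; adjust if yours differs.
_TRACKING_SERVER_ARN = re.compile(
    r"^arn:aws[a-z-]*:sagemaker:[a-z0-9-]+:\d{12}:mlflow-tracking-server/[A-Za-z0-9-]+$"
)


def is_tracking_server_arn(value: str) -> bool:
    """Return True if the string looks like a Tracking Server ARN."""
    return bool(_TRACKING_SERVER_ARN.match(value))


def log_training_run(tracking_server_arn, params, metrics,
                     experiment_name="demo-experiment"):
    """Log one run's parameters and metrics to the given Tracking Server.

    `experiment_name` is a hypothetical default chosen for this sketch.
    """
    if not is_tracking_server_arn(tracking_server_arn):
        raise ValueError(f"not a tracking server ARN: {tracking_server_arn!r}")

    # Imported lazily so the helper above works without MLflow installed.
    import mlflow

    mlflow.set_tracking_uri(tracking_server_arn)
    mlflow.set_experiment(experiment_name)
    with mlflow.start_run() as run:
        mlflow.log_params(params)    # dict of hyperparameters
        mlflow.log_metrics(metrics)  # dict of metric name -> float
        return run.info.run_id
```

The same call works from a local notebook, an IDE, or a training script, because only the tracking URI ties the code to a particular server.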

Collaborate on model experimentation

Effective team collaboration is essential for successful data science projects. From SageMaker Studio, you can manage and access MLflow Tracking Servers and experiments, so team members can share information and keep experiment results consistent.

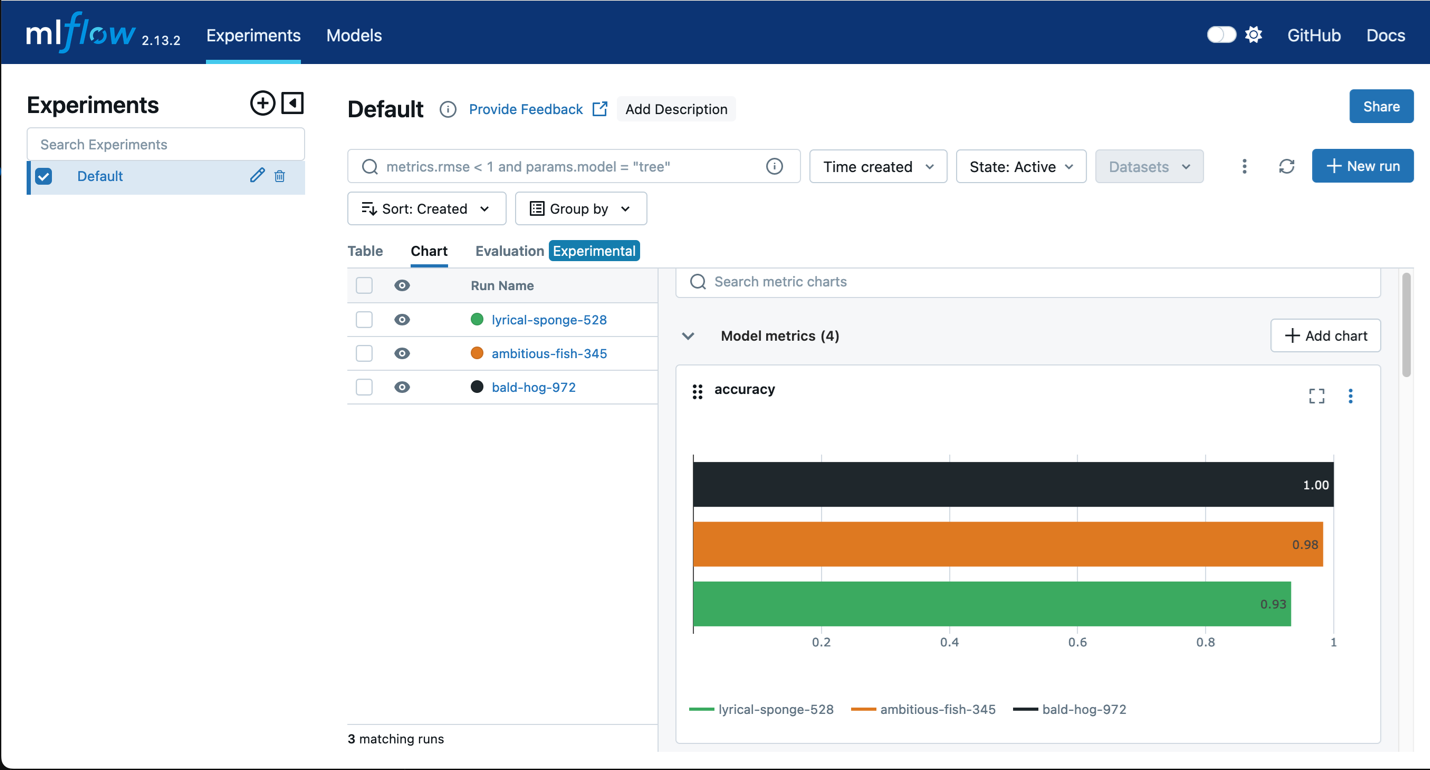

Evaluate experiments

Identifying the best model from multiple iterations requires analysis and comparison of model performance. MLflow offers visualizations such as scatter plots, bar charts, and histograms to compare training iterations. Additionally, MLflow enables the evaluation of models for bias and fairness.
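To make the comparison step concrete, here is a minimal sketch of picking the best iteration by a single metric. It works on plain dictionaries rather than on MLflow objects; the record layout (`run_id`, `metrics`) and the function name `best_run` are assumptions made for this example. With MLflow itself, `mlflow.search_runs` with an `order_by` clause such as `"metrics.accuracy DESC"` serves a similar purpose.

```python
from typing import Iterable, Mapping, Optional


def best_run(runs: Iterable[Mapping], metric: str,
             higher_is_better: bool = True) -> Optional[Mapping]:
    """Return the run with the best value for `metric`, or None if no run logged it."""
    # Skip runs that never recorded the metric instead of failing on them.
    scored = [r for r in runs if metric in r["metrics"]]
    if not scored:
        return None
    pick = max if higher_is_better else min
    return pick(scored, key=lambda r: r["metrics"][metric])


# Hypothetical results from three training iterations.
runs = [
    {"run_id": "run-a", "metrics": {"accuracy": 0.91, "loss": 0.30}},
    {"run_id": "run-b", "metrics": {"accuracy": 0.94, "loss": 0.22}},
    {"run_id": "run-c", "metrics": {"loss": 0.25}},
]

top_by_accuracy = best_run(runs, "accuracy")
top_by_loss = best_run(runs, "loss", higher_is_better=False)
```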

Centrally manage MLflow models

Multiple teams often use MLflow to manage their experiments, with only some models becoming candidates for production. Organizations need an easy way to keep track of all candidate models to make informed decisions about which models proceed to production. MLflow integrates with SageMaker Model Registry, so models registered in MLflow automatically appear in SageMaker Model Registry, complete with a SageMaker Model Card for governance. This integration lets data scientists and ML engineers use distinct tools for their respective tasks: MLflow for experimentation and SageMaker Model Registry for managing the production lifecycle with comprehensive model lineage.
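Registering a candidate on the MLflow side might look like the sketch below. It assumes the tracking URI has already been set to your Tracking Server and that the model was logged under the artifact path `model`; the helper names and that default path are assumptions for illustration. `mlflow.register_model` is the standard MLflow call for creating a registered model version.

```python
def model_uri_for_run(run_id: str, artifact_path: str = "model") -> str:
    """Build a `runs:/<run_id>/<artifact_path>` URI for a logged model."""
    if not run_id:
        raise ValueError("run_id must be a non-empty string")
    # Strip stray slashes so the URI has exactly one separator per segment.
    return f"runs:/{run_id}/{artifact_path.strip('/')}"


def register_candidate(run_id: str, registered_name: str,
                       artifact_path: str = "model"):
    """Register the model logged by `run_id` under `registered_name`."""
    # Imported lazily so the URI helper works without MLflow installed.
    import mlflow

    return mlflow.register_model(
        model_uri=model_uri_for_run(run_id, artifact_path),
        name=registered_name,
    )
```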

Deploy MLflow Models to SageMaker endpoints

You can deploy models from MLflow to SageMaker endpoints without building custom containers for model storage. This integration lets customers use SageMaker’s optimized inference containers while retaining the user-friendly experience of MLflow for logging and registering models.