Machine learning on AWS

AWS helps you innovate with machine learning (ML) at scale through the most comprehensive set of ML services, infrastructure, and deployment resources. From the world’s largest enterprises to emerging startups, more than 100,000 customers have chosen AWS machine learning services to solve business problems and drive innovation. With Amazon SageMaker, you can build, train, and deploy ML and foundation models at scale, using infrastructure and purpose-built tools for each step of the ML lifecycle.

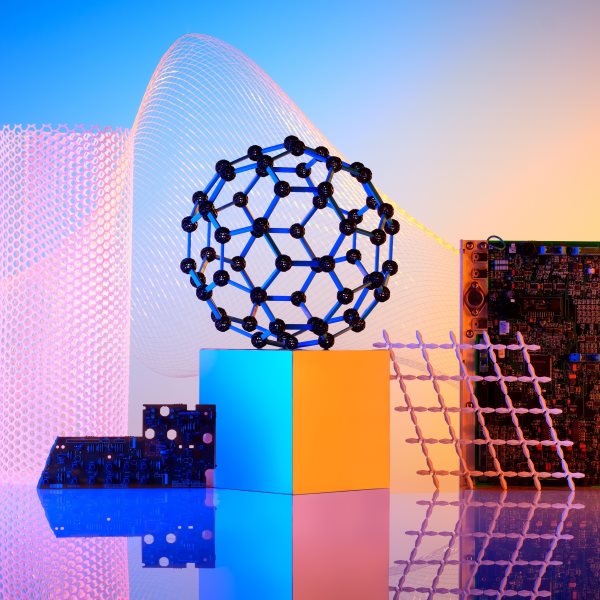

Machine learning tools to build at scale

Infrastructure that pushes the envelope to deliver the highest performance while lowering costs

Building AI responsibly at AWS

The rapid growth of generative AI brings promising new innovation and, at the same time, raises new challenges. At AWS, we are committed to developing AI responsibly. We take a people-centric approach that prioritizes education, science, and our customers, and we integrate responsible AI across the end-to-end AI lifecycle with tools such as Guardrails for Amazon Bedrock, Amazon SageMaker Clarify, and more.